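This post walks through the DenseNet model, one of the important architectures worth mastering. The first thing to understand is how information flows through the network: its design broke with the way earlier networks were built and was a genuinely novel idea.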

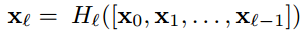

As the figure shows, the input to layer l depends not only on the output of layer l-1 but also on the outputs of all preceding layers.

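If that is not clear from the figure alone, here is a closer look. The colored blocks in the figure (red, green, purple, yellow, orange) stand for different feature maps, with red being the input; call them x0, x1, x2, x3, x4. The input image x0 passes through a convolution to produce x1, and x0 itself is also passed forward unchanged. The layer that produces x2 takes both x0 and x1 as input, and the layer that produces x3 takes x0, x1, and x2. The connecting lines in the figure are the key: every line arriving at a layer is one of the feature maps merged into that layer's input. It is tempting to read this merging as addition, but the feature maps are actually concatenated along the channel dimension, which is what torch.cat does in the code below.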

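For example, take a growth rate (growth_rate) of 16 and a 3-channel input. The (input channels, output channels) pairs of successive layers are (3, 16), (19, 16), (3 + 16 + 16 = 35, 16), and so on. This is the core of DenseNet: each layer's input channel count keeps growing, while its output channel count stays fixed at growth_rate.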
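The channel arithmetic above can be checked with a few lines of plain Python. This is only a minimal sketch: the helper name dense_block_channels is hypothetical and does not appear in the original code.

```python
def dense_block_channels(in_channels, growth_rate, num_layers):
    """Return the (input, output) channel pair of each layer in a dense block."""
    pairs = []
    channels = in_channels
    for _ in range(num_layers):
        # Every layer emits growth_rate channels ...
        pairs.append((channels, growth_rate))
        # ... which are concatenated onto the input of the next layer.
        channels += growth_rate
    return pairs


pairs = dense_block_channels(in_channels=3, growth_rate=16, num_layers=3)
print(pairs)  # [(3, 16), (19, 16), (35, 16)]
```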

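Next we need a rough picture of DenseNet's overall structure. The figure contains blocks that repeat, so we can wrap them in reusable functions and classes.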

    def conv_block(in_channel, out_channel):
        # BN -> ReLU -> 3x3 conv; padding=1 keeps the height and width unchanged
        layer = nn.Sequential(
            nn.BatchNorm2d(in_channel),
            nn.ReLU(),
            nn.Conv2d(in_channel, out_channel, kernel_size=3, padding=1, bias=False)
        )
        return layer

This function exists so that the BN-ReLU-Conv unit can be reused many times.

    class dense_block(nn.Module):
        def __init__(self, in_channel, growth_rate, num_layers):
            super(dense_block, self).__init__()
            block = []
            channel = in_channel
            for i in range(num_layers):
                # each layer sees all channels accumulated so far
                block.append(conv_block(channel, growth_rate))
                channel += growth_rate
            self.net = nn.Sequential(*block)

        def forward(self, x):
            for layer in self.net:
                out = layer(x)
                # concatenate the new feature maps with the running input (channel dim)
                x = torch.cat((out, x), dim=1)
            return x

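The purpose of this class is to build the convolution layers repeatedly with a for loop. in_channel is the number of input channels, and growth_rate is the number of channels each layer adds. Why is the input channel count not fixed? Because each pass through the loop runs block.append(conv_block(channel, growth_rate)) followed by channel += growth_rate, so every new layer expects growth_rate more input channels than the previous one. nn.Sequential(*block) unpacks the block list and wraps its layers in a single nn.Sequential container, which registers them as submodules.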

    def transition(in_channel, out_channel):
        # BN -> ReLU -> 1x1 conv (changes channel count) -> 2x2 average pooling, stride 2
        trans_layer = nn.Sequential(
            nn.BatchNorm2d(in_channel),
            nn.ReLU(),
            nn.Conv2d(in_channel, out_channel, 1),
            nn.AvgPool2d(2, 2)
        )
        return trans_layer

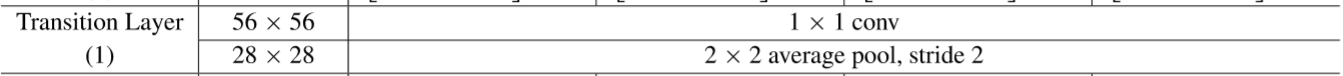

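This function corresponds to the block shown in the image above. Its parameters are all fixed, so it is easy to follow: a 1x1 convolution reduces the number of channels, and an average pooling layer with stride 2 halves the height and width, which lowers the model's complexity.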
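To make the effect of the transition layer concrete, here is a small sketch that predicts its output shape without running PyTorch. The function name transition_output_shape is hypothetical, and it assumes the output channel count is half the input, which is how transition is called later in this post.

```python
def transition_output_shape(channels, height, width):
    """Predict (channels, height, width) after transition(channels, channels // 2)."""
    # The 1x1 convolution only changes the channel count (assumed halved here).
    out_channels = channels // 2
    # AvgPool2d(kernel_size=2, stride=2) with no padding: floor((n - 2) / 2) + 1.
    out_height = (height - 2) // 2 + 1
    out_width = (width - 2) // 2 + 1
    return out_channels, out_height, out_width


print(transition_output_shape(256, 56, 56))  # (128, 28, 28)
```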

    # helper methods of the densenet class
    def _make_dense_block(self, channels, growth_rate, num):
        block = []
        block.append(dense_block(channels, growth_rate, num))
        channels += num * growth_rate  # local bookkeeping only; not returned to the caller
        return nn.Sequential(*block)

    def _make_transition_layer(self, channels):
        block = []
        block.append(transition(channels, channels // 2))
        return nn.Sequential(*block)

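Because the figure contains many dense_block and transition_layer blocks, we wrap their construction in helper methods. The channels // 2 halves the channel count to reduce complexity.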

    class densenet(nn.Module):
        def __init__(self, in_channel, num_classes, growth_rate=32, block_layers=[6, 12, 24, 16]):
            super(densenet, self).__init__()
            # stem: 7x7 conv with stride 2, then 3x3 max pooling with stride 2
            self.block1 = nn.Sequential(
                nn.Conv2d(in_channel, 64, 7, 2, 3),
                nn.BatchNorm2d(64),
                nn.ReLU(True),
                nn.MaxPool2d(3, 2, padding=1)
            )
            # the channel numbers below are worked out by hand for the default arguments
            self.DB1 = self._make_dense_block(64, growth_rate, num=block_layers[0])
            self.TL1 = self._make_transition_layer(256)
            self.DB2 = self._make_dense_block(128, growth_rate, num=block_layers[1])
            self.TL2 = self._make_transition_layer(512)
            self.DB3 = self._make_dense_block(256, growth_rate, num=block_layers[2])
            self.TL3 = self._make_transition_layer(1024)
            self.DB4 = self._make_dense_block(512, growth_rate, num=block_layers[3])
            self.global_average = nn.Sequential(
                nn.BatchNorm2d(1024),
                nn.ReLU(),
                nn.AdaptiveAvgPool2d((1, 1)),
            )
            self.classifier = nn.Linear(1024, num_classes)

        def forward(self, x):
            x = self.block1(x)
            x = self.DB1(x)
            x = self.TL1(x)
            x = self.DB2(x)
            x = self.TL2(x)
            x = self.DB3(x)
            x = self.TL3(x)
            x = self.DB4(x)
            x = self.global_average(x)
            x = x.view(x.shape[0], -1)  # flatten to (batch, 1024)
            x = self.classifier(x)
            return x

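This is the complete flow of the DenseNet network, matching the structure in the figure. The arguments passed to self.DB1, self.TL1, and so on may look unexplained: I worked them out by hand. For instance, DB1 receives 64 channels and outputs 64 + 6 x 32 = 256, which TL1 then halves to 128. These numbers only hold for the default growth_rate=32 and block_layers=[6, 12, 24, 16]. The construction can be written more compactly, but I spelled it out so you can see the DenseNet structure clearly and practice the channel arithmetic yourself.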

    # block is the list of layers being built; channels is the current channel count
    for i, layers in enumerate(block_layers):
        block.append(dense_block(channels, growth_rate, layers))
        channels += layers * growth_rate
        if i != len(block_layers) - 1:
            # after every dense_block except the last, add a transition layer that halves the channels
            block.append(transition(channels, channels // 2))
            channels = channels // 2

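The original way of writing it is simpler, again with a for loop. Here block_layers = [6, 12, 24, 16] gives the number of layers in each dense block. The check if i != len(block_layers) - 1 tests whether the current dense block is the last one: if it is not, a transition layer is appended and channels is halved before the next dense block is built. After the last dense block no transition layer is added.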
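The hard-coded arguments such as _make_transition_layer(256) follow from the same bookkeeping as this loop. The sketch below replays it in plain Python so the numbers can be checked; trace_channels is a hypothetical helper, not part of the original code, and its defaults are the ones used in this post.

```python
def trace_channels(stem_channels=64, growth_rate=32, block_layers=(6, 12, 24, 16)):
    """List (name, in_channels, out_channels) for each dense block and transition layer."""
    trace = []
    channels = stem_channels
    for i, num_layers in enumerate(block_layers):
        # A dense block adds growth_rate channels per layer.
        out_channels = channels + num_layers * growth_rate
        trace.append((f"DB{i + 1}", channels, out_channels))
        channels = out_channels
        if i != len(block_layers) - 1:
            # Every dense block except the last is followed by a halving transition.
            trace.append((f"TL{i + 1}", channels, channels // 2))
            channels //= 2
    return trace


for name, c_in, c_out in trace_channels():
    print(name, c_in, "->", c_out)
```

With the defaults, the final entry is DB4 going from 512 to 1024 channels, which is why the last BatchNorm2d and the Linear classifier both use 1024.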

Full source code

    import torch
    from torch import nn

    def conv_block(in_channel, out_channel):
        # BN -> ReLU -> 3x3 conv; padding=1 keeps the height and width unchanged
        layer = nn.Sequential(
            nn.BatchNorm2d(in_channel),
            nn.ReLU(),
            nn.Conv2d(in_channel, out_channel, kernel_size=3, padding=1, bias=False)
        )
        return layer

    class dense_block(nn.Module):
        def __init__(self, in_channel, growth_rate, num_layers):
            super(dense_block, self).__init__()
            block = []
            channel = in_channel
            for i in range(num_layers):
                # each layer sees all channels accumulated so far
                block.append(conv_block(channel, growth_rate))
                channel += growth_rate
            self.net = nn.Sequential(*block)

        def forward(self, x):
            for layer in self.net:
                out = layer(x)
                # concatenate the new feature maps with the running input (channel dim)
                x = torch.cat((out, x), dim=1)
            return x

    def transition(in_channel, out_channel):
        # BN -> ReLU -> 1x1 conv (changes channel count) -> 2x2 average pooling, stride 2
        trans_layer = nn.Sequential(
            nn.BatchNorm2d(in_channel),
            nn.ReLU(),
            nn.Conv2d(in_channel, out_channel, 1),
            nn.AvgPool2d(2, 2)
        )
        return trans_layer

    class densenet(nn.Module):
        def __init__(self, in_channel, num_classes, growth_rate=32, block_layers=[6, 12, 24, 16]):
            super(densenet, self).__init__()
            # stem: 7x7 conv with stride 2, then 3x3 max pooling with stride 2
            self.block1 = nn.Sequential(
                nn.Conv2d(in_channel, 64, 7, 2, 3),
                nn.BatchNorm2d(64),
                nn.ReLU(True),
                nn.MaxPool2d(3, 2, padding=1)
            )
            # the channel numbers below are worked out by hand for the default arguments
            self.DB1 = self._make_dense_block(64, growth_rate, num=block_layers[0])
            self.TL1 = self._make_transition_layer(256)
            self.DB2 = self._make_dense_block(128, growth_rate, num=block_layers[1])
            self.TL2 = self._make_transition_layer(512)
            self.DB3 = self._make_dense_block(256, growth_rate, num=block_layers[2])
            self.TL3 = self._make_transition_layer(1024)
            self.DB4 = self._make_dense_block(512, growth_rate, num=block_layers[3])
            self.global_average = nn.Sequential(
                nn.BatchNorm2d(1024),
                nn.ReLU(),
                nn.AdaptiveAvgPool2d((1, 1)),
            )
            self.classifier = nn.Linear(1024, num_classes)

        def forward(self, x):
            x = self.block1(x)
            x = self.DB1(x)
            x = self.TL1(x)
            x = self.DB2(x)
            x = self.TL2(x)
            x = self.DB3(x)
            x = self.TL3(x)
            x = self.DB4(x)
            x = self.global_average(x)
            x = x.view(x.shape[0], -1)  # flatten to (batch, 1024)
            x = self.classifier(x)
            return x

        def _make_dense_block(self, channels, growth_rate, num):
            block = []
            block.append(dense_block(channels, growth_rate, num))
            channels += num * growth_rate  # local bookkeeping only; not returned to the caller
            return nn.Sequential(*block)

        def _make_transition_layer(self, channels):
            block = []
            block.append(transition(channels, channels // 2))
            return nn.Sequential(*block)

    # quick check: push a random 224x224 RGB image through the network, printing each stage's shape
    net = densenet(3, 10)
    x = torch.rand(1, 3, 224, 224)
    for name, layer in net.named_children():
        if name == "classifier":
            # flatten before the fully connected layer
            x = x.view(x.size(0), -1)
        x = layer(x)
        print(name, 'output shape:', x.shape)
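The spatial sizes printed by the loop above can also be predicted by hand. The sketch below applies the standard output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1, to the layers that change height and width. The helper names conv_out_size and trace_spatial_size are hypothetical, and a square 224x224 input is assumed.

```python
def conv_out_size(size, kernel_size, stride, padding):
    """Output size of a convolution or pooling layer along one spatial dimension."""
    return (size + 2 * padding - kernel_size) // stride + 1


def trace_spatial_size(input_size=224):
    """Follow the height/width through the layers of the network that change it."""
    steps = []
    # block1: Conv2d(in_channel, 64, 7, 2, 3)
    size = conv_out_size(input_size, kernel_size=7, stride=2, padding=3)
    steps.append(("block1 conv", size))
    # block1: MaxPool2d(3, 2, padding=1)
    size = conv_out_size(size, kernel_size=3, stride=2, padding=1)
    steps.append(("block1 maxpool", size))
    # Dense blocks keep the size; each transition applies AvgPool2d(2, 2).
    for name in ("TL1", "TL2", "TL3"):
        size = conv_out_size(size, kernel_size=2, stride=2, padding=0)
        steps.append((name, size))
    return steps


for name, size in trace_spatial_size():
    print(name, size)
```

Under these assumptions the feature map entering the global average pooling is 7x7, which AdaptiveAvgPool2d((1, 1)) then reduces to 1x1.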