Paper title: AN AUTOMATIC FRAMEWORK FOR EXAMPLE-BASED VIRTUAL MAKEUP

From Intel's China research institute: http://tech.hqew.com/news_373212

Paper link: https://ieeexplore.ieee.org/document/6738660

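Existing methods need a lot of user input to adjust facial landmarks, and they cannot remove blemishes from the user's face. The method proposed in this paper lets the user choose from many makeup styles. It detects face landmarks with an existing algorithm, then refines them with a skin-color segmentation based on a clustered Gaussian mixture model (GMM). Next, the skin region is decomposed into three layers, and makeup is transferred to each layer with a different method.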

Index terms: virtual makeup, face landmark detection

Related work:

[1] is an example-based makeup transfer algorithm. But it relies heavily on “before-and-after” pairs, which must be taken in the same position, expression and lighting environment. In the pre-processing steps, it needs many user inputs to select special areas, such as eyebrows, moles, and freckles. [2] improves [1] with texture separation and by blending the example makeup with the input face image. But it also needs users to adjust 88 face landmarks. The makeup procedure in our framework is similar to [2], but we improve it with automatic landmark adjustment.

Additional research on virtual makeup focuses on skin color enhancement, such as [5][6]. [5] also uses “before-and-after” pairs as examples for foundation synthesis. [6] utilizes a physical model to extract hemoglobin and melanin components. It also needs the user to select skin areas that include spots or moles. Furthermore, [4] is a makeup suggestion system that searches for the best makeup for a user using a professional “before-and-after” pair dataset. However, because the dataset is limited, the cosmetic effects are also limited. There are also some studies on face beautification.

[3] is an interesting study that improves face attractiveness through structure adjustment: it adjusts the input face structure using a model trained on many attractive faces.

Other studies improve face attractiveness through texture [7][8][9], removing flaws, moles and wrinkles from faces. Some commercial software, such as Taaz [10] and Modi Face [11], provides users with virtual makeup on face photos by simulating the effects of specific cosmetics.

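But the manual adjustment of face landmarks still limits the user experience in this kind of software. The method proposed in this paper addresses two challenges: automatic face landmark detection, and makeup synthesis between the example and the input image.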

First, we utilize a state-of-the-art ASM face landmark detection algorithm to locate key points in the face, and automatically adjust skin areas with Gaussian mixture models (GMM). Second, we apply bilateral filtering to skin areas to remove flaws, wrinkles, etc. Finally, we use a makeup technique similar to [2] to transfer the makeup.

The rest of the paper is organized as follows. In section 2, we introduce the face landmark detection method and GMM-based auto-adjustment of skin area. Skin smoothing and makeup transfer methods are introduced in the following sections 3 and 4. In section 5, some results are presented. In the last section, we draw a conclusion.

Section 2: DIGITAL MAKEUP FRAMEWORK

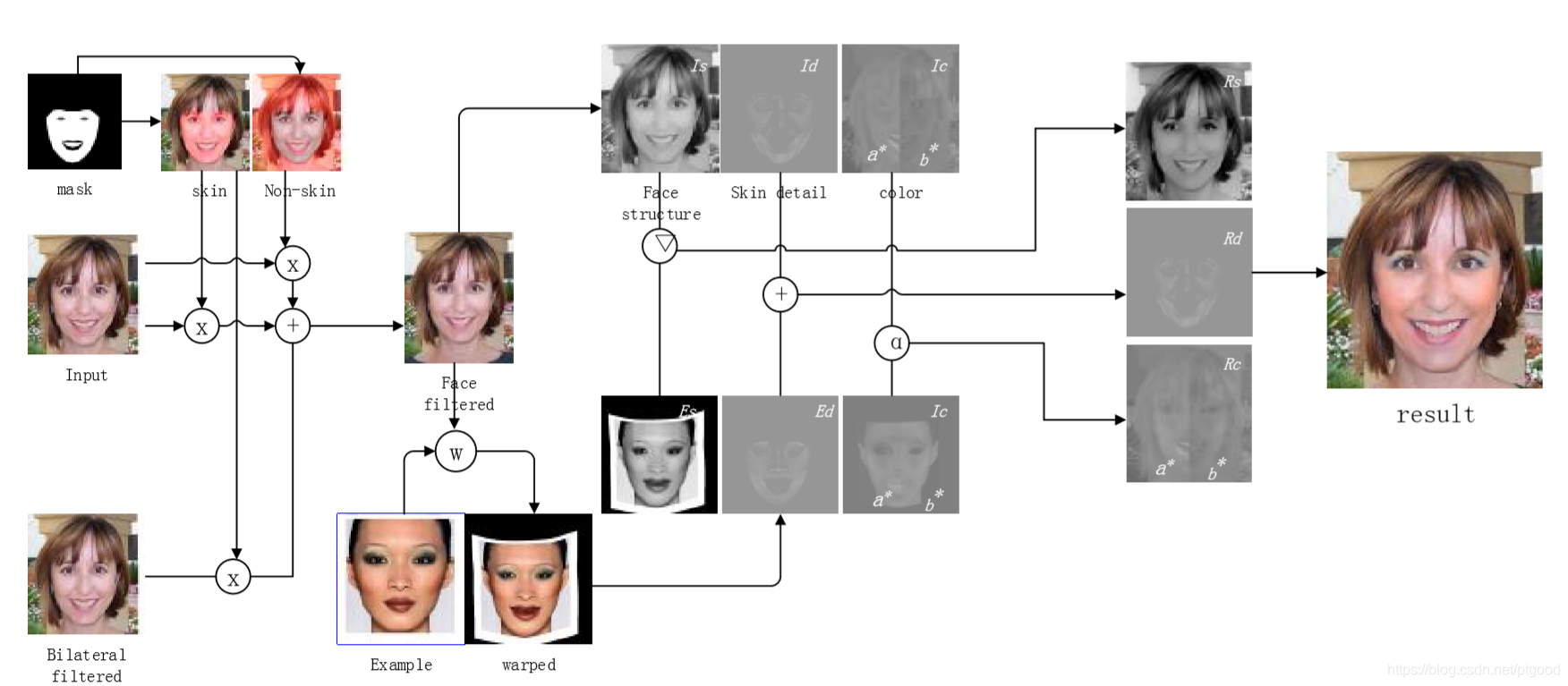

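Step 1: automatic facial landmark alignment on the input image. Step 2: skin smoothing, based on a generated skin area mask. Step 3: face alignment between the smoothed input image and the example image that provides the makeup. Step 4: layer decomposition, in which both the input image and the example image are split into three layers: face structure, skin detail, and color. Different layers use different transfer methods to get a better result. Step 5: all processed layers are merged. The framework is shown in the figure below.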

Step-by-step notes on Section 2:

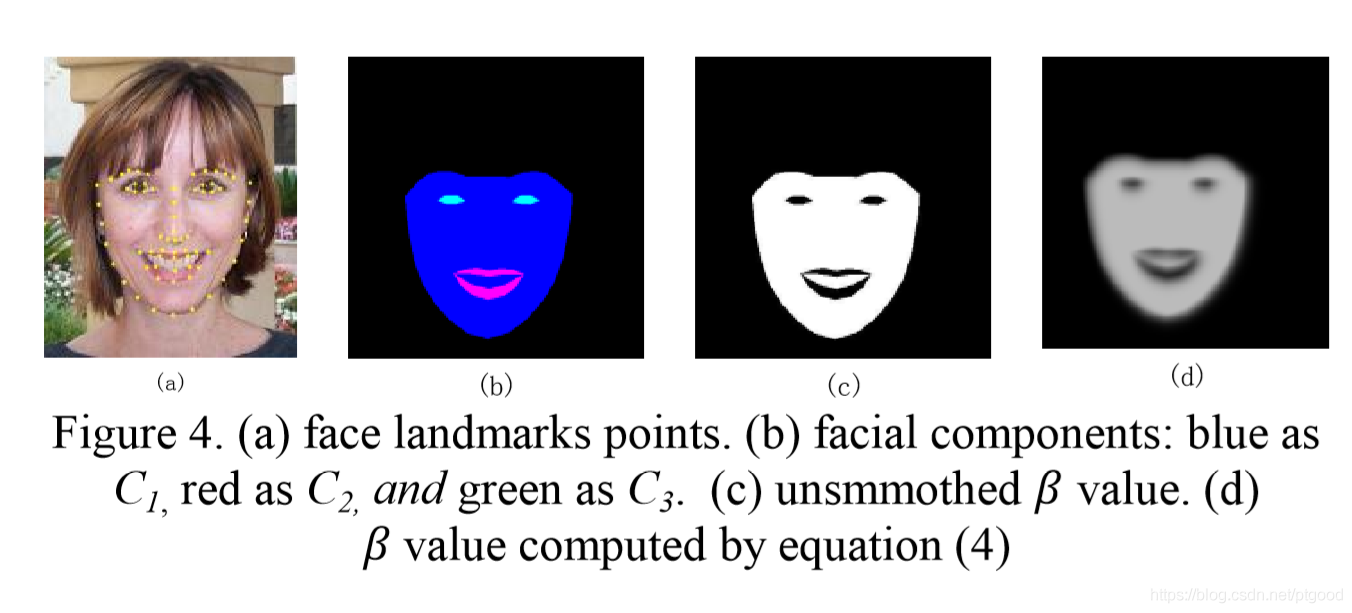

2.1. Automatic face alignment

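Active Shape Model (ASM) is a common method for facial landmark detection, but changes in lighting conditions and color differences can keep it from locating the landmarks accurately, so the user has to adjust them manually. The authors observe that many makeup transfer algorithms only process skin areas and leave out regions such as the eyes, so they use a Gaussian mixture model (GMM) to refine the skin area.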

The GMM needs samples from every region. The authors adopt the sampling method from [9], using 66 facial landmarks to select the skin areas.

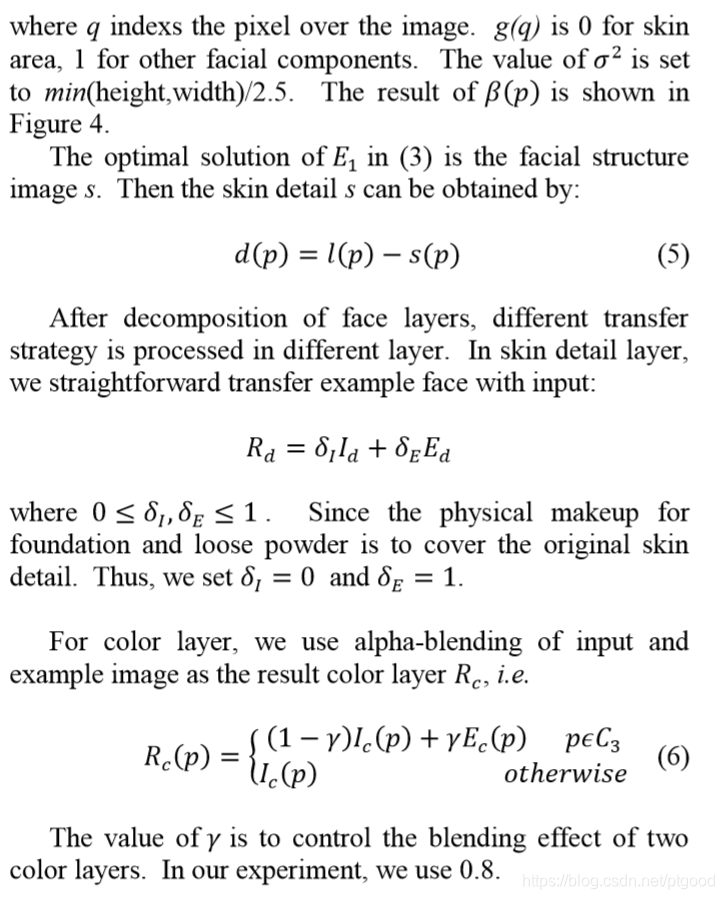

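After sampling, the authors use a GMM [11] to model the pixel color distribution.

A Bayesian segmentation algorithm then uses the GMM statistics to divide the portrait into skin and non-skin areas. It combines a multiscale random field (MSRF) with the estimator described in [12].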
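As a rough illustration of color-based skin classification, here is a minimal NumPy sketch. It is a simplified stand-in and not the authors' method: it fits a single Gaussian per class instead of a CLUSTER-estimated mixture [11], and it labels each pixel independently by maximum likelihood instead of running the MSRF/SMAP segmentation of [12]. The function names, sample colors, and toy image are all assumptions made for the example.

```python
import numpy as np

def fit_gaussian(samples):
    """Return mean and covariance of an (N, 3) array of color samples."""
    mean = samples.mean(axis=0)
    # A small diagonal term keeps the covariance invertible.
    cov = np.cov(samples, rowvar=False) + 1e-6 * np.eye(samples.shape[1])
    return mean, cov

def log_likelihood(pixels, mean, cov):
    """Log density of each row of `pixels` under a multivariate Gaussian."""
    diff = pixels - mean
    inv = np.linalg.inv(cov)
    maha = np.einsum("ni,ij,nj->n", diff, inv, diff)
    _, logdet = np.linalg.slogdet(cov)
    d = pixels.shape[1]
    return -0.5 * (maha + logdet + d * np.log(2.0 * np.pi))

def skin_mask(image, skin_samples, non_skin_samples):
    """Label a pixel True (skin) when the skin Gaussian explains it better."""
    h, w, c = image.shape
    pixels = image.reshape(-1, c).astype(float)
    skin = log_likelihood(pixels, *fit_gaussian(skin_samples))
    other = log_likelihood(pixels, *fit_gaussian(non_skin_samples))
    return (skin > other).reshape(h, w)

# Toy data (hypothetical colors): left half skin-like, right half dark.
rng = np.random.default_rng(0)
skin_samples = rng.normal([200, 150, 130], 10, size=(500, 3))
non_skin_samples = rng.normal([40, 40, 60], 10, size=(500, 3))
image = np.zeros((4, 4, 3))
image[:, :2] = [205, 148, 128]
image[:, 2:] = [35, 45, 55]
mask = skin_mask(image, skin_samples, non_skin_samples)
```

In the paper the samples would come from the landmark-defined regions of [9]; here they are drawn synthetically so the sketch runs on its own.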

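After segmentation we have a more accurate boundary between the skin and non-skin areas. The boundary is then refined using nearest-neighborhood points.

The points on the chin are left unchanged, because some people have a double chin.

The points on the eyebrows are also left unchanged, because some people have bangs.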
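One possible reading of the nearest-neighborhood refinement is that each landmark is moved to the closest point on the skin/non-skin boundary, while chin and eyebrow landmarks are held fixed. The sketch below follows that reading. It is an assumption about the step, not the paper's stated procedure, and the function names, the `frozen` index set, and the toy mask are hypothetical.

```python
import numpy as np

def boundary_points(mask):
    """Return (row, col) coordinates of skin pixels that touch a non-skin pixel.

    Pixels outside the image are treated as non-skin, so skin pixels on the
    image border also count as boundary.
    """
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    # A skin pixel is interior only if all four direct neighbours are skin.
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1] &
                padded[1:-1, :-2] & padded[1:-1, 2:])
    return np.argwhere(mask & ~interior)

def refine_landmarks(landmarks, mask, frozen):
    """Move every landmark not listed in `frozen` to its nearest boundary point."""
    boundary = boundary_points(mask)
    refined = landmarks.astype(float).copy()
    for i, point in enumerate(landmarks):
        if i in frozen or len(boundary) == 0:
            continue  # e.g. chin and eyebrow landmarks stay where ASM put them
        dist = np.linalg.norm(boundary - point, axis=1)
        refined[i] = boundary[np.argmin(dist)]
    return refined

# Toy example: a 3x3 skin square inside a 7x7 image.
mask = np.zeros((7, 7), dtype=bool)
mask[2:5, 2:5] = True
landmarks = np.array([[0, 3], [6, 6]])
refined = refine_landmarks(landmarks, mask, frozen={1})
```

Landmark 0 snaps to the top edge of the square, and landmark 1 is left alone because its index is in `frozen`.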

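/*To summarize: face detection uses the Active Shape Model, and a Gaussian mixture model (GMM) then refines the facial skin area. Specifically, sampling follows the paper in [9], using 66 facial landmarks to select the skin areas, and a GMM then models the pixel colors of the portrait. See [11] for the Gaussian mixture model.

Next, a Bayesian segmentation algorithm splits the processed portrait into skin and non-skin (eyes, eyebrows, and so on), using a multiscale random field (MSRF) and the sequential maximum a posteriori (SMAP) estimator; see [12]. The boundary is then clear, but it is still refined with nearest-neighborhood points, and the chin and eyebrow points stay unchanged.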

[9] An Algorithm for Automatic Skin Smoothing in Digital Portraits

[11] C. A. Bouman, “CLUSTER: an unsupervised algorithm for modeling Gaussian mixtures,” https://engineering.purdue.edu/~bouman/software/cluster/manual.pdf.

[12] C.A. Bouman and M. Shapiro, “A multiscale random field model for Bayesian image segmentation,” IEEE Trans. Image Processing, vol. 3, no. 2, pp.162-177, March 1994.

*/

2.2. Skin smoothing

Image filtering can remove blemishes, but it also loses detail. As a compromise, we chose the bilateral filter [11] for skin smoothing.

With the skin mask obtained in 2.1, we use a Poisson image editing method [13] to composite the input and filtered images together.
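The smoothing step can be sketched as follows: a naive bilateral filter on a grayscale image, then a composite that keeps the filtered pixels only inside the skin mask. This is an illustration under stated assumptions. The filter parameters are arbitrary, the image is a synthetic step edge with noise, and the hard mask composite with `np.where` is a plain substitute for the Poisson image editing used in the paper, which would blend the seam smoothly.

```python
import numpy as np

def bilateral_filter(img, radius=2, sigma_space=2.0, sigma_range=0.1):
    """Naive bilateral filter for a 2-D float image with values around [0, 1]."""
    h, w = img.shape
    padded = np.pad(img, radius, mode="edge")
    acc = np.zeros((h, w))
    norm = np.zeros((h, w))
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = padded[radius + dy:radius + dy + h,
                             radius + dx:radius + dx + w]
            # Spatial weight: closer neighbours count more.
            spatial = np.exp(-(dx * dx + dy * dy) / (2.0 * sigma_space ** 2))
            # Range weight: neighbours with a similar intensity count more,
            # which is what keeps strong edges from being blurred.
            similar = np.exp(-((shifted - img) ** 2) / (2.0 * sigma_range ** 2))
            weight = spatial * similar
            acc += weight * shifted
            norm += weight
    return acc / norm

# Toy image: a noisy step edge (dark left half, bright right half).
rng = np.random.default_rng(0)
img = np.full((8, 16), 0.2)
img[:, 8:] = 0.8
img += rng.normal(0.0, 0.02, img.shape)

smoothed = bilateral_filter(img)

# Hypothetical skin mask; pixels outside it keep their original values.
mask = np.zeros(img.shape, dtype=bool)
mask[2:6, 2:14] = True
result = np.where(mask, smoothed, img)
```

The noise inside each half is averaged away while the step between the halves survives, which is the trade-off between removing flaws and keeping detail that the section describes.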

2.3. Digital makeup

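In this step, the example and the input are first aligned by their facial features, and each image is then decomposed into three layers: face structure, skin detail, and color. The final result is obtained by transferring makeup separately in each layer and merging the layers. The facial feature alignment warps the example onto the input with thin plate spline (TPS) interpolation, and the control points of the warp are the landmarks obtained in 2.1.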
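To make the thin plate spline step concrete, here is a minimal NumPy sketch that fits a 2-D TPS mapping from example landmarks to input landmarks and applies it to arbitrary points. It uses the standard kernel U(r) = r² log r² and covers only the point mapping. Resampling the example image through the mapping is omitted, and the landmark coordinates and function names are made up for the example.

```python
import numpy as np

def tps_kernel(r2):
    """Radial basis U(r) = r^2 * log(r^2), with U(0) defined as 0."""
    out = np.zeros_like(r2)
    nonzero = r2 > 0
    out[nonzero] = r2[nonzero] * np.log(r2[nonzero])
    return out

def fit_tps(src, dst):
    """Solve for the TPS that maps each src control point exactly onto dst."""
    n = len(src)
    d2 = ((src[:, None, :] - src[None, :, :]) ** 2).sum(axis=2)
    K = tps_kernel(d2)
    P = np.hstack([np.ones((n, 1)), src])   # affine part: 1, x, y
    A = np.zeros((n + 3, n + 3))
    A[:n, :n] = K
    A[:n, n:] = P
    A[n:, :n] = P.T
    b = np.zeros((n + 3, 2))
    b[:n] = dst
    # Rows 0..n-1 are the kernel weights, the last three rows the affine terms.
    return np.linalg.solve(A, b)

def apply_tps(params, src, points):
    """Map `points` (M, 2) through the spline fitted on control points `src`."""
    n = len(src)
    d2 = ((points[:, None, :] - src[None, :, :]) ** 2).sum(axis=2)
    U = tps_kernel(d2)
    P = np.hstack([np.ones((len(points), 1)), points])
    return U @ params[:n] + P @ params[n:]

# Hypothetical landmarks: four corners plus a centre point that moves.
example_pts = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0],
                        [1.0, 1.0], [0.5, 0.5]])
input_pts = example_pts.copy()
input_pts[4] = [0.6, 0.55]

params = fit_tps(example_pts, input_pts)
warped = apply_tps(params, example_pts, example_pts)
```

A practical image warp would usually fit the mapping in the opposite direction, from input coordinates to example coordinates, so that every output pixel can look up where to sample from.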

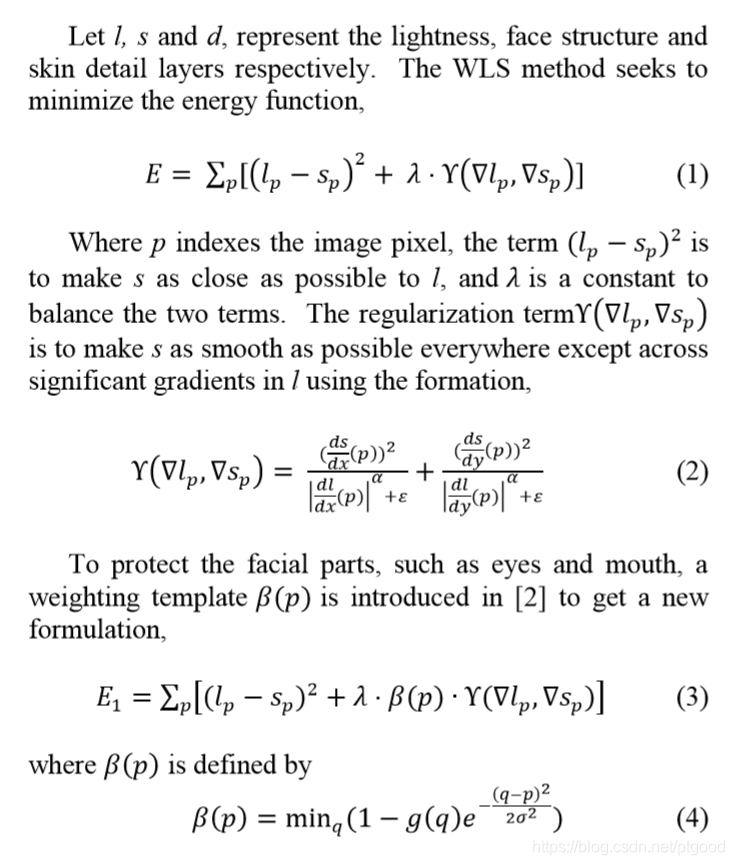

Layer decomposition details:

The input image I and the warped example image E are decomposed into color and lightness layers by converting them to CIE L*a*b* color space. The L* channel is considered the lightness layer, and a* and b* the color layer. The lightness layer is decomposed into face structure and skin detail layers. While several recent computational photography techniques can perform image decomposition, the weighted least squares (WLS) [14] is one of the state-of-the-art methods. It was originally proposed for general edge-preserving image decomposition.
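The structure/detail split of the lightness layer can be sketched with a small dense WLS solver. The smoothed result plays the role of the face structure layer and the residual is the skin detail layer. This is a toy version under assumptions: it builds a dense matrix, so it only suits tiny images; it computes the smoothness weights from the gradients of the lightness itself; and the parameters `lam`, `alpha`, and `eps` are illustrative values, not the ones used in the paper.

```python
import numpy as np

def wls_smooth(g, lam=1.0, alpha=1.2, eps=1e-4):
    """Edge-preserving smoothing by weighted least squares.

    Minimises sum((u - g)^2) + lam * sum(w * (u_p - u_q)^2) over neighbouring
    pixel pairs (p, q), where w is small across strong edges of g.
    The minimiser solves the linear system (I + lam * L) u = g.
    """
    h, w = g.shape
    n = h * w
    idx = np.arange(n).reshape(h, w)
    A = np.eye(n)

    def add_edges(p, q, weight):
        # Each neighbour pair adds a weighted graph-Laplacian term to A.
        for a, b, wt in zip(p.ravel(), q.ravel(), weight.ravel()):
            A[a, a] += lam * wt
            A[b, b] += lam * wt
            A[a, b] -= lam * wt
            A[b, a] -= lam * wt

    # Large gradient -> small weight -> little smoothing across that edge.
    wx = 1.0 / (np.abs(g[:, 1:] - g[:, :-1]) ** alpha + eps)
    wy = 1.0 / (np.abs(g[1:, :] - g[:-1, :]) ** alpha + eps)
    add_edges(idx[:, :-1], idx[:, 1:], wx)
    add_edges(idx[:-1, :], idx[1:, :], wy)
    return np.linalg.solve(A, g.ravel()).reshape(h, w)

# Toy lightness layer (L* scale): a step edge plus fine noise as "skin detail".
rng = np.random.default_rng(0)
lightness = np.full((8, 8), 30.0)
lightness[:, 4:] = 70.0
lightness += rng.normal(0.0, 1.0, lightness.shape)

structure = wls_smooth(lightness, lam=2.0)   # face structure layer
detail = lightness - structure               # skin detail layer
```

Because the detail layer is defined as the residual, adding the two layers back together reproduces the lightness layer exactly, so each layer can be edited separately and then merged.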

3. Results

The makeup examples come from a professional makeup book [15], and the input images come from the dataset in [16].

Because skin smoothing is done before makeup transfer, our method handles skin blemishes such as crow's feet better than other software.

References

[1] W.S. Tong, C.K. Tang, M.S. Brown and Y.Q. Xu, “Example-Based Cosmetic Transfer,” 15th Pacific Conference on Computer Graphics and Applications, 2007.

[2] D. Guo and T. Sim, “Digital Face Makeup by Example”, In Proc. CVPR, 2009.

[3] T. Leyvand, D. Cohen-Or, G. Dror, and D. Lischinski. “Data-driven enhancement of facial attractiveness”. ACM Trans. Graphics, 27(3):1-9, 2008.

[4] K. Scherbaum, T. Ritschel, M. Hullin, T. Thormahlen, V. Blanz, H.P. Seidel, “Computer-Suggested Facial Makeup”, In Proc. EUROGRAPHICS, vol. 30, 2011.

[5] N. Ojima, K. Yoshiba, O.Osanai, and S. Akasaki. “Image synthesis of cosmetic applied skin based on optical properties of foundation layers”, In Proc. ICIS, pages 467-468, 1999.

[6] N. Tsumura, N. Ojima, K. Sato, M. Shiraishi, H. Shimizu, “Image-based skin color and texture analysis/synthesis by extracting hemoglobin and melanin information in the skin”, ACM Trans. Graphics, 22(3):770-779, 2003.

[7] M. Brand and P. Pletscher. “A conditional random field for automatic photo editing”. In Proc. CVPR, 2008.

[8] G. Guo, “Digital Anti-Aging in Face Images”, In Proc. ICCV, 2011.

[9] C. Lee, M.T. Schramm, M. Boutin, J.P. Allebach, “An Algorithm for Automatic Skin Smoothing in Digital Portraits”, In Proc. ICIP, 2009.

[10] S. Milborrow and F. Nicolls. “Locating facial features with an extended active shape model”, In Proc. ECCV, 2008.

[11] C. A. Bouman, “CLUSTER: an unsupervised algorithm for modeling Gaussian mixtures,” https://engineering.purdue.edu/~bouman/software/cluster/manual.pdf.

[12] C.A. Bouman and M. Shapiro, “A multiscale random field model for Bayesian image segmentation,” IEEE Trans. Image Processing, vol. 3, no. 2, pp.162-177, March 1994.

[13] F.Bookstein. Principal warps: Thin-plate splines and the decomposition of deformations. IEEE Trans. Pattern Analysis and Machine Intelligence, 11(6):567-585, 1989.

[14] Z. Farbman, R. Fattal, D. Lischinski, and R. Szeliski. “Edge-preserving decompositions for multi-scale tone and detail manipulation”, ACM Trans. Graphics, 27(3):1-10, 2008.

[15] F.Nars. Makeup your Mind. PowerHouse Books, 2004.

[16] “Caltech faces 1999 (front),” http://www.vision.caltech.edu/html-files/archive.html.
