Free video tutorials: https://www.51doit.com/, or contact the blogger on WeChat: 17710299606

Run hbase shell to enter the client.

1 DDL

HBase has no concept of a database; a namespace plays the role that a database plays elsewhere.

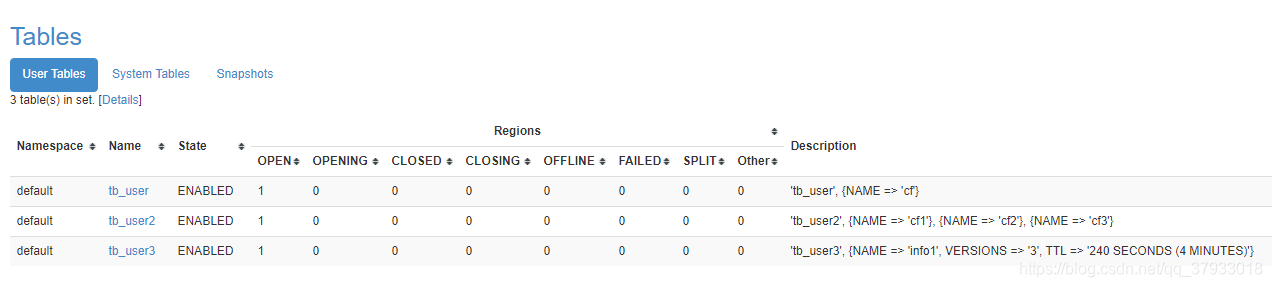
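A table name can be qualified with its namespace as namespace:table, for example create 'ns1:tb_user', 'cf' after create_namespace 'ns1'. A table created without a prefix, like every table in this article, goes into the built-in default namespace, which is why the HDFS path of tb_user in section 2 contains /default/. The Python sketch below only mirrors that naming rule; split_table_name is a hypothetical helper, not an HBase API.

```python
# Toy model of HBase's table naming rule "namespace:table".
# split_table_name is a hypothetical helper for illustration, not an HBase API.
DEFAULT_NAMESPACE = "default"  # tables created without a prefix live here


def split_table_name(name):
    """Return (namespace, table) for names like 'ns1:tb_user' or 'tb_user'."""
    if ":" in name:
        namespace, table = name.split(":", 1)
        return namespace, table
    return DEFAULT_NAMESPACE, name


if __name__ == "__main__":
    print(split_table_name("tb_user"))      # ('default', 'tb_user')
    print(split_table_name("ns1:tb_user"))  # ('ns1', 'tb_user')
    print(split_table_name("hbase:meta"))   # system tables live in 'hbase'
```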

1.1 Create a table: create (specify the column families)

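create 'tb_user', 'cf'   # create a table with one column family

create 'tb_user2', 'cf1', 'cf2', 'cf3'   # specify several column families

create 'tb_user3', {NAME => 'info1', VERSIONS => 3, TTL => 240}   # keep up to 3 versions per cell; cells expire 240 seconds after their timestamp

1.2 List the tables in the system: list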

list

["tb_user", "tb_user2"]

1.3 View a table's structure: describe / desc

describe 'tb_name'

desc 'tb_name'   # shorthand for describe: show the table structure

COLUMN FAMILIES DESCRIPTION

{NAME => 'cf1', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

{NAME => 'cf2', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

{NAME => 'cf3', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

1.4 Insert data: put

put 'tb_name', 'rowkey', 'cf:fieldname', 'value'
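Each put writes one cell addressed by row key, family:qualifier and a timestamp; writing the same cell again adds a newer version rather than overwriting the old one in place (older versions of a cell can be read in the shell with get 'tb_user3', 'rk001', {COLUMN => 'info1:age', VERSIONS => 3}). The sketch below is a simplified in-memory model of what the tb_user3 settings VERSIONS => 3 and TTL => 240 mean for reads: at most the 3 newest versions are kept, and a version older than 240 seconds is no longer returned. VersionedCells is a hypothetical class, not HBase code; real HBase filters such cells out when reading and removes them physically during compaction.

```python
import time
from collections import defaultdict


class VersionedCells:
    """Toy model of one column family with VERSIONS and TTL (not HBase code)."""

    def __init__(self, max_versions=3, ttl_seconds=240):
        self.max_versions = max_versions   # like VERSIONS => 3
        self.ttl_ms = ttl_seconds * 1000   # like TTL => 240 (seconds)
        # (rowkey, "family:qualifier") -> [(timestamp_ms, value), ...], newest first
        self.cells = defaultdict(list)

    def put(self, row, column, value, ts_ms=None):
        """Add a new version; versions beyond max_versions are dropped."""
        if ts_ms is None:
            ts_ms = int(time.time() * 1000)  # HBase timestamps are epoch millis
        versions = self.cells[(row, column)]
        versions.append((ts_ms, value))
        versions.sort(key=lambda tv: tv[0], reverse=True)
        del versions[self.max_versions:]

    def get(self, row, column, versions=1, now_ms=None):
        """Return up to `versions` newest versions that have not expired."""
        if now_ms is None:
            now_ms = int(time.time() * 1000)
        live = [(ts, v) for ts, v in self.cells.get((row, column), [])
                if now_ms - ts < self.ttl_ms]  # skip versions past their TTL
        return live[:versions]


if __name__ == "__main__":
    cf = VersionedCells(max_versions=3, ttl_seconds=240)
    for i, age in enumerate([33, 34, 35, 36]):
        cf.put("rk001", "info1:age", age, ts_ms=1_000 + i)
    print(cf.get("rk001", "info1:age", versions=5, now_ms=2_000))
    # [(1003, 36), (1002, 35), (1001, 34)]  (the oldest version was dropped)
    print(cf.get("rk001", "info1:age", now_ms=1_000 + 240_000 + 5))
    # []  (every version is older than 240 s)
```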

hbase(main):025:0> put 'tb_user3', 'rk001', 'info1:name', 'zss'

hbase(main):026:0> put 'tb_user3', 'rk001', 'info1:age', 33

hbase(main):027:0> put 'tb_user3', 'rk001', 'info1:job', 'coder'

hbase(main):029:0> put 'tb_user3', 'rk002', 'info1:job', 'teacher'

hbase(main):030:0> put 'tb_user3', 'rk002', 'info1:name', 'guanyu'

hbase(main):031:0> put 'tb_user3', 'rk002', 'info1:age', 36

1.5 View all data in a table: scan

scan 'tb_name'
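scan returns cells sorted by row key, then by column family, then by qualifier, and row keys are compared byte by byte rather than numerically. That is why, in the output below, info1:age comes before info1:job and info1:name, and why row key 11 sorts between 1 and 2 in the HFile dump of section 2. The sketch below only imitates that ordering with Python bytes comparison on a hypothetical list of cells; scan_order is an illustrative helper and does not talk to HBase.

```python
# Imitates the order in which scan prints cells: row key bytes, then family
# bytes, then qualifier bytes (unsigned lexicographic, like Python bytes).
# Illustrative only; not an HBase client.
def scan_order(cells):
    """cells: iterable of (rowkey, "family:qualifier", value) string tuples."""
    def sort_key(cell):
        row, column, _value = cell
        family, qualifier = column.split(":", 1)
        return (row.encode("utf-8"), family.encode("utf-8"),
                qualifier.encode("utf-8"))
    return sorted(cells, key=sort_key)


if __name__ == "__main__":
    cells = [
        ("2", "cf:name", "lss"),
        ("11", "cf:name", "ww"),
        ("1", "cf:name", "zss"),
        ("rk001", "info1:name", "zss"),
        ("rk001", "info1:age", "33"),
    ]
    for row, column, value in scan_order(cells):
        print(row, column, value)
```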

scan 'tb_user3'

ROW COLUMN+CELL

rk001 column=info1:age, timestamp=1598512689282, value=33

rk001 column=info1:job, timestamp=1598512696411, value=coder

rk001 column=info1:name, timestamp=1598512680666, value=zss

rk002 column=info1:age, timestamp=1598512760599, value=36

rk002 column=info1:job, timestamp=1598512737390, value=teacher

rk002 column=info1:name, timestamp=1598512747469, value=guanyu

2 Data storage location

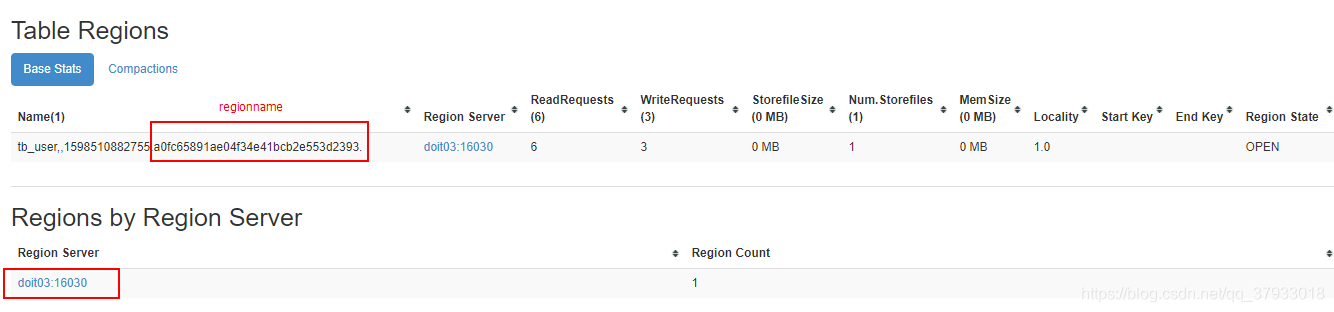

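The table's information can be seen on the HBase web UI, and each table also has a corresponding directory in HDFS. To inspect the data stored there: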

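- 1) Put data into the table.

- 2) Flush the table with flush 'tb_name': this writes the inserted data, which until then is held in memory, to the table's directory in HDFS.

- 3) Print the contents of an HFile with hbase hfile -p -f <path of the HFile>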
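The HFile path passed to step 3 follows the layout <root>/data/<namespace>/<table>/<encoded region name>/<column family>/<HFile name>. The sketch below assumes the root directory is /hbase, as in the example path (it is whatever hbase.rootdir is set to on a given cluster). describe_hfile_path is a hypothetical helper that only splits such a path into its parts, which can help when locating the file to pass to hbase hfile -f.

```python
from pathlib import PurePosixPath


def describe_hfile_path(path, root="/hbase"):
    """Split <root>/data/<ns>/<table>/<region>/<family>/<hfile> into parts.

    Hypothetical helper for illustration; assumes hbase.rootdir == root.
    """
    # relative_to raises ValueError if the path is not under <root>/data
    rel = PurePosixPath(path).relative_to(PurePosixPath(root) / "data")
    if len(rel.parts) != 5:
        raise ValueError(f"unexpected HFile path layout: {path}")
    namespace, table, region, family, hfile = rel.parts
    return {"namespace": namespace, "table": table, "region": region,
            "family": family, "hfile": hfile}


if __name__ == "__main__":
    p = ("/hbase/data/default/tb_user/a0fc65891ae04f34e41bcb2e553d2393"
         "/cf/6f9ac1ff9eaa45509f2fc97ff6844d6a")
    for part, value in describe_hfile_path(p).items():
        print(f"{part:>9}: {value}")
```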

[root@doit01 ~]# hbase hfile -p -f /hbase/data/default/tb_user/a0fc65891ae04f34e41bcb2e553d2393/cf/6f9ac1ff9eaa45509f2fc97ff6844d6a

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/apps/hadoop-3.2.1/share/hadoop/common/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/apps/hbase-2.2.5/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2020-08-27 15:55:45,980 INFO [main] metrics.MetricRegistries: Loaded MetricRegistries class org.apache.hadoop.hbase.metrics.impl.MetricRegistriesImpl

K: 1/cf:name/1598514721783/Put/vlen=3/seqid=4 V: zss

K: 11/cf:name/1598514735040/Put/vlen=2/seqid=6 V: ww

K: 2/cf:name/1598514727708/Put/vlen=3/seqid=5 V: lss

Scanned kv count -> 3

3 Deleting and updating data

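Deleting and updating both work by appending new entries instead of modifying existing ones (HFiles are immutable; the new entries end up in a new HFile after the next flush). The key (K) of each entry records the operation type, e.g. Delete / DeleteFamily (tombstone markers).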

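- delete: deletes a cell, e.g. delete 'tb_user', '11', 'cf:name'

- deleteall: deletes a whole row (or a column family or cell of it), e.g. deleteall 'tb_user', '1'

- put: updates data by writing a newer version of the cell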

K: 2/cf:name/1598516882547/Put/vlen=4/seqid=16 V: lss2

K: 1/cf:/1598516244611/DeleteFamily/vlen=0/seqid=12 V:

K: 11/cf:name/1598514735040/Delete/vlen=0/seqid=10 V:
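Each K: line above reads as row/family:qualifier/timestamp/type/vlen/seqid. The sketch below parses such lines and applies the tombstones to the entries from the first dump in section 2: a Delete marker hides the version with exactly the same timestamp, a DeleteFamily marker hides every cell of that family in the row whose timestamp is at or below its own, and the newest surviving Put is what a read returns. It is a simplification of HBase's read path under stated assumptions: it ignores VERSIONS, TTL, and other marker types such as DeleteColumn, and parse and visible_cells are hypothetical helpers, not HBase APIs.

```python
import re

# Matches lines printed by `hbase hfile -p`, e.g.
# "K: 2/cf:name/1598516882547/Put/vlen=4/seqid=16 V: lss2"
KV_LINE = re.compile(
    r"^K: (?P<row>[^/]*)/(?P<family>[^:/]*):(?P<qualifier>[^/]*)"
    r"/(?P<ts>\d+)/(?P<type>\w+)/vlen=\d+/seqid=\d+ V:(?: (?P<value>.*))?$"
)


def parse(line):
    """Return (row, family, qualifier, timestamp, type, value)."""
    m = KV_LINE.match(line.strip())
    if m is None:
        raise ValueError(f"not a KV line: {line!r}")
    return (m["row"], m["family"], m["qualifier"], int(m["ts"]),
            m["type"], m["value"] or "")


def visible_cells(lines):
    """Newest surviving Put per (row, 'family:qualifier') after tombstones."""
    kvs = [parse(line) for line in lines]
    # Delete: hides the version with exactly this timestamp.
    version_deletes = {(r, f, q, ts) for r, f, q, ts, t, _v in kvs if t == "Delete"}
    # DeleteFamily: hides all cells of the family in the row with ts <= marker.
    family_deletes = {}
    for r, f, _q, ts, t, _v in kvs:
        if t == "DeleteFamily":
            family_deletes[(r, f)] = max(ts, family_deletes.get((r, f), ts))
    result = {}
    for r, f, q, ts, t, v in kvs:
        if t != "Put" or (r, f, q, ts) in version_deletes:
            continue
        if ts <= family_deletes.get((r, f), -1):
            continue
        key = (r, f"{f}:{q}")
        if key not in result or ts > result[key][0]:
            result[key] = (ts, v)  # keep the newest version
    return {key: value for key, (_ts, value) in result.items()}


if __name__ == "__main__":
    dump = [
        "K: 1/cf:name/1598514721783/Put/vlen=3/seqid=4 V: zss",
        "K: 11/cf:name/1598514735040/Put/vlen=2/seqid=6 V: ww",
        "K: 2/cf:name/1598514727708/Put/vlen=3/seqid=5 V: lss",
        "K: 2/cf:name/1598516882547/Put/vlen=4/seqid=16 V: lss2",
        "K: 1/cf:/1598516244611/DeleteFamily/vlen=0/seqid=12 V:",
        "K: 11/cf:name/1598514735040/Delete/vlen=0/seqid=10 V:",
    ]
    print(visible_cells(dump))  # {('2', 'cf:name'): 'lss2'}
```

Rows 1 and 11 disappear because their tombstones cover the old Puts, while row 2 shows the updated value lss2, even though the old lss entry still physically exists in the earlier HFile until a compaction removes it.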