Connecting ClickHouse to a MySQL data source

- On linux123, create a test database and table in MySQL and insert some data

create database bigdataClickHouse;

use bigdataClickHouse;

create table student(id int, name varchar(40), age int);

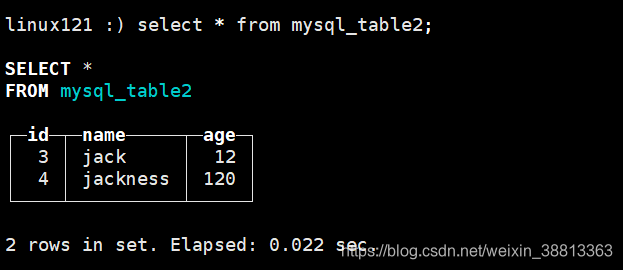

insert into student values(3,'jack',12);

insert into student values(4,'jackness',120);

- In ClickHouse, create a table that uses the MySQL engine

CREATE TABLE mysql_table2 ( `id` UInt32, `name` String, `age` UInt32 )ENGINE = MySQL('linux123:3306', 'bigdataClickHouse', 'student', 'root', '12345678')

- The MySQL data can now be queried from ClickHouse
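A query along these lines should return the rows inserted on the MySQL side. This is a minimal sketch that only reuses the `mysql_table2` table defined above; the `WHERE` filter is an illustrative addition, not part of the original steps.

```sql
-- Read through the MySQL engine table; rows are fetched from linux123 at query time.
SELECT id, name, age FROM mysql_table2 ORDER BY id;

-- Illustrative filter (an assumption, not one of the original steps).
SELECT name FROM mysql_table2 WHERE age > 100;
```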

Connecting to Kafka

- Start Kafka and create a topic

Start: kafka-server-start.sh -daemon config/server.properties

Create the topic: kafka-topics.sh --zookeeper localhost:2181/myKafka --create --topic clickhouseTest --partitions 1 --replication-factor 1

- In ClickHouse, create a Kafka engine table that reads from the topic

CREATE TABLE queue(q_date String,level String,message String) ENGINE=Kafka SETTINGS kafka_broker_list='linux122:9092',kafka_topic_list='clickhouseTest',kafka_group_name='group33',kafka_format='CSV',kafka_num_consumers=1;

4. Create the daily table

CREATE TABLE daily ( day Date, level String, total UInt64 ) ENGINE = SummingMergeTree(day, (day, level), 8192);
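The statement above uses the legacy MergeTree parameter form (date column, primary key, index granularity), which newer ClickHouse releases deprecate. As a sketch, assuming a recent server version, the same table could be declared with explicit clauses. It is meant as a replacement for the legacy statement, not something to run in addition to it.

```sql
-- Current-syntax counterpart of the legacy definition:
-- monthly partitions on day, sorted by (day, level), index granularity 8192.
CREATE TABLE daily
(
    day Date,
    level String,
    total UInt64
)
ENGINE = SummingMergeTree()
PARTITION BY toYYYYMM(day)
ORDER BY (day, level)
SETTINGS index_granularity = 8192;
```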

5. Create the materialized view

CREATE MATERIALIZED VIEW consumer TO daily AS SELECT q_date as day,level,message FROM queue;
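Note that `daily` has a `total` column while the view above selects `message`. If the intent is to count messages per day and level, which is an assumption about the goal rather than something stated here, a view along these lines would match the target table's columns:

```sql
-- Drop the earlier view so that only one definition feeds daily.
DROP TABLE IF EXISTS consumer;

-- toDate parses the 'YYYY-MM-DD' strings in q_date; count() fills total.
CREATE MATERIALIZED VIEW consumer TO daily AS
SELECT toDate(q_date) AS day, level, count() AS total
FROM queue
GROUP BY day, level;
```

This does not address the consumer assignment error reported at the end of this post; it only aligns the view's output with the columns of `daily`.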

6. Send messages to the Kafka topic

kafka-console-producer.sh --topic clickhouseTest --broker-list localhost:9092

2020-02-20,level2,message2

2020-02-21,level1,message1

7. Query the data in daily
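Because `SummingMergeTree` only collapses rows during background merges, a query on `daily` usually aggregates explicitly. A minimal sketch, assuming the materialized view populates `total`:

```sql
-- Sum explicitly; unmerged parts may still hold several rows per (day, level).
SELECT day, level, sum(total) AS total
FROM daily
GROUP BY day, level
ORDER BY day, level;
```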

Checking the ClickHouse log shows the following error:

StorageKafka (queue): Can't get assignment. It can be caused by some issue with consumer group (not enough partitions?). Will keep trying.

How can this be resolved?