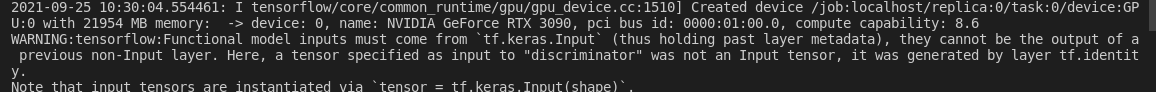

WARNING:tensorflow:Functional model inputs must come from tf.keras.Input (thus holding past layer metadata), they cannot be the output of a previous non-Input layer. Here, a tensor specified as input to “discriminator” was not an Input tensor, it was generated by layer tf.identity.

My faulty code:

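    from tensorflow.keras.layers import Input, Dense, Dropout, Conv2D, Flatten, add
    from tensorflow.keras.models import Model

    # input_shape, latent_dim and kernel_size are defined elsewhere in the script.
    def build_discriminator_with_teacher(filters=16):
        inputs = Input(shape=input_shape, name='dis_input')
        x = inputs
        z_teacher = Input(shape=(latent_dim,), name='z_teacher')
        # BUG: this rebinds z_teacher to the Dropout output, so the Input tensor is lost.
        z_teacher = Dropout(rate=0.75)(z_teacher)
        z_embedding = Dense(1024, activation='linear', name='z_embbding_dis')(z_teacher)
        # 3 convolutional layers
        for i in range(3):
            filters *= 2
            x = Conv2D(filters=filters,
                       kernel_size=kernel_size,
                       activation='relu',
                       strides=2,
                       padding='same')(x)
        x = Flatten()(x)
        # 16*16*128 -> 1024; apply dropout to the flattened 16*16*128 layer
        x = Dropout(rate=0.2)(x)
        x = Dense(1024, activation='relu')(x)
        # Add z_embedding and x element-wise
        x = add([x, z_embedding])
        x = Dense(1, activation='linear')(x)
        # z_teacher here is the Dropout output, not an Input tensor, hence the warning.
        return Model(inputs=[inputs, z_teacher], outputs=x, name='discriminator')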

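Do not rebind the variable that holds an input layer later in the function! In the code above, z_teacher starts out as an Input tensor and is then overwritten with the output of Dropout, so the z_teacher passed to Model(inputs=...) is no longer an Input tensor. That is what triggers the warning.

The fix: name the Input tensor z_teacher_input, keep it unchanged, and pass it to Model(inputs=...).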
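To make the rename concrete, here is a minimal sketch of the corrected function. The values of input_shape, latent_dim and kernel_size are assumptions for illustration only (the post does not show them); 128x128 is chosen so that three stride-2 convolutions give the 16*16*128 feature map mentioned in the comment.

```python
from tensorflow.keras.layers import Input, Dense, Dropout, Conv2D, Flatten, add
from tensorflow.keras.models import Model

# Assumed values, not taken from the original post.
input_shape = (128, 128, 3)
latent_dim = 100
kernel_size = 3

def build_discriminator_with_teacher(filters=16):
    inputs = Input(shape=input_shape, name='dis_input')
    x = inputs
    # Keep the Input tensor under its own name and never rebind it.
    z_teacher_input = Input(shape=(latent_dim,), name='z_teacher')
    z_teacher = Dropout(rate=0.75)(z_teacher_input)
    z_embedding = Dense(1024, activation='linear', name='z_embbding_dis')(z_teacher)
    # 3 convolutional layers, each halving the spatial size
    for i in range(3):
        filters *= 2
        x = Conv2D(filters=filters,
                   kernel_size=kernel_size,
                   activation='relu',
                   strides=2,
                   padding='same')(x)
    x = Flatten()(x)
    x = Dropout(rate=0.2)(x)
    x = Dense(1024, activation='relu')(x)
    # Add z_embedding and x element-wise
    x = add([x, z_embedding])
    x = Dense(1, activation='linear')(x)
    # Pass the original Input tensors, not any downstream layer output.
    return Model(inputs=[inputs, z_teacher_input], outputs=x, name='discriminator')
```

With this change both entries of the inputs list are genuine Input tensors, so the functional model should build without the warning.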