DataNode Startup Analysis

The main method

DataNode is started through the main method of DataNode.java. The call chain passes through many methods, so only the key ones are covered here.

The secureMain method

```java
public static void secureMain(String args[], SecureResources resources) {
  try {
    StringUtils.startupShutdownMessage(DataNode.class, args, LOG);
    DataNode datanode = createDataNode(args, null, resources);
    if (datanode != null) {
      datanode.join();
    }
  } // catch/finally clauses omitted in this excerpt
}
```

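In secureMain, startupShutdownMessage prints the DataNode startup banner to the log, recording which class was started, the host, the arguments, the version, the build information, and so on.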

createDataNode is then called to instantiate the DataNode.

datanode.join() actually delegates to BlockPoolManager's join, which waits on (joins) the BPServiceActor threads running on this DataNode.

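- Each DataNode has exactly one BlockPoolManager instance.

- Each BlockPoolManager manages the BPOfferService instances for all nameservices (one BPOfferService per nameservice).

- Each BPOfferService manages, for its namespace, one BPServiceActor worker thread per NameNode: one Active NN and one or more Standby NNs.

- A BPServiceActor is a worker thread that talks to one specific NameNode: it sends heartbeats and receives and executes the commands returned in the responses.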
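To make this ownership hierarchy concrete, here is a minimal Java sketch. SimpleBlockPoolManager, SimpleOfferService and SimpleActor are hypothetical stand-ins for BlockPoolManager, BPOfferService and BPServiceActor; the actor threads do no real work, and joinAll only approximates what datanode.join() ends up waiting on.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for BPServiceActor: one worker thread per NameNode. */
final class SimpleActor implements Runnable {
  final String nnAddress;
  final Thread thread;

  SimpleActor(String nnAddress) {
    this.nnAddress = nnAddress;
    this.thread = new Thread(this, "actor-" + nnAddress);
  }

  @Override
  public void run() {
    // A real actor would handshake, register, then heartbeat in a loop.
  }
}

/** Hypothetical stand-in for BPOfferService: one per nameservice (block pool). */
final class SimpleOfferService {
  final List<SimpleActor> actors = new ArrayList<>();

  SimpleOfferService(List<String> nnAddresses) {
    for (String addr : nnAddresses) {
      actors.add(new SimpleActor(addr)); // Active and Standby NNs alike
    }
  }

  void start() {
    for (SimpleActor a : actors) a.thread.start();
  }

  void join() {
    for (SimpleActor a : actors) {
      try {
        a.thread.join();
      } catch (InterruptedException e) {
        Thread.currentThread().interrupt(); // preserve interrupt status
        return;
      }
    }
  }
}

/** Hypothetical stand-in for BlockPoolManager: one per DataNode. */
final class SimpleBlockPoolManager {
  final Map<String, SimpleOfferService> byNameservice = new LinkedHashMap<>();

  void add(String nameserviceId, List<String> nnAddresses) {
    byNameservice.put(nameserviceId, new SimpleOfferService(nnAddresses));
  }

  void startAll() {
    for (SimpleOfferService s : byNameservice.values()) s.start();
  }

  /** Waits for every actor thread, roughly what datanode.join() blocks on. */
  void joinAll() {
    for (SimpleOfferService s : byNameservice.values()) s.join();
  }

  int actorCount() {
    int n = 0;
    for (SimpleOfferService s : byNameservice.values()) n += s.actors.size();
    return n;
  }
}
```

For example, a federated cluster with nameservice ns1 (two HA NameNodes) and ns2 (one NameNode) yields one manager, two offer services and three actor threads.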

The createDataNode method

```java
public static DataNode createDataNode(String args[], Configuration conf,
    SecureResources resources) throws IOException {
  DataNode dn = instantiateDataNode(args, conf, resources);
  if (dn != null) {
    dn.runDatanodeDaemon();
  }
  return dn;
}
```

Once the DataNode has been instantiated, runDatanodeDaemon starts its daemon threads.

```java
public void runDatanodeDaemon() throws IOException {
  blockPoolManager.startAll(); // start the BPServiceActor threads
  // start dataXceiverServer
  dataXceiverServer.start();
  if (localDataXceiverServer != null) {
    localDataXceiverServer.start();
  }
  ipcServer.start();
  startPlugins(conf);
}
```

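The startDataNode method

startDataNode is called from the DataNode constructor, that is, during instantiateDataNode, so it runs before runDatanodeDaemon starts the threads.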

```java
void startDataNode(Configuration conf,
                   List<StorageLocation> dataDirs,
                   // DatanodeProtocol namenode,
                   SecureResources resources
                   ) throws IOException {
  // ... (other initialization omitted in this excerpt)
  initDataXceiver(conf);   // initialize DataXceiverServer (streaming data transfer)
  startInfoServer(conf);   // start the DataNode HTTP service
  pauseMonitor = new JvmPauseMonitor(conf);
  pauseMonitor.start();    // start the JVM pause (e.g. long GC) monitor thread
  initIpcServer(conf);     // initialize the RPC server
  blockPoolManager = new BlockPoolManager(this);
  blockPoolManager.refreshNamenodes(conf); // register with the NameNode(s): important
}
```

Initializing DataXceiverServer

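During DataNode initialization, a DataXceiverServer object is created to listen for all streaming-interface requests; the common read and write operations are handled on the DataNode side by DataXceiverServer. The listening address of TcpPeerServer is configured by dfs.datanode.address. TcpPeerServer sets TCP_NODELAY on its sockets and then sets the receive buffer size to 128 KB (the default is 8 KB). Finally, DataXceiverServer is wrapped in a Daemon thread belonging to the thread group dataXceiverServer, and the thread group itself is marked as a daemon group, so it is destroyed automatically once it becomes empty.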

```java
private void initDataXceiver() throws IOException {
  // find free port or use privileged port provided
  TcpPeerServer tcpPeerServer;
  // Listen backlog length: ipc.server.listen.queue.size (default 128)
  int backlogLength = getConf().getInt(
      CommonConfigurationKeysPublic.IPC_SERVER_LISTEN_QUEUE_SIZE_KEY,
      CommonConfigurationKeysPublic.IPC_SERVER_LISTEN_QUEUE_SIZE_DEFAULT);
  tcpPeerServer = new TcpPeerServer(dnConf.socketWriteTimeout,
      DataNode.getStreamingAddr(getConf()), backlogLength);
  if (dnConf.getTransferSocketRecvBufferSize() > 0) {
    tcpPeerServer.setReceiveBufferSize(
        dnConf.getTransferSocketRecvBufferSize());
  }
  streamingAddr = tcpPeerServer.getStreamingAddr();
  LOG.info("Opened streaming server at " + streamingAddr);
  this.threadGroup = new ThreadGroup("dataXceiverServer");
  xserver = new DataXceiverServer(tcpPeerServer, getConf(), this);
  this.dataXceiverServer = new Daemon(threadGroup, xserver);
  this.threadGroup.setDaemon(true); // auto destroy when empty
}
```
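The socket setup described above can be sketched with plain java.net classes. This is not TcpPeerServer: it is a hypothetical StreamingListenerSketch that binds to an ephemeral loopback port instead of dfs.datanode.address, uses a 128 KB receive buffer and a backlog of 128, disables Nagle's algorithm (TCP_NODELAY) on accepted sockets, and runs the accept loop in a daemon thread inside a named ThreadGroup. Unlike Hadoop, it does not mark the ThreadGroup itself as daemon, because ThreadGroup.setDaemon is deprecated in recent JDKs.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;

/** Hypothetical sketch of a streaming listener; not Hadoop's TcpPeerServer. */
final class StreamingListenerSketch {
  static final int RECV_BUFFER = 128 * 1024; // 128 KB receive buffer
  static final int BACKLOG = 128;            // listen backlog, cf. ipc.server.listen.queue.size

  final ThreadGroup group = new ThreadGroup("dataXceiverServerSketch");
  final ServerSocket server;
  final Thread acceptor;

  StreamingListenerSketch() {
    server = bindLoopback();
    acceptor = new Thread(group, this::acceptLoop, "acceptor");
    acceptor.setDaemon(true); // like Hadoop's Daemon wrapper: won't keep the JVM alive
  }

  private static ServerSocket bindLoopback() {
    try {
      ServerSocket s = new ServerSocket();
      // Set before bind() so buffers > 64 KB can apply to accepted sockets.
      s.setReceiveBufferSize(RECV_BUFFER);
      s.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0), BACKLOG);
      return s;
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  private void acceptLoop() {
    while (!server.isClosed()) {
      try (Socket s = server.accept()) {
        s.setTcpNoDelay(true); // disable Nagle's algorithm on the data socket
        // A real server would hand the socket to a worker (DataXceiver) here;
        // this sketch simply closes it.
      } catch (IOException e) {
        return; // accept or socket setup failed (e.g. server closed): stop
      }
    }
  }

  void start() { acceptor.start(); }

  int port() { return server.getLocalPort(); }

  void close() {
    try {
      server.close();
    } catch (IOException ignored) {
      // nothing useful to do in a sketch
    }
  }
}
```

The receive buffer is set before bind() because the java.net.ServerSocket documentation states that a receive window larger than 64 KB must be requested before the socket is bound.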

Starting the DataNode web service

```java
private void startInfoServer()
    throws IOException {
  // SecureDataNodeStarter will bind the privileged port to the channel if
  // the DN is started by JSVC, pass it along.
  ServerSocketChannel httpServerChannel = secureResources != null ?
      secureResources.getHttpServerChannel() : null;
  httpServer = new DatanodeHttpServer(getConf(), this, httpServerChannel);
  httpServer.start();
  if (httpServer.getHttpAddress() != null) {
    infoPort = httpServer.getHttpAddress().getPort();
  }
  if (httpServer.getHttpsAddress() != null) {
    infoSecurePort = httpServer.getHttpsAddress().getPort();
  }
}
```

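The HTTP address is configured by DFS_DATANODE_HTTP_ADDRESS_KEY (dfs.datanode.http.address) and the HTTPS address by DFS_DATANODE_HTTPS_ADDRESS_KEY (dfs.datanode.https.address). The helper below, which picks the hostname for the SPNEGO principal, reads the HTTP address first and falls back to the HTTPS address (or its default) only when the HTTP address is not set.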

```java
private static String getHostnameForSpnegoPrincipal(Configuration conf) {
  String addr = conf.getTrimmed(DFS_DATANODE_HTTP_ADDRESS_KEY, null);
  if (addr == null) {
    addr = conf.getTrimmed(DFS_DATANODE_HTTPS_ADDRESS_KEY,
                           DFS_DATANODE_HTTPS_ADDRESS_DEFAULT);
  }
  InetSocketAddress inetSocker = NetUtils.createSocketAddr(addr);
  return inetSocker.getHostString();
}
```
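The fallback order can be mimicked with a plain Map standing in for Hadoop's Configuration. SpnegoHostSketch and its address parsing are hypothetical simplifications of Configuration.getTrimmed and NetUtils.createSocketAddr, and the HTTPS default 0.0.0.0:9865 is an assumption taken from Hadoop 3.x (Hadoop 2.x used port 50475).

```java
import java.net.InetSocketAddress;
import java.util.Map;

/** Hypothetical sketch of the HTTP-then-HTTPS address fallback. */
final class SpnegoHostSketch {
  static final String HTTP_KEY = "dfs.datanode.http.address";
  static final String HTTPS_KEY = "dfs.datanode.https.address";
  // Assumed default (Hadoop 3.x value).
  static final String HTTPS_DEFAULT = "0.0.0.0:9865";

  static String hostnameForSpnego(Map<String, String> conf) {
    String addr = trimmedOrNull(conf.get(HTTP_KEY)); // HTTP address wins if set
    if (addr == null) {
      String https = trimmedOrNull(conf.get(HTTPS_KEY));
      addr = (https != null) ? https : HTTPS_DEFAULT;
    }
    return parse(addr).getHostString();
  }

  /** Splits "host:port" without resolving the host. */
  static InetSocketAddress parse(String hostPort) {
    int colon = hostPort.lastIndexOf(':');
    String host = hostPort.substring(0, colon);
    int port = Integer.parseInt(hostPort.substring(colon + 1));
    return InetSocketAddress.createUnresolved(host, port);
  }

  private static String trimmedOrNull(String v) {
    return (v == null) ? null : v.trim();
  }
}
```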

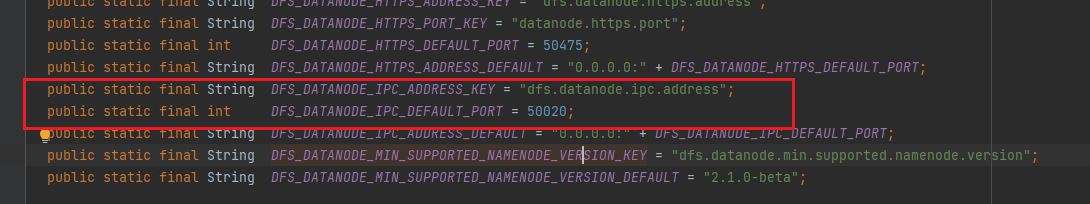

Initializing the DataNode RPC service (started later in runDatanodeDaemon)

```java
private void initIpcServer() throws IOException {
  InetSocketAddress ipcAddr = NetUtils.createSocketAddr(
      getConf().getTrimmed(DFS_DATANODE_IPC_ADDRESS_KEY)); // from dfs.datanode.ipc.address
  ipcServer = new RPC.Builder(getConf())
      .setProtocol(ClientDatanodeProtocolPB.class)
      .setInstance(service)
      .setBindAddress(ipcAddr.getHostName())
      .setPort(ipcAddr.getPort())
      .setNumHandlers(
          getConf().getInt(DFS_DATANODE_HANDLER_COUNT_KEY,
              DFS_DATANODE_HANDLER_COUNT_DEFAULT)).setVerbose(false)
      .setSecretManager(blockPoolTokenSecretManager).build();
}
```

From how ipcAddr is created, the IPC port comes from dfs.datanode.ipc.address, whose default port is 50020 in Hadoop 2.x (9867 since Hadoop 3.0).

Registering with the NameNode (key part)

The BlockPoolManager class

```java
private void doRefreshNamenodes(
    Map<String, Map<String, InetSocketAddress>> addrMap,
    Map<String, Map<String, InetSocketAddress>> lifelineAddrMap)
    throws IOException {
  Set<String> toRefresh = Sets.newLinkedHashSet();
  Set<String> toAdd = Sets.newLinkedHashSet();
  Set<String> toRemove;

  synchronized (this) {
    // Step 1. For each of the new nameservices, figure out whether
    // it's an update of the set of NNs for an existing NS,
    // or an entirely new nameservice.
    for (String nameserviceId : addrMap.keySet()) {
      if (bpByNameserviceId.containsKey(nameserviceId)) {
        toRefresh.add(nameserviceId);
      } else {
        toAdd.add(nameserviceId);
      }
    }

    // Step 2. Any nameservices we currently have but are no longer present
    // need to be removed.
    toRemove = Sets.newHashSet(Sets.difference(
        bpByNameserviceId.keySet(), addrMap.keySet()));

    // Step 3. Start new nameservices (the key step for registration)
    if (!toAdd.isEmpty()) {
      for (String nsToAdd : toAdd) {
        Map<String, InetSocketAddress> nnIdToAddr = addrMap.get(nsToAdd);
        Map<String, InetSocketAddress> nnIdToLifelineAddr =
            lifelineAddrMap.get(nsToAdd);
        ArrayList<InetSocketAddress> addrs =
            Lists.newArrayListWithCapacity(nnIdToAddr.size());
        ArrayList<InetSocketAddress> lifelineAddrs =
            Lists.newArrayListWithCapacity(nnIdToAddr.size());
        for (String nnId : nnIdToAddr.keySet()) {
          addrs.add(nnIdToAddr.get(nnId));
          lifelineAddrs.add(nnIdToLifelineAddr != null ?
              nnIdToLifelineAddr.get(nnId) : null);
        }
        // One BPOfferService per nameservice, one BPServiceActor per NN
        BPOfferService bpos = createBPOS(nsToAdd, addrs, lifelineAddrs);
        bpByNameserviceId.put(nsToAdd, bpos);
        offerServices.add(bpos);
      }
    }
    startAll();
  }

  // Step 4. Shut down old nameservices. This happens outside
  // of the synchronized(this) lock since they need to call
  // back to .remove() from another thread
  if (!toRemove.isEmpty()) {
    for (String nsToRemove : toRemove) {
      BPOfferService bpos = bpByNameserviceId.get(nsToRemove);
      bpos.stop();
      bpos.join();
      // they will call remove on their own
    }
  }

  // Step 5. Update nameservices whose NN list has changed
  if (!toRefresh.isEmpty()) {
    for (String nsToRefresh : toRefresh) {
      final BPOfferService bpos = bpByNameserviceId.get(nsToRefresh);
      Map<String, InetSocketAddress> nnIdToAddr = addrMap.get(nsToRefresh);
      Map<String, InetSocketAddress> nnIdToLifelineAddr =
          lifelineAddrMap.get(nsToRefresh);
      final ArrayList<InetSocketAddress> addrs =
          Lists.newArrayListWithCapacity(nnIdToAddr.size());
      final ArrayList<InetSocketAddress> lifelineAddrs =
          Lists.newArrayListWithCapacity(nnIdToAddr.size());
      for (String nnId : nnIdToAddr.keySet()) {
        addrs.add(nnIdToAddr.get(nnId));
        lifelineAddrs.add(nnIdToLifelineAddr != null ?
            nnIdToLifelineAddr.get(nnId) : null);
      }
      try {
        UserGroupInformation.getLoginUser()
            .doAs(new PrivilegedExceptionAction<Object>() {
              @Override
              public Object run() throws Exception {
                bpos.refreshNNList(addrs, lifelineAddrs);
                return null;
              }
            });
      } // catch clause omitted in this excerpt
    }
  }
}
```

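With HDFS federation, a cluster can contain multiple nameservices, each with its own NameNodes. The method above refreshes this NameNode information, i.e. the set of nameservices. A nameservice that no longer exists is removed and the service offered to it is stopped; a newly added nameservice is added to the list and a service is started for it; a nameservice that still exists but whose NameNode list changed is refreshed.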
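Steps 1 and 2 boil down to set arithmetic over nameservice IDs. A minimal sketch, assuming the configured nameservices arrive as a map keyed by nameservice ID; the hypothetical NameserviceDiff class plays the role of the toAdd, toRefresh and toRemove locals.

```java
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch of steps 1 and 2 of doRefreshNamenodes. */
final class NameserviceDiff {
  final Set<String> toAdd = new LinkedHashSet<>();
  final Set<String> toRefresh = new LinkedHashSet<>();
  final Set<String> toRemove = new LinkedHashSet<>();

  /**
   * current: nameservices this DataNode already serves;
   * wanted:  nameservice ID -> NameNode addresses from the new configuration.
   */
  static NameserviceDiff compute(Set<String> current, Map<String, ?> wanted) {
    NameserviceDiff d = new NameserviceDiff();
    for (String ns : wanted.keySet()) {
      if (current.contains(ns)) {
        d.toRefresh.add(ns); // step 1: known NS, its NN list may have changed
      } else {
        d.toAdd.add(ns);     // step 1: brand-new NS
      }
    }
    for (String ns : current) {
      if (!wanted.containsKey(ns)) {
        d.toRemove.add(ns);  // step 2: no longer configured
      }
    }
    return d;
  }
}
```

For instance, if the DataNode currently serves ns1 and ns2 and the new configuration lists ns2 and ns3, then ns3 is added, ns2 is refreshed and ns1 is removed.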

Let us focus on step 3 above, Start new nameservices: for each newly added nameservice, a service is started that interacts with its NameNodes.

One BPOfferService is created per block pool, and one BPServiceActor is created for each of that block pool's NameNodes (Active or Standby). Each BPServiceActor is responsible for reporting to its own NameNode.

startAll then starts all the BPServiceActor threads.

The BPServiceActor class

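```java
public void run() {
  while (true) {
    // init stuff
    try {
      // setup storage
      connectToNNAndHandshake(); // register with the NameNode
      break;
    } // catch clause omitted in this excerpt
  }

  while (shouldRun()) {
    try {
      offerService();
    } // catch clause omitted in this excerpt
  }
}
```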

BPServiceActor.run has two phases: connectToNNAndHandshake registers the DataNode with the NameNode, and offerService then performs the routine heartbeats plus full and incremental block reports.
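The two-phase structure can be outlined as follows. NameNodeLink is a hypothetical interface, and the retry policy here (a fixed sleep, stop on interrupt) is an assumption: the real actor's offerService() loops internally and its failure handling is more involved, so treat this as a simplified sketch.

```java
import java.io.IOException;
import java.util.function.BooleanSupplier;

/** Hypothetical outline of BPServiceActor.run's two phases. */
final class ActorLoopSketch {
  /** Hypothetical stand-in for the connection to one NameNode. */
  interface NameNodeLink {
    void connectAndHandshake() throws IOException; // like connectToNNAndHandshake()
    void offerServiceOnce() throws IOException;    // one heartbeat / block-report round
  }

  /** Runs both phases; returns how many handshake attempts were made. */
  static int run(NameNodeLink link, BooleanSupplier shouldRun, long retrySleepMs) {
    int attempts = 0;
    // Phase 1: retry the handshake (connect, verify namespace, register) until it works.
    while (shouldRun.getAsBoolean()) {
      attempts++;
      try {
        link.connectAndHandshake();
        break;
      } catch (IOException e) {
        if (!sleepQuietly(retrySleepMs)) return attempts;
      }
    }
    // Phase 2: routine heartbeats and block reports until asked to stop.
    while (shouldRun.getAsBoolean()) {
      try {
        link.offerServiceOnce();
      } catch (IOException e) {
        if (!sleepQuietly(retrySleepMs)) return attempts;
      }
    }
    return attempts;
  }

  /** Sleeps; returns false (keeping the interrupt flag) if interrupted. */
  private static boolean sleepQuietly(long ms) {
    try {
      Thread.sleep(ms);
      return true;
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      return false;
    }
  }
}
```

With a NameNode that rejects the first two handshakes, run makes three attempts and then moves on to the heartbeat loop.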

```java
private void connectToNNAndHandshake() throws IOException {
  // get NN proxy
  bpNamenode = dn.connectToNN(nnAddr);
  // First phase of the handshake with NN - get the namespace
  // info.
  NamespaceInfo nsInfo = retrieveNamespaceInfo();
  // Verify that this matches the other NN in this HA pair.
  // This also initializes our block pool in the DN if we are
  // the first NN connection for this BP.
  bpos.verifyAndSetNamespaceInfo(nsInfo);
  // Second phase of the handshake with the NN.
  register(); // important
}
```

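This method connects to the NameNode and obtains a NameNode proxy, retrieves the namespace information, verifies it (initializing the block pool storage on the DataNode if this is the first NameNode connection for the block pool), and finally registers with the NameNode.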

```java
void register() throws IOException {
  while (shouldRun()) {
    try {
      // Use returned registration from namenode with updated fields
      bpRegistration = bpNamenode.registerDatanode(bpRegistration);
      break;
    } // catch clauses omitted in this excerpt
  }
  // random short delay - helps scatter the BR from all DNs
  scheduleBlockReport(dnConf.initialBlockReportDelay);
}
```

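register sends the DataNode's information to the NameNode, retrying while the actor is still running, and then schedules the time of the next full block report.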

The NameNode side

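On the NameNode side, registration mainly adds the DataNode to four data structures:

- host2DatanodeMap: maps an IP address to the DatanodeDescriptor(s) on that host, since one server may run several DataNodes; registerDatanode looks nodes up in it by IP address plus transfer port.

- datanodeMap: maps each DataNode UUID to its DatanodeDescriptor.

- NetworkTopology: stores all DataNodes in the cluster as a tree-shaped network topology, modeling a cluster that may consist of several data centers.

- heartbeatManager: manages heartbeats.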

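DataNode registration falls into four cases, depending on whether the registering node's ip:port and DataNode UUID match a node the NameNode already knows:

- Brand-new registration: a new DataNode joins the cluster on a new node (neither matches).

- On an existing node, the old DataNode has become invalid and a new DataNode is created (the ip:port matches a different node).

- The DataNode restarts (both match the same node).

- The DataNode moves to a new server or port (the UUID matches, the ip:port does not).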

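The idea is to first clear out the information of DataNodes that are no longer valid, then add the new DataNode information to the corresponding data structures.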
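The four cases can be told apart with just two lookups, mirroring nodeS (by UUID) and nodeN (by ip:xferPort) in registerDatanode. RegistrySketch is a hypothetical, heavily simplified model: it keeps only two maps and ignores NetworkTopology, heartbeats and software-version counting.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical, heavily simplified model of the NameNode-side registration cases. */
final class RegistrySketch {
  enum Case { NEW_NODE, REPLACED_AT_SAME_ADDR, RESTART, MOVED }

  /** Minimal stand-in for DatanodeDescriptor: storage UUID plus ip:xferPort. */
  static final class Node {
    final String uuid;
    String xferAddr;

    Node(String uuid, String xferAddr) {
      this.uuid = uuid;
      this.xferAddr = xferAddr;
    }
  }

  final Map<String, Node> byUuid = new HashMap<>();     // role of datanodeMap
  final Map<String, Node> byXferAddr = new HashMap<>(); // role of the host2DatanodeMap lookup

  Case register(String uuid, String xferAddr) {
    Node nodeS = byUuid.get(uuid);         // same storage UUID seen before?
    Node nodeN = byXferAddr.get(xferAddr); // same ip:xferPort seen before?

    Case c;
    if (nodeS == null) {
      c = (nodeN == null) ? Case.NEW_NODE : Case.REPLACED_AT_SAME_ADDR;
    } else {
      c = (nodeN == nodeS) ? Case.RESTART : Case.MOVED;
    }

    if (nodeN != null && nodeN != nodeS) {
      // A different node used to live at this address: wipe it from both maps.
      byUuid.remove(nodeN.uuid);
      byXferAddr.remove(nodeN.xferAddr);
    }

    if (nodeS != null) {
      // Known storage: keep the descriptor, just update its address.
      byXferAddr.remove(nodeS.xferAddr);
      nodeS.xferAddr = xferAddr;
      byXferAddr.put(xferAddr, nodeS);
    } else {
      // Unknown storage: create and add a fresh descriptor.
      Node fresh = new Node(uuid, xferAddr);
      byUuid.put(uuid, fresh);
      byXferAddr.put(xferAddr, fresh);
    }
    return c;
  }
}
```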

```java
public void registerDatanode(DatanodeRegistration nodeReg)
    throws DisallowedDatanodeException, UnresolvedTopologyException {
  // (surrounding try/catch and several validation checks omitted in this excerpt)
  DatanodeDescriptor nodeS = getDatanode(nodeReg.getDatanodeUuid());
  DatanodeDescriptor nodeN = host2DatanodeMap.getDatanodeByXferAddr(
      nodeReg.getIpAddr(), nodeReg.getXferPort());

  if (nodeN != null && nodeN != nodeS) {
    NameNode.LOG.info("BLOCK* registerDatanode: " + nodeN);
    // nodeN previously served a different data storage,
    // which is not served by anybody anymore.
    removeDatanode(nodeN);
    // physically remove node from datanodeMap
    wipeDatanode(nodeN);
    nodeN = null;
  }

  if (nodeS != null) {
    if (nodeN == nodeS) {
      // The same datanode has been just restarted to serve the same data
      // storage. We do not need to remove old data blocks, the delta will
      // be calculated on the next block report from the datanode
      if (NameNode.stateChangeLog.isDebugEnabled()) {
        NameNode.stateChangeLog.debug("BLOCK* registerDatanode: "
            + "node restarted.");
      }
    } else {
      // nodeS is found
      /* The registering datanode is a replacement node for the existing
        data storage, which from now on will be served by a new node.
        If this message repeats, both nodes might have same storageID
        by (insanely rare) random chance. User needs to restart one of the
        nodes with its data cleared (or user can just remove the StorageID
        value in "VERSION" file under the data directory of the datanode,
        but this is might not work if VERSION file format has changed
     */
      NameNode.stateChangeLog.info("BLOCK* registerDatanode: " + nodeS
          + " is replaced by " + nodeReg + " with the same storageID "
          + nodeReg.getDatanodeUuid());
    }

    boolean success = false;
    try {
      // update cluster map
      getNetworkTopology().remove(nodeS);
      if (shouldCountVersion(nodeS)) {
        decrementVersionCount(nodeS.getSoftwareVersion());
      }
      nodeS.updateRegInfo(nodeReg);
      nodeS.setSoftwareVersion(nodeReg.getSoftwareVersion());
      nodeS.setDisallowed(false); // Node is in the include list

      // resolve network location
      if (this.rejectUnresolvedTopologyDN) {
        nodeS.setNetworkLocation(resolveNetworkLocation(nodeS));
        nodeS.setDependentHostNames(getNetworkDependencies(nodeS));
      } else {
        nodeS.setNetworkLocation(
            resolveNetworkLocationWithFallBackToDefaultLocation(nodeS));
        nodeS.setDependentHostNames(
            getNetworkDependenciesWithDefault(nodeS));
      }
      getNetworkTopology().add(nodeS);
      resolveUpgradeDomain(nodeS);

      heartbeatManager.register(nodeS);
      incrementVersionCount(nodeS.getSoftwareVersion());
      startAdminOperationIfNecessary(nodeS);
      success = true;
    } finally {
      if (!success) {
        removeDatanode(nodeS);
        wipeDatanode(nodeS);
        countSoftwareVersions();
      }
    }
    return;
  }

  // No node with this UUID: register a brand-new descriptor
  DatanodeDescriptor nodeDescr
      = new DatanodeDescriptor(nodeReg, NetworkTopology.DEFAULT_RACK);
  boolean success = false;
  try {
    // resolve network location
    if (this.rejectUnresolvedTopologyDN) {
      nodeDescr.setNetworkLocation(resolveNetworkLocation(nodeDescr));
      nodeDescr.setDependentHostNames(getNetworkDependencies(nodeDescr));
    } else {
      nodeDescr.setNetworkLocation(
          resolveNetworkLocationWithFallBackToDefaultLocation(nodeDescr));
      nodeDescr.setDependentHostNames(
          getNetworkDependenciesWithDefault(nodeDescr));
    }
    networktopology.add(nodeDescr);
    nodeDescr.setSoftwareVersion(nodeReg.getSoftwareVersion());
    resolveUpgradeDomain(nodeDescr);

    // register new datanode
    addDatanode(nodeDescr);
    blockManager.getBlockReportLeaseManager().register(nodeDescr);
    // also treat the registration message as a heartbeat
    // no need to update its timestamp
    // because its is done when the descriptor is created
    heartbeatManager.addDatanode(nodeDescr);
    heartbeatManager.updateDnStat(nodeDescr);
    incrementVersionCount(nodeReg.getSoftwareVersion());
    startAdminOperationIfNecessary(nodeDescr);
    success = true;
  } finally {
    if (!success) {
      removeDatanode(nodeDescr);
      wipeDatanode(nodeDescr);
      countSoftwareVersions();
    }
  }
}
```

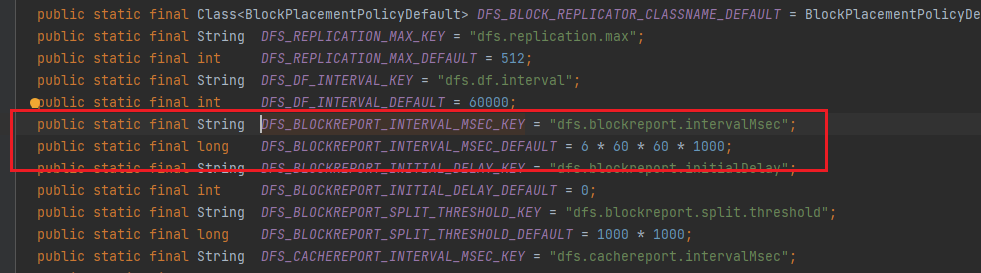

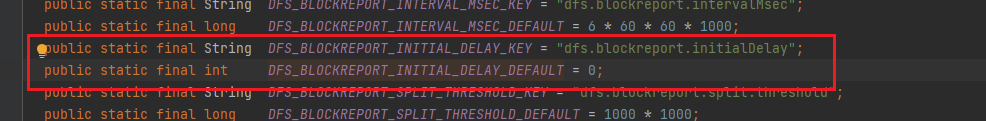

DataNode full block report timing

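After startup, the first two full block reports are sent at randomized times; after that, full block reports are sent on a fixed schedule.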

```java
void scheduleBlockReport(long delay) {
  if (delay > 0) {
    // send BR after random delay
    lastBlockReport = Time.now()
        - (dnConf.blockReportInterval - DFSUtil.getRandom().nextInt((int) (delay)));
  } else {
    // send at next heartbeat
    lastBlockReport = lastHeartbeat - dnConf.blockReportInterval;
  }
  resetBlockReportTime = true; // reset future BRs for randomness
}
```

The first full block report is scheduled from dfs.blockreport.initialDelay. If it is zero, the report is sent with the next heartbeat; otherwise it is sent at a random time between 0 and dfs.blockreport.initialDelay from now.
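The back-dating trick is easier to see as arithmetic: if a report becomes due once blockReportInterval has elapsed since lastBlockReport, then setting lastBlockReport = now - (interval - r) makes it due r milliseconds from now. A minimal sketch, assuming a report is due exactly when now - lastBlockReport >= interval and that all values are in milliseconds; FirstBlockReportSketch is hypothetical.

```java
import java.util.Random;

/** Hypothetical model of scheduleBlockReport(delay). */
final class FirstBlockReportSketch {
  /** Returns the absolute time (ms) at which the first full block report is due. */
  static long firstReportDue(long nowMs, long lastHeartbeatMs,
                             long intervalMs, long initialDelayMs, Random rnd) {
    long lastBlockReport;
    if (initialDelayMs > 0) {
      // Back-date so that lastBlockReport + interval = now + r, with r in [0, delay)
      lastBlockReport = nowMs - (intervalMs - rnd.nextInt((int) initialDelayMs));
    } else {
      // Already due when the next heartbeat checks it
      lastBlockReport = lastHeartbeatMs - intervalMs;
    }
    return lastBlockReport + intervalMs;
  }
}
```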

```java
private void scheduleNextBlockReport(long previousReportStartTime) {
  // If we have sent the first set of block reports, then wait a random
  // time before we start the periodic block reports.
  if (resetBlockReportTime) {
    // second report: randomized once more
    lastBlockReport = previousReportStartTime -
        DFSUtil.getRandom().nextInt((int) (dnConf.blockReportInterval));
    resetBlockReportTime = false;
  } else {
    // third, fourth, ... reports: keep the fixed schedule
    /* say the last block report was at 8:20:14. The current report
     * should have started around 9:20:14 (default 1 hour interval).
     * If current time is :
     *   1) normal like 9:20:18, next report should be at 10:20:14
     *   2) unexpected like 11:35:43, next report should be at 12:20:14
     */
    lastBlockReport += (now() - lastBlockReport) /
        dnConf.blockReportInterval * dnConf.blockReportInterval;
  }
}
```

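Because resetBlockReportTime is still true after the first scheduling, the second report is sent at a random time between 0 and dfs.blockreport.intervalMsec after the start of the previous report. After that, resetBlockReportTime is false, and reports are sent at fixed intervals of dfs.blockreport.intervalMsec (21600000 ms, i.e. 6 hours, by default), aligned to the existing schedule.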
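The same model extends to scheduleNextBlockReport. The sketch below (hypothetical NextBlockReportSketch, all times in milliseconds, same assumption that the next report is due at lastBlockReport + interval) randomizes the second report once and then snaps later reports onto the original grid with integer division; hms is only a helper for the worked example.

```java
import java.util.Random;

/** Hypothetical model of scheduleNextBlockReport. */
final class NextBlockReportSketch {
  long lastBlockReport;
  boolean resetBlockReportTime = true; // true right after scheduleBlockReport
  final long intervalMs;
  final Random rnd;

  NextBlockReportSketch(long intervalMs, Random rnd) {
    this.intervalMs = intervalMs;
    this.rnd = rnd;
  }

  void scheduleNext(long previousReportStartTime, long nowMs) {
    if (resetBlockReportTime) {
      // Second report: due at a random point in (prev, prev + interval]
      lastBlockReport = previousReportStartTime - rnd.nextInt((int) intervalMs);
      resetBlockReportTime = false;
    } else {
      // Later reports: advance by whole intervals only (integer division)
      lastBlockReport += (nowMs - lastBlockReport) / intervalMs * intervalMs;
    }
  }

  long nextDue() {
    return lastBlockReport + intervalMs;
  }

  /** Helper for the worked example: time of day in milliseconds. */
  static long hms(int h, int m, int s) {
    return ((h * 60L + m) * 60L + s) * 1000L;
  }
}
```

Replaying the source comment's scenario with a 1-hour interval: if the last report was anchored at 8:20:14 and scheduling runs at 9:20:18, the next report is due at 10:20:14; if it runs late at 11:35:43, the elapsed 3 h 15 min 29 s truncates to 3 whole intervals, so the anchor moves to 11:20:14 and the next report is due at 12:20:14.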