1. Problem Description

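Today I helped a friend with a MySQL issue: the database had to be migrated to a new server.

He asked me how to allocate the disks. I suggested SSD for the data, and HDD for the backup and log disks.

The cloud provider had already set up the SSDs as RAID 1, so only 1 TB of the 2 TB of SSD capacity is usable.

Surprisingly, the backup disk had also been set up for RAID, and that RAID configuration had not even completed successfully.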

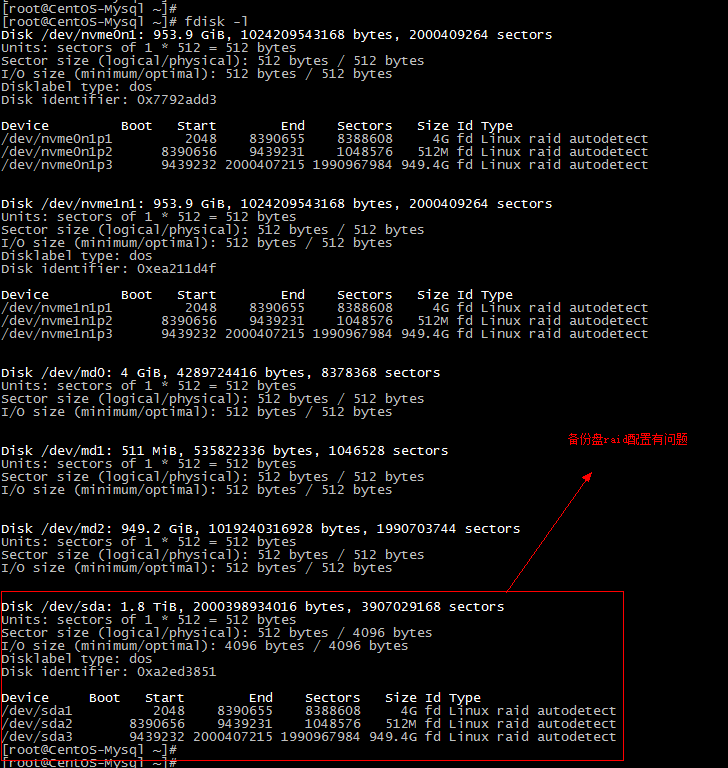

The output of `fdisk -l` is shown below:

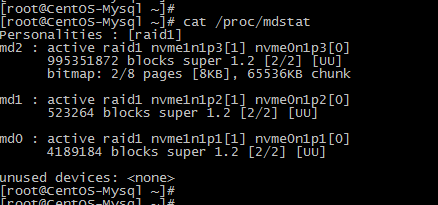

`cat /proc/mdstat` lists RAID arrays only for the data disks:

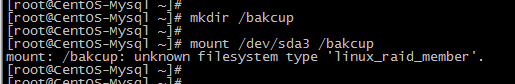
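Reading /proc/mdstat by eye works, but the same check can be scripted. Below is a minimal sketch, assuming a POSIX shell with awk; the function name `array_of` is hypothetical. It prints the md array(s) that list a given device as a member, and prints nothing when the device is not part of any assembled array, which is the situation /dev/sda3 is in here.

```shell
# array_of DEVICE [MDSTAT_FILE]
# Hypothetical helper: print the md array(s) that list DEVICE (e.g. "sda3")
# as a member. Prints nothing if DEVICE is not in any assembled array.
array_of() {
  dev=$1
  file=${2:-/proc/mdstat}
  awk -v dev="$dev" '
    # Array header lines look like: "md2 : active raid1 nvme1n1p3[1] nvme0n1p3[0]"
    /^md[0-9]+ :/ {
      for (i = 3; i <= NF; i++) {
        name = $i
        sub(/\[[0-9]+\].*$/, "", name)   # strip the "[1]" or "[2](S)" suffix
        if (name == dev) print $1
      }
    }' "$file"
}
```

For example, `array_of sda3` printing nothing confirms that the partition is not in use by any array.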

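Next I tried to mount /dev/sda3, which failed with `unknown filesystem type 'linux_raid_member'`. This means the partition carries an md RAID member signature instead of a filesystem, so `mount` cannot use it directly.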

2. Solution

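A Baidu search showed that this happens because the backup disk /dev/sda had been configured for RAID, but the configuration never succeeded. Because the disk is new and holds no data, the simplest fix is to turn the whole of /dev/sda into a single-disk RAID 0 array.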

2.1 Create a RAID 0 array

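Command:

```shell
mdadm -Cv /dev/md3 -a yes -n 1 -l 0 /dev/sda
```

mdadm rejects a one-device array unless the --force option is given, so the command that actually works is:

```shell
mdadm -Cv /dev/md3 -a yes -n 1 -l 0 /dev/sda --force
```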
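For readers unfamiliar with the short options: -C is --create, -v is --verbose, -a yes is --auto=yes, -n 1 is --raid-devices=1 and -l 0 is --level=0. The sketch below spells the same command out with long options and, by default, only prints it (a dry run), so it can be reviewed before anything touches the disk. The `run` wrapper, the DRY_RUN variable and `create_single_disk_raid0` are hypothetical conveniences, not part of mdadm. Following the hint in mdadm's own error message, --force is placed before --raid-devices.

```shell
# run CMD...: print the command when DRY_RUN=1 (the default here),
# execute it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# create_single_disk_raid0 MD_DEVICE DISK (hypothetical helper)
# WARNING: with DRY_RUN=0 this overwrites DISK; only use it on a disk with no data.
create_single_disk_raid0() {
  md=$1
  disk=$2
  run mdadm --create --verbose "$md" --auto=yes \
    --force --raid-devices=1 --level=0 "$disk"
}
```

`create_single_disk_raid0 /dev/md3 /dev/sda` only prints the command; `DRY_RUN=0 create_single_disk_raid0 /dev/md3 /dev/sda` would run it, and mdadm would still ask for confirmation before overwriting the partition table.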

Test log:

```
[root@CentOS-Mysql backup]# mdadm -Cv /dev/md3 -a yes -n 1 -l 0 /dev/sda
mdadm: '1' is an unusual number of drives for an array, so it is probably
a mistake. If you really mean it you will need to specify --force before
setting the number of drives.
[root@CentOS-Mysql backup]# mdadm -Cv /dev/md3 -a yes -n 2 -l 0 /dev/sda
mdadm: You haven't given enough devices (real or missing) to create this array
[root@CentOS-Mysql backup]# mdadm -Cv /dev/md3 -a yes -n 1 -l 0 /dev/sda --force
mdadm: chunk size defaults to 512K
mdadm: partition table exists on /dev/sda
mdadm: partition table exists on /dev/sda but will be lost or
meaningless after creating array
Continue creating array? y
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md3 started.
[root@CentOS-Mysql backup]#
```

Check the result with `fdisk -l`:

```
[root@CentOS-Mysql backup]# fdisk -l
Disk /dev/nvme0n1: 953.9 GiB, 1024209543168 bytes, 2000409264 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x7792add3

Device         Boot   Start        End    Sectors   Size Id Type
/dev/nvme0n1p1         2048    8390655    8388608     4G fd Linux raid autodetect
/dev/nvme0n1p2      8390656    9439231    1048576   512M fd Linux raid autodetect
/dev/nvme0n1p3      9439232 2000407215 1990967984 949.4G fd Linux raid autodetect

Disk /dev/nvme1n1: 953.9 GiB, 1024209543168 bytes, 2000409264 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0xea211d4f

Device         Boot   Start        End    Sectors   Size Id Type
/dev/nvme1n1p1         2048    8390655    8388608     4G fd Linux raid autodetect
/dev/nvme1n1p2      8390656    9439231    1048576   512M fd Linux raid autodetect
/dev/nvme1n1p3      9439232 2000407215 1990967984 949.4G fd Linux raid autodetect

Disk /dev/md0: 4 GiB, 4289724416 bytes, 8378368 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/md1: 511 MiB, 535822336 bytes, 1046528 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/md2: 949.2 GiB, 1019240316928 bytes, 1990703744 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: dos
Disk identifier: 0xa2ed3851

Device    Boot   Start        End    Sectors   Size Id Type
/dev/sda1         2048    8390655    8388608     4G fd Linux raid autodetect
/dev/sda2      8390656    9439231    1048576   512M fd Linux raid autodetect
/dev/sda3      9439232 2000407215 1990967984 949.4G fd Linux raid autodetect

Disk /dev/md3: 1.8 TiB, 2000263577600 bytes, 3906764800 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 524288 bytes / 524288 bytes
```

2.2 Format the array with ext4

Command:

```shell
mkfs.ext4 /dev/md3
```

Test log:

```
[root@CentOS-Mysql backup]# mkfs.ext4 /dev/md3
mke2fs 1.45.6 (20-Mar-2020)
Creating filesystem with 488345600 4k blocks and 122093568 inodes
Filesystem UUID: 81bc5088-f2b5-43d9-80a8-2325215755a6
Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
        4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,
        102400000, 214990848

Allocating group tables: done
Writing inode tables: done
Creating journal (262144 blocks): done
Writing superblocks and filesystem accounting information: done

[root@CentOS-Mysql backup]#
```
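The figures in the fdisk, mke2fs and /proc/mdstat output agree with each other, which is a quick sanity check that the new filesystem spans the whole array. The worked arithmetic below uses only numbers from the logs in this post; the 32768 blocks per group and 8192 inodes per group are assumptions about mke2fs's usual ext4 defaults for 4 KiB blocks (one inode per 16 KiB), not values printed here.

```shell
sectors=3906764800           # size of /dev/md3 from fdisk -l, in 512-byte sectors
bytes=$((sectors * 512))     # 2000263577600, the byte count fdisk reports
blocks=$((bytes / 4096))     # 488345600, the "4k blocks" figure from mke2fs
kib=$((blocks * 4))          # 1953382400, the 1 KiB "blocks" figure in /proc/mdstat

# Assumed mke2fs defaults: 32768 blocks per group, 8192 inodes per group
groups=$(( (blocks + 32767) / 32768 ))   # round up: the last group is partial
inodes=$((groups * 8192))                # 122093568, the inode count from mke2fs

echo "$bytes $blocks $kib $inodes"
```

If the block count reported by mke2fs were noticeably smaller than the array size, that would suggest the filesystem was created on the wrong device or with an explicit size.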

2.3 Mount and test

Commands:

```shell
mkdir -p /backup
mount /dev/md3 /backup
df -h
cd /backup
touch 1.txt
```

Test log:

```
[root@CentOS-Mysql backup]# mkdir -p /backup
[root@CentOS-Mysql backup]# mount /dev/md3 /backup
[root@CentOS-Mysql backup]# df -h
Filesystem     Size  Used Avail Use% Mounted on
devtmpfs        63G     0   63G   0% /dev
tmpfs           63G     0   63G   0% /dev/shm
tmpfs           63G  648K   63G   1% /run
tmpfs           63G     0   63G   0% /sys/fs/cgroup
/dev/md2       934G   57G  830G   7% /
/dev/md1       485M  138M  322M  30% /boot
tmpfs           13G     0   13G   0% /run/user/0
tmpfs           13G     0   13G   0% /run/user/1000
/dev/md3       1.8T   77M  1.7T   1% /backup
[root@CentOS-Mysql backup]# cd /backup
[root@CentOS-Mysql backup]# touch 1.txt
[root@CentOS-Mysql backup]# ll
total 24
drwxr-xr-x  3 root root  4096 Jan  6 08:37 ./
drwxr-xr-x 20 root root  4096 Jan  6 08:37 ../
-rw-r--r--  1 root root     0 Jan  6 08:37 1.txt
drwx------  2 root root 16384 Jan  6 07:57 lost+found/
[root@CentOS-Mysql backup]# echo "abc" >> 1.txt
[root@CentOS-Mysql backup]# more 1.txt
abc
[root@CentOS-Mysql backup]#
```
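The touch/echo/more sequence can be wrapped in a reusable check. A minimal sketch, with hypothetical function names: `write_probe` writes a marker file, reads it back and deletes it, and `check_backup_mount` first uses util-linux's `mountpoint -q` to confirm that the directory really is a mount point. That matters because if the mount had silently failed, writes to /backup would land on the root filesystem (/dev/md2) instead of the backup disk.

```shell
# write_probe DIR: write a marker file into DIR, read it back, then remove it.
# Returns 0 only if the content read back matches what was written.
write_probe() {
  dir=$1
  probe="$dir/.write_probe.$$"
  echo "abc" > "$probe" || return 1
  content=$(cat "$probe")
  rm -f "$probe"
  [ "$content" = "abc" ]
}

# check_backup_mount [DIR]: fail unless DIR (default /backup) is a mount point
# and is writable.
check_backup_mount() {
  dir=${1:-/backup}
  if ! mountpoint -q "$dir"; then
    echo "$dir is not a mount point" >&2
    return 1
  fi
  write_probe "$dir"
}
```

Running `check_backup_mount` before each backup job is one way to avoid quietly filling the root filesystem.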

2.4 Mount automatically at boot

Open /etc/fstab (for example with `vi /etc/fstab`) and add the following line:

```
/dev/md3 /backup ext4 defaults 0 0
```
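Referencing /dev/md3 by name works as long as the array keeps that name. If the array is not recorded in /etc/mdadm.conf, it may be assembled under a different name such as /dev/md127 after a reboot, and a name-based fstab entry would then fail. A common, more robust variant keys the entry on the filesystem UUID and is used in place of the /dev/md3 line. The sketch below, with a hypothetical `fstab_entry` helper, prints such a line; the UUID in the usage example is the one mkfs.ext4 reported earlier in this post. The commands in the comments are meant to be run by hand on the server, and /etc/mdadm.conf is the CentOS location of that file.

```shell
# fstab_entry UUID [MOUNTPOINT]: print an /etc/fstab line that mounts the ext4
# filesystem with the given UUID (hypothetical helper; same options as above).
fstab_entry() {
  uuid=$1
  mnt=${2:-/backup}
  printf 'UUID=%s %s ext4 defaults 0 0\n' "$uuid" "$mnt"
}

# Manual steps on the server (not executed by this snippet):
#   uuid=$(blkid -s UUID -o value /dev/md3)
#   fstab_entry "$uuid" >> /etc/fstab
#   mdadm --detail --brief /dev/md3 >> /etc/mdadm.conf   # pin the array name
#   mount -a && findmnt /backup                          # validate before rebooting
```

For example, `fstab_entry 81bc5088-f2b5-43d9-80a8-2325215755a6` prints `UUID=81bc5088-f2b5-43d9-80a8-2325215755a6 /backup ext4 defaults 0 0`.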

2.5 Check the RAID configuration

Command:

```shell
cat /proc/mdstat
```

Test log:

```
[root@CentOS-Mysql db_prod]# cat /proc/mdstat
Personalities : [raid1] [raid6] [raid5] [raid4] [raid0]
md3 : active raid0 sda[0]
      1953382400 blocks super 1.2 512k chunks

md2 : active raid1 nvme1n1p3[1] nvme0n1p3[0]
      995351872 blocks super 1.2 [2/2] [UU]
      bitmap: 5/8 pages [20KB], 65536KB chunk

md1 : active raid1 nvme1n1p2[1] nvme0n1p2[0]
      523264 blocks super 1.2 [2/2] [UU]

md0 : active raid1 nvme1n1p1[1] nvme0n1p1[0]
      4189184 blocks super 1.2 [2/2] [UU]

unused devices: <none>
[root@CentOS-Mysql db_prod]#
```
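In this output, `[2/2] [UU]` on md0, md1 and md2 means both mirror members are up; a failed or missing member shows up as an underscore, e.g. `[2/1] [U_]`. md3, being RAID 0, has no such status field (and no redundancy). A minimal sketch of a scripted check, with a hypothetical function name, that prints every array whose member status contains an underscore:

```shell
# degraded_arrays [MDSTAT_FILE]: print the name of each md array whose
# member-status field (e.g. "[U_]") shows a missing or failed device.
degraded_arrays() {
  file=${1:-/proc/mdstat}
  awk '
    /^md[0-9]+ :/ { name = $1; next }                 # remember the current array
    /\[[U_]*_[U_]*\]/ { if (name != "") print name }  # status with at least one "_"
  ' "$file"
}
```

An empty result, as with the output above, means every redundant array has all its members up.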

References:

- https://www.cnblogs.com/hxlinux/p/15139547.html