1. Build a custom audio dataset

UrbanSoundDataset.py

# Create a custom dataset for UrbanSound8K
import os

import pandas as pd
import torch
import torchaudio
from torch.utils.data import Dataset


class UrbanSoundDataset(Dataset):

    def __init__(self,
                 annotations_file,
                 audio_dir,
                 transformation,
                 target_sample_rate,
                 num_samples,
                 device):
        self.annotations = pd.read_csv(annotations_file)
        self.audio_dir = audio_dir
        self.device = device
        # Keep the transform on the same device as the signals it will process
        self.transformation = transformation.to(self.device)
        self.target_sample_rate = target_sample_rate
        self.num_samples = num_samples

    def __len__(self):
        return len(self.annotations)

    def __getitem__(self, index):
        audio_sample_path = self._get_audio_sample_path(index)
        label = self._get_audio_sample_label(index)
        signal, sr = torchaudio.load(audio_sample_path)
        # The audio may have several channels.
        # signal -> (num_channels, samples), e.g. (2, 16000) -> (1, 16000) after mixing down
        signal = self._resample_if_necessary(signal, sr)
        signal = signal.to(self.device)
        signal = self._mix_down_if_necessary(signal)
        signal = self._cut_if_necessary(signal)
        signal = self._right_pad_if_necessary(signal)
        signal = self.transformation(signal)
        return signal, label

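    def _cut_if_necessary(self, signal):
        if signal.shape[1] > self.num_samples:
            # Keep only the first num_samples samples
            # (the first second when num_samples equals the sample rate)
            signal = signal[:, :self.num_samples]
        return signal

    def _right_pad_if_necessary(self, signal):
        length_signal = signal.shape[1]
        if length_signal < self.num_samples:
            num_missing_samples = self.num_samples - length_signal
            # The two numbers are the zeros added on the left and right of the last dimension
            last_dim_padding = (0, num_missing_samples)
            # In general, e.g. (1, 1, 2, 2): the first pair pads the last dimension
            # (left, right) and the second pair pads the second-to-last dimension
            signal = torch.nn.functional.pad(signal, last_dim_padding)
        return signal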

    def _resample_if_necessary(self, signal, sr):
        if sr != self.target_sample_rate:
            resampler = torchaudio.transforms.Resample(sr, self.target_sample_rate)
            signal = resampler(signal)
        return signal

    def _mix_down_if_necessary(self, signal):
        # Merge multiple channels into a single channel
        if signal.shape[0] > 1:  # e.g. (2, 16000)
            # Average over the channel dimension and keep it, giving (1, 16000)
            signal = torch.mean(signal, dim=0, keepdim=True)
        return signal

    def _get_audio_sample_path(self, index):
        # Build the path of the audio file: <audio_dir>/fold<N>/<file name>
        fold = f"fold{self.annotations.iloc[index, 5]}"
        path = os.path.join(self.audio_dir, fold, self.annotations.iloc[index, 0])
        return path

    def _get_audio_sample_label(self, index):
        return self.annotations.iloc[index, 6]

if __name__ == "__main__":
    ANNOTATIONS_FILE = "UrbanSound8K/metadata/UrbanSound8K.csv"
    AUDIO_DIR = "audio"
    SAMPLE_RATE = 16000  # target sample rate in Hz (train.py uses 22050)
    NUM_SAMPLES = 22050  # number of samples kept per clip

    if torch.cuda.is_available():
        device = "cuda"
    else:
        device = "cpu"
    print(f"Using device {device}")

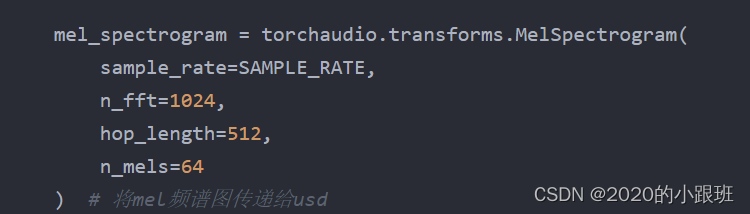

    mel_spectrogram = torchaudio.transforms.MelSpectrogram(
        sample_rate=SAMPLE_RATE,
        n_fft=1024,
        hop_length=512,
        n_mels=64
    )  # the mel spectrogram transform is passed to the dataset
    # ms = mel_spectrogram(signal)

    usd = UrbanSoundDataset(ANNOTATIONS_FILE,
                            AUDIO_DIR,
                            mel_spectrogram,
                            SAMPLE_RATE,
                            NUM_SAMPLES,
                            device)
    print(f"There are {len(usd)} samples in the dataset.")
    signal, label = usd[0]

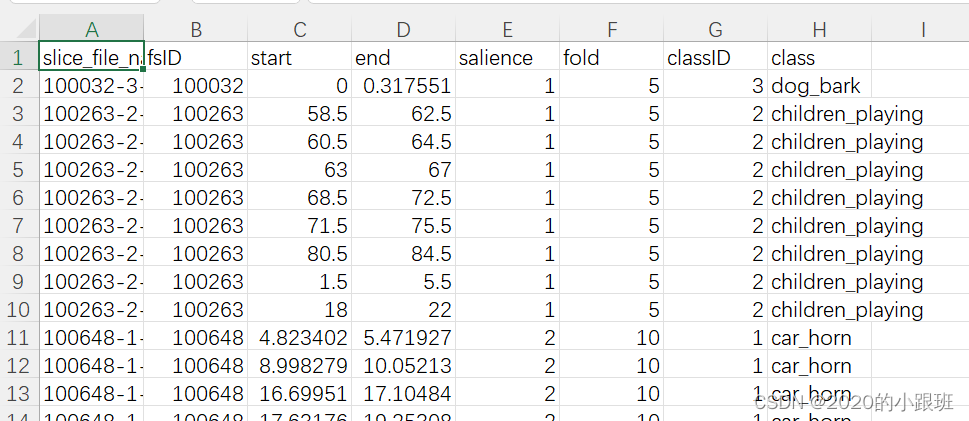

The UrbanSound8K.csv file
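The positional lookups in `_get_audio_sample_path` and `_get_audio_sample_label` (`iloc[index, 5]`, `iloc[index, 0]`, `iloc[index, 6]`) depend on the column order of the metadata file. As a minimal sketch, assuming the standard UrbanSound8K header (`slice_file_name, fsID, start, end, salience, fold, classID, class`), the same lookup can be written with column names. The data row below is only an illustrative sample, and `sample_path_and_label` is a hypothetical helper, not part of the original code.

```python
import csv
import io
import os

# Header as in the standard UrbanSound8K metadata file (assumed);
# the single data row is an illustrative sample.
CSV_TEXT = """slice_file_name,fsID,start,end,salience,fold,classID,class
100032-3-0-0.wav,100032,0.0,0.317551,1,5,3,dog_bark
"""


def sample_path_and_label(row, audio_dir):
    """Build the audio path and integer label for one metadata row."""
    fold = f"fold{row['fold']}"  # column 5, e.g. "fold5"
    path = os.path.join(audio_dir, fold, row["slice_file_name"])  # column 0
    label = int(row["classID"])  # column 6
    return path, label


rows = list(csv.DictReader(io.StringIO(CSV_TEXT)))
path, label = sample_path_and_label(rows[0], "audio")
print(path, label)
```

Named columns make the intent easier to read than positional indices, at the cost of assuming the header never changes.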

2. Build the neural network model

cnn.py

from torch import nn
from torchsummary import summary


# VGG-style classifier
class CNNNetwork(nn.Module):

    def __init__(self):
        super().__init__()
        # 4 conv blocks / flatten / linear / softmax
        self.conv1 = nn.Sequential(
            nn.Conv2d(
                in_channels=1,
                out_channels=16,
                kernel_size=3,
                stride=1,
                padding=2
            ),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2)
        )

        self.conv2 = nn.Sequential(
            nn.Conv2d(
                in_channels=16,
                out_channels=32,
                kernel_size=3,
                stride=1,
                padding=2
            ),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2)
        )
        self.conv3 = nn.Sequential(
            nn.Conv2d(
                in_channels=32,
                out_channels=64,
                kernel_size=3,
                stride=1,
                padding=2
            ),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2)
        )
        self.conv4 = nn.Sequential(
            nn.Conv2d(
                in_channels=64,
                out_channels=128,
                kernel_size=3,
                stride=1,
                padding=2
            ),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2)
        )

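        self.flatten = nn.Flatten()
        # nn.Flatten() is used inside networks whose input is a batch of data,
        # with the batch as the first dimension. Each sample should be flattened
        # to one dimension, not the whole batch, so nn.Flatten() flattens from
        # the second dimension onward by default.
        # 128: output channels of the last conv layer; 5 x 4: its feature-map size after pooling
        self.linear = nn.Linear(128 * 5 * 4, 10)
        self.softmax = nn.Softmax(dim=1)  # normalise the output of the linear layer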

    def forward(self, input_data):
        x = self.conv1(input_data)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.flatten(x)
        logits = self.linear(x)
        prediction = self.softmax(logits)
        return prediction

if __name__ == "__main__":
    cnn = CNNNetwork()
    summary(cnn.cuda(), (1, 64, 44))
    # 1: number of input channels, 64: frequency axis, 44: time axis

Let's look at what the network layers actually look like:

----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [-1, 16, 66, 46]             160
              ReLU-2           [-1, 16, 66, 46]               0
         MaxPool2d-3           [-1, 16, 33, 23]               0
            Conv2d-4           [-1, 32, 35, 25]           4,640
              ReLU-5           [-1, 32, 35, 25]               0
         MaxPool2d-6           [-1, 32, 17, 12]               0
            Conv2d-7           [-1, 64, 19, 14]          18,496
              ReLU-8           [-1, 64, 19, 14]               0
         MaxPool2d-9             [-1, 64, 9, 7]               0
           Conv2d-10           [-1, 128, 11, 9]          73,856
             ReLU-11           [-1, 128, 11, 9]               0
        MaxPool2d-12            [-1, 128, 5, 4]               0
          Flatten-13                 [-1, 2560]               0
           Linear-14                   [-1, 10]          25,610
          Softmax-15                   [-1, 10]               0
================================================================
Total params: 122,762
Trainable params: 122,762
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.01
Forward/backward pass size (MB): 1.83
Params size (MB): 0.47
Estimated Total Size (MB): 2.31

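----------------------------------------------------------------

As the code shows, the network input has shape (1, 64, 44). Let's work out where that shape comes from.

The network input is the mel spectrogram computed from the audio signal, so let's first look at the mel spectrogram transform.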

torchaudio.transforms.MelSpectrogram(sample_rate: int = 16000,
    n_fft: int = 400,
    win_length: Optional[int] = None,
    hop_length: Optional[int] = None,
    f_min: float = 0.0,
    f_max: Optional[float] = None,
    pad: int = 0,
    n_mels: int = 128,
    window_fn: Callable[[...], torch.Tensor] = <built-in method hann_window of type object>,
    power: float = 2.0,
    normalized: bool = False,
    wkwargs: Optional[dict] = None,
    center: bool = True,
    pad_mode: str = 'reflect',
    onesided: bool = True,
    norm: Optional[str] = None,
    mel_scale: str = 'htk')

Parameters:

- sample_rate (int, optional) – Sample rate of the audio signal. (Default: 16000)
- n_fft (int, optional) – Size of FFT, creates n_fft // 2 + 1 bins. (Default: 400)
- win_length (int or None, optional) – Window size. (Default: n_fft)
- hop_length (int or None, optional) – Length of hop between STFT windows. (Default: win_length // 2)
- f_min (float, optional) – Minimum frequency. (Default: 0.)
- f_max (float or None, optional) – Maximum frequency. (Default: None)
- pad (int, optional) – Two sided padding of signal. (Default: 0)
- n_mels (int, optional) – Number of mel filterbanks. (Default: 128)
- window_fn (Callable[.., Tensor], optional) – A function to create a window tensor that is applied/multiplied to each frame/window. (Default: torch.hann_window)
- power (float, optional) – Exponent for the magnitude spectrogram, (must be > 0) e.g., 1 for energy, 2 for power, etc. (Default: 2)
- normalized (bool, optional) – Whether to normalize by magnitude after stft. (Default: False)
- wkwargs (Dict[.., ..] or None, optional) – Arguments for window function. (Default: None)
- center (bool, optional) – whether to pad waveform on both sides so that the t-th frame is centered at time t × hop_length. (Default: True)
- pad_mode (string, optional) – controls the padding method used when center is True. (Default: "reflect")
- onesided (bool, optional) – controls whether to return half of results to avoid redundancy. (Default: True)
- norm (str or None, optional) – If 'slaney', divide the triangular mel weights by the width of the mel band (area normalization). (Default: None)
- mel_scale (str, optional) – Scale to use: htk or slaney. (Default: htk)

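Returns:

A tensor of shape (channel, n_mels, time)

1 -> the number of input channels, which is clearly 1 here because the signal has been mixed down to a single channel

64 -> the number of mel filterbanks (n_mels)

44 -> the time axis (number of frames); where this value comes from is not clear yet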
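The 44 on the time axis can be traced back to the transform settings. As a sketch, assuming `center=True` (the default listed in the parameters above), the STFT pads the signal by `n_fft // 2` on each side, which works out to `1 + num_samples // hop_length` frames. `num_frames` below is a hypothetical helper that reproduces this arithmetic without needing torchaudio.

```python
def num_frames(num_samples, n_fft, hop_length, center=True):
    """Number of STFT frames for a signal of num_samples samples."""
    if center:
        # The signal is padded by n_fft // 2 on each side before framing.
        num_samples += 2 * (n_fft // 2)
    return 1 + (num_samples - n_fft) // hop_length


NUM_SAMPLES = 22050  # samples kept per clip by the dataset
N_FFT = 1024
HOP_LENGTH = 512
N_MELS = 64

frames = num_frames(NUM_SAMPLES, N_FFT, HOP_LENGTH)
input_shape = (1, N_MELS, frames)  # (channel, n_mels, time)
print(input_shape)
```

With these settings the result depends only on `NUM_SAMPLES` and `HOP_LENGTH`, so changing the sample rate alone would not change the network input shape.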

Now go back to the layer summary shown earlier. Each layer's output shape is four-dimensional. Taking Conv2d-1 as an example:

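-1 -> the batch size, which has not been set yet

16 -> the number of output channels

66×46 -> the height and width of the output feature map. The input is 64×44; with a 3×3 kernel, stride 1, and padding 2, the first convolution grows each side by 2.

Input size: W×W

Kernel size: F×F

Stride: S

Padding in pixels: P

The output size is then given by:

N = ⌊(W − F + 2P) / S⌋ + 1

Applying this formula layer by layer gives every output size in the summary.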
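The formula can be checked against the summary with a few lines of plain Python. This is a sketch under the settings used in cnn.py (3×3 kernels, stride 1, padding 2, and 2×2 max pooling whose stride defaults to the kernel size); `conv_out` and `pool_out` are hypothetical helper names introduced only for this check.

```python
def conv_out(size, kernel=3, stride=1, padding=2):
    """Output length along one axis: N = floor((W - F + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1


def pool_out(size, kernel=2):
    """nn.MaxPool2d(kernel_size=2): stride equals the kernel size, no padding."""
    return (size - kernel) // kernel + 1


height, width = 64, 44  # (n_mels, time) of the network input
channels_in = 1
total_params = 0
for channels_out in (16, 32, 64, 128):
    height, width = conv_out(height), conv_out(width)
    conv_shape = (channels_out, height, width)
    height, width = pool_out(height), pool_out(width)
    # Each output channel has one 3x3 kernel per input channel plus a bias
    total_params += channels_out * (channels_in * 3 * 3 + 1)
    print(conv_shape, "->", (channels_out, height, width))
    channels_in = channels_out

flat_features = channels_in * height * width
total_params += flat_features * 10 + 10  # linear layer: weights plus biases
print(flat_features, total_params)
```

The final feature map is 5×4 with 128 channels, which is where the `128*5*4` input size of the linear layer comes from.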

3. Train the neural network

train.py

import torch
import torchaudio
from torch import nn
from torch.utils.data import DataLoader

from UrbanSoundDataset import UrbanSoundDataset
from cnn import CNNNetwork

BATCH_SIZE = 128
Epochs = 10
LEARNING_RATE = 0.001

ANNOTATIONS_FILE = "UrbanSound8K/metadata/UrbanSound8K.csv"
AUDIO_DIR = "audio"
SAMPLE_RATE = 22050  # target sample rate in Hz
NUM_SAMPLES = 22050  # number of samples kept per clip (1 second at this rate)


def create_data_loader(train_data, batch_size):
    train_dataloader = DataLoader(train_data, batch_size=batch_size)
    return train_dataloader

def train_single_epoch(model, data_loader, loss_fn, optimiser, device):
    for input, targets in data_loader:
        input, targets = input.to(device), targets.to(device)

        # Calculate the loss for this batch from the model's current predictions
        predictions = model(input)
        loss = loss_fn(predictions, targets)

        # Backpropagate the loss and update the weights
        optimiser.zero_grad()  # reset the gradients to zero for every batch
        loss.backward()  # backpropagation
        optimiser.step()

    print(f"Loss: {loss.item()}")  # loss of the last batch in the epoch


def train(model, data_loader, loss_fn, optimiser, device, epochs):
    for i in range(epochs):
        print(f"Epoch {i+1}")
        train_single_epoch(model, data_loader, loss_fn, optimiser, device)
        print("---------------------")
    print("Training is done.")

if __name__ == "__main__":
    if torch.cuda.is_available():
        device = "cuda"
    else:
        device = "cpu"
    print(f"Using {device} device")

    # Instantiate the dataset object and create the data loader
    mel_spectrogram = torchaudio.transforms.MelSpectrogram(
        sample_rate=SAMPLE_RATE,
        n_fft=1024,
        hop_length=512,
        n_mels=64
    )  # the mel spectrogram transform is passed to the dataset
    # ms = mel_spectrogram(signal)

    usd = UrbanSoundDataset(ANNOTATIONS_FILE,
                            AUDIO_DIR,
                            mel_spectrogram,
                            SAMPLE_RATE,
                            NUM_SAMPLES,
                            device)
    train_dataloader = create_data_loader(usd, BATCH_SIZE)

    # Build the model
    cnn = CNNNetwork().to(device)
    print(cnn)

    # Instantiate the loss function and the optimiser
    loss_fn = nn.CrossEntropyLoss()
    optimiser = torch.optim.Adam(cnn.parameters(),
                                 lr=LEARNING_RATE)

    # Train the model
    train(cnn, train_dataloader, loss_fn, optimiser, device, Epochs)

    torch.save(cnn.state_dict(), "feedforwardnet.pth")
    print("Model trained and stored at feedforwardnet.pth")

4. Run inference with the trained model

inference.py

import torch
import torchaudio

from cnn import CNNNetwork
from UrbanSoundDataset import UrbanSoundDataset
from train import ANNOTATIONS_FILE, AUDIO_DIR, NUM_SAMPLES, SAMPLE_RATE

class_mapping = [
    "air_conditioner",
    "car_horn",
    "children_playing",
    "dog_bark",
    "drilling",
    "engine_idling",
    "gun_shot",
    "jackhammer",
    "siren",
    "street_music"
]


def predict(model, input, target, class_mapping):
    model.eval()
    with torch.no_grad():
        predictions = model(input)
        # Tensor (1, 10) -> [[0.1, 0.01, ..., 0.6]]; the class with the highest probability is chosen
        predicted_index = predictions[0].argmax(0)
        predicted = class_mapping[predicted_index]
        expected = class_mapping[target]
    return predicted, expected

if __name__ == "__main__":
    # Load the trained model; map_location lets weights saved on a GPU load on the CPU
    cnn = CNNNetwork()
    state_dict = torch.load("feedforwardnet.pth", map_location="cpu")
    cnn.load_state_dict(state_dict)

    # Load the UrbanSound8K dataset
    mel_spectrogram = torchaudio.transforms.MelSpectrogram(
        sample_rate=SAMPLE_RATE,
        n_fft=1024,
        hop_length=512,
        n_mels=64
    )  # the mel spectrogram transform is passed to the dataset
    # ms = mel_spectrogram(signal)

    usd = UrbanSoundDataset(ANNOTATIONS_FILE,
                            AUDIO_DIR,
                            mel_spectrogram,
                            SAMPLE_RATE,
                            NUM_SAMPLES,
                            "cpu")

    # Get one sample from the dataset for inference
    input, target = usd[0]  # input: [num_channels, fr, time]
    input.unsqueeze_(0)  # -> [batch size, num_channels, fr, time]

    # Make an inference
    predicted, expected = predict(cnn, input, target, class_mapping)
    print(f"Predicted:'{predicted}', expected:'{expected}'")
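One detail in this pipeline is worth double-checking. `CNNNetwork.forward` returns softmax probabilities, and train.py passes them to `nn.CrossEntropyLoss`, which (per the PyTorch documentation) expects unnormalized logits and applies log-softmax itself. The sketch below re-implements that loss for a single sample in plain Python to show the effect; `cross_entropy` is a hypothetical helper written only for illustration. With 10 classes, even a perfect one-hot probability vector leaves the loss near 1.46, while raw logits can drive it toward 0.

```python
import math


def cross_entropy(scores, target):
    """Cross-entropy of one sample: log-softmax over scores, then negative log-likelihood."""
    log_sum_exp = math.log(sum(math.exp(s) for s in scores))
    return log_sum_exp - scores[target]


target = 3

# A perfect prediction expressed as softmax probabilities
probabilities = [0.0] * 10
probabilities[target] = 1.0

# A confident prediction expressed as raw logits
logits = [0.0] * 10
logits[target] = 10.0

loss_from_probabilities = cross_entropy(probabilities, target)
loss_from_logits = cross_entropy(logits, target)
print(round(loss_from_probabilities, 3), round(loss_from_logits, 5))
```

If this turns out to limit training, one common arrangement is to return `logits` from `forward` during training and apply softmax only at inference time; `argmax` selects the same class either way.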