https://dblab.xmu.edu.cn/blog/2307/

实验部分

爬虫程序

首先在工程文件夹下创建名叫rentspider的Python文件。

# -*- coding: utf-8 -*-

import requests

from bs4 import BeautifulSoup

import csv

# num表示记录序号

Url_head = "http://fangzi.xmfish.com/web/search_hire.html?h=&hf=&ca=5920"

Url_tail = "&r=&s=&a=&rm=&f=&d=&tp=&l=0&tg=&hw=&o=&ot=0&tst=0&page="

Num = 0

Filename = "rent.csv"

# 把每一页的记录写入文件中

def write_csv(msg_list):

out = open(Filename, 'a', newline='')

csv_write = csv.writer(out,dialect='excel')

for msg in msg_list:

csv_write.writerow(msg)

out.close()

# 访问每一页

def acc_page_msg(page_url):

web_data = requests.get(page_url).content.decode('utf8')

soup = BeautifulSoup(web_data, 'html.parser')

address_list = []

area_list = []

num_address = 0

num_area = 0

msg_list = []

# 得到了地址列表,以及区域列表

for tag in soup.find_all(attrs="list-addr"):

for em in tag:

count = 0

for a in em:

count += 1

if count == 1 and a.string != "[":

address_list.append(a.string)

elif count == 2:

area_list.append(a.string)

num_area += 1

elif count == 4:

if a.string is not None:

address_list[num_address] = address_list[num_address] + "-" + a.string

else:

address_list[num_address] = address_list[num_address] + "-Null"

num_address += 1

# 得到了价格列表

price_list = []

for tag in soup.find_all(attrs="list-price"):

price_list.append(tag.b.string)

# 组合成为一个新的tuple——list并加上序号

for i in range(len(price_list)):

txt = (address_list[i], area_list[i], price_list[i])

msg_list.append(txt)

# 写入csv

write_csv(msg_list)

# 爬所有的页面

def get_pages_urls():

urls = []

# 思明可访问页数134

for i in range(134):

urls.append(Url_head + "1" + Url_tail + str(i+1))

# 湖里可访问页数134

for i in range(134):

urls.append(Url_head + "2" + Url_tail + str(i+1))

# 集美可访问页数27

for i in range(27):

urls.append(Url_head + "3" + Url_tail + str(i+1))

# 同安可访问页数41

for i in range(41):

urls.append(Url_head + "4" + Url_tail + str(i+1))

# 翔安可访问页数76

for i in range(76):

urls.append(Url_head + "5" + Url_tail + str(i+1))

# 海沧可访问页数6

for i in range(6):

urls.append(Url_head + "6" + Url_tail + str(i+1))

return urls

def run():

print("开始爬虫")

out = open(Filename, 'a', newline='')

csv_write = csv.writer(out, dialect='excel')

title = ("address", "area", "price")

csv_write.writerow(title)

out.close()

url_list = get_pages_urls()

for url in url_list:

try:

acc_page_msg(url)

except:

print("格式出错", url)

print("结束爬虫")

分析程序

建立一个名为rent_analyse的Python文件:

# -*- coding: utf-8 -*-

from pyspark.sql import SparkSession

from pyspark.sql.types import IntegerType

def spark_analyse(filename):

print("开始spark分析")

# 程序主入口

spark = SparkSession.builder.master("local").appName("rent_analyse").getOrCreate()

df = spark.read.csv(filename, header=True)

# max_list存储各个区的最大值,0海沧,1为湖里,2为集美,3为思明,4为翔安,5为同安;同理的mean_list, 以及min_list,approxQuantile中位数

max_list = [0 for i in range(6)]

mean_list = [1.2 for i in range(6)]

min_list = [0 for i in range(6)]

mid_list = [0 for i in range(6)]

# 类型转换,十分重要,保证了price列作为int用来比较,否则会用str比较, 同时排除掉一些奇怪的价格,比如写字楼的出租超级贵

# 或者有人故意标签1元,其实要面议, 还有排除价格标记为面议的

df = df.filter(df.price != '面议').withColumn("price", df.price.cast(IntegerType()))

df = df.filter(df.price >= 50).filter(df.price <= 40000)

mean_list[0] = df.filter(df.area == "海沧").agg({

"price": "mean"}).first()['avg(price)']

mean_list[1] = df.filter(df.area == "湖里").agg({

"price": "mean"}).first()['avg(price)']

mean_list[2] = df.filter(df.area == "集美").agg({

"price": "mean"}).first()['avg(price)']

mean_list[3] = df.filter(df.area == "思明").agg({

"price": "mean"}).first()['avg(price)']

mean_list[4] = df.filter(df.area == "翔安").agg({

"price": "mean"}).first()['avg(price)']

mean_list[5] = df.filter(df.area == "同安").agg({

"price": "mean"}).first()['avg(price)']

min_list[0] = df.filter(df.area == "海沧").agg({

"price": "min"}).first()['min(price)']

min_list[1] = df.filter(df.area == "湖里").agg({

"price": "min"}).first()['min(price)']

min_list[2] = df.filter(df.area == "集美").agg({

"price": "min"}).first()['min(price)']

min_list[3] = df.filter(df.area == "思明").agg({

"price": "min"}).first()['min(price)']

min_list[4] = df.filter(df.area == "翔安").agg({

"price": "min"}).first()['min(price)']

min_list[5] = df.filter(df.area == "同安").agg({

"price": "min"}).first()['min(price)']

max_list[0] = df.filter(df.area == "海沧").agg({

"price": "max"}).first()['max(price)']

max_list[1] = df.filter(df.area == "湖里").agg({

"price": "max"}).first()['max(price)']

max_list[2] = df.filter(df.area == "集美").agg({

"price": "max"}).first()['max(price)']

max_list[3] = df.filter(df.area == "思明").agg({

"price": "max"}).first()['max(price)']

max_list[4] = df.filter(df.area == "翔安").agg({

"price": "max"}).first()['max(price)']

max_list[5] = df.filter(df.area == "同安").agg({

"price": "max"}).first()['max(price)']

# 返回值是一个list,所以在最后加一个[0]

mid_list[0] = df.filter(df.area == "海沧").approxQuantile("price", [0.5], 0.01)[0]

mid_list[1] = df.filter(df.area == "湖里").approxQuantile("price", [0.5], 0.01)[0]

mid_list[2] = df.filter(df.area == "集美").approxQuantile("price", [0.5], 0.01)[0]

mid_list[3] = df.filter(df.area == "思明").approxQuantile("price", [0.5], 0.01)[0]

mid_list[4] = df.filter(df.area == "翔安").approxQuantile("price", [0.5], 0.01)[0]

mid_list[5] = df.filter(df.area == "同安").approxQuantile("price", [0.5], 0.01)[0]

all_list = []

all_list.append(min_list)

all_list.append(max_list)

all_list.append(mean_list)

all_list.append(mid_list)

print("结束spark分析")

return all_list

绘图程序

建立一个名为 draw的python文件

# -*- coding: utf-8 -*-

from pyecharts import Bar

def draw_bar(all_list):

print("开始绘图")

attr = ["海沧", "湖里", "集美", "思明", "翔安", "同安"]

v0 = all_list[0]

v1 = all_list[1]

v2 = all_list[2]

v3 = all_list[3]

bar = Bar("厦门市租房租金概况")

bar.add("最小值", attr, v0, is_stack=True)

bar.add("最大值", attr, v1, is_stack=True)

bar.add("平均值", attr, v2, is_stack=True)

bar.add("中位数", attr, v3, is_stack=True)

bar.render()

print("结束绘图")

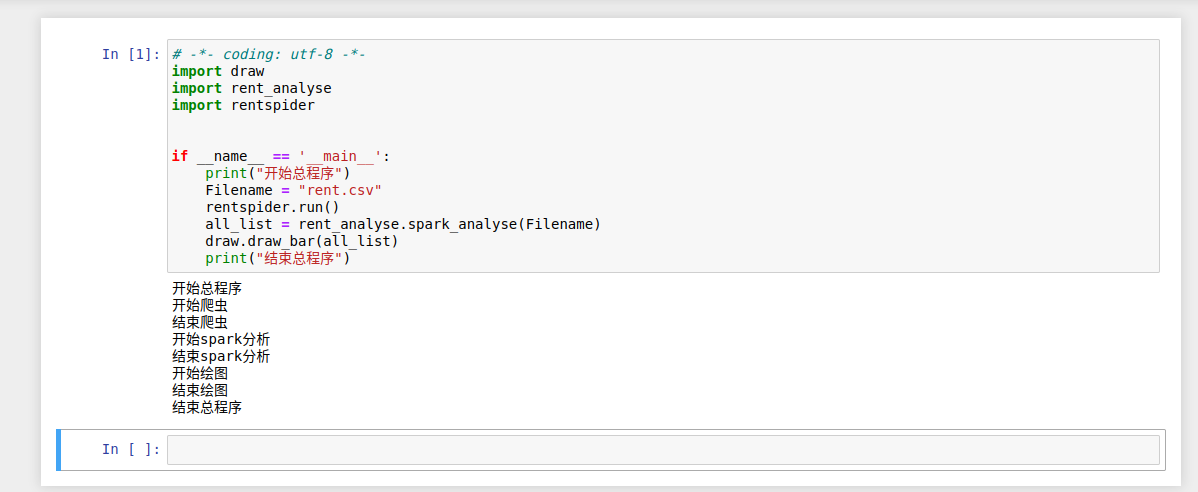

启动程序

建立一个名为run的python文件

# -*- coding: utf-8 -*-

import draw

import rent_analyse

import rentspider

if __name__ == '__main__':

print("开始总程序")

Filename = "rent.csv"

rentspider.run()

all_list = rent_analyse.spark_analyse(Filename)

draw.draw_bar(all_list)

print("结束总程序")

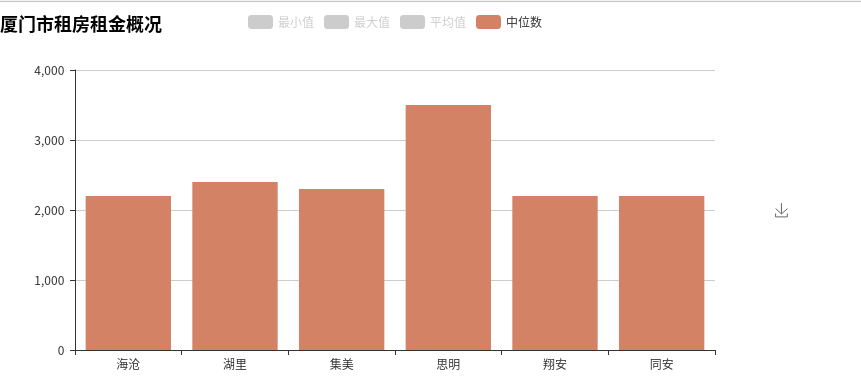

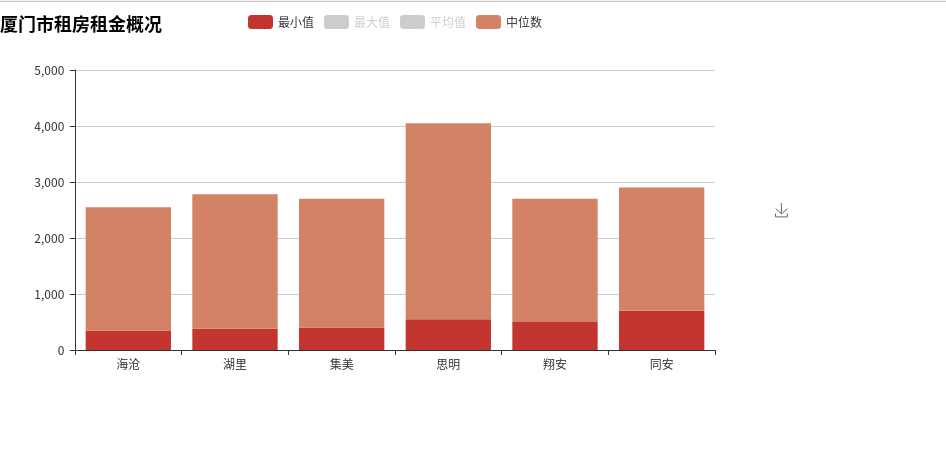

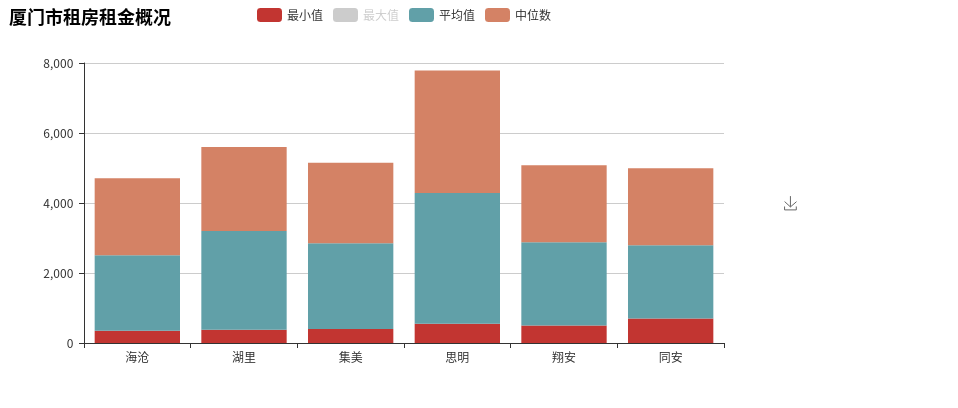

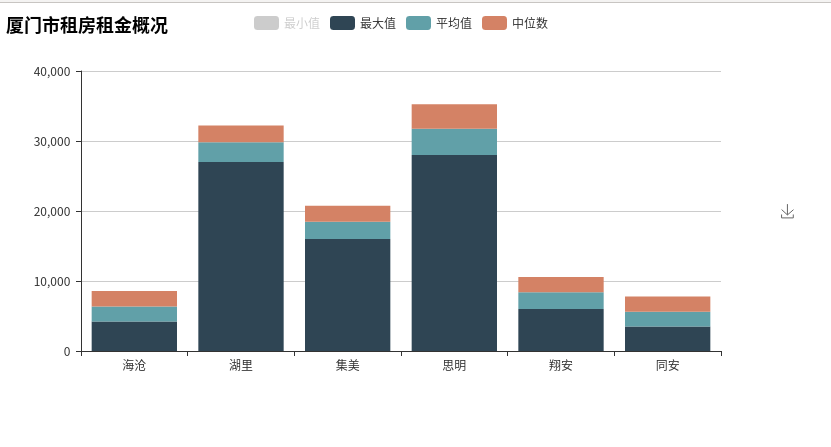

实验结果

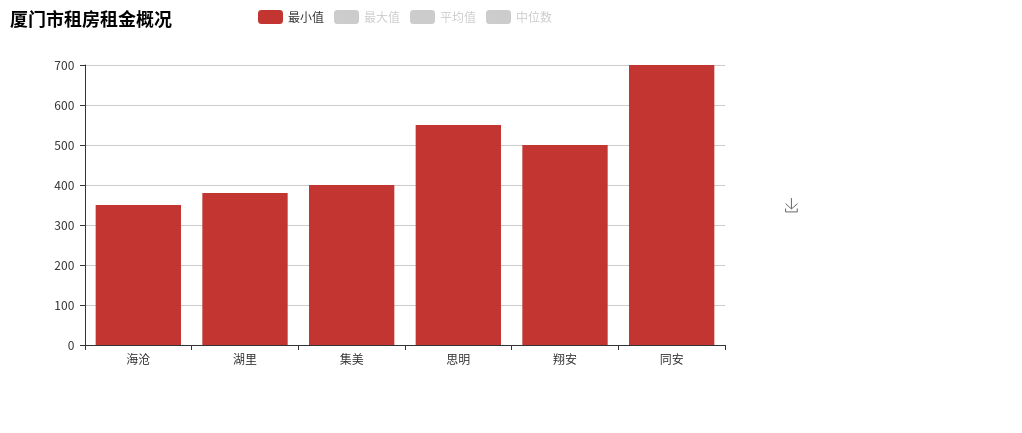

最小值:

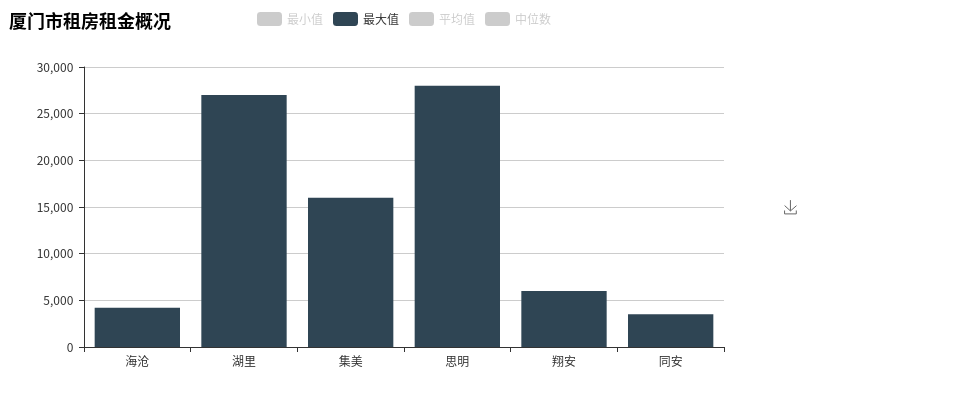

最大值:

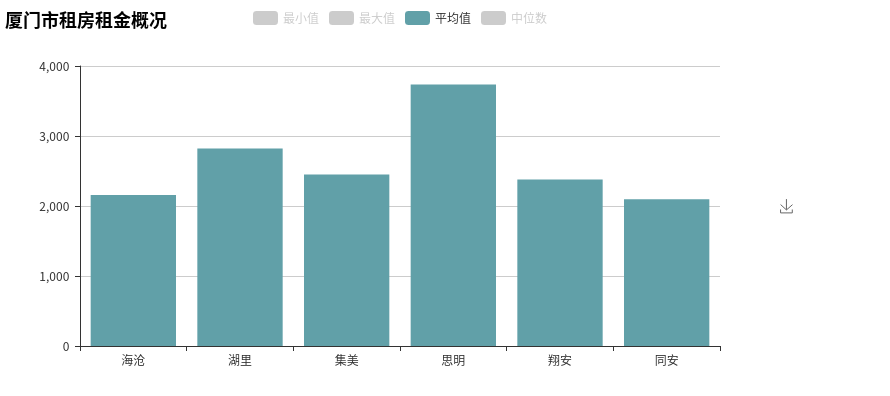

平均值:

中位数: