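Attention, everyone: I've rewritten the previous post so that it crawls the images with the Scrapy framework.

This version uses the site's search feature: you set the search keyword, and the images in the search results are saved to disk.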
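Each search page is one POST to the site's proxy API, with the keyword and page number carried in the form. Below is a minimal sketch of building that form and working out how many pages a result count needs. It assumes 20 results per page (the figure the spider's paging check uses); the field names and values are copied from the spider, while `build_search_form` and `pages_needed` are hypothetical helper names.

```python
import math

PAGE_SIZE = 20  # assumption: results per search page, as in the spider's paging check


def build_search_form(keyword, page, city_id="131"):
    """Form fields for one search page (names/values copied from the spider)."""
    return {
        "method": "/search/ywf/indexapi",
        "cityid": city_id,
        "search": keyword,
        "page": str(page),  # form values are sent as strings
        "type": "1",
    }


def pages_needed(total_count, page_size=PAGE_SIZE):
    """Number of pages required to cover total_count results (ceiling division)."""
    return math.ceil(total_count / page_size)


if __name__ == "__main__":
    # 45 results -> pages 1, 2 and 3
    for page in range(1, pages_needed(45) + 1):
        print(build_search_form("大长腿", page))
```

With 20 results per page, 45 results need three requests; the last page is a partial one and still has to be fetched.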

pic.py is the main spider script:

```python
# -*- coding: utf-8 -*-

import json
from urllib.parse import urljoin

import scrapy

from BeautyPic.items import BeautypicItem


class PicSpider(scrapy.Spider):

    name = "pic"
    allowed_domains = ["laosiji.com"]
    start_urls = ["https://www.laosiji.com/proxy/api"]

    kw = "大长腿"  # search keyword: change it to search for something else
    page_size = 20  # results per search page, as assumed by the paging check
    total_count = 0  # updated from each search response
    page_count = 1  # search page currently being requested

    cookies_str = "UM_distinctid=16a85bc719e11-0291ba522d6f78-39395704-1fa400-16a85bc719f8b2; _ga=GA1.2.457987246.1557021881; LSJLOGCOOKIE=11911911946108971111151051061054699111109-11273461-1557021880937; OdStatisticsToken=a2bd510b-6855-457b-87fa-89e9c0a729a9-1557021880936; tgw_l7_route=83a50c6e17958c25ad3462765ddb8a87; JSESSIONID=B83B7D9AE6CF4F35E0D95C5C3DCDE0AB; _gid=GA1.2.38970883.1559541613; CNZZDATA1261736092=756492161-1557017437-%7C1559539536; Hm_lvt_9fa8070d0f1a747dc1fd8cc5bdda4088=1557903441,1558061382,1558658949,1559541613; _gat_gtag_UA_132849965_2=1; Hm_lpvt_9fa8070d0f1a747dc1fd8cc5bdda4088=1559541757"

    # Browser "Cookie:" header -> dict. strip() drops the space after each ";",
    # and split("=", 1) keeps any "=" inside a value intact.
    cookies = {
        name.strip(): value
        for name, value in (pair.split("=", 1) for pair in cookies_str.split(";"))
    }

    # Request headers for the thread (detail) pages
    headers = {
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3",
        "Accept-Encoding": "gzip, deflate",
        "Accept-Language": "zh-CN,zh;q=0.9",
        "Cache-Control": "max-age=0",
        "Connection": "keep-alive",
    }

    def search_form(self, page):
        """POST form for one page of search results."""
        return {
            "method": "/search/ywf/indexapi",
            "cityid": "131",
            "search": self.kw,
            "page": str(page),  # FormRequest form values must be strings
            "type": "1",
        }

    def search_request(self, page):
        return scrapy.FormRequest(
            self.start_urls[0],
            cookies=self.cookies,
            callback=self.parse,
            formdata=self.search_form(page),
            dont_filter=True,
        )

    def start_requests(self):
        # Always fetch page 1; its response reports the total result count
        yield self.search_request(self.page_count)

    def parse(self, response):
        data = json.loads(response.body.decode())
        sns = data["body"]["search"]["sns"]
        self.total_count = int(sns["count"])

        for entry in sns["list"]:
            if entry is None:
                continue
            url = urljoin("https://www.laosiji.com/thread/", str(entry["resourceid"]) + ".html")
            self.logger.info(url)
            yield scrapy.Request(
                url,
                callback=self.parse_detail,
                cookies=self.cookies,
                headers=self.headers,
                dont_filter=True,
            )

        # Fetch the next page while the pages so far haven't covered every result
        if self.page_count * self.page_size < self.total_count:
            self.page_count += 1
            self.logger.info("Requesting search page %d", self.page_count)
            yield self.search_request(self.page_count)

    def parse_detail(self, response):
        # The post title is the same for every image, so read it once
        title = response.xpath("//h1[@class='title']/text()").extract_first()

        for li in response.xpath("//div[@class='threa-main-box']/li"):
            img_id = li.xpath("./div[@class='img-box']/@id").extract_first()
            url = li.xpath("./div[@class='img-box']/a/img/@src").extract_first()

            if url is not None:
                item = BeautypicItem()
                item["id"] = img_id
                item["title"] = title
                item["url"] = url
                yield item
```
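The spider turns the Cookie header copied from the browser into the dict that `scrapy.Request(cookies=...)` accepts. Splitting only on `;` leaves a leading space on every name after the first, and taking `split("=")[1]` truncates any value that itself contains `=`. A stand-alone sketch of a more careful conversion (`parse_cookie_header` is a hypothetical helper name):

```python
def parse_cookie_header(cookie_header):
    """Convert a browser 'Cookie:' header string into a {name: value} dict."""
    cookies = {}
    for pair in cookie_header.split(";"):
        pair = pair.strip()  # drop the space that follows each ';'
        if not pair or "=" not in pair:
            continue  # skip empty or malformed fragments
        name, value = pair.split("=", 1)  # split once: values may contain '='
        cookies[name] = value
    return cookies


if __name__ == "__main__":
    print(parse_cookie_header("a=1; b=x=y; _ga=GA1.2.3"))
```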

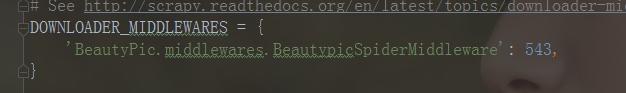
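`parse` reads the result count and the list of posts from `body.search.sns` in the JSON reply, then builds one thread URL per `resourceid`. A stand-alone sketch of that step; the sample payload is hypothetical and only mirrors the keys the spider reads, it is not a captured response.

```python
import json
from typing import List, Tuple
from urllib.parse import urljoin

THREAD_BASE = "https://www.laosiji.com/thread/"


def extract_thread_urls(body: str) -> Tuple[int, List[str]]:
    """Return (total result count, thread page URLs) from a search-API reply."""
    sns = json.loads(body)["body"]["search"]["sns"]
    total = int(sns["count"])  # converted with int(), as the spider does
    urls = [
        urljoin(THREAD_BASE, "%s.html" % entry["resourceid"])
        for entry in sns["list"]
        if entry is not None  # skip null entries, as the spider does
    ]
    return total, urls


# Hypothetical payload containing only the keys the spider uses
SAMPLE = '{"body": {"search": {"sns": {"count": "2", "list": [{"resourceid": 101}, null, {"resourceid": 202}]}}}}'

if __name__ == "__main__":
    print(extract_thread_urls(SAMPLE))
```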

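This is middlewares.py; remember to enable it in settings.py. Because it implements `process_request`, which is a downloader-middleware hook, it has to be registered under `DOWNLOADER_MIDDLEWARES`.

It mainly adds a User-Agent pool: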

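```python
import random

# The project's settings module, imported directly: Scrapy only loads UPPERCASE
# names into spider.settings, so a mixed-case name like User_Agents must be
# read from the module itself.
from BeautyPic import settings


class BeautypicSpiderMiddleware(object):
    # process_request() is a downloader-middleware hook, so register this class
    # under DOWNLOADER_MIDDLEWARES (not SPIDER_MIDDLEWARES) despite its name.

    def process_request(self, request, spider):
        # Pick a random User-Agent from the pool for every outgoing request
        request.headers["User-Agent"] = random.choice(settings.User_Agents)
        spider.logger.debug(request.headers["User-Agent"])
        return None  # let Scrapy continue processing the request normally
```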
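The middleware only runs once it is registered. A hedged settings.py excerpt, assuming the project package is `BeautyPic` (as in the spider's import) and that the pool is a list named `User_Agents` (the name the middleware reads). The User-Agent strings are only examples; 543 is the priority Scrapy's project template uses for its generated middleware.

```python
# settings.py (excerpt)

# process_request() is a downloader-middleware hook, so the class goes here,
# even though its name says "Spider".
DOWNLOADER_MIDDLEWARES = {
    "BeautyPic.middlewares.BeautypicSpiderMiddleware": 543,
}

# User-Agent pool read by the middleware (example strings; use your own)
User_Agents = [
    "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:50.0) Gecko/20100101 Firefox/50.0",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36",
]
```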

Finally, the image download is added in pipelines.py.

Remember to enable the pipeline in settings.py as well (`ITEM_PIPELINES`).

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

import os
import re
import string

import requests

# Root folder for the images; a raw string, so "\B" and "\p" are not read as escapes
SAVE_ROOT = r"D:\BaiduNetdiskDownload\pic"

# ASCII punctuation (string.punctuation) plus the full-width "。"
FORBIDDEN_CHARS = re.compile("[%s。]" % re.escape(string.punctuation))


class BeautypicPipeline(object):

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:50.0) Gecko/20100101 Firefox/50.0"
    }

    def process_item(self, item, spider):
        url = item["url"]
        title = item["title"] or ""
        print(url)

        if url:
            try:
                # requests is blocking: each download pauses Scrapy while it runs
                response = requests.get(url, headers=self.headers, timeout=4)
                response.raise_for_status()  # don't save error pages as images
            except requests.RequestException:
                print("Bad URL: " + url)
            else:
                os.makedirs(SAVE_ROOT, exist_ok=True)

                # Remove characters that aren't allowed in folder names
                title = FORBIDDEN_CHARS.sub("", title).strip()
                dir_path = os.path.join(SAVE_ROOT, title)
                print(dir_path)

                file_name = item["id"]
                if title and file_name:
                    os.makedirs(dir_path, exist_ok=True)
                    with open(os.path.join(dir_path, file_name + ".png"), "wb") as f:
                        f.write(response.content)
                    print("Saved %s" % file_name)

        # Return the item so later pipelines and feed exports still receive it
        return item
```
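A hedged settings.py excerpt for enabling the pipeline, assuming it lives in Scrapy's default `pipelines.py` inside the `BeautyPic` package. 300 is the value from Scrapy's project template; pipelines are ordered by these numbers (0 to 1000), lower values running first.

```python
# settings.py (excerpt): enable the image-download pipeline
ITEM_PIPELINES = {
    "BeautyPic.pipelines.BeautypicPipeline": 300,
}
```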

Last comes the naming of the images: characters that aren't allowed in file names are stripped from the post title, using `string.punctuation`.
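The naming rule can be pulled out into small functions and checked on its own. A sketch following the same rule as the pipeline (ASCII punctuation from `string.punctuation`, plus `。`); `clean_title` and `image_path` are hypothetical helper names, and the `.png` extension is kept from the original even though the downloaded bytes may be another image format.

```python
import os
import re
import string

# string.punctuation includes every character Windows forbids in names: \ / : * ? " < > |
_FORBIDDEN = re.compile("[%s。]" % re.escape(string.punctuation))


def clean_title(title):
    """Strip punctuation so a post title can be used as a folder name."""
    return _FORBIDDEN.sub("", title or "").strip()


def image_path(root, title, img_id, ext=".png"):
    """Build root/<clean title>/<img_id><ext>, or None if a name part is missing."""
    folder = clean_title(title)
    if not folder or not img_id:
        return None
    return os.path.join(root, folder, img_id + ext)


if __name__ == "__main__":
    print(image_path("pic", 'Hi: "a/b"?', "42"))
```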

That's the finished version, for your reference; corrections are welcome.