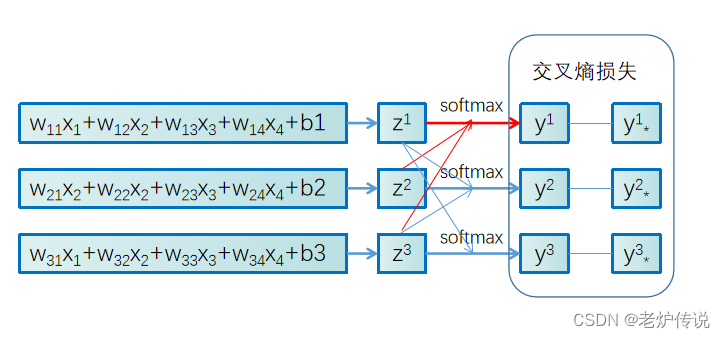

logistic回归参数更新看了几篇博文,感觉理解不透彻,所以自己写一下,希望能有更深的理解。logistic回归输入是一个线性函数 W x + b \boldsymbol{W}\boldsymbol{x}+\boldsymbol{b} Wx+b,为了简单理解,考虑batchsize为1的情况。这时输入 x \boldsymbol{x} x为一个 n × 1 n\times1 n×1的向量,标签 y \boldsymbol{y} y我们采用oneHot编码为一个 m × 1 m\times1 m×1的向量,显然\boldsymbol{b}也是一个 m × 1 m\times1 m×1的向量,参数 W \boldsymbol{W} W为一个 m × n m\times n m×n的矩阵。若 n = 4 n=4 n=4、 m = 3 m=3 m=3,我们用图形表示logistic回归如下:

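Here the label $\boldsymbol{y^*}$ is one-hot encoded with length 3. If the class index is 1, its encoding is $(1,0,0)^T$. In the figure above, this means $y_1^*=1$, $y_2^*=0$ and $y_3^*=0$. The function $L$ sums, over the three outputs, the label $y_i^*$ times the log of the predicted probability $y_i$. Note that the usual cross-entropy loss carries a minus sign, $-\sum_i y_i^*\log y_i$. The $L$ defined here omits that sign, so it is the log-likelihood, which training maximizes. Every gradient below is therefore the negative of the corresponding cross-entropy gradient. Updating $\boldsymbol{W}\leftarrow\boldsymbol{W}+\eta\,\partial L/\partial\boldsymbol{W}$ with learning rate $\eta$ is the same as gradient descent on the cross-entropy.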

$$
\begin{aligned}
L&=\sum_{i=1}^3 y_i^*\log y_i\\
&=y_1^*\log y_1+y_2^*\log y_2+y_3^*\log y_3
\end{aligned}
$$
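Because $\boldsymbol{y^*}$ is one-hot, only the term for the true class survives, so $L=\log y_c$ for the true class $c$. Here is a minimal NumPy sketch. The probabilities `y` and the label `y_star` are made-up values chosen purely for illustration:

```python
import numpy as np

# Illustrative predicted probabilities y (summing to 1) and a one-hot label for class 1.
y = np.array([0.7, 0.2, 0.1])
y_star = np.array([1.0, 0.0, 0.0])

# L = sum_i y_i^* log y_i, exactly as defined above (no minus sign).
L = np.sum(y_star * np.log(y))

# Only the true-class term is non-zero, so L equals log y_1.
print(L, np.log(y[0]))
```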

In the definition of $L$, the predicted probabilities $y_i$ are the softmax of the scores $z^i$:

$$
\begin{aligned}
y_1&=\frac{e^{z^1}}{e^{z^1}+e^{z^2}+e^{z^3}}\\
y_2&=\frac{e^{z^2}}{e^{z^1}+e^{z^2}+e^{z^3}}\\
y_3&=\frac{e^{z^3}}{e^{z^1}+e^{z^2}+e^{z^3}}
\end{aligned}
$$
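The three formulas above are the softmax function. Below is a small NumPy sketch. The function name `softmax` is our own. Subtracting the maximum score is a standard numerical-stability trick that the text does not mention. It leaves the result unchanged because the common factor $e^{-\max z}$ cancels in each ratio:

```python
import numpy as np

def softmax(z):
    """Return y with y_i = e^{z^i} / sum_k e^{z^k}."""
    # Shifting by max(z) does not change the ratios but prevents overflow in np.exp.
    e = np.exp(z - np.max(z))
    return e / e.sum()

z = np.array([2.0, 1.0, 0.1])   # example scores z^1, z^2, z^3
y = softmax(z)
print(y, y.sum())

# Large scores would overflow a direct np.exp(z), but the shifted version is fine.
print(softmax(z + 1000.0))
```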

The scores $z^i$ are in turn linear in $\boldsymbol{x}$:

$$
\begin{aligned}
z^1&=\boldsymbol{w_1}^T\boldsymbol{x}+b_1\\
z^2&=\boldsymbol{w_2}^T\boldsymbol{x}+b_2\\
z^3&=\boldsymbol{w_3}^T\boldsymbol{x}+b_3
\end{aligned}
$$
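Stacking the three rows gives the scores as $\boldsymbol{z}=\boldsymbol{W}\boldsymbol{x}+\boldsymbol{b}$. The sketch below checks the shapes for $n=4$ and $m=3$, using random parameters and a random input. It stores $\boldsymbol{x}$ as a 1-D NumPy array of length $n$ rather than an explicit $n\times1$ column:

```python
import numpy as np

n, m = 4, 3                      # input size and number of classes, as in the text
rng = np.random.default_rng(0)

# Hypothetical parameters and input; row i of W plays the role of w_i^T.
W = rng.normal(size=(m, n))
b = rng.normal(size=m)
x = rng.normal(size=n)

z = W @ x + b                    # z^i = w_i^T x + b_i for all i at once
y = np.exp(z - z.max())
y = y / y.sum()                  # softmax probabilities y_1, y_2, y_3

# The vectorized scores agree with the per-row formulas.
z_rows = np.array([W[i] @ x + b[i] for i in range(m)])
print(W.shape, x.shape, b.shape, z.shape, y.shape)
```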

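Here $\boldsymbol{w_1}=(w_{11},w_{12},w_{13},w_{14})^T$, so $\boldsymbol{w_1}^T$ is the first row of $\boldsymbol{W}$ (and likewise for $\boldsymbol{w_2}$ and $\boldsymbol{w_3}$). The input is $\boldsymbol{x}=(x_1,x_2,x_3,x_4)^T$.

Now differentiate $L$ with respect to $\boldsymbol{w_1}$ using the chain rule. $\boldsymbol{w_1}$ enters only $z^1$, but $z^1$ appears in the denominator of every $y_i$, so all three terms of $L$ contribute. The factors are $\partial L/\partial y_i=y_i^*/y_i$, $\partial y_1/\partial z^1=y_1(1-y_1)$, $\partial y_i/\partial z^1=-y_1y_i$ for $i\neq1$, and $\partial z^1/\partial\boldsymbol{w_1}=\boldsymbol{x}$: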

$$
\begin{aligned}
\frac{\partial L}{\partial \boldsymbol{w_1}}&=\frac{\partial L}{\partial y_1}\frac{\partial y_1}{\partial z^1}\frac{\partial z^1}{\partial \boldsymbol{w_1}}+\frac{\partial L}{\partial y_2}\frac{\partial y_2}{\partial z^1}\frac{\partial z^1}{\partial \boldsymbol{w_1}}+\frac{\partial L}{\partial y_3}\frac{\partial y_3}{\partial z^1}\frac{\partial z^1}{\partial \boldsymbol{w_1}}\\
&=\frac{y_1^*}{y_1}\times y_1(1-y_1)\times\boldsymbol{x}-\frac{y_2^*}{y_2}\times y_1y_2\times\boldsymbol{x}-\frac{y_3^*}{y_3}\times y_1y_3\times\boldsymbol{x}\\
&=\big(y_1^*(1-y_1)-y_2^*y_1-y_3^*y_1\big)\boldsymbol{x}\\
&=\big(y_1^*-y_1(y_1^*+y_2^*+y_3^*)\big)\boldsymbol{x}\\
&=(y_1^*-y_1)\boldsymbol{x}
\end{aligned}
$$
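The second line relies on the softmax derivatives $\partial y_1/\partial z^1=y_1(1-y_1)$ and $\partial y_i/\partial z^1=-y_1y_i$ for $i\neq1$. Here is a quick central-difference check using arbitrary example scores `z`. Index 0 in the code corresponds to class 1:

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))  # shift for numerical stability
    return e / e.sum()

z = np.array([0.5, -1.0, 2.0])   # arbitrary example scores (index 0 is class 1)
y = softmax(z)

# Derivatives of (y_1, y_2, y_3) with respect to z^1, as used in the derivation:
# dy_1/dz^1 = y_1 (1 - y_1) and dy_i/dz^1 = -y_1 y_i for i != 1.
analytic = np.array([y[0] * (1 - y[0]), -y[0] * y[1], -y[0] * y[2]])

# Central finite difference, perturbing only z^1.
h = 1e-6
step = np.array([h, 0.0, 0.0])
numeric = (softmax(z + step) - softmax(z - step)) / (2 * h)

print(analytic, numeric)
```

The analytic derivatives sum to zero because the $y_i$ always sum to 1.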

Note that $y_1^*+y_2^*+y_3^*$ is the sum of the three entries of the one-hot label, which is exactly 1. The other two derivatives follow in the same way:

$$
\begin{aligned}
\frac{\partial L}{\partial \boldsymbol{w_2}}&=(y_2^*-y_2)\boldsymbol{x}\\
\frac{\partial L}{\partial \boldsymbol{w_3}}&=(y_3^*-y_3)\boldsymbol{x}
\end{aligned}
$$
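The sketch below checks $\partial L/\partial\boldsymbol{w_i}=(y_i^*-y_i)\boldsymbol{x}$ for all three classes by comparing it with a central finite difference of $L$. The parameters, input, and label are hypothetical random values. `log_likelihood` is a helper name of our own that evaluates $L=\sum_i y_i^*\log y_i$ exactly as defined above, with no minus sign:

```python
import numpy as np

def log_likelihood(W, b, x, y_star):
    """L = sum_i y_i^* log y_i with y = softmax(W x + b), as defined in the text."""
    z = W @ x + b
    z = z - z.max()
    log_y = z - np.log(np.exp(z).sum())   # log of the softmax probabilities
    return np.sum(y_star * log_y)

rng = np.random.default_rng(1)
W = rng.normal(size=(3, 4))              # hypothetical parameters; row i is w_i^T
b = rng.normal(size=3)
x = rng.normal(size=4)
y_star = np.array([0.0, 1.0, 0.0])       # hypothetical label: class 2

z = W @ x + b
y = np.exp(z - z.max())
y = y / y.sum()

h = 1e-6
errors = []
for i in range(3):
    analytic = (y_star[i] - y[i]) * x    # dL/dw_i = (y_i^* - y_i) x
    numeric = np.zeros(4)
    for j in range(4):
        dW = np.zeros_like(W)
        dW[i, j] = h                     # perturb only w_ij
        numeric[j] = (log_likelihood(W + dW, b, x, y_star)
                      - log_likelihood(W - dW, b, x, y_star)) / (2 * h)
    errors.append(np.max(np.abs(analytic - numeric)))

print(errors)  # all should be tiny (finite-difference error only)
```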

Stack the three gradients as rows, so that row $i$ is $(\partial L/\partial\boldsymbol{w_i})^T$. The gradient of $L$ with respect to $\boldsymbol{W}$ is then a $3\times4$ matrix, the same shape as $\boldsymbol{W}$:

$$
\frac{\partial L}{\partial \boldsymbol{W}}=
\begin{bmatrix}
(y_1^*-y_1)x_1 & (y_1^*-y_1)x_2 & (y_1^*-y_1)x_3 & (y_1^*-y_1)x_4\\
(y_2^*-y_2)x_1 & (y_2^*-y_2)x_2 & (y_2^*-y_2)x_3 & (y_2^*-y_2)x_4\\
(y_3^*-y_3)x_1 & (y_3^*-y_3)x_2 & (y_3^*-y_3)x_3 & (y_3^*-y_3)x_4
\end{bmatrix}
$$

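In NumPy, the gradient of $L$ with respect to $\boldsymbol{W}$ can be computed as an outer product:

$$
\frac{\partial L}{\partial \boldsymbol{W}}=(\boldsymbol{y^*}-\boldsymbol{y})\boldsymbol{x}^T=\text{numpy.outer}(\boldsymbol{y^*}-\boldsymbol{y},\ \boldsymbol{x})
$$

The argument order matters. $\text{numpy.outer}(\boldsymbol{x},\boldsymbol{y^*}-\boldsymbol{y})$ returns the $n\times m$ transpose, which only matches a weight matrix stored as $n\times m$.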
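The following small sketch uses hypothetical values for `x`, `y` and `y_star`. It builds the gradient entry by entry as $(y_i^*-y_i)x_j$ and compares the result with `numpy.outer` in both argument orders:

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0])       # hypothetical input, n = 4
y = np.array([0.5, 0.3, 0.2])            # hypothetical softmax output, m = 3
y_star = np.array([1.0, 0.0, 0.0])       # one-hot label for class 1

# Entry (i, j) of the gradient matrix is (y_i^* - y_i) x_j.
G_loop = np.array([[(y_star[i] - y[i]) * x[j] for j in range(4)] for i in range(3)])

G = np.outer(y_star - y, x)      # shape (m, n) = (3, 4), same as W
G_T = np.outer(x, y_star - y)    # shape (n, m) = (4, 3), the transpose

print(G.shape, G_T.shape)
```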

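The same method gives the gradient with respect to the bias. Since $\partial z^i/\partial b_i=1$, the factor $\boldsymbol{x}$ simply drops out:

$$
\frac{\partial L}{\partial \boldsymbol{b}}=\boldsymbol{y^*}-\boldsymbol{y}
$$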
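Together, the two gradients give the batch-size-1 parameter update. Because $L$ as defined here is a log-likelihood, the sketch steps along the gradient (gradient ascent). This is equivalent to gradient descent on the cross-entropy $-L$. The toy dataset, the learning rate `lr=0.1`, and the helper names `sgd_step` and `mean_log_likelihood` are illustrative assumptions, not part of the original derivation:

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))  # shift for numerical stability
    return e / e.sum()

def sgd_step(W, b, x, y_star, lr=0.1):
    """One batch-size-1 update using dL/dW = (y^* - y) x^T and dL/db = y^* - y.

    L is a log-likelihood, so we move along its gradient (ascent),
    which is the same as gradient descent on the cross-entropy -L.
    """
    y = softmax(W @ x + b)
    W += lr * np.outer(y_star - y, x)
    b += lr * (y_star - y)
    return W, b

# Hypothetical toy data: 3 classes in 4 dimensions, each around its own centre.
rng = np.random.default_rng(0)
centres = np.array([[2, 0, 0, 0], [0, 2, 0, 0], [0, 0, 2, 0]], dtype=float)
labels = rng.integers(0, 3, size=300)
X = centres[labels] + 0.5 * rng.normal(size=(300, 4))
Y = np.eye(3)[labels]                     # one-hot labels y^*

W, b = np.zeros((3, 4)), np.zeros(3)

def mean_log_likelihood(W, b):
    return np.mean([Y[k] @ np.log(softmax(W @ X[k] + b)) for k in range(len(X))])

before = mean_log_likelihood(W, b)
for epoch in range(5):
    for k in range(len(X)):
        W, b = sgd_step(W, b, X[k], Y[k])
after = mean_log_likelihood(W, b)
accuracy = np.mean(np.argmax(X @ W.T + b, axis=1) == labels)
print(before, after, accuracy)
```

With all-zero initial parameters, the mean log-likelihood starts at $\log(1/3)$ and should rise as training proceeds. The exact final values depend on the random seed.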