参考论文:Core Challenges in Embodied Vision-Language Planning

论文作者:Jonathan Francis, Nariaki Kitamura, Felix Labelle, Xiaopeng Lu, Ingrid Navarro, Jean Oh

论文原文:https://arxiv.org/abs/2106.13948

论文出处:Journal of Artificial Intelligence Research 74 (2022) 459-515

论文被引:27(11/19/2023)

论文中的工作截止到2021年,在此基础上补充了近几年具身智能领域相关的数据集和基准。

思维导图

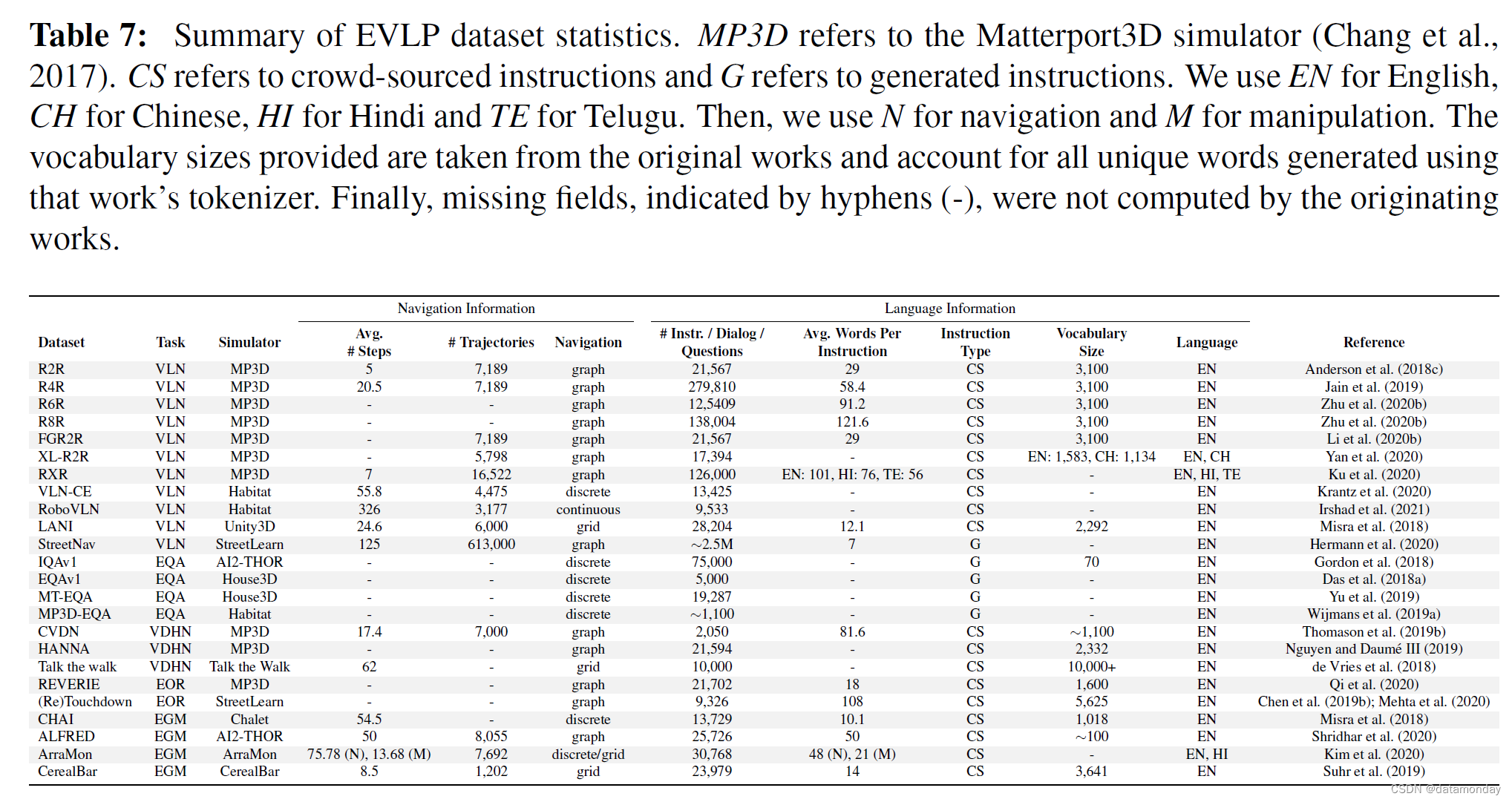

具身视觉语言规划数据集

EVLP 数据集有三个主要方面:

- 视觉观察(visual observations):一般来说,视觉观察包括 RGB 图像,通常与深度数据或语义掩码(semantic masks)搭配使用。这些观察结果可以代表室内和室外环境,既可以是基于照片的真实环境,也可以是基于合成的环境。

- 自然语言提示(natural language prompts):语言提示的类型各不相同。语言提示的形式可能是问题(questions),分步指令(instructions)以及需要通过对话或描述进行某种澄清的模糊指令(ambiguous instructions)。在语言序列的复杂性和词汇范围方面,语言也会有所不同。

- 导航演示(navigation demonstrations):导航轨迹在行动空间的粒度(或离散化)以及所提供的行动序列或轨迹(trajectory)与其他两个维度的隐式对齐(implicit alignment)等方面也各不相同。

VLN Datasets

R2R

论文标题:Vision-and-Language Navigation: Interpreting visually-grounded navigation instructions in real environments

论文作者:Peter Anderson, Qi Wu, Damien Teney, Jake Bruce, Mark Johnson, Niko Sünderhauf, Ian Reid, Stephen Gould, Anton van den Hengel

论文原文:https://arxiv.org/abs/1711.07280

论文出处:CVPR 2018 Spotlight presentation

论文被引:1089(11/19/2023)

论文代码:–

项目主页:https://bringmeaspoon.org/

A robot that can carry out a natural-language instruction has been a dream since before the Jetsons cartoon series imagined a life of leisure mediated by a fleet of attentive robot helpers. It is a dream that remains stubbornly distant. However, recent advances in vision and language methods have made incredible progress in closely related areas. This is significant because a robot interpreting a natural-language navigation instruction on the basis of what it sees is carrying out a vision and language process that is similar to Visual Question Answering. Both tasks can be interpreted as visually grounded sequence-to-sequence translation problems, and many of the same methods are applicable. To enable and encourage the application of vision and language methods to the problem of interpreting visually-grounded navigation instructions, we present the Matterport3D Simulator – a large-scale reinforcement learning environment based on real imagery. Using this simulator, which can in future support a range of embodied vision and language tasks, we provide the first benchmark dataset for visually-grounded natural language navigation in real buildings – the Room-to-Room (R2R) dataset.

R4R

论文标题:Stay on the Path: Instruction Fidelity in Vision-and-Language Navigation

论文作者:Vihan Jain, Gabriel Magalhaes, Alexander Ku, Ashish Vaswani, Eugene Ie, Jason Baldridge

论文原文:https://arxiv.org/abs/1905.12255

论文出处: ACL 2019

论文被引:132(11/19/2023)

论文代码:–

项目主页:–

Advances in learning and representations have reinvigorated work that connects language to other modalities. A particularly exciting direction is Vision-and-Language Navigation(VLN), in which agents interpret natural language instructions and visual scenes to move through environments and reach goals. Despite recent progress, current research leaves unclear how much of a role language understanding plays in this task, especially because dominant evaluation metrics have focused on goal completion rather than the sequence of actions corresponding to the instructions. Here, we highlight shortcomings of current metrics for the Room-to-Room dataset (Anderson et al.,2018b) and propose a new metric, Coverage weighted by Length Score (CLS). We also show that the existing paths in the dataset are not ideal for evaluating instruction following because they are direct-to-goal shortest paths. We join existing short paths to form more challenging extended paths to create a new data set, Room-for-Room (R4R). Using R4R and CLS, we show that agents that receive rewards for instruction fidelity outperform agents that focus on goal completion.

R8R

论文标题:BabyWalk: Going Farther in Vision-and-Language Navigation by Taking Baby Steps

论文作者:Wang Zhu, Hexiang Hu, Jiacheng Chen, Zhiwei Deng, Vihan Jain, Eugene Ie, Fei Sha

论文原文:https://arxiv.org/abs/2005.04625

论文出处:ACL 2020

论文被引:62(11/19/2023)

论文代码:https://github.com/Sha-Lab/babywalk,40 star

项目主页:–

Learning to follow instructions is of fundamental importance to autonomous agents for vision-and-language navigation (VLN). In this paper, we study how an agent can navigate long paths when learning from a corpus that consists of shorter ones. We show that existing state-of-the-art agents do not generalize well. To this end, we propose BabyWalk, a new VLN agent that is learned to navigate by decomposing long instructions into shorter ones (BabySteps) and completing them sequentially. A special design memory buffer is used by the agent to turn its past experiences into contexts for future steps. The learning process is composed of two phases. In the first phase, the agent uses imitation learning from demonstration to accomplish BabySteps. In the second phase, the agent uses curriculum-based reinforcement learning to maximize rewards on navigation tasks with increasingly longer instructions. We create two new benchmark datasets (of long navigation tasks) and use them in conjunction with existing ones to examine BabyWalk’s generalization ability. Empirical results show that BabyWalk achieves state-of-the-art results on several metrics, in particular, is able to follow long instructions better. The codes and the datasets are released on our project page: https://github.com/Sha-Lab/babywalk.

FGR2R

论文标题:Sub-Instruction Aware Vision-and-Language Navigation

论文作者:Yicong Hong, Cristian Rodriguez-Opazo, Qi Wu, Stephen Gould

论文原文:https://arxiv.org/abs/2004.02707

论文出处:ACL 2020

论文被引:44(11/19/2023)

论文代码:https://github.com/YicongHong/Fine-Grained-R2R,39 star

项目主页:–

Vision-and-language navigation requires an agent to navigate through a real 3D environment following natural language instructions. Despite significant advances, few previous works are able to fully utilize the strong correspondence between the visual and textual sequences. Meanwhile, due to the lack of intermediate supervision, the agent’s performance at following each part of the instruction cannot be assessed during navigation. In this work, we focus on the granularity of the visual and language sequences as well as the traceability of agents through the completion of an instruction. We provide agents with fine-grained annotations during training and find that they are able to follow the instruction better and have a higher chance of reaching the target at test time. We enrich the benchmark dataset Room-to-Room (R2R) with sub-instructions and their corresponding paths. To make use of this data, we propose effective sub-instruction attention and shifting modules that select and attend to a single sub-instruction at each time-step. We implement our sub-instruction modules in four state-of-the-art agents, compare with their baseline models, and show that our proposed method improves the performance of all four agents.

We release the Fine-Grained R2R dataset (FGR2R) and the code at this https URL.

RxR

论文标题:Room-Across-Room: Multilingual Vision-and-Language Navigation with Dense Spatiotemporal Grounding

论文作者:Alexander Ku, Peter Anderson, Roma Patel, Eugene Ie, Jason Baldridge

论文原文:https://arxiv.org/abs/2010.07954

论文出处:EMNLP 2020

论文被引:183(11/19/2023)

论文代码:https://github.com/google-research-datasets/RxR,97 star

项目主页:–

We introduce Room-Across-Room (RxR), a new Vision-and-Language Navigation (VLN) dataset. RxR is multilingual (English, Hindi, and Telugu) and larger (more paths and instructions) than other VLN datasets. It emphasizes the role of language in VLN by addressing known biases in paths and eliciting more references to visible entities. Furthermore, each word in an instruction is time-aligned to the virtual poses of instruction creators and validators. We establish baseline scores for monolingual and multilingual settings and multitask learning when including Room-to-Room annotations. We also provide results for a model that learns from synchronized pose traces by focusing only on portions of the panorama attended to in human demonstrations. The size, scope and detail of RxR dramatically expands the frontier for research on embodied language agents in simulated, photo-realistic environments.

XL R2R

论文标题:Cross-lingual Vision-Language Navigation

论文作者:An Yan, Xin Eric Wang, Jiangtao Feng, Lei Li, William Yang Wang

论文原文:https://arxiv.org/abs/1910.11301

论文出处:ICLR 2020

论文被引:11(11/19/2023)

论文代码:https://github.com/zzxslp/XL-VLN,11 star

项目主页:–

Commanding a robot to navigate with natural language instructions is a long-term goal for grounded language understanding and robotics. But the dominant language is English, according to previous studies on vision-language navigation (VLN). To go beyond English and serve people speaking different languages, we collect a bilingual Room-to-Room (BL-R2R) dataset, extending the original benchmark with new Chinese instructions. Based on this newly introduced dataset, we study how an agent can be trained on existing English instructions but navigate effectively with another language under a zero-shot learning scenario. Without any training data of the target language, our model shows competitive results even compared to a model with full access to the target language training data. Moreover, we investigate the transferring ability of our model when given a certain amount of target language training data.

VLN-CE

论文标题:Beyond the Nav-Graph: Vision-and-Language Navigation in Continuous Environments

论文作者:Jacob Krantz, Erik Wijmans, Arjun Majumdar, Dhruv Batra, Stefan Lee

论文原文:https://arxiv.org/abs/2004.02857

论文出处:ECCV 2020

论文被引:169(11/19/2023)

论文代码:https://github.com/jacobkrantz/VLN-CE,188 star

项目主页:https://jacobkrantz.github.io/vlnce/

We develop a language-guided navigation task set in a continuous 3D environment where agents must execute low-level actions to follow natural language navigation directions. By being situated in continuous environments, this setting lifts a number of assumptions implicit in prior work that represents environments as a sparse graph of panoramas with edges corresponding to navigability. Specifically, our setting drops the presumptions of known environment topologies, short-range oracle navigation, and perfect agent localization. To contextualize this new task, we develop models that mirror many of the advances made in prior settings as well as single-modality baselines. While some of these techniques transfer, we find significantly lower absolute performance in the continuous setting – suggesting that performance in prior `navigation-graph’ settings may be inflated by the strong implicit assumptions.

RoboVLN

论文标题:Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation

论文作者:Muhammad Zubair Irshad, Chih-Yao Ma, Zsolt Kira

论文原文:https://arxiv.org/abs/2104.10674

论文出处:ICRA 2021

论文被引:47(11/19/2023)

论文代码:https://github.com/GT-RIPL/robo-vln,60 star

项目主页:https://zubair-irshad.github.io/projects/robo-vln.html

Deep Learning has revolutionized our ability to solve complex problems such as Vision-and-Language Navigation (VLN). This task requires the agent to navigate to a goal purely based on visual sensory inputs given natural language instructions. However, prior works formulate the problem as a navigation graph with a discrete action space. In this work, we lift the agent off the navigation graph and propose a more complex VLN setting in continuous 3D reconstructed environments. Our proposed setting, Robo-VLN, more closely mimics the challenges of real world navigation. Robo-VLN tasks have longer trajectory lengths, continuous action spaces, and challenges such as obstacles. We provide a suite of baselines inspired by state-of-the-art works in discrete VLN and show that they are less effective at this task. We further propose that decomposing the task into specialized high- and low-level policies can more effectively tackle this task. With extensive experiments, we show that by using layered decision making, modularized training, and decoupling reasoning and imitation, our proposed Hierarchical Cross-Modal (HCM) agent outperforms existing baselines in all key metrics and sets a new benchmark for Robo-VLN.

LANI

论文标题:Mapping Instructions to Actions in 3D Environments with Visual Goal Prediction

论文作者:Dipendra Misra, Andrew Bennett, Valts Blukis, Eyvind Niklasson, Max Shatkhin, Yoav Artzi

论文原文:https://arxiv.org/abs/1809.00786

论文出处:EMNLP 2018

论文被引:154(11/19/2023)

论文代码:https://github.com/lil-lab/ciff,30 star

项目主页:–

We propose to decompose instruction execution to goal prediction and action generation. We design a model that maps raw visual observations to goals using LINGUNET, a language-conditioned image generation network, and then generates the actions required to complete them. Our model is trained from demonstration only without external resources. To evaluate our approach, we introduce two benchmarks for instruction following: LANI, a navigation task; and CHAI, where an agent executes household instructions. Our evaluation demonstrates the advantages of our model decomposition, and illustrates the challenges posed by our new benchmarks.

StreetNav

论文标题:Learning To Follow Directions in Street View

论文作者:Karl Moritz Hermann, Mateusz Malinowski, Piotr Mirowski, Andras Banki-Horvath, Keith Anderson, Raia Hadsell

论文原文:https://arxiv.org/abs/1903.00401

论文出处:AAAI 2020

论文被引:59(11/19/2023)

论文代码:https://github.com/google-deepmind/streetlearn,271 star(先前的两个工作,本文未提及开源代码)

项目主页:–

Navigating and understanding the real world remains a key challenge in machine learning and inspires a great variety of research in areas such as language grounding, planning, navigation and computer vision. We propose an instruction-following task that requires all of the above, and which combines the practicality of simulated environments with the challenges of ambiguous, noisy real world data. StreetNav is built on top of Google Street View and provides visually accurate environments representing real places. Agents are given driving instructions which they must learn to interpret in order to successfully navigate in this environment. Since humans equipped with driving instructions can readily navigate in previously unseen cities, we set a high bar and test our trained agents for similar cognitive capabilities. Although deep reinforcement learning (RL) methods are frequently evaluated only on data that closely follow the training distribution, our dataset extends to multiple cities and has a clean train/test separation. This allows for thorough testing of generalisation ability. This paper presents the StreetNav environment and tasks, models that establish strong baselines, and extensive analysis of the task and the trained agents.

EQA Datasets

EQA 中的数据集主要根据所使用的环境类型和所问的问题类型而有所不同。

IQUADv1

论文标题:IQA: Visual Question Answering in Interactive Environments

论文作者:Daniel Gordon, Aniruddha Kembhavi, Mohammad Rastegari, Joseph Redmon, Dieter Fox, Ali Farhadi

论文原文:https://arxiv.org/abs/1712.03316

论文出处:CVPR 2018

论文被引:385(11/19/2023)

论文代码:https://github.com/danielgordon10/thor-iqa-cvpr-2018,120 star

项目主页:–

介绍视频:https://www.youtube.com/watch?v=pXd3C-1jr98

We introduce Interactive Question Answering (IQA), the task of answering questions that require an autonomous agent to interact with a dynamic visual environment. IQA presents the agent with a scene and a question, like: “Are there any apples in the fridge?” The agent must navigate around the scene, acquire visual understanding of scene elements, interact with objects (e.g. open refrigerators) and plan for a series of actions conditioned on the question. Popular reinforcement learning approaches with a single controller perform poorly on IQA owing to the large and diverse state space. We propose the Hierarchical Interactive Memory Network (HIMN), consisting of a factorized set of controllers, allowing the system to operate at multiple levels of temporal abstraction. To evaluate HIMN, we introduce IQUAD V1, a new dataset built upon AI2-THOR, a simulated photo-realistic environment of configurable indoor scenes with interactive objects (code and dataset available at this https URL). IQUAD V1 has 75,000 questions, each paired with a unique scene configuration. Our experiments show that our proposed model outperforms popular single controller based methods on IQUAD V1. For sample questions and results, please view our video: this https URL

EQAv1

论文标题:Embodied Question Answering

论文作者:Abhishek Das, Samyak Datta, Georgia Gkioxari, Stefan Lee, Devi Parikh, Dhruv Batra

论文原文:https://arxiv.org/abs/1711.11543

论文出处:CVPR 2018

论文被引:582(11/19/2023)

论文代码:https://github.com/facebookresearch/EmbodiedQA,282 star

项目主页:https://embodiedqa.org/

We present a new AI task – Embodied Question Answering (EmbodiedQA) – where an agent is spawned at a random location in a 3D environment and asked a question (“What color is the car?”). In order to answer, the agent must first intelligently navigate to explore the environment, gather information through first-person (egocentric) vision, and then answer the question (“orange”).

This challenging task requires a range of AI skills – active perception, language understanding, goal-driven navigation, commonsense reasoning, and grounding of language into actions. In this work, we develop the environments, end-to-end-trained reinforcement learning agents, and evaluation protocols for EmbodiedQA.

MP3D-EQA

论文标题:Embodied Question Answering in Photorealistic Environments with Point Cloud Perception

论文作者:Erik Wijmans, Samyak Datta, Oleksandr Maksymets, Abhishek Das, Georgia Gkioxari, Stefan Lee, Irfan Essa, Devi Parikh, Dhruv Batra

论文原文:https://arxiv.org/abs/1904.03461

论文出处:CVPR 2019

论文被引:134(11/19/2023)

论文代码:https://github.com/facebookresearch/EmbodiedQA,282 star

项目主页:https://embodiedqa.org/

To help bridge the gap between internet vision-style problems and the goal of vision for embodied perception we instantiate a large-scale navigation task – Embodied Question Answering [1] in photo-realistic environments (Matterport 3D). We thoroughly study navigation policies that utilize 3D point clouds, RGB images, or their combination. Our analysis of these models reveals several key findings. We find that two seemingly naive navigation baselines, forward-only and random, are strong navigators and challenging to outperform, due to the specific choice of the evaluation setting presented by [1]. We find a novel loss-weighting scheme we call Inflection Weighting to be important when training recurrent models for navigation with behavior cloning and are able to out perform the baselines with this technique. We find that point clouds provide a richer signal than RGB images for learning obstacle avoidance, motivating the use (and continued study) of 3D deep learning models for embodied navigation.

MT-EQA

论文标题:Multi-Target Embodied Question Answering

论文作者:Licheng Yu, Xinlei Chen, Georgia Gkioxari, Mohit Bansal, Tamara L. Berg, Dhruv Batra

论文原文:https://arxiv.org/abs/1904.04686

论文出处:CVPR 2019

论文被引:77(11/19/2023)

论文代码:https://github.com/facebookresearch/EmbodiedQA,282 star

项目主页:https://embodiedqa.org/

Embodied Question Answering (EQA) is a relatively new task where an agent is asked to answer questions about its environment from egocentric perception. EQA makes the fundamental assumption that every question, e.g., “what color is the car?”, has exactly one target (“car”) being inquired about. This assumption puts a direct limitation on the abilities of the agent. We present a generalization of EQA - Multi-Target EQA (MT-EQA). Specifically, we study questions that have multiple targets in them, such as “Is the dresser in the bedroom bigger than the oven in the kitchen?”, where the agent has to navigate to multiple locations (“dresser in bedroom”, “oven in kitchen”) and perform comparative reasoning (“dresser” bigger than “oven”) before it can answer a question. Such questions require the development of entirely new modules or components in the agent. To address this, we propose a modular architecture composed of a program generator, a controller, a navigator, and a VQA module. The program generator converts the given question into sequential executable sub-programs; the navigator guides the agent to multiple locations pertinent to the navigation-related sub-programs; and the controller learns to select relevant observations along its path. These observations are then fed to the VQA module to predict the answer. We perform detailed analysis for each of the model components and show that our joint model can outperform previous methods and strong baselines by a significant margin.

VDN Datasets

Just Ask

论文标题:Just Ask:An Interactive Learning Framework for Vision and Language Navigation

论文作者:Ta-Chung Chi, Mihail Eric, Seokhwan Kim, Minmin Shen, Dilek Hakkani-tur

论文原文:https://arxiv.org/abs/1912.00915

论文出处:AAAI 2020

论文被引:48(11/19/2023)

论文代码:–

项目主页:–

In the vision and language navigation task, the agent may encounter ambiguous situations that are hard to interpret by just relying on visual information and natural language instructions. We propose an interactive learning framework to endow the agent with the ability to ask for users’ help in such situations. As part of this framework, we investigate multiple learning approaches for the agent with different levels of complexity. The simplest model-confusion-based method lets the agent ask questions based on its confusion, relying on the predefined confidence threshold of a next action prediction model. To build on this confusion-based method, the agent is expected to demonstrate more sophisticated reasoning such that it discovers the timing and locations to interact with a human. We achieve this goal using reinforcement learning (RL) with a proposed reward shaping term, which enables the agent to ask questions only when necessary. The success rate can be boosted by at least 15% with only one question asked on average during the navigation. Furthermore, we show that the RL agent is capable of adjusting dynamically to noisy human responses. Finally, we design a continual learning strategy, which can be viewed as a data augmentation method, for the agent to improve further utilizing its interaction history with a human. We demonstrate the proposed strategy is substantially more realistic and data-efficient compared to previously proposed pre-exploration techniques.

HANNA

论文标题:Help, Anna! Visual Navigation with Natural Multimodal Assistance via Retrospective Curiosity-Encouraging Imitation Learning

论文作者:Khanh Nguyen, Hal Daumé III

论文原文:https://arxiv.org/abs/1909.01871

论文出处:EMNLP 2019

论文被引:129(11/19/2023)

论文代码:https://github.com/khanhptnk/hanna, 27 star

项目主页:–

Mobile agents that can leverage help from humans can potentially accomplish more complex tasks than they could entirely on their own. We develop “Help, Anna!” (HANNA), an interactive photo-realistic simulator in which an agent fulfills object-finding tasks by requesting and interpreting natural language-and-vision assistance. An agent solving tasks in a HANNA environment can leverage simulated human assistants, called ANNA (Automatic Natural Navigation Assistants), which, upon request, provide natural language and visual instructions to direct the agent towards the goals. To address the HANNA problem, we develop a memory-augmented neural agent that hierarchically models multiple levels of decision-making, and an imitation learning algorithm that teaches the agent to avoid repeating past mistakes while simultaneously predicting its own chances of making future progress. Empirically, our approach is able to ask for help more effectively than competitive baselines and, thus, attains higher task success rate on both previously seen and previously unseen environments. We publicly release code and data at this https URL . A video demo is available at this https URL .

CVDN

论文标题:Vision-and-Dialog Navigation

论文作者:Jesse Thomason, Michael Murray, Maya Cakmak, Luke Zettlemoyer

论文原文:https://arxiv.org/abs/1907.04957

论文出处:CoRL 2019

论文被引:263(11/19/2023)

论文代码:https://github.com/mmurray/cvdn,58 star

项目主页:https://cvdn.dev/

Robots navigating in human environments should use language to ask forassistance and be able to understand human responses. To study this challenge,we introduce Cooperative Vision-and-Dialog Navigation, a dataset of over 2kembodied, human-human dialogs situated in simulated, photorealistic homeenvironments. The Navigator asks questions to their partner, the Oracle, whohas privileged access to the best next steps the Navigator should takeaccording to a shortest path planner. To train agents that search anenvironment for a goal location, we define the Navigation from Dialog Historytask. An agent, given a target object and a dialog history between humanscooperating to find that object, must infer navigation actions towards the goalin unexplored environments. We establish an initial, multi-modalsequence-to-sequence model and demonstrate that looking farther back in thedialog history improves performance. Sourcecode and a live interface demo can be found at https://cvdn.dev/

Talk the Walk

论文标题:Talk the Walk: Navigating New York City through Grounded Dialogue

论文作者:Harm de Vries, Kurt Shuster, Dhruv Batra, Devi Parikh, Jason Weston, Douwe Kiela

论文原文:https://arxiv.org/abs/1807.03367

论文出处:ICLR 2019

论文被引:132(11/19/2023)

论文代码:https://github.com/facebookresearch/talkthewalk,113 star

项目主页:–

We introduce “Talk The Walk”, the first large-scale dialogue dataset grounded in action and perception. The task involves two agents (a “guide” and a “tourist”) that communicate via natural language in order to achieve a common goal: having the tourist navigate to a given target location. The task and dataset, which are described in detail, are challenging and their full solution is an open problem that we pose to the community. We (i) focus on the task of tourist localization and develop the novel Masked Attention for Spatial Convolutions (MASC) mechanism that allows for grounding tourist utterances into the guide’s map, (ii) show it yields significant improvements for both emergent and natural language communication, and (iii) using this method, we establish non-trivial baselines on the full task.

EOR Datasets

REVERIE

论文标题:REVERIE: Remote Embodied Visual Referring Expression in Real Indoor Environments

论文作者:Yuankai Qi, Qi Wu, Peter Anderson, Xin Wang, William Yang Wang, Chunhua Shen, Anton van den Hengel

论文原文:https://arxiv.org/abs/1904.10151

论文出处:CVPR 2020

论文被引:205(11/19/2023)

论文代码:https://github.com/YuankaiQi/REVERIE,94 star

项目主页:https://yuankaiqi.github.io/REVERIE_Challenge/dataset.html

One of the long-term challenges of robotics is to enable robots to interact with humans in the visual world via natural language, as humans are visual animals that communicate through language. Overcoming this challenge requires the ability to perform a wide variety of complex tasks in response to multifarious instructions from humans. In the hope that it might drive progress towards more flexible and powerful human interactions with robots, we propose a dataset of varied and complex robot tasks, described in natural language, in terms of objects visible in a large set of real images. Given an instruction, success requires navigating through a previously-unseen environment to identify an object. This represents a practical challenge, but one that closely reflects one of the core visual problems in robotics. Several state-of-the-art vision-and-language navigation, and referring-expression models are tested to verify the difficulty of this new task, but none of them show promising results because there are many fundamental differences between our task and previous ones. A novel Interactive Navigator-Pointer model is also proposed that provides a strong baseline on the task. The proposed model especially achieves the best performance on the unseen test split, but still leaves substantial room for improvement compared to the human performance.

Touchdown

论文标题:Touchdown: Natural Language Navigation and Spatial Reasoning in Visual Street Environments

论文作者:Howard Chen, Alane Suhr, Dipendra Misra, Noah Snavely, Yoav Artzi

论文原文:https://arxiv.org/abs/1811.12354

论文出处:CVPR 2019

论文被引:312(11/19/2023)

论文代码:https://github.com/lil-lab/touchdown,83 star

项目主页:https://touchdown.ai/

We study the problem of jointly reasoning about language and vision through a navigation and spatial reasoning task. We introduce the Touchdown task and dataset, where an agent must first follow navigation instructions in a real-life visual urban environment, and then identify a location described in natural language to find a hidden object at the goal position. The data contains 9,326 examples of English instructions and spatial descriptions paired with demonstrations. Empirical analysis shows the data presents an open challenge to existing methods, and qualitative linguistic analysis shows that the data displays richer use of spatial reasoning compared to related resources.

EGM Datasets

CHAI

论文标题:Mapping Instructions to Actions in 3D Environments with Visual Goal Prediction

论文作者:Dipendra Misra, Andrew Bennett, Valts Blukis, Eyvind Niklasson, Max Shatkhin, Yoav Artzi

论文原文:https://arxiv.org/abs/1809.00786

论文出处:EMNLP 2018

论文被引:154(11/19/2023)

论文代码:https://github.com/lil-lab/ciff,30 star

项目主页:–

We propose to decompose instruction execution to goal prediction and action generation. We design a model that maps raw visual observations to goals using LINGUNET, a language-conditioned image generation network, and then generates the actions required to complete them. Our model is trained from demonstration only without external resources. To evaluate our approach, we introduce two benchmarks for instruction following: LANI, a navigation task; and CHAI, where an agent executes household instructions. Our evaluation demonstrates the advantages of our model decomposition, and illustrates the challenges posed by our new benchmarks.

ALFRED

论文标题:ALFRED: A Benchmark for Interpreting Grounded Instructions for Everyday Tasks

论文作者:Mohit Shridhar, Jesse Thomason, Daniel Gordon, Yonatan Bisk, Winson Han, Roozbeh Mottaghi, Luke Zettlemoyer, Dieter Fox

论文原文:https://arxiv.org/abs/1912.01734

论文出处:CVPR 2020

论文被引:489(11/19/2023)

论文代码:https://github.com/askforalfred/alfred,288 star

项目主页:https://askforalfred.com/

ArraMon

论文标题:ArraMon: A Joint Navigation-Assembly Instruction Interpretation Task in Dynamic Environments

论文作者:Hyounghun Kim, Abhay Zala, Graham Burri, Hao Tan, Mohit Bansal

论文原文:https://arxiv.org/abs/2011.07660

论文出处:EMNLP Findings 2020

论文被引:13(11/19/2023)

论文代码:https://github.com/hyounghk/ArraMon,4 star

项目主页:https://arramonunc.github.io/

For embodied agents, navigation is an important ability but not an isolated goal. Agents are also expected to perform specific tasks after reaching the target location, such as picking up objects and assembling them into a particular arrangement. We combine Vision-and-Language Navigation, assembling of collected objects, and object referring expression comprehension, to create a novel joint navigation-and-assembly task, named ArraMon. During this task, the agent (similar to a PokeMON GO player) is asked to find and collect different target objects one-by-one by navigating based on natural language instructions in a complex, realistic outdoor environment, but then also ARRAnge the collected objects part-by-part in an egocentric grid-layout environment. To support this task, we implement a 3D dynamic environment simulator and collect a dataset (in English; and also extended to Hindi) with human-written navigation and assembling instructions, and the corresponding ground truth trajectories. We also filter the collected instructions via a verification stage, leading to a total of 7.7K task instances (30.8K instructions and paths). We present results for several baseline models (integrated and biased) and metrics (nDTW, CTC, rPOD, and PTC), and the large model-human performance gap demonstrates that our task is challenging and presents a wide scope for future work. Our dataset, simulator, and code are publicly available at: this https URL

CerealBar

论文标题:Executing Instructions in Situated Collaborative Interactions

论文作者:Alane Suhr, Claudia Yan, Charlotte Schluger, Stanley Yu, Hadi Khader, Marwa Mouallem, Iris Zhang, Yoav Artzi

论文原文:https://arxiv.org/abs/1910.03655

论文出处:EMNLP 2019 long paper

论文被引:68(11/19/2023)

论文代码:https://github.com/lil-lab/cerealbar,26 star

项目主页:https://lil.nlp.cornell.edu/cerealbar/

We study a collaborative scenario where a user not only instructs a system to complete tasks, but also acts alongside it. This allows the user to adapt to the system abilities by changing their language or deciding to simply accomplish some tasks themselves, and requires the system to effectively recover from errors as the user strategically assigns it new goals. We build a game environment to study this scenario, and learn to map user instructions to system actions. We introduce a learning approach focused on recovery from cascading errors between instructions, and modeling methods to explicitly reason about instructions with multiple goals. We evaluate with a new evaluation protocol using recorded interactions and online games with human users, and observe how users adapt to the system abilities.

Benchmarks

TEACh

论文标题:TEACh: Task-driven Embodied Agents that Chat

论文作者:Aishwarya Padmakumar, Jesse Thomason, Ayush Shrivastava, Patrick Lange, Anjali Narayan-Chen, Spandana Gella, Robinson Piramuthu, Gokhan Tur, Dilek Hakkani-Tur

论文原文:https://arxiv.org/abs/2110.00534

论文出处:AAAI 2022

论文被引:92(11/19/2023)

论文代码:https://github.com/alexa/teach

项目主页:–

Robots operating in human spaces must be able to engage in natural language interaction with people, both understanding and executing instructions, and using conversation to resolve ambiguity and recover from mistakes. To study this, we introduce TEACh, a dataset of over 3,000 human–human, interactive dialogues to complete household tasks in simulation. A Commander with access to oracle information about a task communicates in natural language with a Follower. The Follower navigates through and interacts with the environment to complete tasks varying in complexity from “Make Coffee” to “Prepare Breakfast”, asking questions and getting additional information from the Commander. We propose three benchmarks using TEACh to study embodied intelligence challenges, and we evaluate initial models’ abilities in dialogue understanding, language grounding, and task execution.

在人类空间中运行的机器人必须能够与人进行自然语言交互,既能理解和执行指令,又能利用对话解决歧义并从错误中恢复过来。为了研究这一点,我们引入了 TEACh,这是一个包含 3,000 多条人与人互动对话的数据集,用于在模拟中完成家庭任务。指挥官可以获取任务的甲骨文信息,并与跟随者用自然语言进行交流。跟随者在环境中导航并与环境互动,完成从煮咖啡到准备早餐等复杂程度不同的任务,同时提出问题并从指挥官那里获得更多信息。我们利用 TEACh 提出了三个基准来研究体现智能的挑战,并评估了初始模型在对话理解、语言基础和任务执行方面的能力。

ObjectFolder

论文标题:ObjectFolder: A Dataset of Objects with Implicit Visual, Auditory, and Tactile Representations

论文作者:Ruohan Gao, Yen-Yu Chang, Shivani Mall, Li Fei-Fei, Jiajun Wu

论文原文:https://arxiv.org/abs/2109.07991

论文出处:CoRL 2021

论文被引:41(11/19/2023)

论文代码:https://github.com/rhgao/ObjectFolder,137 star

项目主页:https://ai.stanford.edu/~rhgao/objectfolder/

Multisensory object-centric perception, reasoning, and interaction have been a key research topic in recent years. However, the progress in these directions is limited by the small set of objects available – synthetic objects are not realistic enough and are mostly centered around geometry, while real object datasets such as YCB are often practically challenging and unstable to acquire due to international shipping, inventory, and financial cost. We present ObjectFolder, a dataset of 100 virtualized objects that addresses both challenges with two key innovations. First, ObjectFolder encodes the visual, auditory, and tactile sensory data for all objects, enabling a number of multisensory object recognition tasks, beyond existing datasets that focus purely on object geometry. Second, ObjectFolder employs a uniform, object-centric, and implicit representation for each object’s visual textures, acoustic simulations, and tactile readings, making the dataset flexible to use and easy to share. We demonstrate the usefulness of our dataset as a testbed for multisensory perception and control by evaluating it on a variety of benchmark tasks, including instance recognition, cross-sensory retrieval, 3D reconstruction, and robotic grasping.

ObjectFolder 2.0

论文标题:ObjectFolder 2.0: A Multisensory Object Dataset for Sim2Real Transfer

论文作者:Ruohan Gao, Zilin Si, Yen-Yu Chang, Samuel Clarke, Jeannette Bohg, Li Fei-Fei, Wenzhen Yuan, Jiajun Wu

论文原文:https://arxiv.org/abs/2204.02389

论文出处:CVPR 2022

论文被引:19(11/19/2023)

论文代码:https://github.com/rhgao/ObjectFolder,137 star

项目主页:https://ai.stanford.edu/~rhgao/objectfolder2.0/

Objects play a crucial role in our everyday activities. Though multisensory object-centric learning has shown great potential lately, the modeling of objects in prior work is rather unrealistic. ObjectFolder 1.0 is a recent dataset that introduces 100 virtualized objects with visual, acoustic, and tactile sensory data. However, the dataset is small in scale and the multisensory data is of limited quality, hampering generalization to real-world scenarios. We present ObjectFolder 2.0, a large-scale, multisensory dataset of common household objects in the form of implicit neural representations that significantly enhances ObjectFolder 1.0 in three aspects. First, our dataset is 10 times larger in the amount of objects and orders of magnitude faster in rendering time. Second, we significantly improve the multisensory rendering quality for all three modalities. Third, we show that models learned from virtual objects in our dataset successfully transfer to their real-world counterparts in three challenging tasks: object scale estimation, contact localization, and shape reconstruction. ObjectFolder 2.0 offers a new path and testbed for multisensory learning in computer vision and robotics. The dataset is available at this https URL.

BEHAVIOR 100

论文标题:BEHAVIOR: Benchmark for Everyday Household Activities in Virtual, Interactive, and Ecological Environments

论文作者:Sanjana Srivastava, Chengshu Li, Michael Lingelbach, Roberto Martín-Martín, Fei Xia, Kent Vainio, Zheng Lian, Cem Gokmen, Shyamal Buch, C. Karen Liu, Silvio Savarese, Hyowon Gweon, Jiajun Wu, Li Fei-Fei

论文原文:https://arxiv.org/abs/2108.03332

论文出处:CoRL 2021

论文被引:84(11/19/2023)

论文代码:–

项目主页:https://behavior.stanford.edu/

We introduce BEHAVIOR, a benchmark for embodied AI with 100 activities in simulation, spanning a range of everyday household chores such as cleaning, maintenance, and food preparation. These activities are designed to be realistic, diverse, and complex, aiming to reproduce the challenges that agents must face in the real world. Building such a benchmark poses three fundamental difficulties for each activity: definition (it can differ by time, place, or person), instantiation in a simulator, and evaluation. BEHAVIOR addresses these with three innovations. First, we propose an object-centric, predicate logic-based description language for expressing an activity’s initial and goal conditions, enabling generation of diverse instances for any activity. Second, we identify the simulator-agnostic features required by an underlying environment to support BEHAVIOR, and demonstrate its realization in one such simulator. Third, we introduce a set of metrics to measure task progress and efficiency, absolute and relative to human demonstrators. We include 500 human demonstrations in virtual reality (VR) to serve as the human ground truth. Our experiments demonstrate that even state of the art embodied AI solutions struggle with the level of realism, diversity, and complexity imposed by the activities in our benchmark. We make BEHAVIOR publicly available at this http URL to facilitate and calibrate the development of new embodied AI solutions.

BEHAVIOR-1K

论文标题:BEHAVIOR-1K: A Benchmark for Embodied AI with 1,000 Everyday Activities and Realistic Simulation

论文作者:Chengshu Li, Ruohan Zhang, Josiah Wong, Cem Gokmen, Sanjana Srivastava, Roberto Martín-Martín, Chen Wang, Gabrael Levine, Michael Lingelbach, Jiankai Sun, Mona Anvari, Minjune Hwang, Manasi Sharma, Arman Aydin, Dhruva Bansal, Samuel Hunter, Kyu-Young Kim, Alan Lou, Caleb R Matthews, Ivan Villa-Renteria, Jerry Huayang Tang, Claire Tang, Fei Xia, Silvio Savarese, Hyowon Gweon, Karen Liu, Jiajun Wu, Li Fei-Fei

论文原文:https://openreview.net/pdf?id=_8DoIe8G3t

论文出处:CoRL 2022 Oral

论文被引:43(11/19/2023)

论文代码:–

项目主页:https://behavior.stanford.edu/behavior-1k

Keywords: Embodied AI Benchmark, Everyday Activities, Mobile Manipulation

TL;DR: BEHAVIOR-1K is a novel human-centric benchmark for Embodied AI in simulation with 1000 everyday activities, a diverse dataset of 5,000+ objects and 50 scenes, and a simulation environment, OmniGibson, that reaches high levels of simulation realism.

Abstract: We present BEHAVIOR-1K, a comprehensive simulation benchmark for human-centered robotics. BEHAVIOR-1K includes two components, guided and motivated by the results of an extensive survey on “what do you want robots to do for you?”. The first is the definition of 1,000 everyday activities, grounded in 50 scenes (houses, gardens, restaurants, offices, etc.) with more than 5,000 objects annotated with rich physical and semantic properties. The second is OmniGibson, a novel simulation environment that supports these activities via realistic physics simulation and rendering of rigid bodies, deformable bodies, and liquids. Our experiments indicate that the activities in BEHAVIOR-1K are long-horizon and dependent on complex manipulation skills, both of which remain a challenge for even state-of-the-art robot learning solutions. To calibrate the simulation-to-reality gap of BEHAVIOR-1K, we provide an initial study on transferring solutions learned with a mobile manipulator in a simulated apartment to its real-world counterpart. We hope that BEHAVIOR-1K’s human-grounded nature, diversity, and realism make it valuable for embodied AI and robot learning research. Project website: https://behavior.stanford.edu.

Mini-BEHAVIOR

论文标题:Mini-BEHAVIOR: A Procedurally Generated Benchmark for Long-horizon Decision-Making in Embodied AI

论文作者: Emily Jin, Jiaheng Hu, Zhuoyi Huang, Ruohan Zhang, Jiajun Wu, Li Fei-Fei, Roberto Martín-Martín

论文原文:https://arxiv.org/abs/2310.01824v1

论文出处:–

论文被引:–(11/19/2023)

论文代码:https://github.com/StanfordVL/mini_behavior,18 star

项目主页:–

We present Mini-BEHAVIOR, a novel benchmark for embodied AI that challenges agents to use reasoning and decision-making skills to solve complex activities that resemble everyday human challenges. The Mini-BEHAVIOR environment is a fast, realistic Gridworld environment that offers the benefits of rapid prototyping and ease of use while preserving a symbolic level of physical realism and complexity found in complex embodied AI benchmarks. We introduce key features such as procedural generation, to enable the creation of countless task variations and support open-ended learning. Mini-BEHAVIOR provides implementations of various household tasks from the original BEHAVIOR benchmark, along with starter code for data collection and reinforcement learning agent training. In essence, Mini-BEHAVIOR offers a fast, open-ended benchmark for evaluating decision-making and planning solutions in embodied AI. It serves as a user-friendly entry point for research and facilitates the evaluation and development of solutions, simplifying their assessment and development while advancing the field of embodied AI. Code is publicly available at https://github.com/StanfordVL/mini_behavior.

我们介绍的 Mini-BEHAVIOR 是一种新颖的具身人工智能基准,它挑战智能体使用推理和决策技能来解决类似于人类日常挑战的复杂活动。Mini-BEHAVIOR 环境是一个快速、逼真的网格世界环境,具有快速原型设计和易于使用的优点,同时还保留了复杂的具身人工智能基准中的物理逼真度和复杂性的象征性水平。我们引入了程序生成等关键功能,从而能够创建无数的任务变体,并支持开放式学习。Mini-BEHAVIOR 提供了原始 BEHAVIOR 基准中各种家庭任务的实现,以及用于数据收集和强化学习智能体训练的启动代码。从本质上讲,Mini-BEHAVIOR 提供了一个快速、开放式的基准,用于评估具身人工智能的决策和规划解决方案。它是一个用户友好型的研究切入点,有助于评估和开发解决方案,简化评估和开发工作,同时推动具身人工智能领域的发展。代码可通过 https://github.com/StanfordVL/mini_behavior 公开获取。

Alexa Arena

论文标题:Alexa Arena: A User-Centric Interactive Platform for Embodied AI

论文作者:Qiaozi Gao, Govind Thattai, Suhaila Shakiah, Xiaofeng Gao, Shreyas Pansare, Vasu Sharma, Gaurav Sukhatme, Hangjie Shi, Bofei Yang, Desheng Zheng, Lucy Hu, Karthika Arumugam, Shui Hu, Matthew Wen, Dinakar Guthy, Cadence Chung, Rohan Khanna, Osman Ipek, Leslie Ball, Kate Bland, Heather Rocker, Yadunandana Rao, Michael Johnston, Reza Ghanadan, Arindam Mandal, Dilek Hakkani Tur, Prem Natarajan

论文原文:https://arxiv.org/abs/2303.01586

论文出处:NIPS 2023

论文被引:14(11/19/2023)

论文代码:https://github.com/amazon-science/alexa-arena,75 star

项目主页:–

We introduce Alexa Arena, a user-centric simulation platform for Embodied AI (EAI) research. Alexa Arena provides a variety of multi-room layouts and interactable objects, for the creation of human-robot interaction (HRI) missions. With user-friendly graphics and control mechanisms, Alexa Arena supports the development of gamified robotic tasks readily accessible to general human users, thus opening a new venue for high-efficiency HRI data collection and EAI system evaluation. Along with the platform, we introduce a dialog-enabled instruction-following benchmark and provide baseline results for it. We make Alexa Arena publicly available to facilitate research in building generalizable and assistive embodied agents.

Open X-Embodiment

论文标题:Open X-Embodiment: Robotic Learning Datasets and RT-X Models

论文作者:Quan Vuong, Sergey Levine, Homer Rich Walke, et.al.

论文原文:https://openreview.net/forum?id=zraBtFgxT0

论文出处:CoRL 2023 Workshop TGR Oral

论文被引:–(11/19/2023)

论文代码:–

项目主页:https://robotics-transformer-x.github.io/

Keywords: robotics, robots, robotics research, robot learning, future of robotics, robotic control, cross-embodiment

TL;DR: Scaling up robot learning across many embodiments

Abstract: Large, high-capacity models trained on diverse datasets have shown remarkable successes on efficiently tackling downstream applications. In domains from NLP to Computer Vision, this has led to a consolidation of pretrained models, with general pretrained backbones serving as a starting point for many applications. Can such a consolidation happen in robotics? Conventionally, robotic learning methods train a separate model for every application, every robot, and even every environment. Can we instead train ``generalist’’ X-robot policy that can be adapted efficiently to new robots, tasks, and environments? In this paper, we provide datasets in standardized data formats and models to make it possible to explore this possibility in the context of robotic manipulation, alongside experimental results that provide an example of effective X-robot policies. We assemble a dataset from 22 different robots collected through a collaboration between 21 institutions, demonstrating 527 skills (160266 tasks). We show that a high-capacity model trained on this data, which we call RT-X, exhibits positive transfer and improves the capabilities of multiple robots by leveraging experience from other platforms.

CortexBench

论文标题:Where are we in the search for an Artificial Visual Cortex for Embodied Intelligence?

论文作者:Arjun Majumdar, Karmesh Yadav, Sergio Arnaud, Yecheng Jason Ma, Claire Chen, Sneha Silwal, Aryan Jain, Vincent-Pierre Berges, Pieter Abbeel, Jitendra Malik, Dhruv Batra, Yixin Lin, Oleksandr Maksymets, Aravind Rajeswaran, Franziska Meier

论文原文:https://arxiv.org/abs/2303.18240

论文出处:RRL 2023 Spotlight

论文被引:27(11/19/2023)

论文代码:https://github.com/facebookresearch/eai-vc,380 star

项目主页:https://eai-vc.github.io/

Keywords: representation learning, pre-training, foundation models, embodied AI, reinforcement learning

TL;DR: We present the largest and most comprehensive empirical study of visual foundation models for Embodied AI (EAI).

Abstract: We present the largest and most comprehensive empirical study of visual foundation models for Embodied AI (EAI). First, we curate CORTEXBENCH, consisting of 17 different EAI tasks spanning locomotion, navigation, dexterous and mobile manipulation. Next, we systematically evaluate existing visual foundation models and find that none is universally dominant. To study the effect of pre-training data scale and diversity, we combine ImageNet with over 4,000 hours of egocentric videos from 7 different sources (over 5.6M images) and train different sized vision transformers using Masked Auto-Encoding (MAE) on slices of this data. These models required over 10,000 GPU-hours to train and will be open-sourced to the community. We find that scaling dataset size and diversity does not improve performance across all tasks but does so on average. Finally, we show that adding a second pre-training step on a small in-domain dataset improves performance, matching or outperforming the best known results in this setting.

我们介绍了针对具身人工智能(EAI)的视觉基础模型进行的规模最大、最全面的实证研究。首先,我们整理了 CORTEXBENCH,其中包括 17 种不同的 EAI 任务,涵盖运动、导航、灵巧和移动操作。接下来,我们对现有的视觉基础模型进行了系统评估,发现没有一个模型具有普遍优势。为了研究预训练数据规模和多样性的影响,我们将 ImageNet 与来自 7 个不同来源的超过 4000 小时的以自我为中心的视频(超过 560 万张图像)结合起来,并在这些数据的切片上使用 MAE 训练不同大小的 Vision Transformer。这些模型的训练需要超过 10,000 个 GPU 小时,并将向社区开源。我们发现,扩大数据集的规模和多样性并不能提高所有任务的性能,但平均而言可以。最后,我们表明,在小型域内数据集上增加第二个预训练步骤可以提高性能,与该环境中已知的最佳结果相当或更优。