分布式集群下数据可见性问题分析记录

1. 问题背景介绍

AntDB 3.1在做benchmarksql压测时,一直有个core down 问题。起初一直以为是触发器问题,因触发器的逻辑不太熟悉,一直先紧着别的问题修正。最近定位这个问题时,根据core文件的堆栈信息发现,是foreign key检查的问题。

可通过如下脚本复现该问题。

1-1. 集群搭建

为了简化测试环境,排除其他干扰项,此处搭建一个1C1D的AntDB集群。

postgres=# table pgxc_node;

node_name | node_type | node_port | node_host | nodeis_primary | nodeis_preferred | node_id

-----------+-----------+-----------+---------------+----------------+------------------+-----------

cd2 | C | 38000 | 169.254.3.127 | f | f | 455674804

dn1 | D | 39001 | 169.254.3.127 | f | f | -560021589

(2 rows)

1-2. 建表SQL

创建复制表test, testa,其中test(sid)外键关联testa的id字段。

drop table if exists testa, test;

create table testa(id serial primary key, sex varchar(2)) DISTRIBUTE BY REPLICATION;

insert into testa(sex) values('m');

create table test(id serial primary key, name varchar(20), sid integer references testa on delete cascade) DISTRIBUTE BY REPLICATION;

1-2. 测试脚本

#!/bin/bash

for((i=0;i<=1000;i++));

do

psql -X -p 38000 -U postgres <<EOF

begin;

insert into testa(sex) values('m');

update testa set sex = 'f' where id = 1 returning *;

insert into test(name, sid) values('zl', 1) returning *;

end;

EOF

done

1-3. 测试方法

后台同时运行2次以上脚本,一段时间(很快,约1min)后即可在dn1上产生core文件。

[dev@CentOS-7 ~/workspace_adb31]$ sh test.sh >test.result 2>&1 &

[1] 27726

[dev@CentOS-7 ~/workspace_adb31]$ sh test.sh >test.result 2>&1 &

[2] 27748

[dev@CentOS-7 ~/workspace_adb31]$ sh test.sh >test.result 2>&1 &

[3] 29055

1-4. core 文件堆栈

[dev@CentOS-7 ~/workspace_adb31]$ gdb postgres /data/dev/adb31/dn/dn1/core.22968

(gdb) bt

#0 0x00007fe831fd71f7 in raise () from /lib64/libc.so.6

#1 0x00007fe831fd88e8 in abort () from /lib64/libc.so.6

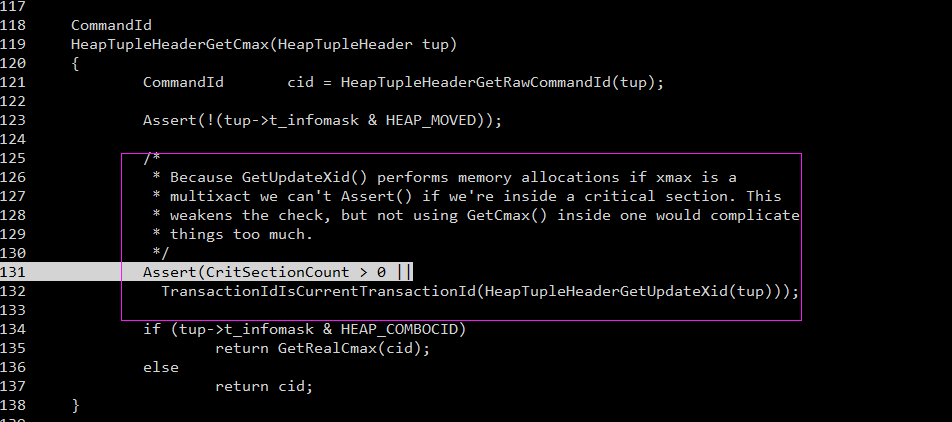

#2 0x0000000000aa730d in ExceptionalCondition (conditionName=0xd41db0 "!(CritSectionCount > 0 || TransactionIdIsCurrentTransactionId(( (!((tup)->t_infomask & 0x0800) && ((tup)->t_infomask & 0x1000) && !((tup)->t_infomask & 0x0080)) ? HeapTupleGetUpdateXid(tup) : ( (tup)-"..., errorType=0xd41cc4 "FailedAssertion", fileName=0xd41c80 "/home/dev/workspace_adb31/adb_sql/src/backend/utils/time/combocid.c", lineNumber=132) at /home/dev/workspace_adb31/adb_sql/src/backend/utils/error/assert.c:54

#3 0x0000000000af1cad in HeapTupleHeaderGetCmax (tup=0x7fe819dfd360) at /home/dev/workspace_adb31/adb_sql/src/backend/utils/time/combocid.c:131

#4 0x00000000004de247 in heap_lock_tuple (relation=0x7fe833bf3aa0, tuple=0x7fff69b0dc10, cid=3, mode=LockTupleKeyShare, wait_policy=LockWaitBlock, follow_updates=1 '\001', buffer=0x7fff69b0dc58, hufd=0x7fff69b0dc40) at /home/dev/workspace_adb31/adb_sql/src/backend/access/heap/heapam.c:5100

#5 0x00000000006ebe94 in ExecLockRows (node=0x1fe9950) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/nodeLockRows.c:179

#6 0x00000000006c8577 in ExecProcNode (node=0x1fe9950) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/execProcnode.c:585

#7 0x00000000006c4436 in ExecutePlan (estate=0x1fe9748, planstate=0x1fe9950, use_parallel_mode=0 '\000', operation=CMD_SELECT, sendTuples=1 '\001', numberTuples=1, direction=ForwardScanDirection, dest=0x101de40 <spi_printtupDR>) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/execMain.c:1624

#8 0x00000000006c2379 in standard_ExecutorRun (queryDesc=0x1fe7798, direction=ForwardScanDirection, count=1) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/execMain.c:352

#9 0x00000000006c21e8 in ExecutorRun (queryDesc=0x1fe7798, direction=ForwardScanDirection, count=1) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/execMain.c:297

#10 0x000000000070a5a5 in _SPI_pquery (queryDesc=0x1fe7798, fire_triggers=0 '\000', tcount=1) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/spi.c:2499

#11 0x0000000000709ffd in _SPI_execute_plan (plan=0x1fe9338, paramLI=0x1fe7328, snapshot=0x0, crosscheck_snapshot=0x0, read_only=0 '\000', fire_triggers=0 '\000', tcount=1) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/spi.c:2272

#12 0x0000000000706d29 in SPI_execute_snapshot (plan=0x1fe9338, Values=0x7fff69b0e0d0, Nulls=0x7fff69b0e090 " \340\260i", snapshot=0x0, crosscheck_snapshot=0x0, read_only=0 '\000', fire_triggers=0 '\000', tcount=1) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/spi.c:536

#13 0x0000000000a207d0 in ri_PerformCheck (riinfo=0x1fd1938, qkey=0x7fff69b0e590, qplan=0x1fe9338, fk_rel=0x7fe833bf64c8, pk_rel=0x7fe833bf3aa0, old_tuple=0x0, new_tuple=0x7fff69b0ebd0, detectNewRows=0 '\000', expect_OK=5) at /home/dev/workspace_adb31/adb_sql/src/backend/utils/adt/ri_triggers.c:3153

#14 0x0000000000a1b71a in RI_FKey_check (trigdata=0x7fff69b0ebf0) at /home/dev/workspace_adb31/adb_sql/src/backend/utils/adt/ri_triggers.c:457

#15 0x0000000000a1b7bd in RI_FKey_check_ins (fcinfo=0x7fff69b0e770) at /home/dev/workspace_adb31/adb_sql/src/backend/utils/adt/ri_triggers.c:489

#16 0x000000000069e377 in ExecCallTriggerFunc (trigdata=0x7fff69b0ebf0, tgindx=0, finfo=0x1fe3730, instr=0x0, per_tuple_context=0x1f93e00) at /home/dev/workspace_adb31/adb_sql/src/backend/commands/trigger.c:1929

#17 0x00000000006a181b in AfterTriggerExecute (event=0x1fe5338, rel=0x7fe833bf64c8, trigdesc=0x1fe3570, finfo=0x1fe3730, instr=0x0, per_tuple_context=0x1f93e00, trig_tuple_slot1=0x0, trig_tuple_slot2=0x0) at /home/dev/workspace_adb31/adb_sql/src/backend/commands/trigger.c:4055

#18 0x00000000006a1cb9 in afterTriggerInvokeEvents (events=0x1fdf9e8, firing_id=1, estate=0x1fe3308, delete_ok=1 '\001') at /home/dev/workspace_adb31/adb_sql/src/backend/commands/trigger.c:4262

#19 0x00000000006a213b in AfterTriggerEndQuery (estate=0x1fe3308) at /home/dev/workspace_adb31/adb_sql/src/backend/commands/trigger.c:4481

#20 0x00000000006c2526 in standard_ExecutorFinish (queryDesc=0x1fd23f0) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/execMain.c:425

#21 0x00000000006c241d in ExecutorFinish (queryDesc=0x1fd23f0) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/execMain.c:393

#22 0x000000000094630a in ProcessQuery (plan=0x1fb6728, sourceText=0x1fb75d8 "INSERT INTO public.test AS test (id, name, sid) VALUES ($1, $2, $3) RETURNING id, name, sid", params=0x1fd2280, dest=0x1fd2358, completionTag=0x7fff69b0eed0 "INSERT 0 1") at /home/dev/workspace_adb31/adb_sql/src/backend/tcop/pquery.c:237

#23 0x0000000000947c69 in PortalRunMulti (portal=0x1fadd88, isTopLevel=1 '\001', setHoldSnapshot=1 '\001', dest=0x1fd2358, altdest=0x101ddc0 <donothingDR>, completionTag=0x7fff69b0eed0 "INSERT 0 1") at /home/dev/workspace_adb31/adb_sql/src/backend/tcop/pquery.c:1408

#24 0x0000000000947750 in FillPortalStore (portal=0x1fadd88, isTopLevel=1 '\001') at /home/dev/workspace_adb31/adb_sql/src/backend/tcop/pquery.c:1139

#25 0x000000000094705c in PortalRun (portal=0x1fadd88, count=9223372036854775807, isTopLevel=1 '\001', dest=0x1f79aa8, altdest=0x1f79aa8, completionTag=0x7fff69b0f0e0 "") at /home/dev/workspace_adb31/adb_sql/src/backend/tcop/pquery.c:850

#26 0x0000000000942012 in exec_execute_message (portal_name=0x1f79278 "", max_rows=9223372036854775807) at /home/dev/workspace_adb31/adb_sql/src/backend/tcop/postgres.c:2459

#27 0x0000000000945004 in PostgresMain (argc=1, argv=0x1ef8720, dbname=0x1ef8618 "postgres", username=0x1ef85f0 "postgres") at /home/dev/workspace_adb31/adb_sql/src/backend/tcop/postgres.c:4844

#28 0x00000000008b0548 in BackendRun (port=0x1ef1940) at /home/dev/workspace_adb31/adb_sql/src/backend/postmaster/postmaster.c:4633

#29 0x00000000008afcbb in BackendStartup (port=0x1ef1940) at /home/dev/workspace_adb31/adb_sql/src/backend/postmaster/postmaster.c:4307

#30 0x00000000008abf96 in ServerLoop () at /home/dev/workspace_adb31/adb_sql/src/backend/postmaster/postmaster.c:1847

#31 0x00000000008ab5bf in PostmasterMain (argc=4, argv=0x1ec9ba0) at /home/dev/workspace_adb31/adb_sql/src/backend/postmaster/postmaster.c:1455

#32 0x0000000000749259 in main (argc=4, argv=0x1ec9ba0) at /home/dev/workspace_adb31/adb_sql/src/backend/main/main.c:232

1-5. core文件分析

1-5-1. 外键检查执行SQL

SELECT 1 FROM ONLY "public"."testa" x WHERE "id" OPERATOR(pg_catalog.=) 1 FOR KEY SHARE OF x

1-5-2. 外键检查执行Plan

外键检查的执行计划是ExecLockRows,即先从testa中查出id=1的记录,然后再尝试加Key Share锁。

(gdb) pn planstate

$7 = {ps = {type = T_LockRowsState, plan = 0x1fd5fa0, state = 0x1fe9748, instrument = 0x0, worker_instrument = 0x0, list_cluster_instrument = 0x0, targetlist = 0x0, qual = 0x0, lefttree = 0x1fe9be0, righttree = 0x0, initPlan = 0x0, subPlan = 0x0, chgParam = 0x0, ps_ResultTupleSlot = 0x1fe9a68, ps_ExprContext = 0x0, ps_ProjInfo = 0x0, rownum = 1, rownum_marked = 0, ps_TupFromTlist = 0 '\000'}, lr_arowMarks = 0x1fedac8, lr_epqstate = {estate = 0x0, planstate = 0x0, origslot = 0x0, plan = 0x1fd62b8, arowMarks = 0x0, epqParam = 0}, lr_curtuples = 0x1feb2c8, lr_ntables = 1}

1-5-3. 行记录可见性分析

1-5-3-1. 外键记录查询可见性

(gdb) f 5

#5 0x00000000006ebe94 in ExecLockRows (node=0x1fe9950) at /home/dev/workspace_adb31/adb_sql/src/backend/executor/nodeLockRows.c:179

179 test = heap_lock_tuple(erm->relation, &tuple,

(gdb) p tuple->t_self

$3 = {ip_blkid = {bi_hi = 0, bi_lo = 1}, ip_posid = 34}

(gdb) p *tuple->t_data

$4 = {t_choice = {t_heap = {t_xmin = 17543, t_xmax = 17544, t_field3 = {t_cid = 1, t_xvac = 1}}, t_datum = {datum_len_ = 17543, datum_typmod = 17544, datum_typeid = 1}}, t_ctid = {ip_blkid = {bi_hi = 0, bi_lo = 1}, ip_posid = 38}, t_infomask2 = 49154, t_infomask = 9474, t_hoff = 24 '\030', t_bits = 0x7fe819dfd377 ""}

(gdb) p/x 9474

$5 = 0x2502

(gdb) p *estate->es_snapshot

$8 = {satisfies = 0xaf398f <HeapTupleSatisfiesMVCC>, xmin = 17544, xmax = 17547, xip = 0x1fdfc98, xcnt = 1, subxip = 0x0, subxcnt = 0, suboverflowed = 0 '\000', takenDuringRecovery = 0 '\000', copied = 1 '\001', curcid = 3, speculativeToken = 0, active_count = 1, regd_count = 2, ph_node = {first_child = 0x0, next_sibling = 0x1fdf990, prev_or_parent = 0x1fdf410}, whenTaken = 0, lsn = 0, max_xcnt = 2144}

(gdb) p *estate->es_snapshot ->xip@1

$9 = {17544}

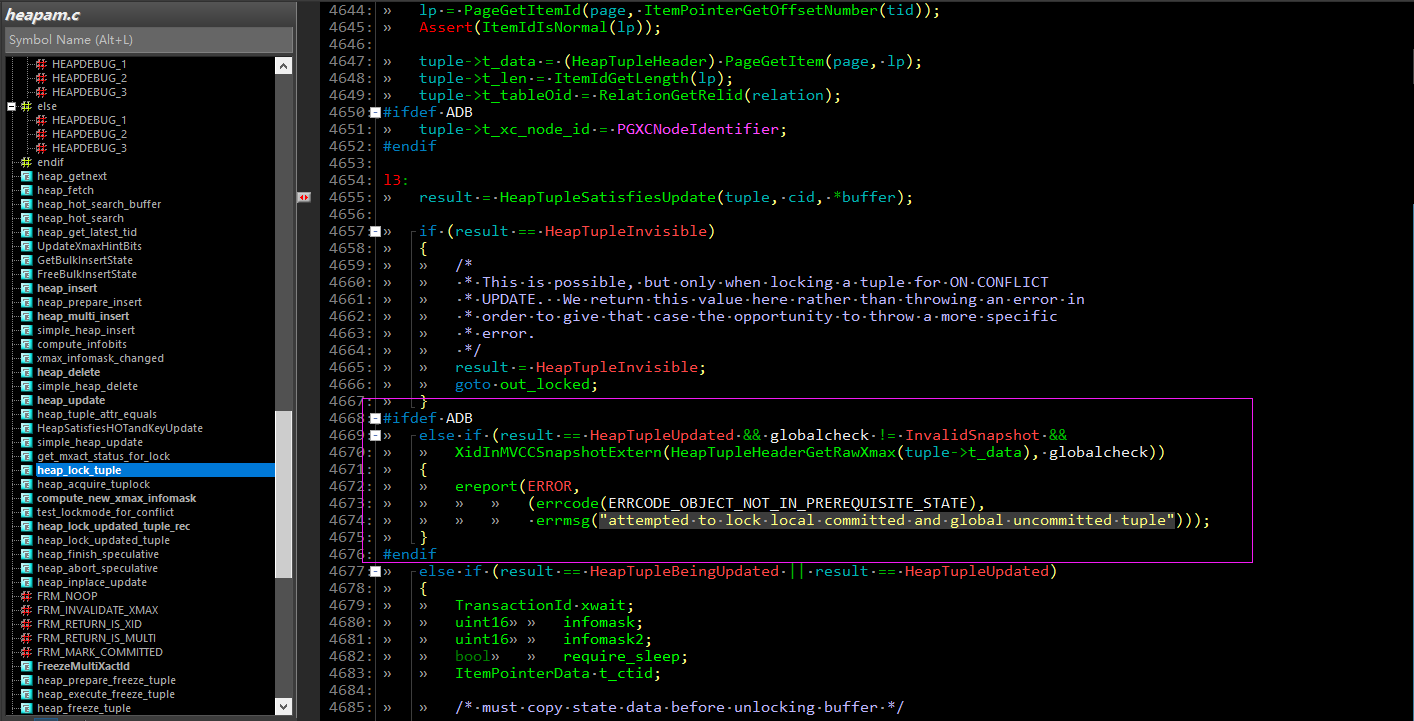

查看栈帧5,查询(即SELECT 1 FROM ONLY "public"."testa" x WHERE "id" OPERATOR(pg_catalog.=) 1)所返回的tuple。可以看出,该记录为事务17544更新时的old tuple,且已经本地提交(infomask包含HEAP_XMAX_COMMITTED),而此时的全局snapshot,显示17544还处于活跃事务列表中,即此时,查询所得的记录属于local committed but global uncommitted的记录。即站在global snapshot的角度,这条记录是可见的。这点本无可厚非,但错就错在后续检查该记录的update可见性时,是站在本地procarray和clog的角度,而不是参照global snapshot。

1-5-3-2. 外键记录更新可见性

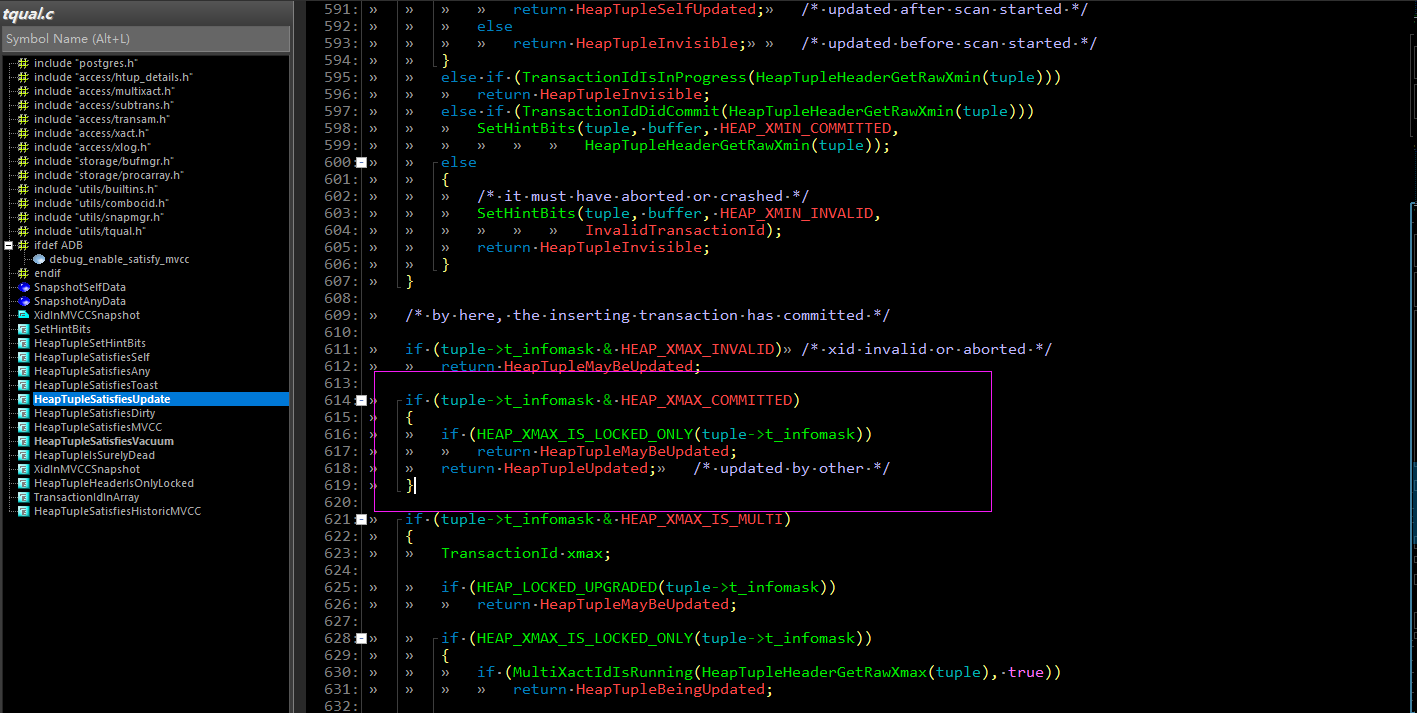

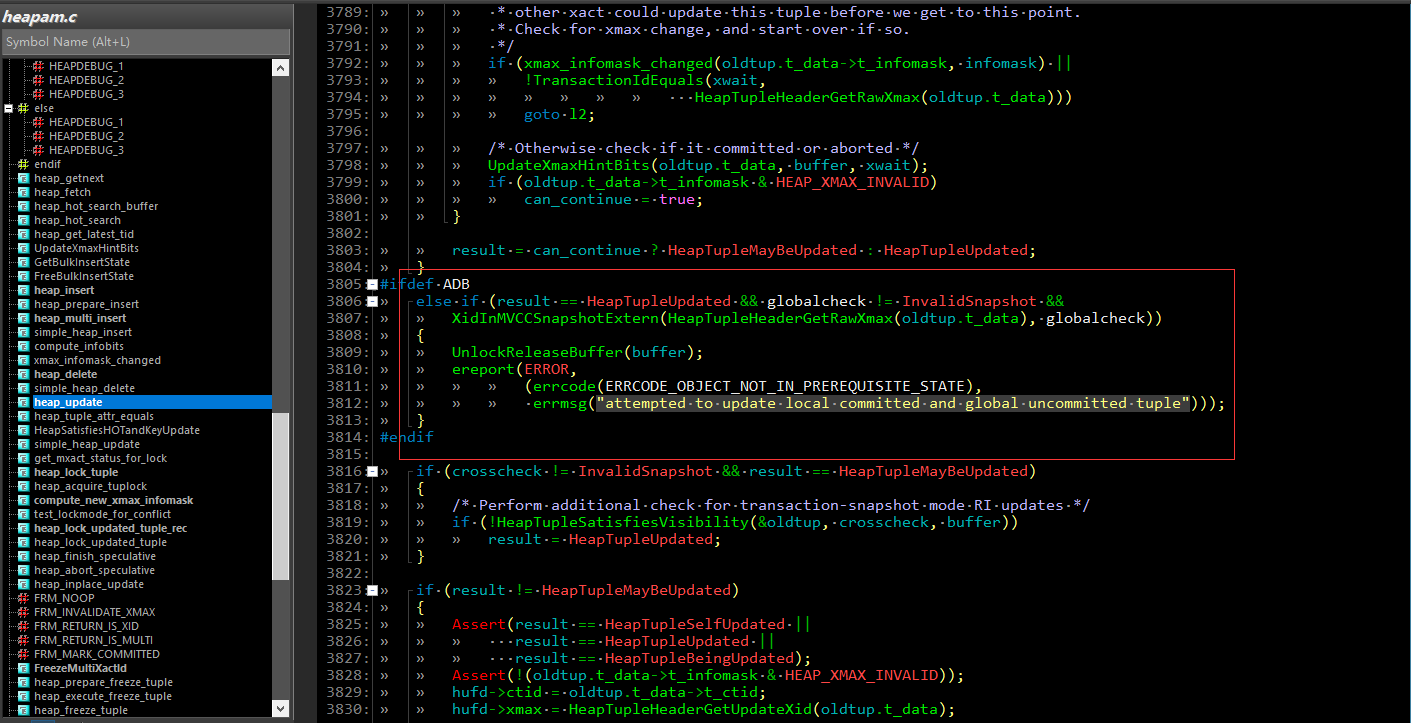

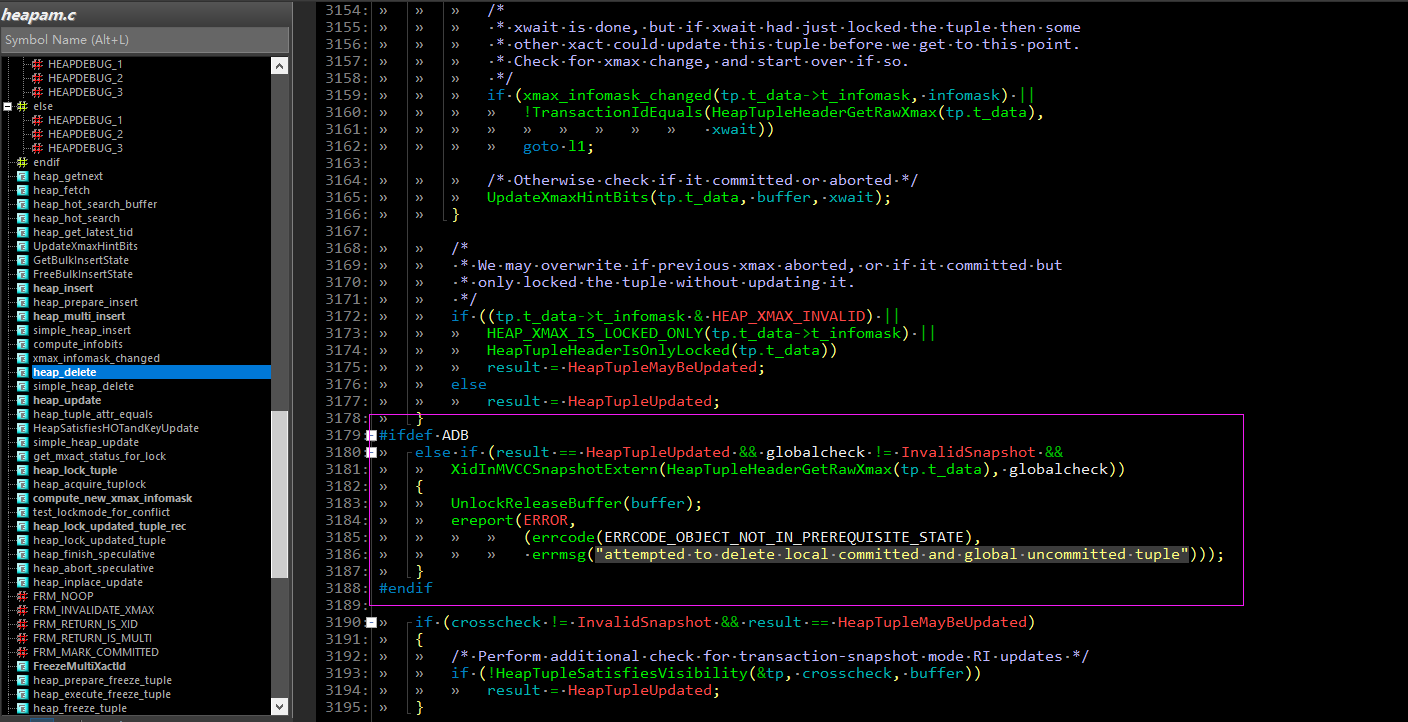

根据HeapTupleSatisfiesUpdate代码,可以判定该记录的更新可见性为HeapTupleUpdated,即该记录是被别人更新的老记录。

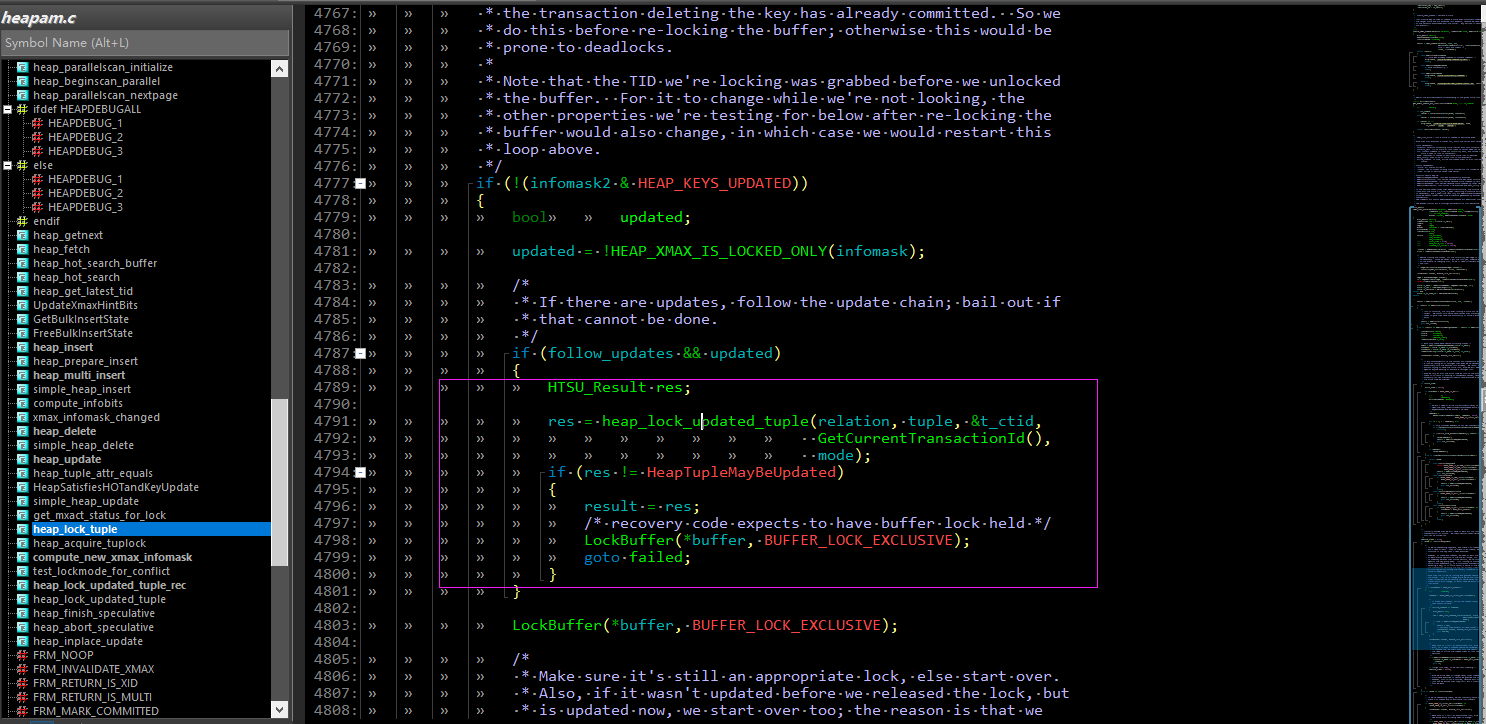

根据heap_lock_tuple的逻辑,当该记录的更新可见性为HeapTupleUpdated时,尝试对更新链的下一版本的记录加锁。

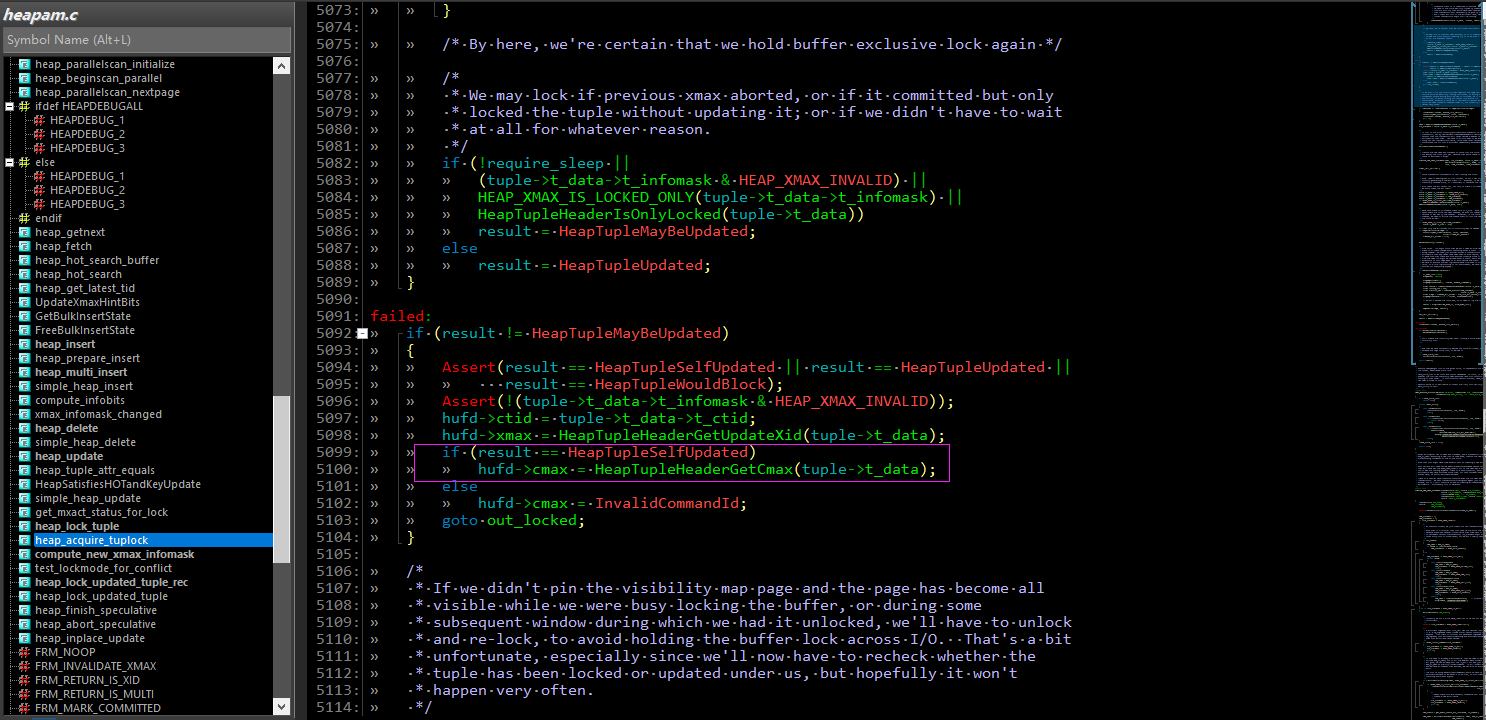

但下一个版本的记录的更新可见性为HeapTupleSelfUpdated,这就捂脸了,后续的代码断言与此处逻辑矛盾。

这也是core down的位置。

2. 问题引申

鉴于AntDB全局事务,全局snapshot的设计方案:集群节点先提交事务,AGTM最后提交事务。

以上述集群1C1D为例,一次事务提交,按照如下顺序提交:

- cd2 commit cn节点执行一阶段提交

- dn1 prepare 其他节点执行两阶段prepare

- agtm prepare

- dn1 commit prepared 'xxx' 其他节点执行两阶段commit

- agtm commit prepared 'xxx'

配合上述定位分析,这条local committed but global uncommitted的记录可能是造成问题的元凶,那么,便构造一些场景,分析下这种情况下会产生什么样的结果。

2-1. 异常环境构造

2-1-1. 直接查询看到global uncommited的记录

- 创建表t,插入一条记录ctid=(0,1)。

postgres=# create table t(id int, value int);

CREATE TABLE

postgres=# insert into t values(1,1);

INSERT 0 1

postgres=# select ctid,xmin,xmax,infomask,pg_explain_infomask(infomask),* from t;

ctid | xmin | xmax | infomask | pg_explain_infomask | id | value

-------+-------+------+----------+-----------------------------------------+----+-------

(0,1) | 17555 | 0 | 2304 | HEAP_XMIN_COMMITTED | HEAP_XMAX_INVALID | 1 | 1

(1 row)

- 会话1显式开启事务,更新记录,查询到新记录ctid=(0,2)。

postgres=# begin;

BEGIN

postgres=# update t set value = 99 where id = 1;

UPDATE 1

postgres=# select ctid,xmin,xmax,infomask,pg_explain_infomask(infomask),* from t;

ctid | xmin | xmax | infomask | pg_explain_infomask | id | value

-------+-------+------+----------+----------------------------------+----+-------

(0,2) | 17556 | 0 | 10240 | HEAP_XMAX_INVALID | HEAP_UPDATED | 1 | 99

(1 row)

postgres=# end;

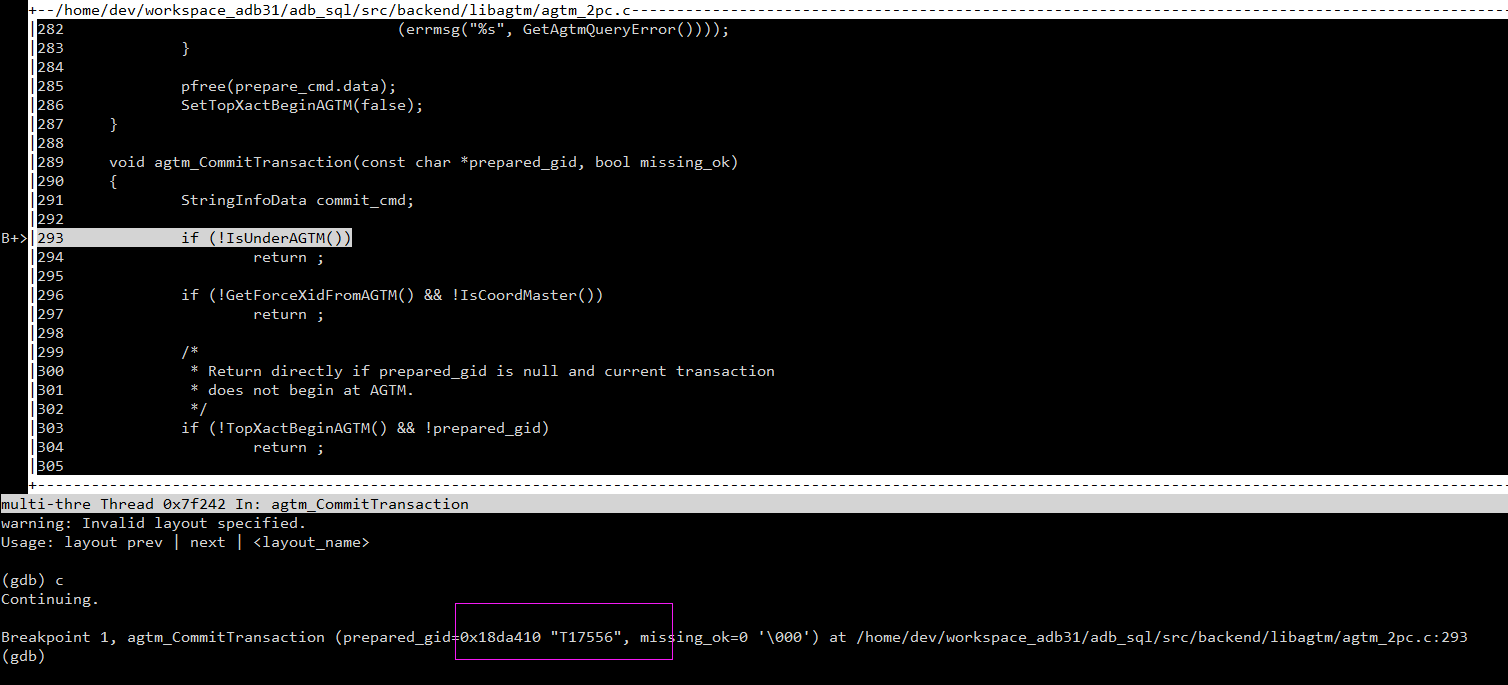

- 会话1在agtm_CommitTransaction打断点,阻止AGTM提交事务,构造出两条local committed but global uncommitted的记录,即ctid=(0,1), (0,2)的记录。

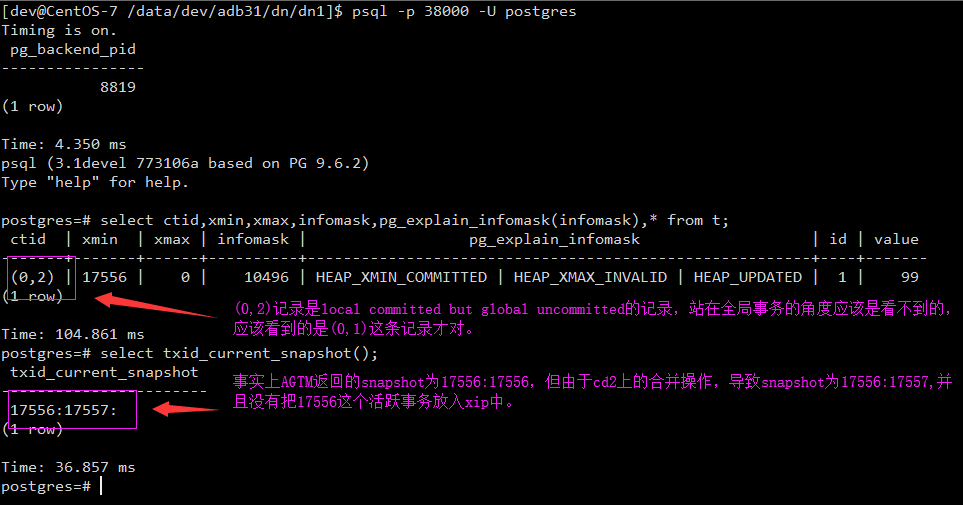

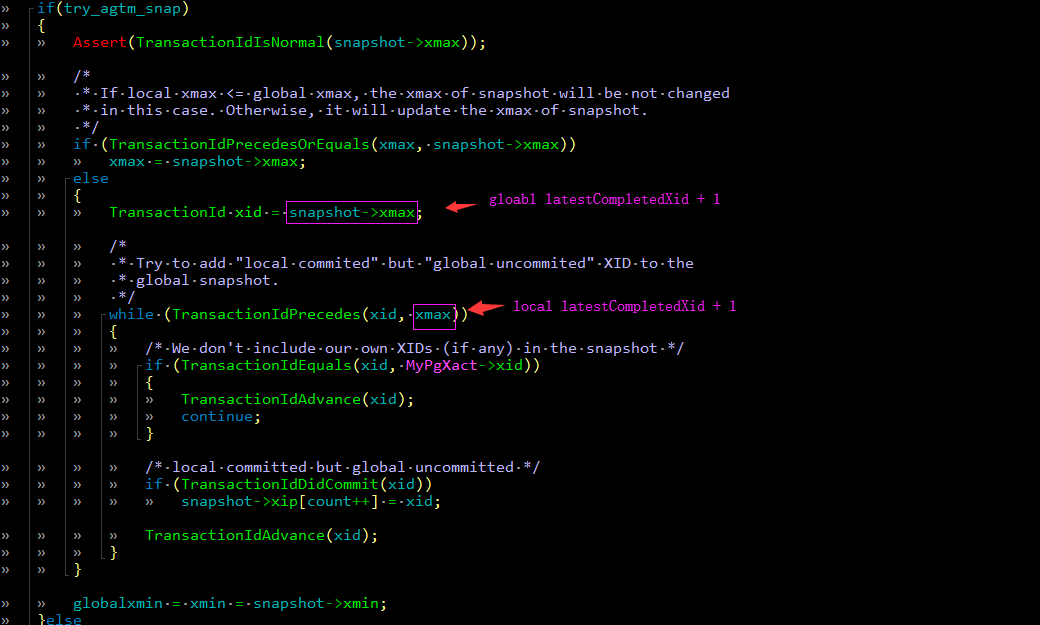

- 会话2执行查询,看到了global uncommitted 的记录。此时,AGTM上17556事务并没有提交,但是看当前snapshot会发现,17556并没有在活跃事务列表中。通过查看代码,发现AGTM返回的global snapshot没有问题,问题出在cd2节点收到global snapshot后,与local snapshot做了合并操作(至于为什么这样做,属于历史遗留问题),合并的过程中,因本地已经提交了17556事务,所以新产生的mixed snapshot->xmax = 17557,并且17556这个活跃的事务就被cd2给丢掉了(这应该是个bug)。

2-1-2. 更新后再查询看到global uncommitted的记录

- 步骤同上。

- 步骤同上。

- 步骤同上。

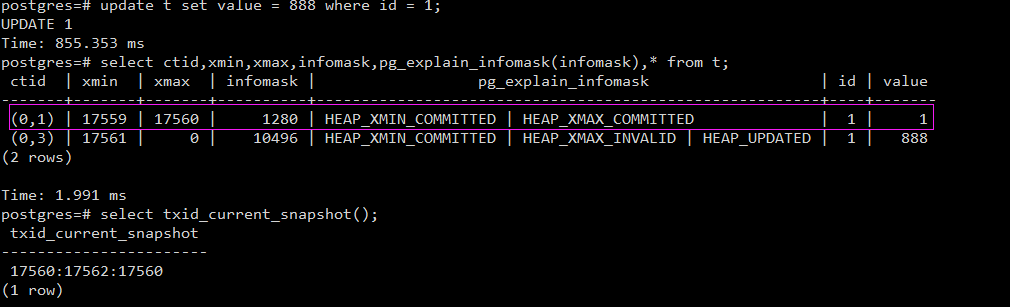

- 会话2执行更新后,再查询,同样看到了global uncommitted的记录ctid=(0,1)。因为此时站在global snapshot的角度,(0,1)这条记录的xmax是活跃的,所以是可见的。而记录ctid=(0,2)因17561是local committed and global committed的记录,所以不可见。

2-1-3. 删除后再查询看到global uncommitted的记录

- 步骤同上。

- 步骤同上。

- 步骤同上。

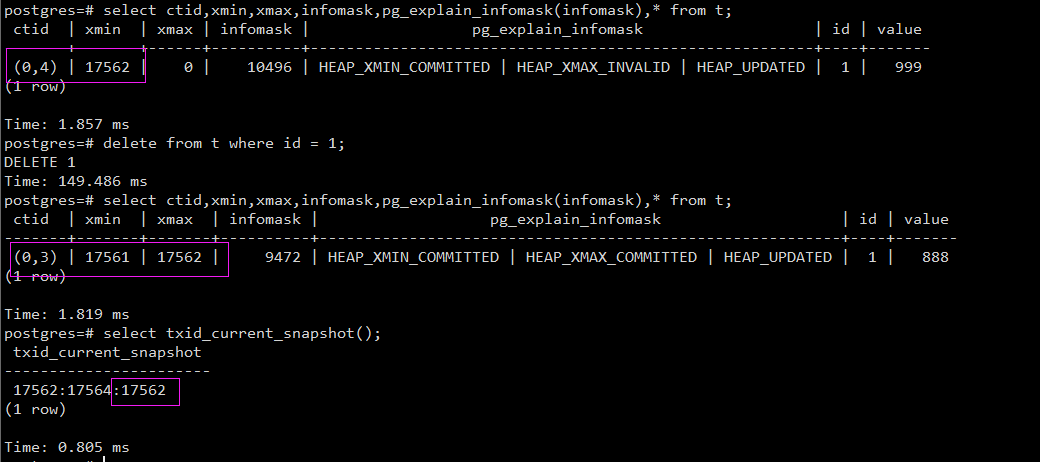

- 会话2执行删除后,再查询,同样看到了global uncommitted的记录ctid=(0,3)。因为此时站在global snapshot的角度,(0,3)这条记录的xmax是活跃的,所以是可见的。

3. 问题修复

AntDB是以AGTM提供全局事务,且AGTM作为事务的最后一环,很容易出现local committed but global uncommitted的记录并被其他事务删/改/查。而且通过上述分析,我们会发现在这种情况,会出现很多问题。那么,如何修正呢?

3-1. 修正查询到global uncommitted记录

3-1-1. 保留snapshot合并

保留local snapshot与global snapshot的合并操作,但当$L_(xmax) >G_(xmax)$时,需要对集合$[G_(xmax), L_(xmax)]$的每个local committed的事务加入到snapshot的活跃事务列表中。

3-1-2. 取消snapshot合并

取消local snapshot与global snapshot的合并操作,直接以global snapshot为准,此时,本地所有$xid >= G_(xmax)$的事务都是in-progress的。

WARNING!一直以来,AntDB都是以第一种方法为准,这种方法没有经过系统的测试。

3-2. 修正更新后查询到global uncommitted的记录

3-3. 修正删除后查询到global uncommitted的记录

3-4. 修正lock时查询到global uncommitted的记录

4. 后记

本文的修正方法,对上述提出的问题,均做了修正,包括foreign key check的core down问题。但是修正的patch尚未提交,需要大量测试方可。