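Linear Regression solves continuous prediction and fitting problems, whereas Logistic Regression solves discrete classification problems. The two approaches differ, but at bottom they arrive at the same place: both can be viewed as special cases of the exponential family, that is, generalized linear models built on exponential-family distributions.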
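As a brief sketch of that claim, in standard generalized-linear-model notation (the symbols φ, μ and η are assumptions introduced here, not names used in the code of this post): both the Bernoulli distribution behind Logistic Regression and the unit-variance Gaussian behind Linear Regression can be written in exponential-family form, with a natural parameter η.

```latex
% Bernoulli (Logistic Regression), with \phi = P(y = 1):
p(y;\phi) = \phi^{y}(1-\phi)^{1-y}
          = \exp\!\Big( y\,\log\frac{\phi}{1-\phi} + \log(1-\phi) \Big),
\qquad \eta = \log\frac{\phi}{1-\phi}
\;\Rightarrow\; \phi = \frac{1}{1+e^{-\eta}}

% Gaussian with unit variance (Linear Regression):
p(y;\mu) = \frac{1}{\sqrt{2\pi}}\exp\!\Big(-\tfrac{1}{2}y^{2}\Big)
           \exp\!\Big( \mu y - \tfrac{1}{2}\mu^{2} \Big),
\qquad \eta = \mu
```

Setting η = θᵀx in both cases gives the Sigmoid hypothesis for the Bernoulli case and the plain linear hypothesis θᵀx for the Gaussian case.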

In a classification problem, y takes values in {0, 1}, so the Linear Regression described above is clearly unsuitable.

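The Sigmoid function's output lies in the open interval (0, 1); the parameter θ merely controls how steep the curve is (and, through the intercept term, where it is centered). Using 0.5 as the cutoff, an output > 0.5 gives y = 1 and an output < 0.5 gives y = 0, which achieves two-class classification.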
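The thresholding rule can be sketched in a few lines of NumPy. This is only an illustration: `sigmoid`, `predict`, `X_demo` and `theta_demo` are hypothetical names and made-up values, not part of the scripts in this post, and treating an output of exactly 0.5 as class 1 is a convention assumed here.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real z into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def predict(X, theta, threshold=0.5):
    """Return 1 where the predicted probability reaches the threshold, else 0."""
    return (sigmoid(X @ theta) >= threshold).astype(int)

# Hypothetical toy input: first column is the intercept term, second is one feature.
X_demo = np.array([[1.0, -2.0],
                   [1.0,  0.0],
                   [1.0,  3.0]])
theta_demo = np.array([0.0, 1.0])   # made-up parameters, not fitted values

probs = sigmoid(X_demo @ theta_demo)   # predicted probabilities of y = 1
labels = predict(X_demo, theta_demo)   # hard 0/1 labels after thresholding
print(probs)
print(labels)
```

Scaling `theta_demo` up makes the transition from 0 to 1 sharper, which is the "steepness" effect described above.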

Python version:

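import numpy as np
import matplotlib.pyplot as plt

# Load the data: the first two columns are features, the third is the 0/1 label
a = np.loadtxt('ex1.txt')
print(a.shape)
X=a[:,0:2]
y=a[:,2:3]
m=y.shape[0]   # number of training examples
l=X.shape[1]   # number of features
print(m,l)
X0=np.ones(m)  # intercept column, prepended to X later
X_m=X
x1=X[:,0:1]    # raw feature columns, kept for plotting
x2=X[:,1:2]
# Row indices of the negative (y==0) and positive (y==1) examples
pos0=np.where(y==0)
pos1=np.where(y==1)
posf0=pos0[0]
posf1=pos1[0]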

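def featureNormalize(X):
    # Standardize each feature column to zero mean and unit standard deviation
    mu=np.mean(X,0)
    print(mu)
    sigma=np.std(X,0)   # per-column std; np.std(X) would pool all entries into one value
    X_norm=(X-mu)/sigma
    print(X_norm.shape)
    return X_norm

X_norm=featureNormalize(X)
X=X_norm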

x11=X[:,0:1]   # normalized feature columns
x21=X[:,1:2]
print(x11)
X=np.c_[X0,X]  # prepend the intercept column of ones
n=X.shape[1]
print(y.shape)
print(n)
print(type(X))
theta=np.zeros((n,1))
alpha=0.003
iterations=5000

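def gradientdescentlogistic(theta,alpha,iterations,X,y,m):
    J_h=np.zeros((iterations,1))   # cost recorded at every iteration
    for i in range(iterations):
        h_x=1/(1+np.exp(-np.dot(X,theta)))   # sigmoid hypothesis
        # The gradient is summed over examples, not averaged,
        # so each step is m times larger than with the averaged gradient
        theta=theta-alpha*np.dot(X.transpose(),(h_x-y))
        # Cross-entropy cost, evaluated with the h_x computed before this update
        J=-np.sum(y*np.log(h_x)+(1-y)*np.log(1-h_x))/m
        J_h[i,:]=J
    return theta,J_h

(theta,J_h)=gradientdescentlogistic(theta,alpha,iterations,X,y,m)
print(theta)
print(J_h)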

# Define the decision-boundary function:
def plot_decision_boundary(x11,theta):
    # The boundary is the line theta0 + theta1*x1 + theta2*x2 = 0, where h(x) = 0.5
    x1_m=np.array([x11.min(),x11.max()])
    x2_m=-(theta[0]+theta[1]*x1_m)/theta[2]
    plt.plot(x1_m,x2_m)

plot_decision_boundary(x11,theta)
# Scatter the normalized features so the points share the boundary's coordinate system
plt.scatter(x11[posf0],x21[posf0],marker='o',color='r',s=20)
plt.scatter(x11[posf1],x21[posf1],marker='o',color='b',s=20)
plt.show()

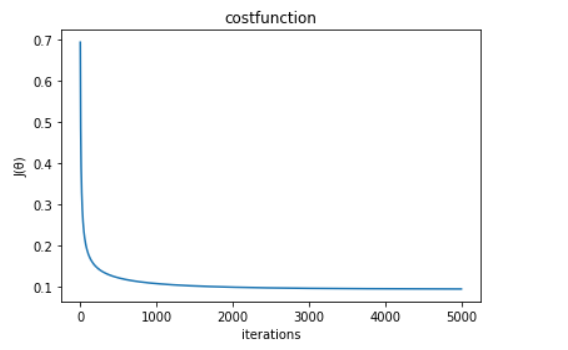

# Plot the cost recorded at each iteration
x2=np.arange(iterations)
plt.plot(x2,J_h)
plt.title("costfunction")
plt.xlabel("iterations")
plt.ylabel("J(θ)")
plt.show()
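One check the script above does not perform is how many training examples the fitted θ classifies correctly under the 0.5 cutoff. The following is a minimal, self-contained sketch of that check. Because `ex1.txt` is not reproduced here, it runs on synthetic two-cluster data generated with a fixed seed; the cluster centers, the learning rate `alpha = 0.1` and the use of the averaged gradient are all assumptions made for this illustration, not values taken from the post.

```python
import numpy as np

def sigmoid(z):
    """Logistic function."""
    return 1.0 / (1.0 + np.exp(-z))

# Synthetic stand-in for ex1.txt (assumption): two Gaussian clusters of 50 points each.
rng = np.random.default_rng(0)
m_half = 50
X_neg = rng.normal(loc=[40.0, 40.0], scale=8.0, size=(m_half, 2))
X_pos = rng.normal(loc=[75.0, 75.0], scale=8.0, size=(m_half, 2))
X_raw = np.vstack([X_neg, X_pos])
y = np.vstack([np.zeros((m_half, 1)), np.ones((m_half, 1))])

# Standardize each column, then prepend the intercept column of ones.
mu = X_raw.mean(axis=0)
sigma = X_raw.std(axis=0)
X = np.c_[np.ones(len(X_raw)), (X_raw - mu) / sigma]

# Batch gradient descent on the cross-entropy cost (averaged gradient).
theta = np.zeros((X.shape[1], 1))
alpha, iterations = 0.1, 2000
for _ in range(iterations):
    h = sigmoid(X @ theta)
    theta -= alpha * (X.T @ (h - y)) / len(y)

# Threshold the predicted probabilities at 0.5 and compare with the labels.
pred = (sigmoid(X @ theta) >= 0.5).astype(int)
accuracy = float((pred == y).mean())
print(f"training accuracy: {accuracy:.2f}")
```

With the real data, the same last three lines can be applied to the `X`, `y` and `theta` produced by the script above.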

Matlab version:

clear ; close all; clc
data = load('ex1.txt');
X = data(:, [1, 2]); y = data(:, 3); % exam scores and admission result
plotData(X, y);
hold on;
% Labels and Legend
xlabel('Exam 1 score')
ylabel('Exam 2 score')
% Specified in plot order
legend('Admitted', 'Not admitted')
[m, n] = size(X);
X = [ones(m, 1) X];
% Initialize fitting parameters
initial_theta = zeros(n + 1, 1);
% Compute initial cost and gradient
[cost, grad] = costFunction(initial_theta, X, y);
options = optimset('GradObj', 'on', 'MaxIter', 400);
[theta, cost] = ...
    fminunc(@(t)(costFunction(t, X, y)), initial_theta, options);
plotDecisionBoundary(theta, X, y);

function plotDecisionBoundary(theta, X, y)
    plotData(X(:,2:3), y);
    hold on
    if size(X, 2) <= 3
        % Two features: the boundary is the line theta(1) + theta(2)*x1 + theta(3)*x2 = 0
        plot_x = [min(X(:,2))-2, max(X(:,2))+2];
        plot_y = (-1./theta(3)).*(theta(2).*plot_x + theta(1));
        plot(plot_x, plot_y)
        legend('Admitted', 'Not admitted', 'Decision Boundary')
        axis([30, 100, 30, 100])
    else
        % mapFeature is not defined in this post; this branch only runs when X has more than 3 columns
        u = linspace(-1, 1.5, 50);
        v = linspace(-1, 1.5, 50);
        z = zeros(length(u), length(v));
        % Evaluate z = theta*x over the grid
        for i = 1:length(u)
            for j = 1:length(v)
                z(i,j) = mapFeature(u(i), v(j))*theta;
            end
        end
        z = z'; % important to transpose z before calling contour
        contour(u, v, z, [0, 0], 'LineWidth', 2)
    end
    hold off
end

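function [J, grad] = costFunction(theta, X, y)
    m = length(y); % number of training examples
    h = sigmoid(X*theta); % hypothesis, computed once and reused
    J = -1 * sum( y .* log(h) + (1 - y) .* log(1 - h) ) / m;
    grad = ( X' * (h - y) ) / m;
end

% sigmoid is called above but not defined in the original listing;
% the standard definition is assumed here
function g = sigmoid(z)
    g = 1 ./ (1 + exp(-z));
end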

function plotData(X, y)
    figure; hold on;
    pos = find(y == 1); neg = find(y == 0);
    plot(X(pos, 1), X(pos, 2), 'k+','LineWidth', 2, 'MarkerSize', 7);
    plot(X(neg, 1), X(neg, 2), 'ko', 'MarkerFaceColor', 'y','MarkerSize', 7);
    hold off;
end

Dataset used in this code: https://download.csdn.net/download/qq_20406597/10375205