Code:

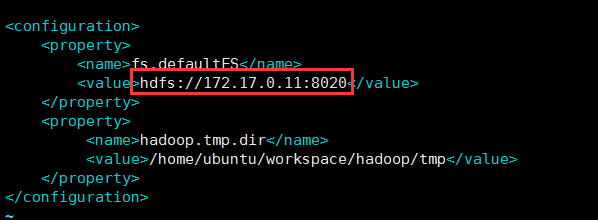

core-site.xml configuration

hdfs-site.xml configuration

Error message:

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /hello.txt could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1595)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3287)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:677)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.addBlock(AuthorizationProviderProxyClientProtocol.java:213)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:485)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

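Cause: the server is reached through its public IP, but binding to the public IP kept failing, so the services ended up bound to the private (internal) IP. The NameNode therefore most likely reports the DataNode's private address to the client. A client outside that network cannot connect to it, so it excludes the only DataNode and the write fails with the error above.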
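One way to check this from the client machine is to test whether the DataNode's data-transfer port accepts a TCP connection. The sketch below is only an illustration: the class name `DataNodeProbe` is made up, and the default port 50010 assumes a Hadoop 2.x style `dfs.datanode.address` (other versions use a different default, so check your own configuration).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class DataNodeProbe {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    public static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers unknown hosts, refused connections, and timeouts.
            return false;
        }
    }

    public static void main(String[] args) {
        // Host and port come from the command line; the defaults are placeholders.
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 50010; // assumed DataNode port
        System.out.println(host + ":" + port + " reachable = " + canConnect(host, port, 3000));
    }
}
```

If the probe succeeds against the public IP or hostname but fails against the private IP, the client is probably being sent an address it cannot reach.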

解决方法:

1.停止hdfs服务

2.重新配置:

(1)core-site.xml

(2)hdfs-site.xml不变,还是原来的配置

(3)配置hosts

(4)本地需要配置外网映射

2.删除hadoop的临时目录

3.重新格式化hadoop:hdfs namenode -format

4.启动hdfs服务

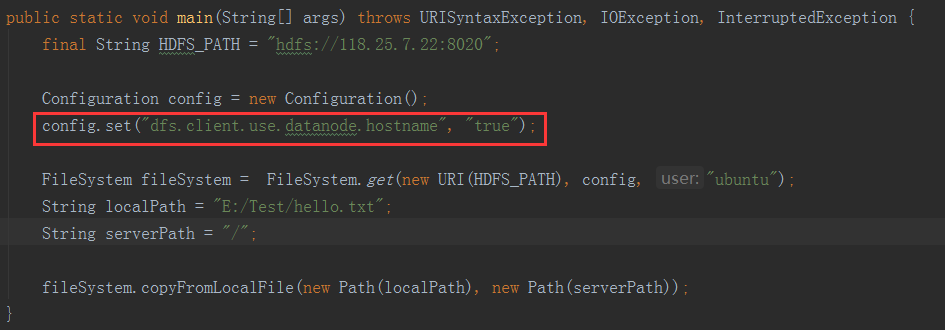

5.运行代码,代码中也加了一行,如下图:
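The screenshot of the modified code is not available here. A client-side setting commonly used in this situation is `dfs.client.use.datanode.hostname`, which makes the client connect to DataNodes by hostname (resolved through the local hosts mapping) instead of the IP reported by the NameNode. Whether this is the exact line that was added is an assumption. The sketch below needs the Hadoop client library on the classpath; the hostname `hadoop-node`, port 8020, and class name are placeholders.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.net.URI;
import java.nio.charset.StandardCharsets;

public class HdfsWriteExample {
    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Assumed fix: connect to DataNodes by hostname, not by the
        // (private) IP address that the NameNode hands back.
        conf.set("dfs.client.use.datanode.hostname", "true");

        // "hadoop-node" must resolve to the server's public IP on this machine;
        // both the hostname and the port are placeholders.
        URI nameNode = URI.create("hdfs://hadoop-node:8020");

        try (FileSystem fs = FileSystem.get(nameNode, conf);
             FSDataOutputStream out = fs.create(new Path("/hello.txt"))) {
            out.write("hello".getBytes(StandardCharsets.UTF_8));
        }
    }
}
```

With this setting, the hosts entry on the local machine decides which address the client actually dials, which is why the mapping in step (4) matters.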

In the end, it ran successfully!