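Varying the learning rate cyclically can help the optimizer escape saddle points, where gradients are close to zero and training stalls. For more schedule variants, see https://github.com/bckenstler/CLR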

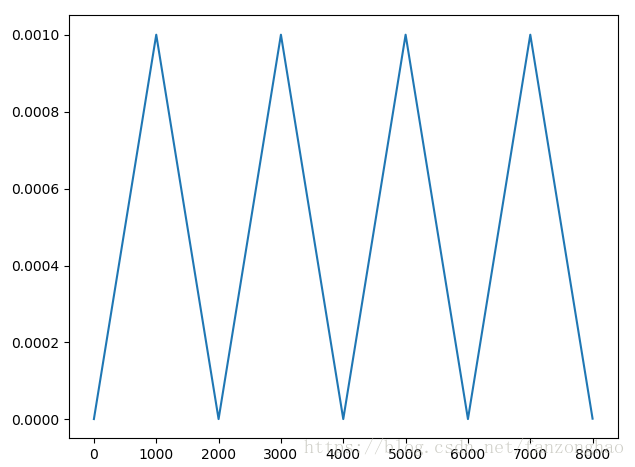

1. Cyclical learning rate
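The code below appears to implement the "triangular" policy described in the CLR repository linked above. Writing $t$ for the global step, $s$ for the half-cycle length (1000 steps here), and $\eta_{\min} = 10^{-6}$, $\eta_{\max} = 10^{-3}$ for the bounds (these symbols are my notation, not the original's), the schedule is:

$$
\begin{aligned}
\text{cycle} &= \left\lfloor 1 + \frac{t}{2s} \right\rfloor,\\
x &= \left| \frac{t}{s} - 2\,\text{cycle} + 1 \right|,\\
\eta_t &= \eta_{\min} + (\eta_{\max} - \eta_{\min})\,\max(0,\ 1 - x).
\end{aligned}
$$

For example, with $s = 1000$: at $t = 0$, cycle $= 1$ and $x = 1$, so $\eta_t = \eta_{\min}$; at $t = 1000$, $x = 0$ and $\eta_t = \eta_{\max}$; at $t = 2000$, cycle $= 2$ and $x = 1$ again. One full cycle therefore spans $2s = 2000$ steps, and the 8000 steps in the loop cover four cycles.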

global_steps=tf.placeholder(shape=[1],dtype=tf.int64)

cycle = tf.cast(tf.floor(1. + tf.cast(global_steps, dtype=tf.float32) /(2 * 1000.)), dtype=tf.float32)

x = tf.cast(tf.abs(tf.cast(global_steps, dtype=tf.float32) / 1000. - 2. * cycle + 1.), dtype=tf.float32)

learning_rate = 1e-6 + (1e-3 - 1e-6) * tf.maximum(0., (1 - x))

with tf.Session() as sess:

lr_list = []

cycle_list=[]

for i in range(8000):

lr=sess.run(learning_rate,feed_dict={global_steps:[i]})

lr_list.append(lr)

cl = sess.run(cycle, feed_dict={global_steps: [i]})

cycle_list.append(cl)

plt.plot(lr_list)

plt.show()

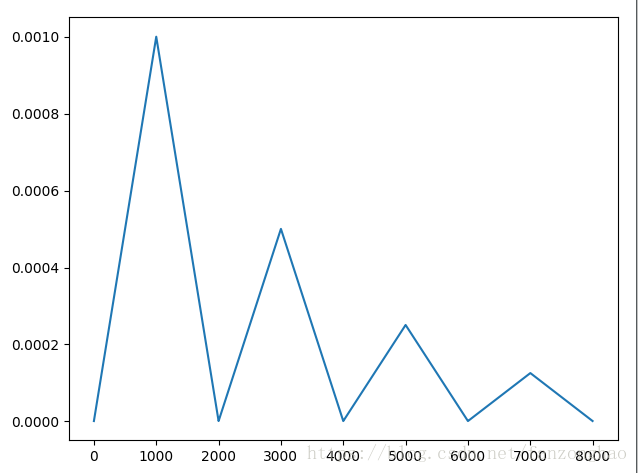

print(cycle_list)二,学习率周期性衰减
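The only change from the first schedule is the `learning_rate` line: the triangle's amplitude is divided by $2^{\text{cycle}-1}$, so each peak is half the previous one. This appears to match what the CLR repository calls the "triangular2" policy. With cycle and $x$ computed exactly as in the first block, $\eta_{\min} = 10^{-6}$ and $\eta_{\max} = 10^{-3}$ (my notation):

$$
\eta_t = \eta_{\min} + \frac{\eta_{\max} - \eta_{\min}}{2^{\text{cycle}-1}}\,\max(0,\ 1 - x)
$$

With a half-cycle of 1000 steps, the peaks fall at $t = 1000, 3000, 5000, 7000$ and are about $1.0\times10^{-3}$, $5.005\times10^{-4}$, $2.5075\times10^{-4}$ and $1.25875\times10^{-4}$, while the floor stays at $\eta_{\min} = 10^{-6}$.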

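```python
import matplotlib.pyplot as plt
import tensorflow.compat.v1 as tf  # TF 1.x graph API (also available in TF 1.15 and 2.x)

tf.disable_eager_execution()  # placeholders and Session require graph mode

base_lr, max_lr = 1e-6, 1e-3  # lower and upper learning-rate bounds
step_size = 1000.             # half-cycle length in steps

global_steps = tf.placeholder(shape=[], dtype=tf.int64)
step = tf.cast(global_steps, dtype=tf.float32)

cycle = tf.floor(1. + step / (2. * step_size))
x = tf.abs(step / step_size - 2. * cycle + 1.)
# Same triangle as before, but the amplitude is divided by 2**(cycle - 1),
# so each cycle's peak is half the previous one
learning_rate = base_lr + (max_lr - base_lr) * tf.maximum(0., 1. - x) / tf.pow(2., cycle - 1.)

with tf.Session() as sess:
    lr_list = []
    cycle_list = []
    for i in range(8000):
        lr, cl = sess.run([learning_rate, cycle], feed_dict={global_steps: i})
        lr_list.append(lr)
        cycle_list.append(cl)

plt.plot(lr_list)
plt.show()

print(cycle_list)
```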
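To check individual values of both schedules without TensorFlow, the same arithmetic can be written in plain Python. This is a minimal sketch, not the author's code: the function name `clr` and its `decay` flag are hypothetical, and the defaults simply mirror the hyperparameters used above.

```python
import math


def clr(step, base_lr=1e-6, max_lr=1e-3, step_size=1000, decay=False):
    """Hypothetical helper: cyclical LR at `step` (triangular; halved per cycle if decay)."""
    cycle = math.floor(1 + step / (2 * step_size))  # cycle index, starting at 1
    x = abs(step / step_size - 2 * cycle + 1)        # 1 at cycle boundaries, 0 at the peak
    amplitude = (max_lr - base_lr) * max(0.0, 1 - x)
    if decay:
        amplitude /= 2 ** (cycle - 1)                # halve the peak after every cycle
    return base_lr + amplitude


if __name__ == "__main__":
    for t in (0, 500, 1000, 2000, 3000):
        print(t, clr(t), clr(t, decay=True))
```

Evaluating `clr(t, decay=True)` for `t` in `range(8000)` should trace the same curve as the second TensorFlow block, up to float32 rounding, since TensorFlow computes in float32 while Python uses float64.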