Copyright notice: this is an original article by the blog author and may not be reposted without the author's permission. https://blog.csdn.net/lovely_J/article/details/82429659

IDEA: 2018.2.3

VMware: 14

Hadoop: 2.7.1

JUnit: 4.12

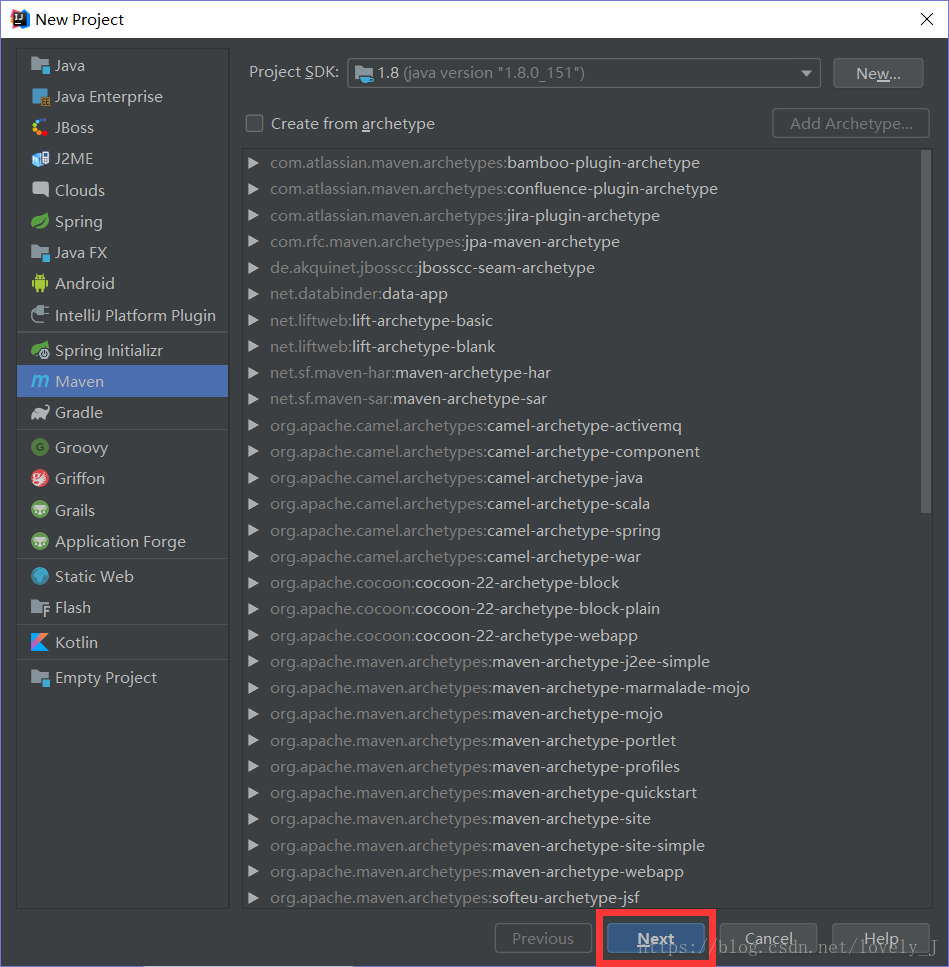

- Create a new Maven project (there is no need to select an archetype).

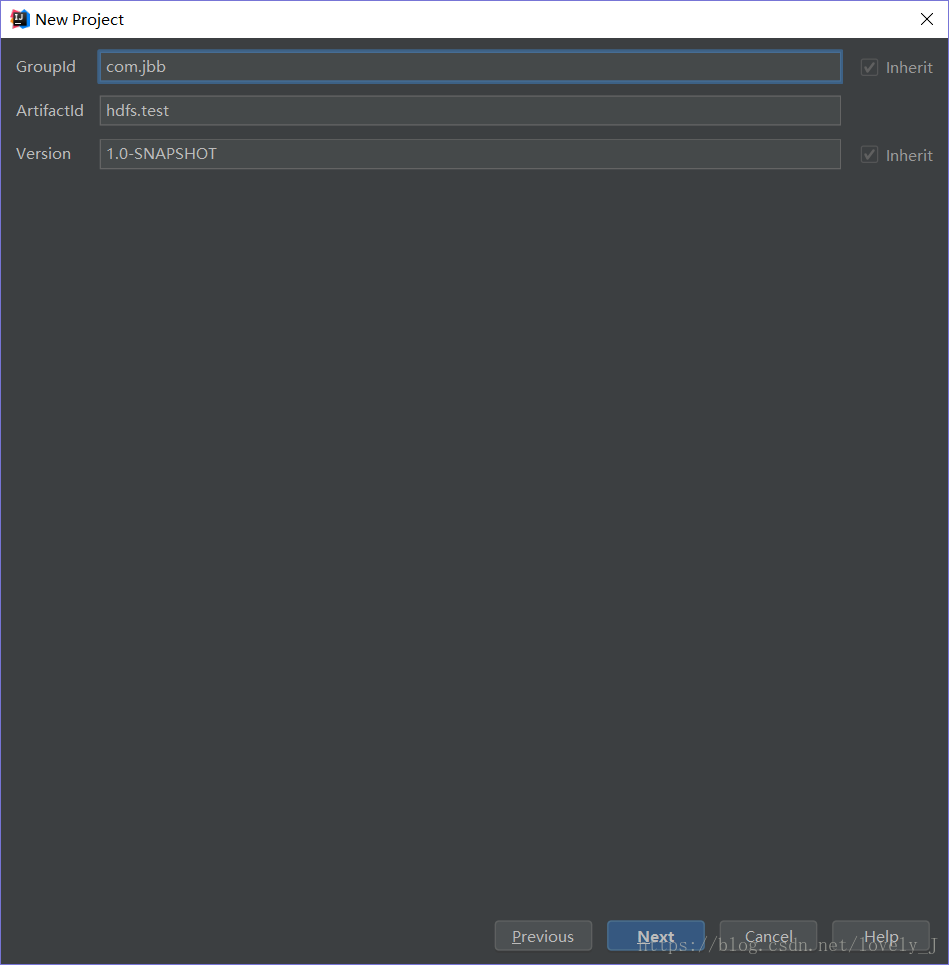

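- Fill in the GroupId and ArtifactId (follow whatever naming convention you are used to; if you have good suggestions, feel free to leave a comment below).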

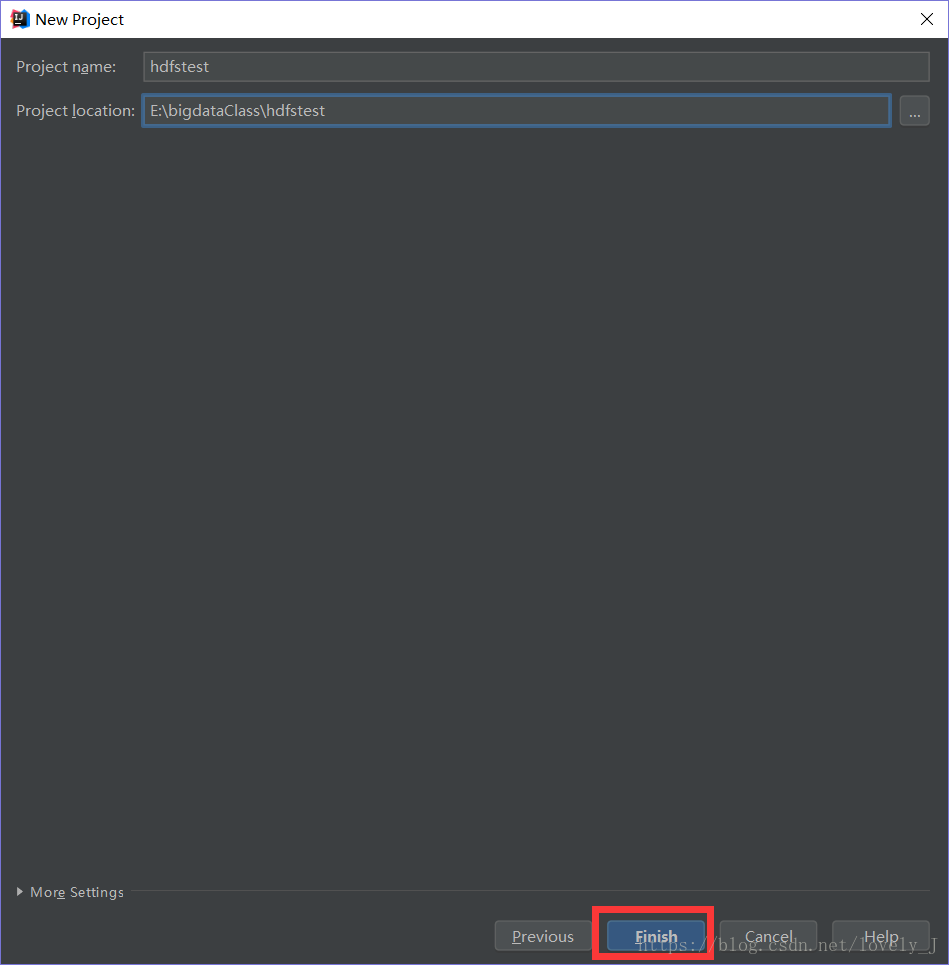

- Then choose where to save the project (personally, I recommend avoiding Chinese characters in the folder names along the path).

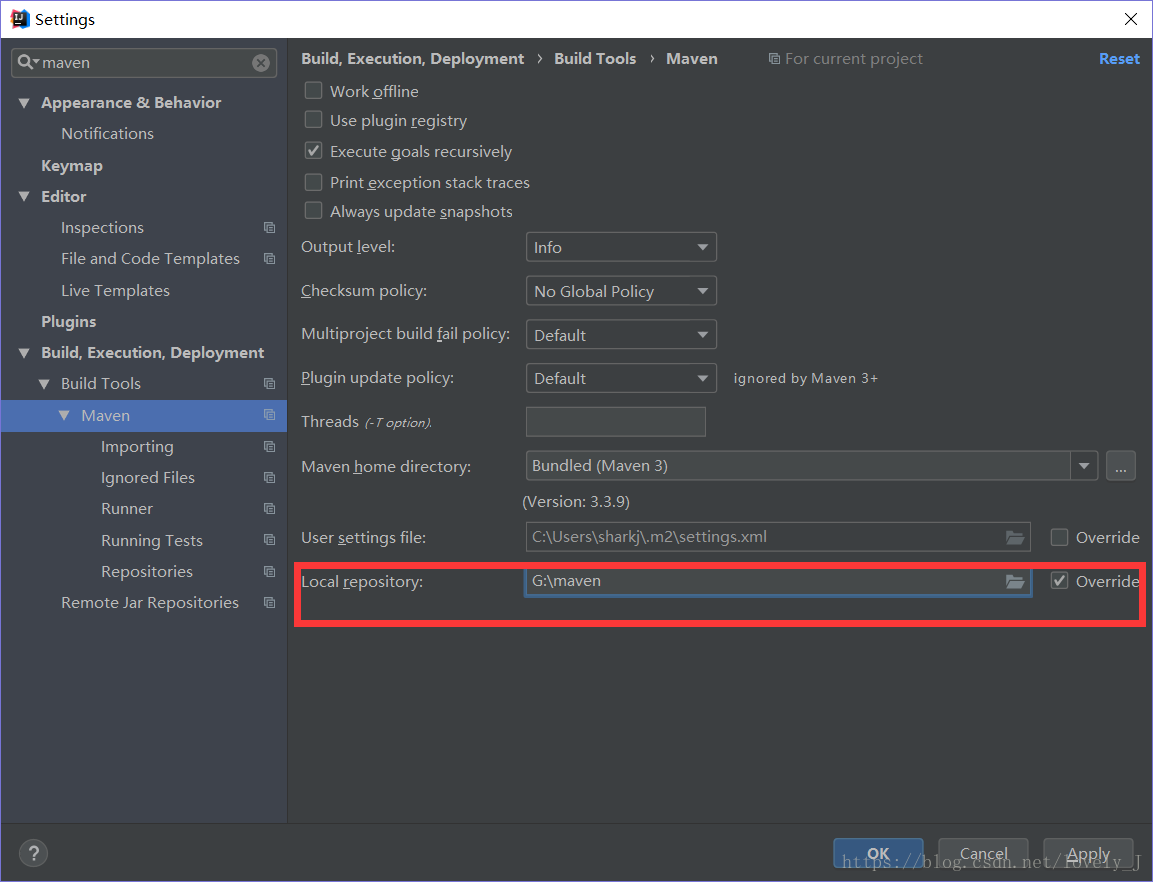

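- Wait for the project to be created. While you wait, you can change the location of the local Maven repository, since the packages Maven downloads take up a fair amount of disk space.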

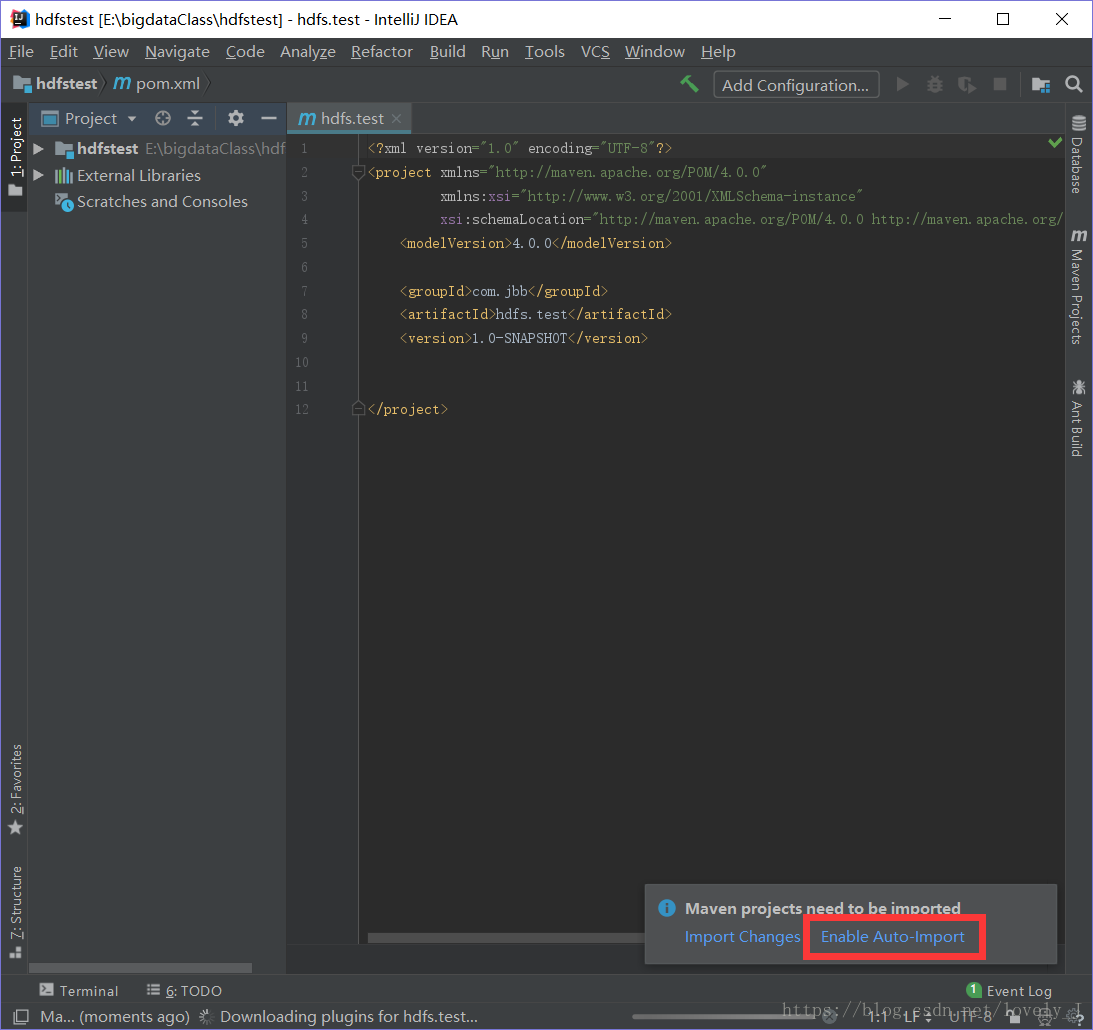

Once the project has finished loading, I usually enable auto-import.

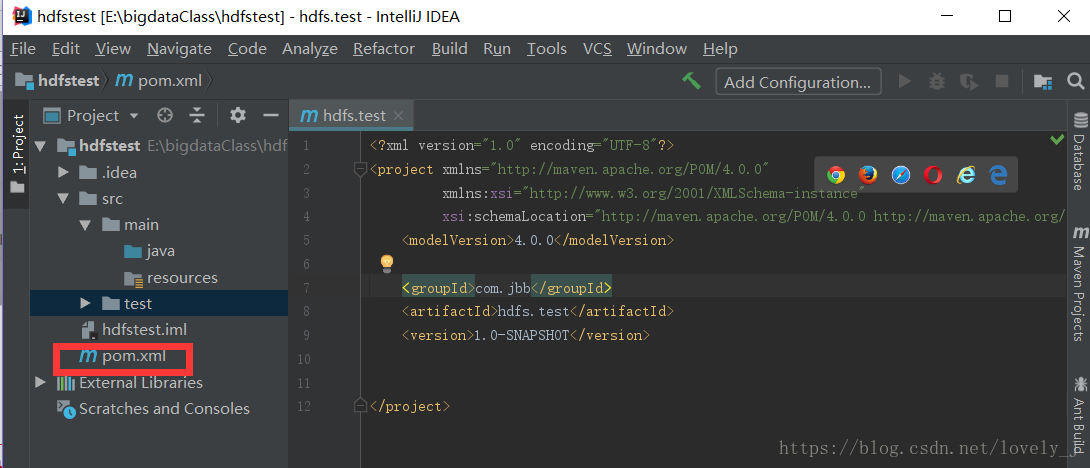

First, locate the pom.xml file.

Then add the dependencies. Note that the Hadoop version must match the one installed in the virtual machine.

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-hdfs -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.7.1</version>

</dependency>

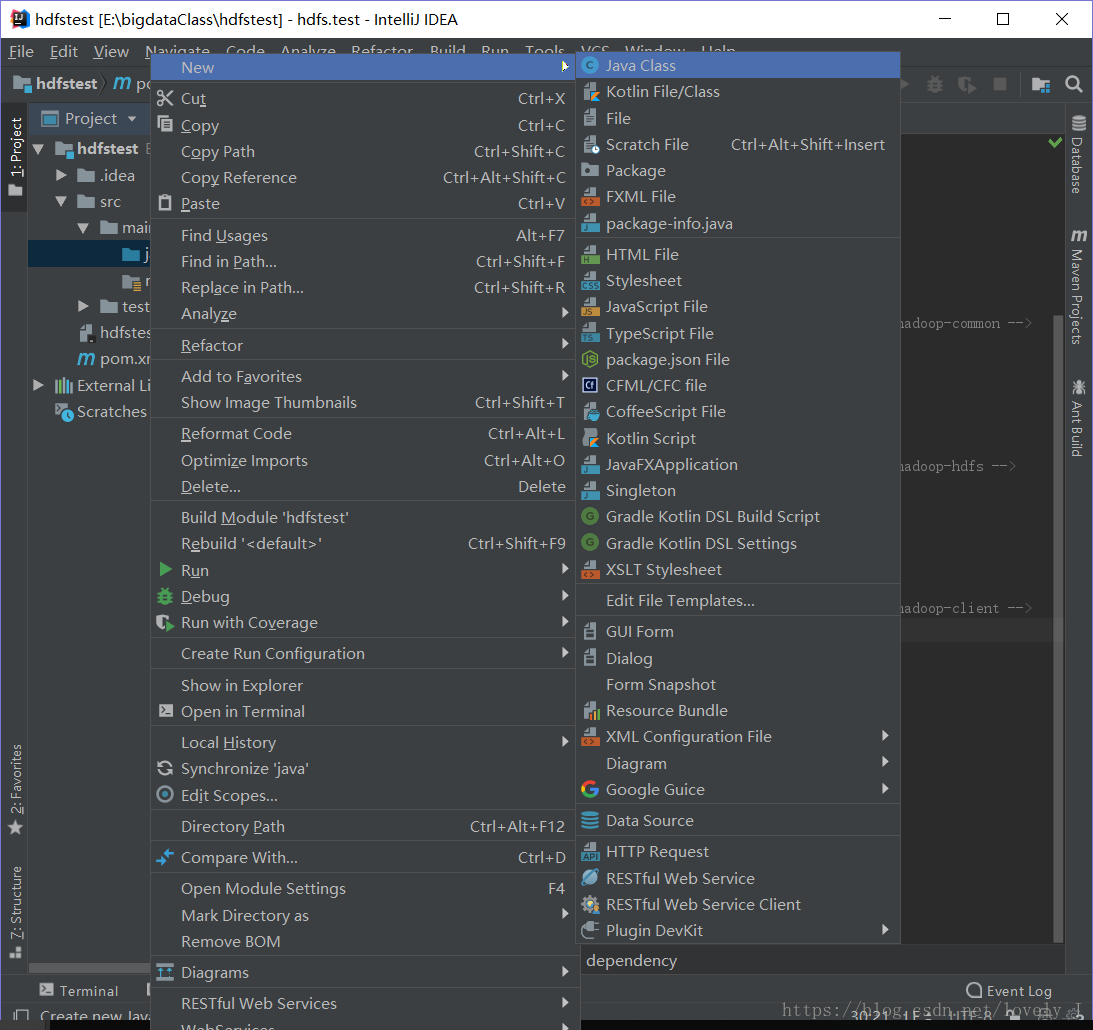

</dependencies>- bhjb在main/java下新建class

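- The methods here are written as JUnit tests, so you can run each one on its own to try it out.

The `demo1` method is an exercise I put together myself. Because I had not yet learned the file-copy API, the operations look somewhat laborious.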

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;

    import java.io.File;
    import java.io.FileInputStream;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.net.URI;
    import java.nio.charset.StandardCharsets;

    public class HdfsTest {

        // Address of the HDFS NameNode running in the virtual machine
        public static final String HDFS_PATH = "hdfs://192.168.171.128:9000";

        // Handle to the HDFS file system
        FileSystem fileSystem = null;

        // Hadoop configuration object
        Configuration configuration = null;

        @Before
        public void setUp() throws Exception {
            configuration = new Configuration();
            fileSystem = FileSystem.get(new URI(HDFS_PATH), configuration);
            System.out.println("HDFS APP SETUP");
        }

        // Copies a local file to HDFS; try-with-resources closes both streams
        private void copyToHdfs(String localFile, String hdfsFile) throws IOException {
            try (InputStream in = new FileInputStream(localFile);
                 FSDataOutputStream out = fileSystem.create(new Path(hdfsFile))) {
                IOUtils.copyBytes(in, out, 4096, false);
            }
        }

        // Copies an HDFS file to the local disk; try-with-resources closes both streams
        private void copyToLocal(String hdfsFile, String localFile) throws IOException {
            try (FSDataInputStream in = fileSystem.open(new Path(hdfsFile));
                 OutputStream out = new FileOutputStream(localFile)) {
                IOUtils.copyBytes(in, out, 4096, false);
            }
        }

        @Test
        public void create() throws IOException {
            // Create a file and write to it; closing the stream also flushes it
            try (FSDataOutputStream outputStream = fileSystem.create(new Path("/hdfsapi/test/a.txt"))) {
                outputStream.write("hello hadoop".getBytes(StandardCharsets.UTF_8));
            }
        }

        @Test
        public void cat() throws IOException {
            // Print the file to the console; run create() first so the file exists
            try (FSDataInputStream inputStream = fileSystem.open(new Path("/hdfsapi/test/a.txt"))) {
                IOUtils.copyBytes(inputStream, System.out, 1024);
            }
        }

        @Test
        public void mkdir() throws IOException {
            fileSystem.mkdirs(new Path("/hdfsapi/test"));
        }

        @Test
        public void upload() throws IOException {
            // Upload a local file; remember to change the path to one on your machine
            copyToHdfs("E:/hadoopTest/output/test.txt", "/hdfsapi/park/aaa.txt");
            // fileSystem.copyFromLocalFile() ends up calling IOUtils.copyBytes() as well
        }

        @Test
        public void download() throws IOException {
            // Read /park/2.txt from HDFS and write it to a local file
            copyToLocal("/park/2.txt", "E:/hadoopTest/output/test.txt");
        }

        @Test
        public void demo1() throws IOException {
            // setUp() has already initialized configuration and fileSystem

            // 1. Create the directory teacher in HDFS.
            fileSystem.mkdirs(new Path("/hdfs/teacher"));

            // 2. Upload score.txt into the teacher directory.
            copyToHdfs("E:/hadoopTest/score.txt", "/hdfs/teacher/score.txt");

            // 3. Create the directory student, with subdirectories Tom, LiMing and Jerry.
            fileSystem.mkdirs(new Path("/hdfs/student/Tom"));
            fileSystem.mkdirs(new Path("/hdfs/student/LiMing"));
            fileSystem.mkdirs(new Path("/hdfs/student/Jerry"));

            // 4. Upload information.txt into Tom, and also into LiMing and Jerry.
            String file = "E:/hadoopTest/information.txt";
            copyToHdfs(file, "/hdfs/student/Tom/information.txt");
            copyToHdfs(file, "/hdfs/student/LiMing/information.txt");
            copyToHdfs(file, "/hdfs/student/Jerry/information.txt");

            // 5. Rename student to MyStudent.
            fileSystem.rename(new Path("/hdfs/student"), new Path("/hdfs/MyStudent"));

            // 6. Download Tom's information.txt into the local directory E:/tom.
            new File("E:/tom").mkdirs();
            copyToLocal("/hdfs/MyStudent/Tom/information.txt", "E:/tom/information.txt");

            // 7. Download teacher's score.txt into the same directory.
            copyToLocal("/hdfs/teacher/score.txt", "E:/tom/score.txt");

            // 8. Delete the Tom and LiMing directories (they sit under MyStudent after step 5).
            fileSystem.delete(new Path("/hdfs/MyStudent/Tom"), true);
            fileSystem.delete(new Path("/hdfs/MyStudent/LiMing"), true);
        }

        @After
        public void tearDown() throws Exception {
            fileSystem.close();
            configuration = null;
            System.out.println("HDFS APP SHUTDOWN");
        }

    }
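The upload and download tests above rely on `IOUtils.copyBytes` to move bytes from one stream to another. As a rough illustration of what such a copy involves, here is a minimal plain-JDK sketch. `StreamCopySketch` and its `copy` method are hypothetical names made up for this example, and this is not Hadoop's actual implementation, only the general buffered read/write loop that a utility of this kind is built around.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper (not part of Hadoop): a plain-JDK buffered stream copy. */
public class StreamCopySketch {

    /**
     * Copies every byte from in to out through a buffer of bufferSize bytes.
     * Neither stream is closed here; the caller owns both of them.
     * Returns the number of bytes copied.
     */
    public static long copy(InputStream in, OutputStream out, int bufferSize) {
        if (bufferSize <= 0) {
            throw new IllegalArgumentException("bufferSize must be positive");
        }
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int read;
            // read() returns -1 once the end of the input has been reached
            while ((read = in.read(buffer)) != -1) {
                out.write(buffer, 0, read);
                total += read;
            }
            out.flush();
        } catch (IOException e) {
            // Wrapped so that callers in this sketch need no checked exception
            throw new UncheckedIOException(e);
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hadoop".getBytes(StandardCharsets.UTF_8);
        // In-memory streams stand in for the local file and the HDFS stream
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long copied = copy(in, out, 4);
            String text = new String(out.toByteArray(), StandardCharsets.UTF_8);
            System.out.println(copied + " bytes: " + text);
        }
    }
}
```

Running `main` prints `12 bytes: hello hadoop`. The buffer size of 4 is chosen only so that the loop runs several times; in the tests above, the same idea applies with a `FileInputStream` on one side and the stream returned by `fileSystem.create(...)` on the other.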