Copyright notice: the copyright belongs to 江米条. https://blog.csdn.net/LZJSTUDY/article/details/83023233

Real-time video transmission with Android (Java) and C++

I need to build a project that transmits video in real time and also performs image tracking and recognition.

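The project first establishes a connection over TCP and then streams the video over UDP in real time. Each frame is compressed to JPEG on its own (intra-frame compression only) to speed up transmission.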
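Why compress every frame? A quick size check helps. An NV21 preview frame (the default preview format of the Android `Camera` API) takes 1.5 bytes per pixel: a full-resolution Y plane plus an interleaved V/U plane at quarter resolution. At the 960 × 544 preview size used below, one raw frame is 783,360 bytes, roughly 12 times the 65,507-byte maximum payload of a single UDP datagram over IPv4. A minimal C++ sketch of that arithmetic; the function names are illustrative only:

```cpp
#include <cstddef>

// NV21: full-resolution Y (luma) plane + interleaved V/U plane at quarter
// resolution, i.e. 1.5 bytes per pixel in total.
constexpr std::size_t nv21FrameBytes(std::size_t width, std::size_t height) {
    return width * height * 3 / 2;
}

// Largest UDP payload over IPv4: 65535 - 20 (IP header) - 8 (UDP header).
constexpr std::size_t kMaxUdpPayloadIPv4 = 65507;

// True if one uncompressed NV21 frame would fit into a single datagram.
constexpr bool rawFrameFitsInOneDatagram(std::size_t width, std::size_t height) {
    return nv21FrameBytes(width, height) <= kMaxUdpPayloadIPv4;
}

// Compile-time check of the numbers quoted above.
static_assert(nv21FrameBytes(960, 544) == 783360, "960x544 NV21 frame is 783,360 bytes");
```

Since a raw frame cannot travel in one datagram, JPEG compression is what makes "one frame per packet" possible at all.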

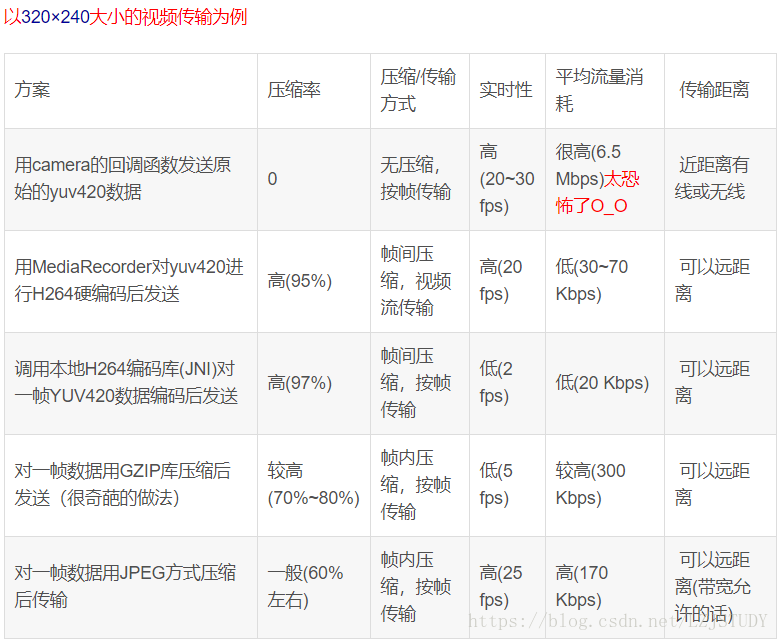

(The figure is reposted from another source.)

I went with the fifth scheme, which sacrifices bandwidth (not a problem on a LAN) in exchange for a high frame rate.
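To put that bandwidth cost into numbers: the bit rate is average frame size × 8 × frames per second. The sketch below is only an estimate under stated assumptions. The 30,000-byte average JPEG size is a made-up figure (the real size depends on the scene, the resolution and the JPEG quality of 40 used in the Android code), and the 20 ms minimum send interval comes from the Android code. That interval allows at most 50 fps; in practice the rate can be no higher than the camera's preview frame rate, because a frame is only sent when a preview frame arrives.

```cpp
#include <cstdint>

// Bit rate (Mbit/s) of a stream that sends `framesPerSecond` frames of
// `averageFrameBytes` bytes each. The frame size is an assumed input.
constexpr double streamMbps(std::uint64_t averageFrameBytes, double framesPerSecond) {
    return static_cast<double>(averageFrameBytes) * 8.0 * framesPerSecond / 1e6;
}

// Upper bound on the frame rate implied by a minimum send interval.
constexpr double fpsFromIntervalMs(double intervalMs) {
    return 1000.0 / intervalMs;
}
```

With those assumptions, `streamMbps(30000, fpsFromIntervalMs(20.0))` comes to 12 Mbit/s, comfortably below a 100 Mbit/s wired LAN. This is why the trade-off is acceptable on a local network.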

The Android code is based on someone else's implementation (see the reference here):

```java
import android.graphics.ImageFormat;
import android.graphics.Rect;
import android.graphics.YuvImage;
import android.hardware.Camera;
import android.os.Bundle;
import android.support.v7.app.AppCompatActivity; // androidx.appcompat.app.AppCompatActivity in AndroidX projects
import android.util.Log;
import android.view.SurfaceHolder;
import android.view.SurfaceView;

import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.net.Socket;
import java.util.LinkedList;

public class MainActivity extends AppCompatActivity implements SurfaceHolder.Callback, Camera.PreviewCallback {

    private final int TCP_PORT = 6666;                 // TCP port (connection setup)
    private final int UDP_PORT = 7777;                 // UDP port (frame stream)
    private final String SERVER_IP = "152.18.10.101"; // server IP address
    private static final int PACK_MAX_SIZE = 65500;    // stay below the 65,507-byte UDP payload limit
    private static final int MAX_QUEUED_PACKETS = 5;   // small cap: drop old frames if the network falls behind

    private Camera mCamera;
    private Camera.Size previewSize;
    private DatagramSocket packetSenderSocket;         // socket that sends the image frames
    private long lastSendTime;                         // time the last frame was queued
    private volatile InetAddress serverAddress;        // written by ConnectThread, read in onPreviewFrame()
    private final LinkedList<DatagramPacket> packetList = new LinkedList<>();

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        final SurfaceView surfaceView = (SurfaceView) findViewById(R.id.surfaceView);
        SurfaceHolder holder = surfaceView.getHolder();
        holder.setKeepScreenOn(true);
        holder.addCallback(this);
        // Start the thread that connects to the server
        new ConnectThread().start();
    }

    @Override
    public void surfaceCreated(SurfaceHolder holder) {
        // Acquire the camera
        if (mCamera == null) {
            mCamera = Camera.open(); // open the back camera
            Camera.Parameters parameters = mCamera.getParameters();
            // Set the preview size. It must be one of parameters.getSupportedPreviewSizes(),
            // otherwise setParameters() throws an exception.
            parameters.setPreviewSize(960, 544);
            previewSize = parameters.getPreviewSize();
            // Continuous auto-focus
            parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE);
            mCamera.setParameters(parameters);
            mCamera.cancelAutoFocus();
            // Attach the preview surface and the frame callback
            try {
                mCamera.setPreviewDisplay(holder);
                mCamera.setPreviewCallback(this);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
        // Start the preview
        mCamera.startPreview();
    }

    @Override
    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
    }

    @Override
    public void surfaceDestroyed(SurfaceHolder holder) {
        // Release the camera
        if (mCamera != null) {
            mCamera.setPreviewCallback(null);
            mCamera.stopPreview();
            mCamera.release();
            mCamera = null;
        }
    }

    /**
     * Called with the image data of every preview frame.
     */
    @Override
    public void onPreviewFrame(byte[] data, Camera camera) {
        long curTime = System.currentTimeMillis();
        // Send at most one frame every 20 ms
        if (serverAddress != null && curTime - lastSendTime >= 20) {
            lastSendTime = curTime;
            // Convert the NV21 frame to JPEG (quality 40)
            YuvImage image = new YuvImage(data, ImageFormat.NV21, previewSize.width, previewSize.height, null);
            ByteArrayOutputStream bos = new ByteArrayOutputStream();
            image.compressToJpeg(new Rect(0, 0, previewSize.width, previewSize.height), 40, bos);
            byte[] imgBytes = bos.toByteArray();
            Log.i("tag", imgBytes.length + "");
            // A truncated JPEG cannot be decoded, so drop oversized frames instead of cutting them off
            if (imgBytes.length > PACK_MAX_SIZE) {
                return;
            }
            // Build the datagram
            DatagramPacket packet = new DatagramPacket(imgBytes, imgBytes.length, serverAddress, UDP_PORT);
            synchronized (packetList) {
                packetList.addLast(packet);               // append to the tail
                while (packetList.size() > MAX_QUEUED_PACKETS) {
                    packetList.removeFirst();             // discard the oldest frames
                }
            }
        }
    }

    /**
     * Connection thread
     */
    private class ConnectThread extends Thread {
        @Override
        public void run() {
            try {
                // Open the UDP socket and connect over TCP to reach the server
                packetSenderSocket = new DatagramSocket();
                Socket socket = new Socket(SERVER_IP, TCP_PORT);
                serverAddress = socket.getInetAddress();
                // Close the TCP connection
                socket.close();
                // Start the thread that sends the image packets
                new ImgSendThread().start();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    /**
     * Thread that sends the image packets
     */
    private class ImgSendThread extends Thread {
        @Override
        public void run() {
            while (packetSenderSocket != null) {
                DatagramPacket packet;
                synchronized (packetList) {
                    packet = packetList.pollFirst();      // take the head, or null if the queue is empty
                }
                if (packet == null) {
                    // Nothing to send: sleep outside the lock so onPreviewFrame() is not blocked
                    try {
                        Thread.sleep(10);
                    } catch (InterruptedException e) {
                        return;
                    }
                    continue;
                }
                try {
                    packetSenderSocket.send(packet);
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }
}
```

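A note on UDP packet size: over IPv4 a single UDP datagram can carry up to 65,507 bytes of payload (65,535 minus the 20-byte IP header and the 8-byte UDP header), which is the limit the code above stays under. On Ethernet, however, the MTU is 1500 bytes, so only 1472 bytes (1500 − 20 − 8) fit into one frame. Anything larger is fragmented at the IP layer, and if any fragment is lost the whole datagram is discarded. For details see: 网络-UDP,TCP数据包的最大传输长度分析 (Network: analysis of the maximum transmission length of UDP and TCP packets).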
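If you want every datagram to fit into a single Ethernet frame, one option is to split each JPEG into chunks of at most 1472 bytes and reassemble them on the server. This is not what the code above does: it sends each JPEG as one datagram of up to 65,500 bytes. The sketch below assumes a hypothetical 8-byte header (frame id, chunk index, chunk count) that is not part of the original project. `splitFrame`, `FrameAssembler` and the header layout are illustrative names, and the Android sender would need an equivalent Java port. A frame that loses a chunk is simply abandoned when a newer frame id arrives, which suits live video better than waiting for retransmission.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical chunk header (not part of the original project), big-endian:
//   bytes 0-3: frame id | bytes 4-5: chunk index | bytes 6-7: chunk count
constexpr std::size_t kMaxDatagram = 1472;                          // 1500 MTU - 20 IP - 8 UDP
constexpr std::size_t kHeaderBytes = 8;
constexpr std::size_t kChunkPayload = kMaxDatagram - kHeaderBytes;  // 1464 JPEG bytes per chunk

using Bytes = std::vector<std::uint8_t>;

// Appends the low `byteCount` bytes of `value`, most significant byte first.
static void putBE(Bytes& out, std::uint32_t value, int byteCount) {
    for (int i = byteCount - 1; i >= 0; --i)
        out.push_back(static_cast<std::uint8_t>(value >> (8 * i)));
}

// Reads a big-endian integer of `byteCount` bytes starting at `pos`.
static std::uint32_t getBE(const Bytes& in, std::size_t pos, int byteCount) {
    std::uint32_t value = 0;
    for (int i = 0; i < byteCount; ++i)
        value = (value << 8) | in[pos + i];
    return value;
}

// Splits one non-empty encoded frame into datagrams of at most kMaxDatagram bytes.
std::vector<Bytes> splitFrame(const Bytes& frame, std::uint32_t frameId) {
    std::size_t count = (frame.size() + kChunkPayload - 1) / kChunkPayload;
    assert(count > 0 && count <= 0xFFFF);
    std::vector<Bytes> datagrams;
    for (std::size_t i = 0; i < count; ++i) {
        Bytes d;
        putBE(d, frameId, 4);
        putBE(d, static_cast<std::uint32_t>(i), 2);
        putBE(d, static_cast<std::uint32_t>(count), 2);
        std::size_t begin = i * kChunkPayload;
        std::size_t end = std::min(begin + kChunkPayload, frame.size());
        d.insert(d.end(), frame.begin() + begin, frame.begin() + end);
        datagrams.push_back(d);
    }
    return datagrams;
}

// Reassembles the newest frame. Frame ids are assumed to increase and never wrap.
class FrameAssembler {
public:
    // Returns true and fills `frame` when the last missing chunk arrives.
    bool add(const Bytes& datagram, Bytes& frame) {
        if (datagram.size() < kHeaderBytes) return false;
        std::uint32_t id = getBE(datagram, 0, 4);
        std::size_t index = getBE(datagram, 4, 2);
        std::size_t count = getBE(datagram, 6, 2);
        if (count == 0 || index >= count) return false;      // malformed header
        if (started_ && id < current_) return false;         // chunk of an older frame
        if (!started_ || id > current_) {                    // newer frame: start over
            started_ = true;
            current_ = id;
            expected_ = count;
            done_ = false;
            chunks_.clear();
        }
        if (done_ || count != expected_) return false;       // duplicate or inconsistent
        chunks_[index].assign(datagram.begin() + kHeaderBytes, datagram.end());
        if (chunks_.size() < expected_) return false;
        frame.clear();
        for (const auto& entry : chunks_)                     // std::map iterates in index order
            frame.insert(frame.end(), entry.second.begin(), entry.second.end());
        done_ = true;
        chunks_.clear();
        return true;
    }

private:
    bool started_ = false;
    bool done_ = false;
    std::uint32_t current_ = 0;
    std::size_t expected_ = 0;
    std::map<std::size_t, Bytes> chunks_;
};
```

The 8-byte header costs about 0.5% of each 1472-byte datagram. In exchange, a lost packet costs one chunk instead of the whole 65 KB datagram, although the frame it belongs to is still dropped.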

I wrote the C++ code myself.

This is All.h:

```cpp
#ifndef ALL_H
#define ALL_H

#include <stdio.h>
#include <stdlib.h>   // system()
#include <string.h>   // memset()
#include <winsock2.h>
#include <cv.h>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>

using namespace cv;

#pragma comment(lib,"ws2_32.lib")

#define Formatted

// Globals are defined (not just declared) in this header, which only works
// because the project has a single .cpp file.
int TCP_PORT = 6666;              // TCP port
int UDP_PORT = 7777;              // UDP port
const int BUF_SIZE = 1024 * 1024; // 1 MB receive buffer
WSADATA wsd;
SOCKET sServer;
SOCKET sClient;
SOCKADDR_IN addrServ;             // server address
char buf[BUF_SIZE];
int retVal;

#endif
```

This is TcpRun.h:

```cpp
#ifndef TCP_RUN
#define TCP_RUN

#include "All.h"

class tcprun
{
public:
    tcprun();
    ~tcprun();
    int init(int port);
private:
};

tcprun::tcprun()
{
}

int tcprun::init(int port)
{
    if (WSAStartup(MAKEWORD(2, 2), &wsd) != 0)
    {
        printf("Failed to initialise the Winsock library\n");
        return -1;
    }
    sServer = socket(AF_INET, SOCK_STREAM, IPPROTO_TCP);
    if (INVALID_SOCKET == sServer)
    {
        printf("Invalid socket\n");
        WSACleanup(); // clean up Winsock and release the resources held by the sockets
        return -1;
    }
    addrServ.sin_family = AF_INET;
    // htons converts the port from host byte order to network byte order
    // (big-endian: the high-order byte is stored at the lowest address).
    // `port` is the TCP port passed in by main(), e.g. 6666.
    addrServ.sin_port = htons(port);
    addrServ.sin_addr.s_addr = INADDR_ANY; // 0.0.0.0: accept connections on every local interface
    retVal = bind(sServer, (LPSOCKADDR)&addrServ, sizeof(SOCKADDR_IN)); // bind the socket
    if (SOCKET_ERROR == retVal)
    {
        printf("Bind failed\n");
        closesocket(sServer);
        WSACleanup();
        return -1;
    }
    retVal = listen(sServer, 1);
    if (SOCKET_ERROR == retVal)
    {
        printf("Listen failed\n");
        closesocket(sServer);
        WSACleanup();
        return -1;
    }
    sockaddr_in addrClient;
    int addrClientlen = sizeof(addrClient);
    sClient = accept(sServer, (sockaddr FAR*)&addrClient, &addrClientlen);
    if (INVALID_SOCKET == sClient)
    {
        printf("Accept failed\n");
        closesocket(sServer);
        WSACleanup();
        return -1;
    }
    char buff[1024]; // whatever the client sends (printed only to test the connection)
    memset(buff, 0, sizeof(buff));
    recv(sClient, buff, sizeof(buff) - 1, 0); // leave room for the terminating '\0'
    printf("%s\n", buff);
    closesocket(sServer);
    closesocket(sClient);
    WSACleanup(); // clean up Winsock and release the resources held by the sockets
    return 0;     // success
}

tcprun::~tcprun()
{
}

#endif
```

The `buff` in the TCP code is only there to test whether the TCP connection was established. The Android client above sends no data over TCP, so `recv()` simply returns 0 once the client closes the socket.

This is the newly wrapped UDP class, UdpAndPic.h:

```cpp
#ifndef UdpAndPic_H
#define UdpAndPic_H

#include "All.h"

class UdpAndPic
{
public:
    UdpAndPic();
    ~UdpAndPic();
    void makepic(SOCKET s, int width, int height);
    int init(int port, int width, int height);
};

UdpAndPic::UdpAndPic()
{
}

UdpAndPic::~UdpAndPic()
{
}

int UdpAndPic::init(int port, int width, int height)
{
    WORD wVersionRequested = MAKEWORD(2, 2);           // requested Winsock version 2.2
    WSADATA wsaData;
    int err = WSAStartup(wVersionRequested, &wsaData); // initialise the Winsock library
    if (err != 0) { return -1; }
    if (LOBYTE(wsaData.wVersion) != 2 || HIBYTE(wsaData.wVersion) != 2)
    {
        WSACleanup();
        return -1;
    }
    SOCKET SrvSock = socket(AF_INET, SOCK_DGRAM, 0);    // create the UDP socket
    if (INVALID_SOCKET == SrvSock)
    {
        WSACleanup();
        return -1;
    }
    SOCKADDR_IN SrvAddr;
    memset(&SrvAddr, 0, sizeof(SrvAddr));
    SrvAddr.sin_family = AF_INET;                       // address family
    SrvAddr.sin_addr.S_un.S_addr = htonl(INADDR_ANY);   // bind to 0.0.0.0 (all local interfaces)
    SrvAddr.sin_port = htons(port);                     // server port
    if (SOCKET_ERROR == bind(SrvSock, (SOCKADDR*)&SrvAddr, sizeof(SrvAddr)))
    {
        printf("UDP bind failed\n");
        closesocket(SrvSock);
        WSACleanup();
        return -1;
    }
    // Block until the first datagram (already the first JPEG frame) arrives; it is discarded
    SOCKADDR ClistAddr;
    int len = sizeof(ClistAddr);
    err = recvfrom(SrvSock, buf, BUF_SIZE, 0, &ClistAddr, &len);
    if (err == SOCKET_ERROR)
    {
        printf("%s\n", "Receive failed");
    }
    // Receive and display frames in a loop
    printf("Receiving frames...\n");
    makepic(SrvSock, width, height);
    closesocket(SrvSock); // close the socket
    WSACleanup();
    return 0;
}

void UdpAndPic::makepic(SOCKET SrvSock, int width, int height)
{
    namedWindow("video", 0); // 0: the window can be resized with the mouse; 1: fixed size
    while (true)
    {
        // Each datagram carries one complete JPEG frame; n is its length in bytes
        int n = recvfrom(SrvSock, buf, BUF_SIZE, 0, NULL, NULL);
        if (n > 0)
        {
            // Wrap the received bytes in a 1 x n single-channel matrix without copying.
            // The decoder reads the real image width/height from the JPEG header.
            CvMat mat = cvMat(1, n, CV_8UC1, buf);
            IplImage *pIplImage = cvDecodeImage(&mat, 1); // 1: decode as a 3-channel color image
            if (pIplImage != NULL)                        // NULL if the data is not a valid JPEG
            {
                cvShowImage("video", pIplImage);
                //cvResizeWindow("video", width, height); // optionally resize the window
                cvReleaseImage(&pIplImage); // the IplImage from cvDecodeImage must be released manually
            }
        }
        if (waitKey(1) == 27) // ESC (ASCII 27) quits; a 1 ms wait keeps up with the sender
            break;
    }
    destroyWindow("video");
}

#endif
```

In `makepic`, note that the `IplImage` returned by `cvDecodeImage` must be released manually. Otherwise memory leaks on every frame until the program freezes or crashes with a white window.

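Here the server receives the byte stream sent by the client into `buf`, then wraps it in a `CvMat` so the JPEG data can be decoded straight from memory with `cvDecodeImage`. OpenCV can decode JPEG by itself because it depends on libjpeg, which it uses to decompress JPEG data.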
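A JPEG that was cut off cannot be decoded. This can happen at the sender's 65,500-byte packet limit or because a receive buffer was too small. Cheaply rejecting obviously incomplete buffers before calling the decoder therefore saves work and avoids passing garbage to OpenCV. The sketch below is a heuristic. It assumes the sender emits plain JPEG streams that start with the SOI marker (`FF D8`) and end with the EOI marker (`FF D9`). `looksLikeCompleteJpeg` is a hypothetical helper, and a stream with extra bytes after EOI would be rejected.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Heuristic completeness check for an in-memory JPEG:
// it must begin with SOI (FF D8) and end with EOI (FF D9).
bool looksLikeCompleteJpeg(const std::uint8_t* data, std::size_t length) {
    if (data == nullptr || length < 4) return false;
    bool hasSoi = data[0] == 0xFF && data[1] == 0xD8;
    bool hasEoi = data[length - 2] == 0xFF && data[length - 1] == 0xD9;
    return hasSoi && hasEoi;
}
```

In the receive loop it would sit right after the datagram is read, for example `if (!looksLikeCompleteJpeg(reinterpret_cast<const std::uint8_t*>(buf), n)) continue;`, where `n` is the byte count returned by `recvfrom`.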

Finally, the main .cpp file:

```cpp
#ifndef TEST_RUN
#define TEST_RUN

#include "TcpRun.h"
#include "All.h"
#include "UdpAndPic.h"

int main()
{
    tcprun *t = new tcprun();
    UdpAndPic *u = new UdpAndPic();
    if (t->init(6666) != -1) // 6666 is the TCP port
    {
        printf("%s\n", "TCP OK, starting UDP...");
        // 7777 is the UDP port; the last two arguments are the width and height passed on to makepic
        if (u->init(7777, 640, 480) == -1)
        {
            printf("%s\n", "UDP error");
        }
    }
    else
    {
        printf("%s\n", "TCP error");
    }
    delete u;
    delete t;
    system("PAUSE");
    return 0;
}

#endif
```