Copyright notice: this is an original article by the blog author and may not be reproduced without the author's permission. https://blog.csdn.net/qq_33733970/article/details/83713726

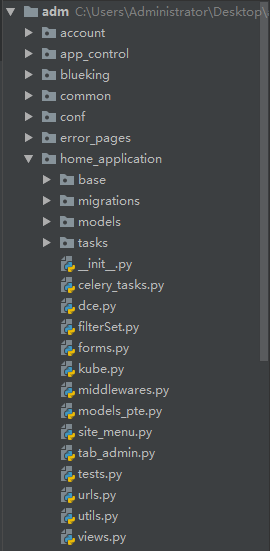

目录结构:

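First, celery_tasks.py has to sit in the root directory of the app (home_application here, going by the task name); otherwise the worker reports: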

    [2018-11-04 16:56:17,616: ERROR/MainProcess] Received unregistered task of type 'home_application.celery_tasks.update_deployment'.
    The message has been ignored and discarded.

    Did you remember to import the module containing this task?
    Or maybe you are using relative imports?
    Please see http://bit.ly/gLye1c for more information.

    The full contents of the message body was:
    {'utc': False, 'chord': None, 'args': [], 'retries': 0, 'expires': None, 'task': 'home_application.celery_tasks.update_deployment', 'callbacks': None, 'errbacks': None, 'timelimit': (None, None), 'taskset': None, 'kwargs': {'args': [u't-p', u't-p-others-t-m', 1, {'module_id': u'19', 'proj_code': u't-p'}, u'10.131.178.174/daocloud/m19:20181104.163755', 80, 1, 1073741824, u'/sample/', 'default']}, 'eta': None, 'id': '389534de-2b58-4a76-a237-be1189b724ef'} (411b)
    Traceback (most recent call last):
      File "/data/bkce/paas_agent/apps/Envs/adm/lib/python2.7/site-packages/celery/worker/consumer.py", line 455, in on_task_received
        strategies[name](message, body,
    KeyError: 'home_application.celery_tasks.update_deployment'
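The `KeyError` at the end of the traceback comes from a lookup by name: the worker maps task names to handlers and looks up the name carried in the incoming message. The sketch below mimics that lookup with a plain dictionary to show how a module imported under a different dotted path produces this error. `registry`, `register` and `dispatch` are hypothetical names used for illustration only; they are not Celery's API.

```python
# Simplified model of a worker's task registry (not Celery's real code).
registry = {}


def register(module_name):
    """Return a decorator that registers a function as '<module>.<function>'."""
    def decorator(func):
        registry[module_name + "." + func.__name__] = func
        return func
    return decorator


def dispatch(task_name, *args):
    """Look the task up by name, as a worker does for an incoming message."""
    try:
        handler = registry[task_name]
    except KeyError:
        raise KeyError("Received unregistered task of type %r" % task_name)
    return handler(*args)


# The worker imported the module as 'celery_tasks', but the caller sends
# 'home_application.celery_tasks.update_deployment': the names do not match.
@register("celery_tasks")
def update_deployment(app_name):
    return "Created " + app_name
```

Calling `dispatch("celery_tasks.update_deployment", "t-p")` succeeds, while `dispatch("home_application.celery_tasks.update_deployment", "t-p")` raises `KeyError`, which is the situation in the log above.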

Configure Celery in conf/default.py:

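    # Whether to enable Celery tasks
    IS_USE_CELERY = True

    # Message queue (RabbitMQ) settings for Celery in local development
    BROKER_URL_DEV = 'amqp://guest:guest@localhost:5672'

    # TOCHANGE: paths of the modules that contain Celery tasks.
    # List of modules to import when celery starts.
    CELERY_IMPORTS = (
        # Make sure this path is correct; otherwise the worker reports the error above
        'home_application.celery_tasks',
    )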
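Since `CELERY_IMPORTS` is a list of modules imported when the worker starts, a wrong path can be caught early by trying to import each entry. The following is a minimal sketch of such a check; `find_unimportable` is a hypothetical helper, and the sample tuple uses stand-in module names so that the snippet runs outside the project.

```python
import importlib


def find_unimportable(module_paths):
    """Return the entries of module_paths that cannot be imported."""
    failures = []
    for path in module_paths:
        try:
            importlib.import_module(path)
        except ImportError:
            failures.append(path)
    return failures


# Stand-in values so the sketch runs anywhere; in the project this would be
# the CELERY_IMPORTS tuple from conf/default.py.
candidate_imports = ("json", "no_such_package.celery_tasks")
print(find_unimportable(candidate_imports))  # ['no_such_package.celery_tasks']
```

Run from the project root with the real tuple, an empty list would suggest that the paths at least resolve.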

Then define the task directly in celery_tasks.py:

    from celery import task


    @task.task()
    def update_deployment(app_name, deployment_name, replicas, labels, image, container_port, cpu, memory, health_check_url,
                          namespace='default'):
        # some code
        pass

To run it, call the task directly from views.py:

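    from home_application.celery_tasks import update_deployment


    def deploy_image_dce(proj_code, category, name, module_id, ver, urlpath, instance_num, node_port, cpu, memory):
        # (the code that sets result, deployment_name, replicas, labels, image,
        # container_port and namespace is omitted in the original)
        if result == 'Find':
            # Run as a background task
            result = update_deployment.delay(proj_code, deployment_name, replicas, labels, image, container_port, cpu,
                                             memory, urlpath, namespace)
            the_task = update_deployment.AsyncResult(result.id)
            print_util('result.id', result.id)
            print_util('the_task.state', the_task.state)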

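Don't forget to start the Celery worker, otherwise the task stays in the PENDING state forever. I use the BlueKing (蓝鲸) deployment platform, where ticking the Celery option is enough.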
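Celery also reports PENDING for any task ID it knows nothing about, so a task that no worker ever picks up never leaves that state. The sketch below polls a state until it reaches a finished state or a timeout elapses. `wait_until_ready` and its `get_state` parameter are hypothetical names; in the view above, `get_state` could be `lambda: the_task.state`.

```python
import time

# Celery states in which a task has finished and will not change again.
READY_STATES = frozenset(["SUCCESS", "FAILURE", "REVOKED"])


def wait_until_ready(get_state, timeout=60.0, interval=1.0, sleep=time.sleep):
    """Poll get_state() until a ready state appears or the timeout elapses.

    Returns the last state seen, which is still 'PENDING' if no worker
    ever picked the task up.
    """
    waited = 0.0
    state = get_state()
    while state not in READY_STATES and waited < timeout:
        sleep(interval)
        waited += interval
        state = get_state()
    return state
```

If this returns `'PENDING'` after the timeout, the first thing to check is whether a worker is running at all. Blocking inside a request handler is rarely desirable, so this kind of loop probably fits a debugging session better than production view code.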

Finished!!!

[2018-11-04 17:42:44,524: INFO/MainProcess] Received task: home_application.celery_tasks.update_deployment[44ce5e85-68b9-4669-ad30-688e31ee3566]

[2018-11-04 17:42:44,529: WARNING/Worker-1] {'spec': {'replicas': 0}, 'metadata': {'annotations': {'kubernetes.io/change-cause': 'update replica'}}}

[2018-11-04 17:42:44,587: WARNING/Worker-1] replicas->replicas-1

[2018-11-04 17:42:44,591: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:42:49,629: WARNING/Worker-1] sleep 5

[2018-11-04 17:42:49,631: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:42:54,662: WARNING/Worker-1] sleep 5

[2018-11-04 17:42:54,663: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:42:59,695: WARNING/Worker-1] sleep 5

[2018-11-04 17:42:59,696: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:43:04,727: WARNING/Worker-1] sleep 5

[2018-11-04 17:43:04,728: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:43:09,759: WARNING/Worker-1] sleep 5

[2018-11-04 17:43:09,760: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:43:14,793: WARNING/Worker-1] sleep 5

[2018-11-04 17:43:14,794: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:43:19,830: WARNING/Worker-1] sleep 5

[2018-11-04 17:43:19,831: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:43:24,866: WARNING/Worker-1] sleep 5

[2018-11-04 17:43:24,868: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:43:24,897: WARNING/Worker-1] {'kind': 'Deployment', 'spec': {'replicas': 0, 'strategy': {'rollingUpdate': {'maxSurge': 0, 'maxUnavailable': 1}}, 'terminationGracePeriodSeconds': 66, 'template': {'spec': {'affinity': {'podAntiAffinity': {'requiredDuringSchedulingIgnoredDuringExecution': [{'labelSelector': {'matchExpressions': [{'operator': 'In', 'values': [u't-p-others-t-m'], 'key': 'name'}]}, 'topologyKey': 'kubernetes.io/hostname'}]}, 'nodeAffinity': {'preferredDuringSchedulingIgnoredDuringExecution': [{'preference': {'matchExpressions': [{'operator': 'In', 'values': ['active'], 'key': 'as.stat'}]}, 'weight': 1}]}}, 'containers': [{'livenessProbe': {'initialDelaySeconds': 60, 'httpGet': {'path': u'/sample/', 'scheme': 'HTTP', 'port': 80}, 'successThreshold': 1, 'timeoutSeconds': 5, 'failureThreshold': 5}, 'name': u't-p-others-t-m', 'image': u'10.131.178.174/daocloud/m19:20181104.163755', 'volumeMounts': [{'mountPath': '/home/tomcat/apache-tomcat-9.0.8/logs', 'name': u't-p-others-t-m-logs'}], 'readinessProbe': {'initialDelaySeconds': 60, 'httpGet': {'path': u'/sample/', 'scheme': 'HTTP', 'port': 80}, 'successThreshold': 1, 'timeoutSeconds': 5, 'failureThreshold': 5}, 'ports': [{'containerPort': 80}], 'resources': {'limits': {'cpu': 1, 'memory': 1073741824}}}], 'volumes': [{'hostPath': {'path': u'/logs/t-p-others-t-m', 'type': ''}, 'name': u't-p-others-t-m-logs'}]}, 'metadata': {'labels': {'dce.daocloud.io/component': u't-p-others-t-m', 'module_id': u'19', 'proj_code': u't-p', 'dce.daocloud.io/app': u't-p'}, 'name': u't-p-others-t-m'}}, 'revisionHistoryLimit': 10, 'selector': {'matchLabels': {'dce.daocloud.io/component': u't-p-others-t-m'}}}, 'apiVersion': 'apps/v1beta1', 'metadata': {'labels': {'dce.daocloud.io/component': u't-p-others-t-m', 'module_id': u'19', 'proj_code': u't-p', 'dce.daocloud.io/app': u't-p'}, 'name': u't-p-others-t-m'}}

[2018-11-04 17:43:24,951: WARNING/Worker-1] Created

[2018-11-04 17:43:24,952: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 17:43:25,071: WARNING/Worker-1] {'spec': {'replicas': 1}, 'metadata': {'annotations': {'kubernetes.io/change-cause': 'update replica'}}}

[2018-11-04 17:43:25,246: WARNING/Worker-1] replicas-1->replicas

[2018-11-04 17:43:25,273: INFO/MainProcess] Task home_application.celery_tasks.update_deployment[44ce5e85-68b9-4669-ad30-688e31ee3566] succeeded in 40.7457830608s: 'Created'

worker: Warm shutdown (MainProcess)

-------------- celery@adm v3.1.18 (Cipater)

---- **** -----

--- * *** * -- Linux-3.10.0-693.el7.x86_64-x86_64-with-centos-7.4.1708-Core

-- * - **** ---

- ** ---------- [config]

- ** ---------- .> app: default:0x7f7eac4238d0 (djcelery.loaders.DjangoLoader)

- ** ---------- .> transport: amqp://adm:**@10.131.59.170:5672/test_adm

- ** ---------- .> results: database

- *** --- * --- .> concurrency: {min=1, max=8} (prefork)

-- ******* ----

--- ***** ----- [queues]

-------------- .> celery exchange=celery(direct) key=celery

[tasks]

. home_application.celery_tasks.update_deployment

[2018-11-04 18:20:19,137: WARNING/MainProcess] /data/bkce/paas_agent/apps/Envs/adm/lib/python2.7/site-packages/celery/apps/worker.py:161: CDeprecationWarning:

Starting from version 3.2 Celery will refuse to accept pickle by default.

The pickle serializer is a security concern as it may give attackers

the ability to execute any command. It's important to secure

your broker from unauthorized access when using pickle, so we think

that enabling pickle should require a deliberate action and not be

the default choice.

If you depend on pickle then you should set a setting to disable this

warning and to be sure that everything will continue working

when you upgrade to Celery 3.2::

CELERY_ACCEPT_CONTENT = ['pickle', 'json', 'msgpack', 'yaml']

You must only enable the serializers that you will actually use.

warnings.warn(CDeprecationWarning(W_PICKLE_DEPRECATED))

[2018-11-04 18:20:19,152: INFO/MainProcess] Connected to amqp://adm:**@10.131.59.170:5672/test_adm

[2018-11-04 18:20:19,166: INFO/MainProcess] mingle: searching for neighbors

[2018-11-04 18:20:20,175: INFO/MainProcess] mingle: all alone

[2018-11-04 18:20:20,194: WARNING/MainProcess] celery@adm ready.

[2018-11-04 18:20:35,898: INFO/MainProcess] Received task: home_application.celery_tasks.update_deployment[2b5f1c4b-920c-4fe5-af39-6f0070ccac60]

[2018-11-04 18:20:35,901: WARNING/Worker-1] {'spec': {'replicas': 0}, 'metadata': {'annotations': {'kubernetes.io/change-cause': 'update replica'}}}

[2018-11-04 18:20:35,961: WARNING/Worker-1] replicas->replicas-1

[2018-11-04 18:20:35,963: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:20:40,997: WARNING/Worker-1] sleep 5

[2018-11-04 18:20:40,999: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:20:46,031: WARNING/Worker-1] sleep 5

[2018-11-04 18:20:46,033: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:20:51,068: WARNING/Worker-1] sleep 5

[2018-11-04 18:20:51,069: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:20:56,101: WARNING/Worker-1] sleep 5

[2018-11-04 18:20:56,102: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:21:01,132: WARNING/Worker-1] sleep 5

[2018-11-04 18:21:01,133: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:21:06,163: WARNING/Worker-1] sleep 5

[2018-11-04 18:21:06,164: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:21:11,197: WARNING/Worker-1] sleep 5

[2018-11-04 18:21:11,198: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:21:16,227: WARNING/Worker-1] sleep 5

[2018-11-04 18:21:16,228: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:21:21,264: WARNING/Worker-1] sleep 5

[2018-11-04 18:21:21,265: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:21:21,292: WARNING/Worker-1] {'kind': 'Deployment', 'spec': {'replicas': 0, 'strategy': {'rollingUpdate': {'maxSurge': 0, 'maxUnavailable': 1}}, 'terminationGracePeriodSeconds': 66, 'template': {'spec': {'affinity': {'podAntiAffinity': {'requiredDuringSchedulingIgnoredDuringExecution': [{'labelSelector': {'matchExpressions': [{'operator': 'In', 'values': [u't-p-others-t-m'], 'key': 'name'}]}, 'topologyKey': 'kubernetes.io/hostname'}]}, 'nodeAffinity': {'preferredDuringSchedulingIgnoredDuringExecution': [{'preference': {'matchExpressions': [{'operator': 'In', 'values': ['active'], 'key': 'as.stat'}]}, 'weight': 1}]}}, 'containers': [{'livenessProbe': {'initialDelaySeconds': 60, 'httpGet': {'path': u'/sample/', 'scheme': 'HTTP', 'port': 80}, 'successThreshold': 1, 'timeoutSeconds': 5, 'failureThreshold': 5}, 'name': u't-p-others-t-m', 'image': u'10.131.178.174/daocloud/m19:20181104.163755', 'volumeMounts': [{'mountPath': '/home/tomcat/apache-tomcat-9.0.8/logs', 'name': u't-p-others-t-m-logs'}], 'readinessProbe': {'initialDelaySeconds': 60, 'httpGet': {'path': u'/sample/', 'scheme': 'HTTP', 'port': 80}, 'successThreshold': 1, 'timeoutSeconds': 5, 'failureThreshold': 5}, 'ports': [{'containerPort': 80}], 'resources': {'limits': {'cpu': 1, 'memory': 1073741824}}}], 'volumes': [{'hostPath': {'path': u'/logs/t-p-others-t-m', 'type': ''}, 'name': u't-p-others-t-m-logs'}]}, 'metadata': {'labels': {'dce.daocloud.io/component': u't-p-others-t-m', 'module_id': u'19', 'proj_code': u't-p', 'dce.daocloud.io/app': u't-p'}, 'name': u't-p-others-t-m'}}, 'revisionHistoryLimit': 10, 'selector': {'matchLabels': {'dce.daocloud.io/component': u't-p-others-t-m'}}}, 'apiVersion': 'apps/v1beta1', 'metadata': {'labels': {'dce.daocloud.io/component': u't-p-others-t-m', 'module_id': u'19', 'proj_code': u't-p', 'dce.daocloud.io/app': u't-p'}, 'name': u't-p-others-t-m'}}

[2018-11-04 18:21:21,350: WARNING/Worker-1] Created

[2018-11-04 18:21:21,352: INFO/Worker-1] Starting new HTTP connection (1): 10.131.178.176

[2018-11-04 18:21:21,625: WARNING/Worker-1] {'spec': {'replicas': 1}, 'metadata': {'annotations': {'kubernetes.io/change-cause': 'update replica'}}}

[2018-11-04 18:21:21,706: WARNING/Worker-1] replicas-1->replicas

[2018-11-04 18:21:21,726: INFO/MainProcess] Task home_application.celery_tasks.update_deployment[2b5f1c4b-920c-4fe5-af39-6f0070ccac60] succeeded in 45.8252220787s: 'Created'
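The worker log above also contains a `CDeprecationWarning` about the pickle serializer. One possible way to address it, assuming every task argument is JSON-serializable, is to restrict Celery to JSON. The setting names below are the Celery 3.1 ones; that they can be placed in conf/default.py on this platform is an assumption. The argument list is copied from the error message earlier in the post.

```python
import json

# Celery 3.1 setting names; putting them in conf/default.py is an assumption.
CELERY_ACCEPT_CONTENT = ['json']
CELERY_TASK_SERIALIZER = 'json'
CELERY_RESULT_SERIALIZER = 'json'

# Arguments of the update_deployment call, copied from the error message.
task_args = [
    't-p', 't-p-others-t-m', 1,
    {'module_id': '19', 'proj_code': 't-p'},
    '10.131.178.174/daocloud/m19:20181104.163755',
    80, 1, 1073741824, '/sample/', 'default',
]

# JSON only works as a serializer if the arguments survive a round trip.
round_tripped = json.loads(json.dumps(task_args))
print(round_tripped == task_args)  # prints True
```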