1. Implementing a map-side left join with the distributed cache

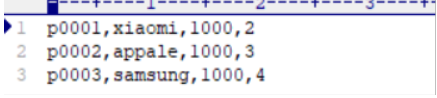

Contents of the cache file pdts.txt:

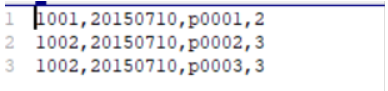

Contents of the orders.txt file
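Before the Hadoop code, the intended left-join semantics can be sketched in plain Java with no Hadoop dependency. This is only an illustration under assumptions taken from the mapper in section 1.2: pdts.txt lines have four comma-separated fields with the product id first, and orders.txt lines are `orderId,date,pdId,amount`. The class name `LeftJoinSketch`, the helper names, and the sample records are hypothetical and are not taken from the real files.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class LeftJoinSketch {

    // Build productId -> "field1\tfield2\tfield3" from comma-separated product lines.
    static Map<String, String> buildLookup(List<String> productLines) {
        Map<String, String> lookup = new HashMap<>();
        for (String line : productLines) {
            String[] f = line.split(",");
            if (f.length < 4) {
                continue; // assumption: malformed lines are skipped rather than failing
            }
            lookup.put(f[0], f[1] + "\t" + f[2] + "\t" + f[3]);
        }
        return lookup;
    }

    // Left join: every order produces exactly one output line, matched or not.
    static List<String> join(List<String> orderLines, Map<String, String> lookup) {
        List<String> out = new ArrayList<>();
        for (String line : orderLines) {
            String[] f = line.split(",");
            String productInfo = lookup.get(f[2]); // null when the product id is unknown
            out.add(f[0] + "\t" + f[1] + "\t" + productInfo + "\t" + f[3]);
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical sample records, invented for this sketch.
        List<String> products = Arrays.asList("p1,phone,c01,1000", "p2,laptop,c02,5000");
        List<String> orders = Arrays.asList("o1,20240101,p1,2", "o2,20240102,p9,1");
        for (String row : join(orders, buildLookup(products))) {
            System.out.println(row);
        }
    }
}
```

The small table is held entirely in memory and the large table is streamed past it one record at a time, which is why the join needs no reduce phase.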

1.1) Register the file to be cached in the driver program:

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.filecache.DistributedCache;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

import java.net.URI;

public class MapSideJoin extends Configured implements Tool {

    @Override
    public int run(String[] args) throws Exception {
        Configuration conf = super.getConf();

        // Note: the cache file must be placed on HDFS; a file on the local
        // file system cannot be loaded. pdts.txt is stored on HDFS here.
        // DistributedCache is deprecated in Hadoop 2.x and later, where
        // Job.addCacheFile(URI) is the newer equivalent.
        DistributedCache.addCacheFile(new URI("hdfs://node01:8020/mr/cachefile/pdts.txt"), conf);

        Job job = Job.getInstance(conf, MapSideJoin.class.getSimpleName());
        job.setJarByClass(MapSideJoin.class);

        // Input: the orders file
        job.setInputFormatClass(TextInputFormat.class);
        TextInputFormat.addInputPath(job, new Path("file:///E:\\input\\orders.txt"));

        // Map-only join: no reducer class is set
        job.setMapperClass(JoinMap.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);

        // Output: the joined records
        job.setOutputFormatClass(TextOutputFormat.class);
        TextOutputFormat.setOutputPath(job, new Path("file:///E:\\cacheout1"));

        boolean b = job.waitForCompletion(true);
        return b ? 0 : 1;
    }

    public static void main(String[] args) throws Exception {
        Configuration configuration = new Configuration();
        int run = ToolRunner.run(configuration, new MapSideJoin(), args);
        System.exit(run);
    }

}

1.2) In the custom Mapper, obtain the cache file through the context object

import org.apache.hadoop.filecache.DistributedCache;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;

/**
 * Map-side join against the cached product data.
 */
public class JoinMap extends Mapper<LongWritable, Text, Text, Text> {

    // Product id -> product details, loaded once per map task from pdts.txt
    HashMap<String, String> b_tab = new HashMap<String, String>();

    /**
     * Map-side initialization method. The cache file is read here and loaded
     * into the in-memory map in one pass.
     * DistributedCache.getCacheFiles returns the URIs of the cache files
     * registered in the job configuration.
     */
    @Override
    public void setup(Context context) throws IOException, InterruptedException {
        // URIs of all registered cache files
        URI[] cacheFiles = DistributedCache.getCacheFiles(context.getConfiguration());

        // Resolve the HDFS file system from the URI and open the cache file
        FileSystem fileSystem = FileSystem.get(cacheFiles[0], context.getConfiguration());
        FSDataInputStream open = fileSystem.open(new Path(cacheFiles[0]));

        // Close only the reader. FileSystem.get returns a shared, cached
        // instance, so calling fileSystem.close() here can break other code
        // in the same JVM that reads from that file system.
        try (BufferedReader bufferedReader =
                     new BufferedReader(new InputStreamReader(open, StandardCharsets.UTF_8))) {
            String line;
            while ((line = bufferedReader.readLine()) != null) {
                String[] split = line.split(",");
                b_tab.put(split[0], split[1] + "\t" + split[2] + "\t" + split[3]);
            }
        }
    }

    @Override
    public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // value is one line of the input split assigned to this map task (orders.txt)
        String[] fields = value.toString().split(",");
        String orderId = fields[0];
        String date = fields[1];
        String pdId = fields[2];
        String amount = fields[3];

        // Look up the product details in the in-memory map;
        // this is null when the product id has no match
        String productInfo = b_tab.get(pdId);

        context.write(new Text(orderId), new Text(date + "\t" + productInfo + "\t" + amount));
    }

}

2) Run the job to get the result file cacheout1, with the following contents
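Because this is a left join, an order whose product id is missing from pdts.txt still appears in the result. With the mapper in section 1.2, Java string concatenation writes the literal text `null` in place of the three product fields, so such a line has four tab-separated fields instead of six. The following is a minimal sketch of how those lines could be spotted when inspecting the result; the class `ResultCheckSketch`, its methods, and the sample lines are hypothetical, and the field counts assume the record layout implied by the mapper.

```java
import java.util.Arrays;
import java.util.List;

class ResultCheckSketch {

    // True when a result line came from an order with no matching product:
    // orderId, date, the literal "null", amount.
    static boolean isUnmatched(String resultLine) {
        String[] f = resultLine.split("\t");
        return f.length == 4 && "null".equals(f[2]);
    }

    // Count the unmatched orders in a list of result lines.
    static long countUnmatched(List<String> resultLines) {
        long count = 0;
        for (String line : resultLines) {
            if (isUnmatched(line)) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        // Hypothetical result lines, invented for this sketch.
        List<String> sample = Arrays.asList(
                "o1\t20240101\tphone\tc01\t1000\t2",
                "o2\t20240102\tnull\t1");
        System.out.println("unmatched orders: " + countUnmatched(sample));
    }
}
```

If a literal `null` is not wanted in the output, the mapper could substitute a placeholder string before writing the record; that is a design choice and not something the original code does.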