General workflow for scraping web pages

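This may look cumbersome, but every web scraping job comes down to these four steps. To scrape more complex pages later, you only need to build on this foundation.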

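import os

import requests


class Qiushi:
    """Download listing pages from Qiushibaike and save them as HTML files."""

    # Initializer
    def __init__(self, name):
        # Label used as the prefix of every saved file name
        self.name = name
        # URL template; {} is filled with the page number
        self.url_base = 'https://www.qiushibaike.com/8hr/page/{}/'
        # Browser-like User-Agent sent with every request
        self.headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0'}

    def make_url(self):
        """
        Build the list of URLs to download.
        :return: list of page URLs
        """
        # Crawl the first ten pages of Qiushibaike
        return [self.url_base.format(i) for i in range(1, 11)]

    def download(self, url_str):
        """
        Download the given page with requests.get() and return its content.
        :param url_str: URL of the page to download
        :return: response body as bytes
        """
        # The timeout keeps a stalled connection from blocking the crawl forever
        result = requests.get(url_str, headers=self.headers, timeout=10)
        return result.content

    def save_content(self, html_content, page_num):
        """
        Save the downloaded content as an HTML file.
        :param html_content: page content as bytes
        :param page_num: page number used in the file name
        :return: None
        """
        # Create the download folder if it does not exist, then store the page in it
        os.makedirs('./download', exist_ok=True)
        # File name pattern: <name>--第<page_num>页.html ("第N页" means "page N")
        file_name = './download/' + '{}--第{}页.html'.format(self.name, page_num)
        with open(file_name, 'wb') as fb:
            fb.write(html_content)

    def run(self):
        """
        Main download routine that ties the steps together.
        :return: None
        """
        # Get all the links
        url_lists = self.make_url()
        # enumerate supplies the page number that matches each URL
        for page_num, url in enumerate(url_lists, start=1):
            html_content = self.download(url)
            self.save_content(html_content, page_num)


if __name__ == '__main__':
    qiushi = Qiushi('糗事百科')
    qiushi.run()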
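
The URL generation and page numbering can be checked without touching the network. The sketch below reuses the `url_base` template from the class and pairs each URL with its page number through `enumerate`, which avoids searching the list again for every URL. The helper name `numbered_urls` and its `first_page` / `last_page` parameters are hypothetical and not part of the original class.

```python
URL_BASE = 'https://www.qiushibaike.com/8hr/page/{}/'


def numbered_urls(url_base, first_page=1, last_page=10):
    """Return (page_num, url) pairs for first_page..last_page inclusive."""
    urls = [url_base.format(i) for i in range(first_page, last_page + 1)]
    # enumerate(start=...) yields the page number directly for each URL
    return list(enumerate(urls, start=first_page))


pairs = numbered_urls(URL_BASE)
print(len(pairs))  # 10
print(pairs[0])    # (1, 'https://www.qiushibaike.com/8hr/page/1/')
```

Running this before the real crawl is a cheap way to confirm that the page range and the URL template are what you expect.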

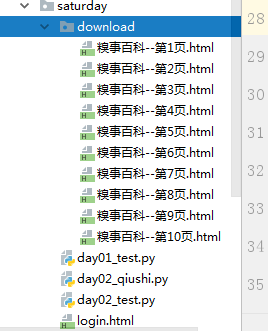

Result after a successful crawl
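
As one possible illustration of building on this foundation, a parsing step could be slotted in between downloading and saving. The sketch below is only an assumption about what such a step might look like: it uses the standard library's `html.parser` to pull the `<title>` text out of the bytes that `download` returns. The names `TitleParser` and `extract_title` are hypothetical, and the page is assumed to be UTF-8 encoded.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self._done = False
        self.title = ''

    def handle_starttag(self, tag, attrs):
        # Only the first <title> is of interest
        if tag == 'title' and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == 'title' and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        # Text may arrive in several chunks, so accumulate it
        if self._in_title:
            self.title += data


def extract_title(html_content, encoding='utf-8'):
    """Return the stripped <title> text of a page given as bytes ('' if none)."""
    parser = TitleParser()
    # Assumption: the page is UTF-8; undecodable bytes are replaced, not fatal
    parser.feed(html_content.decode(encoding, errors='replace'))
    parser.close()
    return parser.title.strip()


sample = b'<html><head><title> Demo page </title></head><body></body></html>'
print(extract_title(sample))  # Demo page
```

In the class, `run` could call such a function on `html_content` right after `download`, for example to log which page was fetched. For real extraction work a dedicated HTML parsing library would likely be more convenient, but the control flow would stay the same.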