今天下载了最新版本的4.2.0的内核进行阅读。

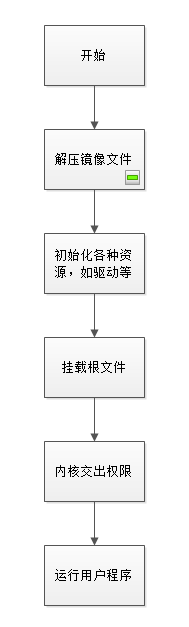

这是我理解的流程图

首先开始解压zImage内核镜像文件。代码在linux4.2.0/arch/arm/boot/compress/head.S中

首先我们找到程序的入口

start:

.type start,#function

.rept 7

__nop

.endr

#ifndef CONFIG_THUMB2_KERNEL

mov r0, r0

#else

AR_CLASS( sub pc, pc, #3 ) @ A/R: switch to Thumb2 mode

M_CLASS( nop.w ) @ M: already in Thumb2 mode

.thumb

#endif

W(b) 1f

.word _magic_sig @ Magic numbers to help the loader

.word _magic_start @ absolute load/run zImage address

.word _magic_end @ zImage end address

.word 0x04030201 @ endianness flag

.word 0x45454545 @ another magic number to indicate

.word _magic_table @ additional data table

__EFI_HEADER

1:

ARM_BE8( setend be ) @ go BE8 if compiled for BE8

AR_CLASS( mrs r9, cpsr )

#ifdef CONFIG_ARM_VIRT_EXT

bl __hyp_stub_install @ get into SVC mode, reversibly

#endif

mov r7, r1 @ save architecture ID

mov r8, r2 @ save atags pointer

#ifndef CONFIG_CPU_V7M

/*

* Booting from Angel - need to enter SVC mode and disable

* FIQs/IRQs (numeric definitions from angel arm.h source).

* We only do this if we were in user mode on entry.

*/

mrs r2, cpsr @ get current mode

tst r2, #3 @ not user?

bne not_angel

mov r0, #0x17 @ angel_SWIreason_EnterSVC

ARM( swi 0x123456 ) @ angel_SWI_ARM

THUMB( svc 0xab ) @ angel_SWI_THUMB

not_angel:

safe_svcmode_maskall r0

msr spsr_cxsf, r9 @ Save the CPU boot mode in

@ SPSR

#endif在该文件夹下的makefile中去寻找我们的define,找到的定义如下:

ifeq ($(CONFIG_ARM_VIRT_EXT),y)

OBJS += hyp-stub.o

endif开始将机器码ID存入R7中,将参数数据存入R8中。因为没有定义CONFIG_CPU_V7M所以进入SVC模式。

SVC的进入接口safe+svcmode_maskall在linux4.2.0/arch/arm/include/asm/assembler.h

.macro safe_svcmode_maskall reg:req

#if __LINUX_ARM_ARCH__ >= 6 && !defined(CONFIG_CPU_V7M)

mrs \reg , cpsr

eor \reg, \reg, #HYP_MODE

tst \reg, #MODE_MASK

bic \reg , \reg , #MODE_MASK

orr \reg , \reg , #PSR_I_BIT | PSR_F_BIT | SVC_MODE

THUMB( orr \reg , \reg , #PSR_T_BIT )

bne 1f

orr \reg, \reg, #PSR_A_BIT

badr lr, 2f

msr spsr_cxsf, \reg

__MSR_ELR_HYP(14)

__ERET

1: msr cpsr_c, \reg

2:

#else

/*

* workaround for possibly broken pre-v6 hardware

* (akita, Sharp Zaurus C-1000, PXA270-based)

*/

setmode PSR_F_BIT | PSR_I_BIT | SVC_MODE, \reg

#endif

.endm在进入SVC模式时会关闭FIQ/IRQ。

接下来我们继续顺序执行程序

.text

#ifdef CONFIG_AUTO_ZRELADDR

/*

* Find the start of physical memory. As we are executing

* without the MMU on, we are in the physical address space.

* We just need to get rid of any offset by aligning the

* address.

*

* This alignment is a balance between the requirements of

* different platforms - we have chosen 128MB to allow

* platforms which align the start of their physical memory

* to 128MB to use this feature, while allowing the zImage

* to be placed within the first 128MB of memory on other

* platforms. Increasing the alignment means we place

* stricter alignment requirements on the start of physical

* memory, but relaxing it means that we break people who

* are already placing their zImage in (eg) the top 64MB

* of this range.

*/

mov r4, pc

and r4, r4, #0xf8000000

/* Determine final kernel image address. */

add r4, r4, #TEXT_OFFSET

#else

ldr r4, =zreladdr

#endif在该文件夹下的makefile并没有发现定义了CONFIG_AUTO_ZERLADDR。所以直接将镜像文件的地址设置为zreladdr

然后继续执行代码

/*

* Set up a page table only if it won't overwrite ourself.

* That means r4 < pc || r4 - 16k page directory > &_end.

* Given that r4 > &_end is most unfrequent, we add a rough

* additional 1MB of room for a possible appended DTB.

*/

mov r0, pc

cmp r0, r4

ldrcc r0, LC0+32

addcc r0, r0, pc

cmpcc r4, r0

orrcc r4, r4, #1 @ remember we skipped cache_on

blcs cache_on

restart: adr r0, LC0

ldmia r0, {r1, r2, r3, r6, r10, r11, r12}

ldr sp, [r0, #28]

/*

* We might be running at a different address. We need

* to fix up various pointers.

*/

sub r0, r0, r1 @ calculate the delta offset

add r6, r6, r0 @ _edata

add r10, r10, r0 @ inflated kernel size location

/*

* The kernel build system appends the size of the

* decompressed kernel at the end of the compressed data

* in little-endian form.

*/

ldrb r9, [r10, #0]

ldrb lr, [r10, #1]

orr r9, r9, lr, lsl #8

ldrb lr, [r10, #2]

ldrb r10, [r10, #3]

orr r9, r9, lr, lsl #16

orr r9, r9, r10, lsl #24

#ifndef CONFIG_ZBOOT_ROM

/* malloc space is above the relocated stack (64k max) */

add sp, sp, r0

add r10, sp, #0x10000

#else

/*

* With ZBOOT_ROM the bss/stack is non relocatable,

* but someone could still run this code from RAM,

* in which case our reference is _edata.

*/

mov r10, r6

#endif

mov r5, #0 @ init dtb size to 0

#ifdef CONFIG_ARM_APPENDED_DTB

/*

* r0 = delta

* r2 = BSS start

* r3 = BSS end

* r4 = final kernel address (possibly with LSB set)

* r5 = appended dtb size (still unknown)

* r6 = _edata

* r7 = architecture ID

* r8 = atags/device tree pointer

* r9 = size of decompressed image

* r10 = end of this image, including bss/stack/malloc space if non XIP

* r11 = GOT start

* r12 = GOT end

* sp = stack pointer

*

* if there are device trees (dtb) appended to zImage, advance r10 so that the

* dtb data will get relocated along with the kernel if necessary.

*/判断内核解压地址是否在镜像文件的地址,是则pc指针地址直接赋值给r0,如果不是则创建一个页表。之后初始化缓存,计算镜像文件地址。

然后继续向下

mov r8, r6 @ use the appended device tree

/*

* Make sure that the DTB doesn't end up in the final

* kernel's .bss area. To do so, we adjust the decompressed

* kernel size to compensate if that .bss size is larger

* than the relocated code.

*/

ldr r5, =_kernel_bss_size

adr r1, wont_overwrite

sub r1, r6, r1

subs r1, r5, r1

addhi r9, r9, r1

/* Get the current DTB size */

ldr r5, [r6, #4]

#ifndef __ARMEB__

/* convert r5 (dtb size) to little endian */

eor r1, r5, r5, ror #16

bic r1, r1, #0x00ff0000

mov r5, r5, ror #8

eor r5, r5, r1, lsr #8

#endif

/* preserve 64-bit alignment */

add r5, r5, #7

bic r5, r5, #7

/* relocate some pointers past the appended dtb */

add r6, r6, r5

add r10, r10, r5

add sp, sp, r5这里重新调整设备树

/*

* Check to see if we will overwrite ourselves.

* r4 = final kernel address (possibly with LSB set)

* r9 = size of decompressed image

* r10 = end of this image, including bss/stack/malloc space if non XIP

* We basically want:

* r4 - 16k page directory >= r10 -> OK

* r4 + image length <= address of wont_overwrite -> OK

* Note: the possible LSB in r4 is harmless here.

*/

add r10, r10, #16384

cmp r4, r10

bhs wont_overwrite

add r10, r4, r9

adr r9, wont_overwrite

cmp r10, r9

bls wont_overwrite这部分代码用来分析当前代码是否会和最后的解压部分重叠,如果有重叠则需要执行代码搬移,判断r4,r9,r10是否需要代码搬运,需要则进行代码搬运。

/*

* Relocate ourselves past the end of the decompressed kernel.

* r6 = _edata

* r10 = end of the decompressed kernel

* Because we always copy ahead, we need to do it from the end and go

* backward in case the source and destination overlap.

*/

/*

* Bump to the next 256-byte boundary with the size of

* the relocation code added. This avoids overwriting

* ourself when the offset is small.

*/

add r10, r10, #((reloc_code_end - restart + 256) & ~255)

bic r10, r10, #255

/* Get start of code we want to copy and align it down. */

adr r5, restart

bic r5, r5, #31

/* Relocate the hyp vector base if necessary */然后进行代码搬移地址的扩展

sub r9, r6, r5 @ size to copy

add r9, r9, #31 @ rounded up to a multiple

bic r9, r9, #31 @ ... of 32 bytes

add r6, r9, r5

add r9, r9, r10

bl cache_clean_flush

badr r0, restart

add r0, r0, r6

mov pc, r0这里首先计算出需要搬运的大小保存到r9中,搬运的原结束地址到r6中,搬运的目的结束地址到r9中。注意这里只搬运代码段和数据段,并不包含bss、栈和堆空间。cache_clean_flush清除缓存然后正式开始搬运

然后继续运行程序

wont_overwrite:

/*

* If delta is zero, we are running at the address we were linked at.

* r0 = delta

* r2 = BSS start

* r3 = BSS end

* r4 = kernel execution address (possibly with LSB set)

* r5 = appended dtb size (0 if not present)

* r7 = architecture ID

* r8 = atags pointer

* r11 = GOT start

* r12 = GOT end

* sp = stack pointer

*/

orrs r1, r0, r5

beq not_relocated

add r11, r11, r0

add r12, r12, r0

如果r0为0则说明当前运行的地址就是链接地址,无需进行重定位,跳转到not_relocated执行,但是这里运行的地址已经被移动到内核解压地址之后跳转到not_relocated

not_relocated: mov r0, #0

1: str r0, [r2], #4 @ clear bss

str r0, [r2], #4

str r0, [r2], #4

str r0, [r2], #4

cmp r2, r3

blo 1b

/*

* Did we skip the cache setup earlier?

* That is indicated by the LSB in r4.

* Do it now if so.

*/

tst r4, #1

bic r4, r4, #1

blne cache_on

/*

* The C runtime environment should now be setup sufficiently.

* Set up some pointers, and start decompressing.

* r4 = kernel execution address

* r7 = architecture ID

* r8 = atags pointer

*/

mov r0, r4

mov r1, sp @ malloc space above stack

add r2, sp, #0x10000 @ 64k max

mov r3, r7

bl decompress_kernel

bl cache_clean_flush

bl cache_off

#ifdef CONFIG_ARM_VIRT_EXT

mrs r0, spsr @ Get saved CPU boot mode

and r0, r0, #MODE_MASK

cmp r0, #HYP_MODE @ if not booted in HYP mode...

bne __enter_kernel @ boot kernel directly

adr r12, .L__hyp_reentry_vectors_offset

ldr r0, [r12]

add r0, r0, r12

bl __hyp_set_vectors

__HVC(0) @ otherwise bounce to hyp mode

b . @ should never be reached

.align 2

.L__hyp_reentry_vectors_offset: .long __hyp_reentry_vectors - .

#else

b __enter_kernel

#endif

__enter_kernel:

mov r0, #0 @ must be 0

mov r1, r7 @ restore architecture number

mov r2, r8 @ restore atags pointer

ARM( mov pc, r4 ) @ call kernel

M_CLASS( add r4, r4, #1 ) @ enter in Thumb mode for M class

THUMB( bx r4 ) @ entry point is always ARM for A/R classes

然后解压镜像文件,刷新缓存,关闭缓存,然后进入内核入口。之后我们就转到内核中去。在文件linux-4.20/arch/arm/kernel/head.S中。

其实我还是很多地方不懂,等学到一定程度再回来复习吧。我是参考ARM Linux启动流程分析——内核自解压阶段

这是进入内核的启动流程

__HEAD

ENTRY(stext)

ARM_BE8(setend be ) @ ensure we are in BE8 mode

THUMB( badr r9, 1f ) @ Kernel is always entered in ARM.

THUMB( bx r9 ) @ If this is a Thumb-2 kernel,

THUMB( .thumb ) @ switch to Thumb now.

THUMB(1: )

#ifdef CONFIG_ARM_VIRT_EXT

bl __hyp_stub_install

#endif

@ ensure svc mode and all interrupts masked

safe_svcmode_maskall r9

一进入内核我们就先进入SVC模式和关闭中断。

mrc p15, 0, r9, c0, c0 @ get processor id

bl __lookup_processor_type @ r5=procinfo r9=cpuid

movs r10, r5 @ invalid processor (r5=0)?

THUMB( it eq ) @ force fixup-able long branch encoding

beq __error_p @ yes, error 'p'

然后进行处理器类型检测,成功再进行下一步

#ifndef CONFIG_XIP_KERNEL

adr r3, 2f

ldmia r3, {r4, r8}

sub r4, r3, r4 @ (PHYS_OFFSET - PAGE_OFFSET)

add r8, r8, r4 @ PHYS_OFFSET

#else

ldr r8, =PLAT_PHYS_OFFSET @ always constant in this case

#endif

网络初始化

/*

* r1 = machine no, r2 = atags or dtb,

* r8 = phys_offset, r9 = cpuid, r10 = procinfo

*/

bl __vet_atags

#ifdef CONFIG_SMP_ON_UP

bl __fixup_smp

#endif

#ifdef CONFIG_ARM_PATCH_PHYS_VIRT

bl __fixup_pv_table

#endif

bl __create_page_tables创建核心页

ldr r13, =__mmap_switched @ address to jump to after

@ mmu has been enabled

badr lr, 1f @ return (PIC) address

#ifdef CONFIG_ARM_LPAE

mov r5, #0 @ high TTBR0

mov r8, r4, lsr #12 @ TTBR1 is swapper_pg_dir pfn

#else

mov r8, r4 @ set TTBR1 to swapper_pg_dir

#endif

ldr r12, [r10, #PROCINFO_INITFUNC]

add r12, r12, r10

ret r12

1: b __enable_mmu

ENDPROC(stext)

.ltorg

#ifndef CONFIG_XIP_KERNEL

2: .long .

.long PAGE_OFFSET

#endif/*

* Setup common bits before finally enabling the MMU. Essentially

* this is just loading the page table pointer and domain access

* registers. All these registers need to be preserved by the

* processor setup function (or set in the case of r0)

*

* r0 = cp#15 control register

* r1 = machine ID

* r2 = atags or dtb pointer

* r4 = TTBR pointer (low word)

* r5 = TTBR pointer (high word if LPAE)

* r9 = processor ID

* r13 = *virtual* address to jump to upon completion

*/

__enable_mmu:

#if defined(CONFIG_ALIGNMENT_TRAP) && __LINUX_ARM_ARCH__ < 6

orr r0, r0, #CR_A

#else

bic r0, r0, #CR_A

#endif

#ifdef CONFIG_CPU_DCACHE_DISABLE

bic r0, r0, #CR_C

#endif

#ifdef CONFIG_CPU_BPREDICT_DISABLE

bic r0, r0, #CR_Z

#endif

#ifdef CONFIG_CPU_ICACHE_DISABLE

bic r0, r0, #CR_I

#endif

#ifdef CONFIG_ARM_LPAE

mcrr p15, 0, r4, r5, c2 @ load TTBR0

#else

mov r5, #DACR_INIT

mcr p15, 0, r5, c3, c0, 0 @ load domain access register

mcr p15, 0, r4, c2, c0, 0 @ load page table pointer

#endif

b __turn_mmu_on

ENDPROC(__enable_mmu)/*

* Enable the MMU. This completely changes the structure of the visible

* memory space. You will not be able to trace execution through this.

* If you have an enquiry about this, *please* check the linux-arm-kernel

* mailing list archives BEFORE sending another post to the list.

*

* r0 = cp#15 control register

* r1 = machine ID

* r2 = atags or dtb pointer

* r9 = processor ID

* r13 = *virtual* address to jump to upon completion

*

* other registers depend on the function called upon completion

*/

.align 5

.pushsection .idmap.text, "ax"

ENTRY(__turn_mmu_on)

mov r0, r0

instr_sync

mcr p15, 0, r0, c1, c0, 0 @ write control reg

mrc p15, 0, r3, c0, c0, 0 @ read id reg

instr_sync

mov r3, r3

mov r3, r13

ret r3

__turn_mmu_on_end:

ENDPROC(__turn_mmu_on)这里进行mmu的使能,然后返回R13的地址,让我们来看看r13到底是什么,我们一点点网上找

mov r13, r12 @ __secondary_switched addressadr r4, __secondary_data

ldmia r4, {r5, r7, r12} @ address to jump to after就是_secondary_data的地址,然后我们继续代码的运行

__secondary_data:

.long .

.long secondary_data

.long __secondary_switchedENTRY(__secondary_switched)

ldr sp, [r7, #12] @ get secondary_data.stack

mov fp, #0

b secondary_start_kernel

ENDPROC(__secondary_switched)这样我们就运行到代码的内核启动了,开始代码在init/main.c下面,因为已经初始化栈和指针所以这部分是c语言写的

asmlinkage __visible void __init start_kernel(void)

{

char *command_line;

char *after_dashes;

set_task_stack_end_magic(&init_task);

smp_setup_processor_id();

debug_objects_early_init();

cgroup_init_early();

local_irq_disable();

early_boot_irqs_disabled = true;

/*

* Interrupts are still disabled. Do necessary setups, then

* enable them.

*/

boot_cpu_init();

page_address_init();

pr_notice("%s", linux_banner);

setup_arch(&command_line);

/*

* Set up the the initial canary and entropy after arch

* and after adding latent and command line entropy.

*/

add_latent_entropy();

add_device_randomness(command_line, strlen(command_line));

boot_init_stack_canary();

mm_init_cpumask(&init_mm);

setup_command_line(command_line);

setup_nr_cpu_ids();

setup_per_cpu_areas();

smp_prepare_boot_cpu(); /* arch-specific boot-cpu hooks */

boot_cpu_hotplug_init();

build_all_zonelists(NULL);

page_alloc_init();

pr_notice("Kernel command line: %s\n", boot_command_line);

parse_early_param();

after_dashes = parse_args("Booting kernel",

static_command_line, __start___param,

__stop___param - __start___param,

-1, -1, NULL, &unknown_bootoption);

if (!IS_ERR_OR_NULL(after_dashes))

parse_args("Setting init args", after_dashes, NULL, 0, -1, -1,

NULL, set_init_arg);

jump_label_init();

/*

* These use large bootmem allocations and must precede

* kmem_cache_init()

*/

setup_log_buf(0);

vfs_caches_init_early();

sort_main_extable();

trap_init();

mm_init();

ftrace_init();

/* trace_printk can be enabled here */

early_trace_init();

/*

* Set up the scheduler prior starting any interrupts (such as the

* timer interrupt). Full topology setup happens at smp_init()

* time - but meanwhile we still have a functioning scheduler.

*/

sched_init();

/*

* Disable preemption - early bootup scheduling is extremely

* fragile until we cpu_idle() for the first time.

*/

preempt_disable();

if (WARN(!irqs_disabled(),

"Interrupts were enabled *very* early, fixing it\n"))

local_irq_disable();

radix_tree_init();

/*

* Set up housekeeping before setting up workqueues to allow the unbound

* workqueue to take non-housekeeping into account.

*/

housekeeping_init();

/*

* Allow workqueue creation and work item queueing/cancelling

* early. Work item execution depends on kthreads and starts after

* workqueue_init().

*/

workqueue_init_early();

rcu_init();

/* Trace events are available after this */

trace_init();

if (initcall_debug)

initcall_debug_enable();

context_tracking_init();

/* init some links before init_ISA_irqs() */

early_irq_init();

init_IRQ();

tick_init();

rcu_init_nohz();

init_timers();

hrtimers_init();

softirq_init();

timekeeping_init();

time_init();

printk_safe_init();

perf_event_init();

profile_init();

call_function_init();

WARN(!irqs_disabled(), "Interrupts were enabled early\n");

early_boot_irqs_disabled = false;

local_irq_enable();

kmem_cache_init_late();

/*

* HACK ALERT! This is early. We're enabling the console before

* we've done PCI setups etc, and console_init() must be aware of

* this. But we do want output early, in case something goes wrong.

*/

console_init();

if (panic_later)

panic("Too many boot %s vars at `%s'", panic_later,

panic_param);

lockdep_init();

/*

* Need to run this when irqs are enabled, because it wants

* to self-test [hard/soft]-irqs on/off lock inversion bugs

* too:

*/

locking_selftest();

/*

* This needs to be called before any devices perform DMA

* operations that might use the SWIOTLB bounce buffers. It will

* mark the bounce buffers as decrypted so that their usage will

* not cause "plain-text" data to be decrypted when accessed.

*/

mem_encrypt_init();

#ifdef CONFIG_BLK_DEV_INITRD

if (initrd_start && !initrd_below_start_ok &&

page_to_pfn(virt_to_page((void *)initrd_start)) < min_low_pfn) {

pr_crit("initrd overwritten (0x%08lx < 0x%08lx) - disabling it.\n",

page_to_pfn(virt_to_page((void *)initrd_start)),

min_low_pfn);

initrd_start = 0;

}

#endif

page_ext_init();

kmemleak_init();

debug_objects_mem_init();

setup_per_cpu_pageset();

numa_policy_init();

acpi_early_init();

if (late_time_init)

late_time_init();

sched_clock_init();

calibrate_delay();

pid_idr_init();

anon_vma_init();

#ifdef CONFIG_X86

if (efi_enabled(EFI_RUNTIME_SERVICES))

efi_enter_virtual_mode();

#endif

thread_stack_cache_init();

cred_init();

fork_init();

proc_caches_init();

uts_ns_init();

buffer_init();

key_init();

security_init();

dbg_late_init();

vfs_caches_init();

pagecache_init();

signals_init();

seq_file_init();

proc_root_init();

nsfs_init();

cpuset_init();

cgroup_init();

taskstats_init_early();

delayacct_init();

check_bugs();

acpi_subsystem_init();

arch_post_acpi_subsys_init();

sfi_init_late();

if (efi_enabled(EFI_RUNTIME_SERVICES)) {

efi_free_boot_services();

}

/* Do the rest non-__init'ed, we're now alive */

arch_call_rest_init();

}

先将各种资源初始化好后最后调用进程初始化。arch_call_rest_init()里面是这样的

void __init __weak arch_call_rest_init(void)

{

rest_init();

}

noinline void __ref rest_init(void)

{

struct task_struct *tsk;

int pid;

rcu_scheduler_starting();

/*

* We need to spawn init first so that it obtains pid 1, however

* the init task will end up wanting to create kthreads, which, if

* we schedule it before we create kthreadd, will OOPS.

*/

pid = kernel_thread(kernel_init, NULL, CLONE_FS);

/*

* Pin init on the boot CPU. Task migration is not properly working

* until sched_init_smp() has been run. It will set the allowed

* CPUs for init to the non isolated CPUs.

*/

rcu_read_lock();

tsk = find_task_by_pid_ns(pid, &init_pid_ns);

set_cpus_allowed_ptr(tsk, cpumask_of(smp_processor_id()));

rcu_read_unlock();

numa_default_policy();

pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);

rcu_read_lock();

kthreadd_task = find_task_by_pid_ns(pid, &init_pid_ns);

rcu_read_unlock();

/*

* Enable might_sleep() and smp_processor_id() checks.

* They cannot be enabled earlier because with CONFIG_PREEMPT=y

* kernel_thread() would trigger might_sleep() splats. With

* CONFIG_PREEMPT_VOLUNTARY=y the init task might have scheduled

* already, but it's stuck on the kthreadd_done completion.

*/

system_state = SYSTEM_SCHEDULING;

complete(&kthreadd_done);

/*

* The boot idle thread must execute schedule()

* at least once to get things moving:

*/

schedule_preempt_disabled();

/* Call into cpu_idle with preempt disabled */

cpu_startup_entry(CPUHP_ONLINE);

}内核启动

static int __ref kernel_init(void *unused)

{

int ret;

kernel_init_freeable();

/* need to finish all async __init code before freeing the memory */

async_synchronize_full();

ftrace_free_init_mem();

free_initmem();

mark_readonly();

/*

* Kernel mappings are now finalized - update the userspace page-table

* to finalize PTI.

*/

pti_finalize();

system_state = SYSTEM_RUNNING;

numa_default_policy();

rcu_end_inkernel_boot();

if (ramdisk_execute_command) {

ret = run_init_process(ramdisk_execute_command);

if (!ret)

return 0;

pr_err("Failed to execute %s (error %d)\n",

ramdisk_execute_command, ret);

}

/*

* We try each of these until one succeeds.

*

* The Bourne shell can be used instead of init if we are

* trying to recover a really broken machine.

*/

if (execute_command) {

ret = run_init_process(execute_command);

if (!ret)

return 0;

panic("Requested init %s failed (error %d).",

execute_command, ret);

}

if (!try_to_run_init_process("/sbin/init") ||

!try_to_run_init_process("/etc/init") ||

!try_to_run_init_process("/bin/init") ||

!try_to_run_init_process("/bin/sh"))

return 0;

panic("No working init found. Try passing init= option to kernel. "

"See Linux Documentation/admin-guide/init.rst for guidance.");

}static noinline void __init kernel_init_freeable(void)

{

/*

* Wait until kthreadd is all set-up.

*/

wait_for_completion(&kthreadd_done);

/* Now the scheduler is fully set up and can do blocking allocations */

gfp_allowed_mask = __GFP_BITS_MASK;

/*

* init can allocate pages on any node

*/

set_mems_allowed(node_states[N_MEMORY]);

cad_pid = task_pid(current);

smp_prepare_cpus(setup_max_cpus);

workqueue_init();

init_mm_internals();

do_pre_smp_initcalls();

lockup_detector_init();

smp_init();

sched_init_smp();

page_alloc_init_late();

do_basic_setup();

/* Open the /dev/console on the rootfs, this should never fail */

if (ksys_open((const char __user *) "/dev/console", O_RDWR, 0) < 0)

pr_err("Warning: unable to open an initial console.\n");

(void) ksys_dup(0);

(void) ksys_dup(0);

/*

* check if there is an early userspace init. If yes, let it do all

* the work

*/

if (!ramdisk_execute_command)

ramdisk_execute_command = "/init";

if (ksys_access((const char __user *)

ramdisk_execute_command, 0) != 0) {

ramdisk_execute_command = NULL;

prepare_namespace();

}

/*

* Ok, we have completed the initial bootup, and

* we're essentially up and running. Get rid of the

* initmem segments and start the user-mode stuff..

*

* rootfs is available now, try loading the public keys

* and default modules

*/

integrity_load_keys();

load_default_modules();

}其中prepare_namespace()是根文件系统的挂载,代码在linux-4.20/init/do_mounts.c中

/*

* Prepare the namespace - decide what/where to mount, load ramdisks, etc.

*/

void __init prepare_namespace(void)

{

int is_floppy;

if (root_delay) {

printk(KERN_INFO "Waiting %d sec before mounting root device...\n",

root_delay);

ssleep(root_delay);

}

/*

* wait for the known devices to complete their probing

*

* Note: this is a potential source of long boot delays.

* For example, it is not atypical to wait 5 seconds here

* for the touchpad of a laptop to initialize.

*/

wait_for_device_probe();

md_run_setup();

if (saved_root_name[0]) {

root_device_name = saved_root_name;

if (!strncmp(root_device_name, "mtd", 3) ||

!strncmp(root_device_name, "ubi", 3)) {

mount_block_root(root_device_name, root_mountflags);

goto out;

}

ROOT_DEV = name_to_dev_t(root_device_name);

if (strncmp(root_device_name, "/dev/", 5) == 0)

root_device_name += 5;

}

if (initrd_load())

goto out;

/* wait for any asynchronous scanning to complete */

if ((ROOT_DEV == 0) && root_wait) {

printk(KERN_INFO "Waiting for root device %s...\n",

saved_root_name);

while (driver_probe_done() != 0 ||

(ROOT_DEV = name_to_dev_t(saved_root_name)) == 0)

msleep(5);

async_synchronize_full();

}

is_floppy = MAJOR(ROOT_DEV) == FLOPPY_MAJOR;

if (is_floppy && rd_doload && rd_load_disk(0))

ROOT_DEV = Root_RAM0;

mount_root();

out:

devtmpfs_mount("dev");

ksys_mount(".", "/", NULL, MS_MOVE, NULL);

ksys_chroot(".");

}加载完成,最后交出权限,运行用户代码(linux首先启动/sbin/init)。总结下精简的流程如下