这是在处理特征降维的过程中遇到的问题,要降纬特征向量,首先要读出特征向量。我们的特征存储在字典中,key是类似于这样:CCTV1HD_4m_20180129-112144_1800_3600_2375_2525_37_0_126,但是value有下面两种形式。可以看出来,有一种value还是一个字典,有key 'fea',而另一种value是一个list。我在代码中加入了判断数据类型的代码段。成功解决了这个问题。

{'fea': array([ 0.31566852, 0.09721687, 0.16430685, ..., 0.14451009,

0.14061043, 0.66630179], dtype=float32), 'label': 2}

0.44859198]

附上代码:

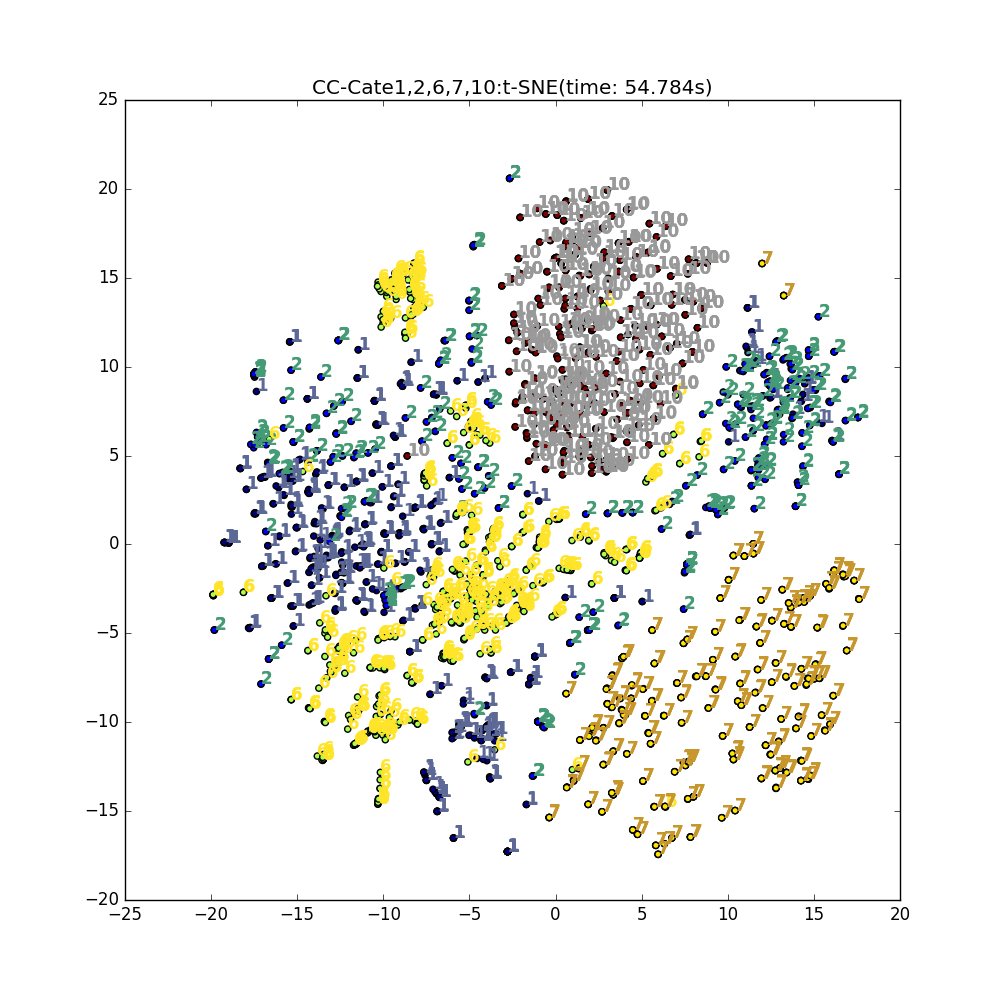

# -*- coding:utf-8 -*- import pickle import numpy as np from sklearn.manifold import TSNE import matplotlib.pyplot as plt plt.switch_backend('agg') import collections import os import types import time os.environ['CUDA_VISIBLE_DEVICES'] = '0' BTVX = [] a = [] label = [] path = 'D:\PycharmProject\\video_feature\Jan_videos_feature\Jan_videos\cate1' path1 = 'D:\PycharmProject\\video_feature\Jan_videos_feature\Jan_videos\cate2' path2 = 'D:\PycharmProject\\video_feature\Jan_videos_feature\Jan_videos\cate6' path3 = 'D:\PycharmProject\\video_feature\Jan_videos_feature\Jan_videos\cate7' path4 = 'D:\PycharmProject\\video_feature\Jan_videos_feature\Jan_videos\cate10' def gen_dataset_from_pkl_feature(path,cate_value): count = 0 x_dataset = [] y_dataset = [] x_train = collections.OrderedDict() for fpathe, dirs, filenames in os.walk(path): for filename in filenames: if filename.startswith('CCTV1'): if filename.endswith('.pkl'): count += 1 pkl_file = os.path.join(fpathe, filename) pkl_fp = open(pkl_file, 'rb') x_train.update(pickle.load(pkl_fp)) # print x_train x_values = x_train.values() for i in range(len(x_values)): if type(x_values[i]) == dict: x_dataset.append(x_values[i]['fea']) else: x_dataset.append(x_values[i]) x_dataset = np.array(x_dataset) print x_dataset.shape y_dataset = [cate_value for i in range(len(x_train))] y_dataset = np.array(y_dataset) print y_dataset.shape # y_dataset.append(x_train[i]['label']) x_dataset = np.array(x_dataset[:500]) y_dataset = np.array(y_dataset[:500]) # print(len(x_train)) # print x_dataset.shape return x_dataset, y_dataset,count x_dataset_cate1,y_dataset_cate1,count1 = gen_dataset_from_pkl_feature(path,1) x_dataset_cate2,y_dataset_cate2,count2= gen_dataset_from_pkl_feature(path1,2) x_dataset_cate6,y_dataset_cate6,count6 = gen_dataset_from_pkl_feature(path2,6) x_dataset_cate7,y_dataset_cate7,count7 = gen_dataset_from_pkl_feature(path3,7) x_dataset_cate10,y_dataset_cate10,count10 = gen_dataset_from_pkl_feature(path4,10) # print x_dataset_cate1.shape # x_dataset = x_dataset_cate2 # y_dataset = y_dataset_cate2 x_dataset = np.concatenate((x_dataset_cate1,x_dataset_cate2,x_dataset_cate6,x_dataset_cate7,x_dataset_cate10),axis=0) y_dataset = np.concatenate((y_dataset_cate1,y_dataset_cate2,y_dataset_cate6,y_dataset_cate7,y_dataset_cate10),axis=0) xx = [] for i in range(0,len(x_dataset)): #正则化 xx.append((x_dataset[i] - np.min(x_dataset)) / (np.max(x_dataset) - np.min(x_dataset))) x_dataset = xx tsne = TSNE(n_components=2) start_time = time.time() tSNE = tsne.fit_transform(x_dataset) fig = plt.figure(figsize=(10,10)) ax = plt.subplot(111) plt.title('CC-Cate1,2,6,7,10:t-SNE(time: %.3fs)'%(time.time() - start_time)) # #画散点图 # cValue = ['r','y','g','b','r','y','g','b','r'] # ax1.scatter(x,y,c=cValue,marker='s') plt.scatter(tSNE[:, 0], tSNE[:, 1],c=y_dataset) for i in range(tSNE.shape[0]): plt.text(tSNE[i, 0], tSNE[i, 1], str(y_dataset[i])) plt.savefig('t-SNE.png')