HDFS file:

Java program:

import java.io.BufferedOutputStream;
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class FileFormHdfsToLocal {

    public static void main(String[] args) {
        String hdfs = args[0];  // HDFS source URI
        String local = args[1]; // local destination path

        try {
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(URI.create(hdfs), conf);

            // try-with-resources closes both streams even if an exception is thrown
            try (InputStream in = fs.open(new Path(hdfs));
                 OutputStream out = new BufferedOutputStream(new FileOutputStream(local))) {
                byte[] bytes = new byte[20];
                in.skip(100); // skip the first 100 bytes
                // read up to 20 bytes, i.e. bytes 101 through 120
                int readNumberOfBytes = in.read(bytes, 0, 20);
                if (readNumberOfBytes > 0) {
                    // write only the bytes that were actually read
                    out.write(bytes, 0, readNumberOfBytes);
                }
                out.flush();
            }
        } catch (FileNotFoundException e1) {
            e1.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

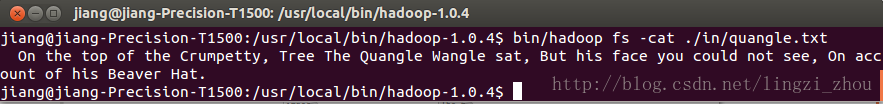

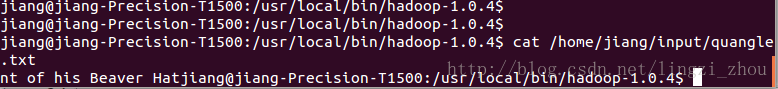

}运行结果:
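
The program above calls `skip` and `read` once each. Per the `java.io.InputStream` contract, both may process fewer bytes than requested, so a more defensive version loops until the requested range is covered or the stream ends. The following is a minimal sketch of that idea using only `java.io`. The class name `RangeReadSketch` and the helper `readRange` are hypothetical names introduced for illustration, and a `ByteArrayInputStream` stands in for the HDFS stream so the example runs without a cluster.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.Arrays;

// Hypothetical helper class, not part of the original program.
public class RangeReadSketch {

    /** Reads up to {@code length} bytes starting {@code offset} bytes into the stream. */
    public static byte[] readRange(InputStream in, long offset, int length) throws IOException {
        // skip() may skip fewer bytes than requested, so loop until the offset is reached.
        long remaining = offset;
        while (remaining > 0) {
            long skipped = in.skip(remaining);
            if (skipped <= 0) {
                // No progress: consume one byte with read() to detect end of stream.
                if (in.read() == -1) {
                    return new byte[0]; // stream ended before the offset
                }
                skipped = 1;
            }
            remaining -= skipped;
        }

        // read() may also return fewer bytes than requested, so loop here too.
        byte[] buffer = new byte[length];
        int total = 0;
        while (total < length) {
            int n = in.read(buffer, total, length - total);
            if (n == -1) {
                break; // end of stream
            }
            total += n;
        }
        // Return only the bytes that were actually read.
        return Arrays.copyOf(buffer, total);
    }

    public static void main(String[] args) throws IOException {
        // In-memory stand-in for the HDFS file: 200 bytes with values 0..199 (as Java bytes).
        byte[] data = new byte[200];
        for (int i = 0; i < data.length; i++) {
            data[i] = (byte) i;
        }
        byte[] slice = readRange(new ByteArrayInputStream(data), 100, 20);
        // prints: 20 bytes, first=100, last=119
        System.out.println(slice.length + " bytes, first=" + slice[0] + ", last=" + slice[slice.length - 1]);
    }
}
```

Under these assumptions, the same helper could be passed the stream returned by `fs.open(new Path(hdfs))`, since that stream is also an `InputStream`.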