Class org.datanucleus.api.jdo.JDOPersistenceManagerFactory was not found

Problem description:

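While migrating an application to a different underlying Hadoop cluster (from CDH to HDP), Spark jobs submitted in Spark on YARN mode failed when connecting to Hive and threw the exception shown in the title.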

Software versions:

| Software | Version |
|---|---|
| HDP | 2.6.4.0-91 |
| Spark | 1.6.3 |
| Hadoop | 2.7.3 |

1. First step of the investigation

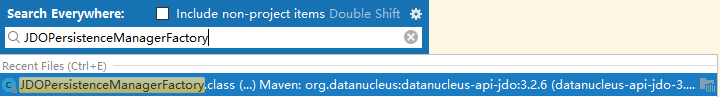

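- First, look up which jar provides this class: it lives in datanucleus-api-jdo.jar, as shown in the screenshot above.

- In other words, the class is not on the Classpath when the job runs on Spark on YARN, so the plan was to add this jar to the Classpath.

- The jar ships in the Hive installation directory, so the entire Hive lib directory was added to the Classpath.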
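The lookup in the first bullet can be scripted. Below is a minimal Python sketch, assuming all candidate jars sit directly in one directory (subdirectories are not searched). A jar is just a zip archive, so the script checks each one for the class's entry path. The function names `class_entry` and `find_class_in_jars` are hypothetical helpers, not part of any Hadoop, Hive or Spark tooling.

```python
import sys
import zipfile
from pathlib import Path
from typing import List


def class_entry(class_name: str) -> str:
    """Turn a fully qualified class name into its entry path inside a jar."""
    return class_name.replace(".", "/") + ".class"


def find_class_in_jars(lib_dir: str, class_name: str) -> List[str]:
    """Return the jars directly under lib_dir that contain class_name."""
    entry = class_entry(class_name)
    hits = []
    for jar in sorted(Path(lib_dir).glob("*.jar")):
        try:
            with zipfile.ZipFile(jar) as zf:
                if entry in zf.namelist():
                    hits.append(str(jar))
        except zipfile.BadZipFile:
            continue  # skip files that end in .jar but are not valid archives
    return hits


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python find_class.py <lib_dir> <fully.qualified.ClassName>
    for path in find_class_in_jars(sys.argv[1], sys.argv[2]):
        print(path)
```

For example, `python find_class.py /usr/hdp/current/hive-client/lib org.datanucleus.api.jdo.JDOPersistenceManagerFactory` prints every jar in that directory containing the class (the directory path is an assumption about the HDP layout). Running the same script with `org.apache.derby.jdbc.EmbeddedDriver` against a candidate lib directory also shows whether that directory brings Derby along.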

2. A new problem and its solution

- After adding Hive's lib directory to the Classpath, the following error appeared:

```
java.lang.SecurityException: sealing violation: package org.apache.derby.impl.services.timer is sealed
	at java.net.URLClassLoader.getAndVerifyPackage(URLClassLoader.java:399)
	at java.net.URLClassLoader.definePackageInternal(URLClassLoader.java:419)
	at java.net.URLClassLoader.defineClass(URLClassLoader.java:451)
	at java.net.URLClassLoader.access$100(URLClassLoader.java:73)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:368)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:362)
	at java.security.AccessController.doPrivileged(Native Method)
	at java.net.URLClassLoader.findClass(URLClassLoader.java:361)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
	at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)
```

It was accompanied by:

```
Caused by: java.lang.NoClassDefFoundError: Could not initialize class org.apache.derby.jdbc.EmbeddedDriver
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
```

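- Looking only at the second error (NoClassDefFoundError), one might conclude that the corresponding jar had not been added. A search shows that this class lives in derby.jar, yet Hive's lib directory does contain that jar. In fact, "Could not initialize class" means the JVM found the class but its static initialization had already failed once, which points back to the first error.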

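- Further searching (see https://stackoverflow.com/questions/6454061/securityexception-sealing-violation-when-starting-derby-connection ) showed that the first error is caused by having more than one derby.jar on the Classpath. In other words, adding every jar in Hive's lib directory pulled in too many jars, including a second copy of Derby.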

- In HDP, the lib directory of spark-client contains datanucleus-api-jdo.jar but no derby.jar, so one option is to put spark-client's lib directory on the Classpath instead of Hive's.

- Testing confirmed that this approach works.
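The root cause here is one Java package appearing in more than one jar. For a sealed package, the JVM requires every class of that package to come from the same jar, and loading a class of that package from a second jar triggers a `sealing violation`. Below is a minimal Python sketch for spotting such duplicates before submitting a job, assuming the jars to check are passed as individual file paths. It groups class files by package and reports each package found in two or more jars, along with whether each jar's manifest main section declares `Sealed: true`. Per-package `Name:` sections in the manifest are deliberately ignored, so this is a simplification of the JAR sealing rules. The helper names are hypothetical.

```python
import sys
import zipfile
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def is_sealed(zf: zipfile.ZipFile) -> bool:
    """True if the manifest's main section contains 'Sealed: true'."""
    try:
        text = zf.read("META-INF/MANIFEST.MF").decode("utf-8", "replace")
    except KeyError:
        return False  # no manifest, so nothing is sealed at jar level
    for line in text.splitlines():
        if not line.strip():
            break  # a blank line ends the main section; per-entry sections are ignored
        key, _, value = line.partition(":")
        if key.strip().lower() == "sealed" and value.strip().lower() == "true":
            return True
    return False


def packages_in_jar(zf: zipfile.ZipFile) -> Set[str]:
    """Collect the Java package names of all .class entries outside META-INF."""
    pkgs = set()
    for name in zf.namelist():
        if name.endswith(".class") and "/" in name and not name.startswith("META-INF/"):
            pkgs.add(name.rsplit("/", 1)[0].replace("/", "."))
    return pkgs


def duplicate_packages(jars: List[str]) -> Dict[str, List[Tuple[str, bool]]]:
    """Map each package found in 2+ jars to [(jar_path, jar_is_sealed), ...]."""
    owners: Dict[str, List[Tuple[str, bool]]] = defaultdict(list)
    for jar in jars:
        with zipfile.ZipFile(jar) as zf:
            sealed = is_sealed(zf)
            for pkg in packages_in_jar(zf):
                owners[pkg].append((jar, sealed))
    return {pkg: found for pkg, found in owners.items() if len(found) > 1}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python dup_packages.py a.jar b.jar ...
    for pkg, found in sorted(duplicate_packages(sys.argv[1:]).items()):
        print(pkg)
        for jar, sealed in found:
            print(f"    {jar}{' (sealed)' if sealed else ''}")
```

For example, `python dup_packages.py /path/to/lib/*.jar` (the path is a placeholder). Any `org.apache.derby...` package listed under two or more jars matches the situation behind the sealing violation above, and running the check on spark-client's lib directory before switching to it is a cheap way to confirm it avoids the duplicate.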