I. Preface

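In OpenShift's OVS-based SDN CNI network solution there is one SDN master node, and every OpenShift worker node is also an SDN worker node.

When an SDN worker node starts, it first registers with the master (by creating a child node in etcd). Once the master observes the registration, it allocates a subnet for that node and writes it under the node's etcd entry. The node then uses this information to initialize its local configuration:

- Create the OVS bridge device br0 and install some basic traffic rules on it. Every POD that OpenShift starts afterwards can be attached to this bridge.

- Create the OVS internal port device tun0 and configure it with the gateway address of the SDN subnet. With iptables NAT, PODs can then reach external networks through this device.

- Create the OVS VXLAN device vxlan_sys_4789 (vxlan0), which performs VXLAN overlay encapsulation. PODs use this device to reach PODs on other nodes.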
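To make the subnet assignment step more concrete, here is a minimal sketch of carving a cluster network into fixed-size per-node subnets. The 10.128.0.0/14 cluster network comes from the logs later in this article. The /23 host subnet size is inferred from the subnets seen in those logs, and `nthHostSubnet` is a hypothetical helper, not the allocator that openshift-sdn actually uses.

```go
package main

import (
	"encoding/binary"
	"fmt"
	"net"
)

// nthHostSubnet returns the index-th subnet of length hostPrefix inside
// clusterCIDR, e.g. one /23 per node out of a /14. IPv4 only.
// Hypothetical helper for illustration, not the openshift-sdn allocator.
func nthHostSubnet(clusterCIDR string, hostPrefix int, index uint32) (string, error) {
	_, cluster, err := net.ParseCIDR(clusterCIDR)
	if err != nil {
		return "", err
	}
	ones, bits := cluster.Mask.Size()
	if bits != 32 || hostPrefix < ones || hostPrefix > 32 {
		return "", fmt.Errorf("cannot carve /%d subnets out of %s", hostPrefix, clusterCIDR)
	}
	// Number of host subnets that fit into the cluster network.
	count := uint64(1) << uint(hostPrefix-ones)
	if uint64(index) >= count {
		return "", fmt.Errorf("index %d out of range, only %d subnets available", index, count)
	}
	base := binary.BigEndian.Uint32(cluster.IP.To4())
	offset := index << uint(32-hostPrefix)
	ip := make(net.IP, net.IPv4len)
	binary.BigEndian.PutUint32(ip, base+offset)
	subnet := net.IPNet{IP: ip, Mask: net.CIDRMask(hostPrefix, 32)}
	return subnet.String(), nil
}

func main() {
	for _, i := range []uint32{0, 129, 385} {
		s, err := nthHostSubnet("10.128.0.0/14", 23, i)
		fmt.Println(i, s, err)
	}
}
```

With these inputs, index 385 corresponds to 10.131.2.0/23, the local subnet of ic-node8 in the logs, and index 129 to 10.129.2.0/23, the subnet of ic-node4.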

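While running, an SDN worker node is mainly responsible for:

- Configuring POD networking

- Watching for SDN node add and delete events, and updating the br0 rules so that packets destined for a new subnet are forwarded to vxlan0

- Watching for OpenShift project add and delete events and, in ovs-multitenant mode, assigning a VXLAN VNID to each project to isolate traffic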

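When OpenShift starts a POD, the SDN worker node has to configure the POD's network environment:

- Allocate an IP address from the node subnet for the POD

- Attach the host side of the POD's veth pair to br0

- Update the OVS rules so that packets for different destinations are routed to the appropriate OVS port

- In ovs-multitenant mode, add OVS rules that tag packets with the VNID to enforce the traffic isolation policy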
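The first step in the list above, picking a POD address from the node subnet, can be sketched as follows. This is only a simplified in-memory illustration: as shown later in this article, openshift-sdn delegates address allocation to the CNI host-local IPAM plugin. The `allocator` type and its methods are hypothetical names, and the convention of reserving the first address for the tun0 gateway is taken from the logs below.

```go
package main

import (
	"errors"
	"fmt"
	"net/netip"
)

// allocator hands out POD addresses from one node subnet.
// Illustrative only; the real work is done by the host-local IPAM plugin.
type allocator struct {
	prefix  netip.Prefix
	gateway netip.Addr            // first address, used by tun0
	used    map[netip.Addr]string // address -> POD name
}

func newAllocator(cidr string) (*allocator, error) {
	p, err := netip.ParsePrefix(cidr)
	if err != nil {
		return nil, err
	}
	p = p.Masked()
	return &allocator{prefix: p, gateway: p.Addr().Next(), used: map[netip.Addr]string{}}, nil
}

// allocate returns the lowest free address after the gateway,
// skipping the last (broadcast) address of the subnet.
func (a *allocator) allocate(pod string) (string, error) {
	for ip := a.gateway.Next(); a.prefix.Contains(ip); ip = ip.Next() {
		if !a.prefix.Contains(ip.Next()) {
			break // ip is the broadcast address
		}
		if _, taken := a.used[ip]; !taken {
			a.used[ip] = pod
			return ip.String(), nil
		}
	}
	return "", errors.New("subnet exhausted")
}

// release frees an address so that it can be handed out again.
func (a *allocator) release(ip string) {
	if addr, err := netip.ParseAddr(ip); err == nil {
		delete(a.used, addr)
	}
}

func main() {
	a, err := newAllocator("10.131.2.0/23")
	if err != nil {
		panic(err)
	}
	first, _ := a.allocate("pod-a")
	second, _ := a.allocate("pod-b")
	fmt.Println(first, second)
	a.release(first)
	reused, _ := a.allocate("pod-c")
	fmt.Println(reused)
}
```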

Source: https://blog.csdn.net/cloudvtech

II. OpenShift node and network startup code analysis (OpenShift Origin v1.5)

1. /etc/systemd/system/multi-user.target.wants/origin-node.service

2. /usr/bin/openshift start node --config=/etc/origin/node/node-config.yaml --loglevel=5

3. (openshift.CommandFor(basename))

4. (cmd = NewCommandOpenShift("openshift"))

5. openshift.go@ startAllInOne, _ := start.NewCommandStartAllInOne(name, out, errout)

root.AddCommand(startAllInOne)

6. start_allinone.go@ startNode, _ := NewCommandStartNode(basename, out, errout)

7. start_allinone.go@ startNodeNetwork, _ := NewCommandStartNetwork(basename, out, errout)

8. start_node.go@ resolveNodeConfig

9. start_node.go@ StartNode

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.677281 1724 start_node.go:250] Reading node configuration from /etc/origin/node/node-config.yaml

10. node_config.go@ BuildKubernetesNodeConfig

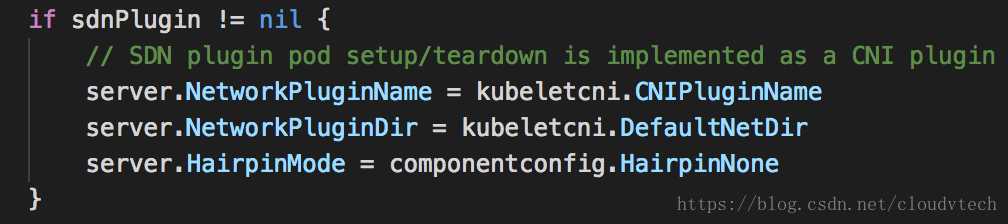

let the kubelet know which CNI plugin is in use

…

KubeletServer: server,

...

origin/pkg/cmd/server/kubernetes/node.go


start kubelet with config

11. sdnPlugin, err := sdnplugin.NewNodePlugin(options.NetworkConfig.NetworkPluginName, originClient, kubeClient, options.NodeName, options.NodeIP, iptablesSyncPeriod, options.NetworkConfig.MTU)

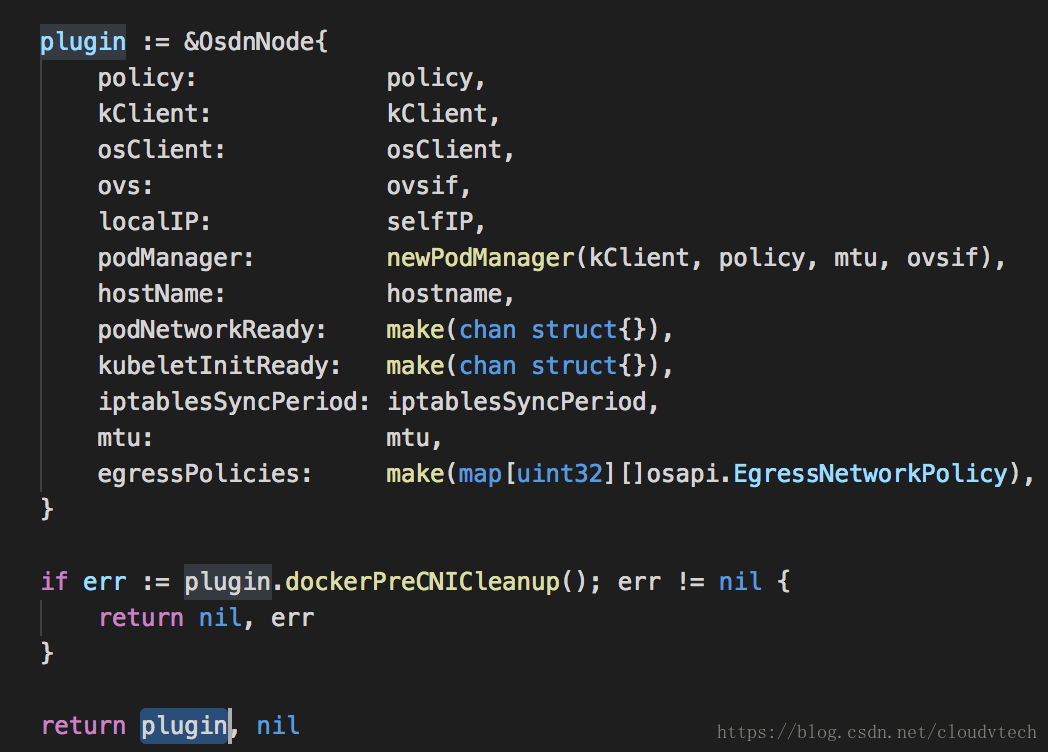

12. origin/pkg/sdn/plugin/node.go@ NewNodePlugin (this function returns an instance of NodeConfig)

13. Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.820421 1724 node.go:88] Initializing SDN node of type "redhat/openshift-ovs-subnet" with configured hostname "ic-node8" (IP ""), iptables sync period "30s"

14. create OVS instance (ovsif, err := ovs.New(kexec.New(), BR, minOvsVersion))

15. call the kubelet initialize function

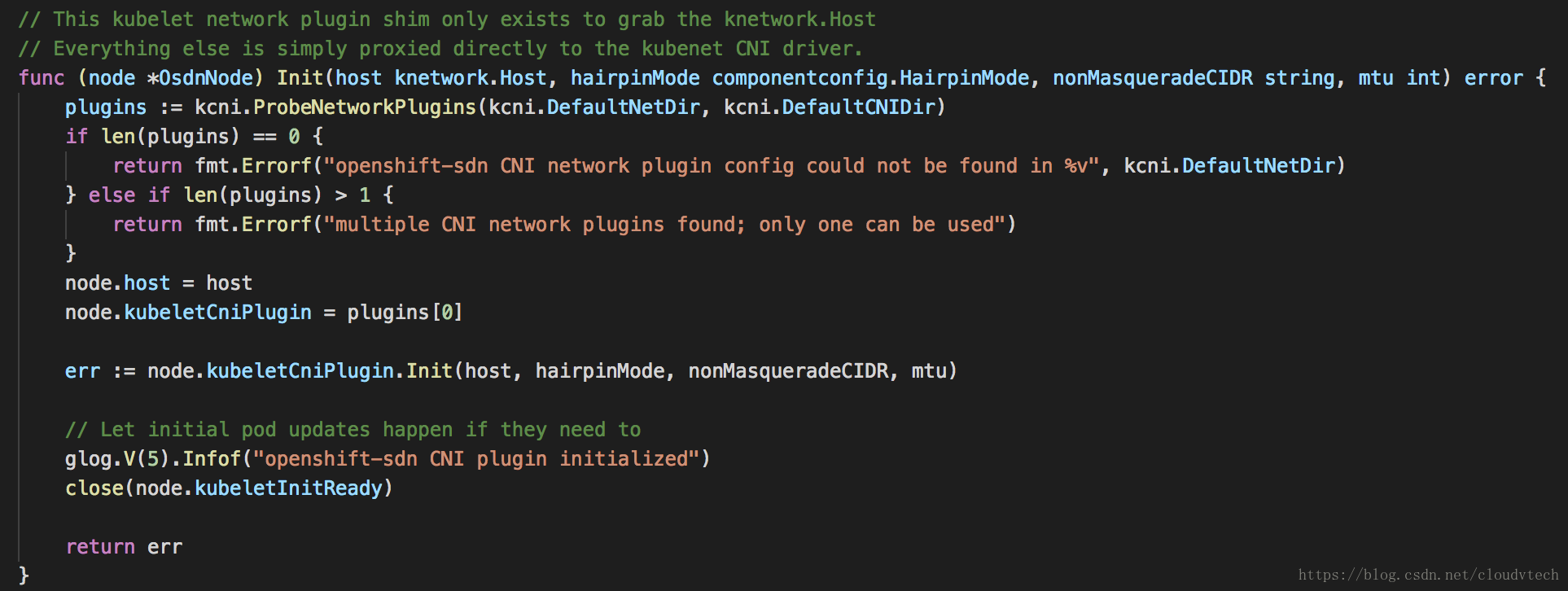

origin/pkg/sdn/plugin/plugin.go @ func (node *OsdnNode) Init(host knetwork.Host, hairpinMode componentconfig.HairpinMode, nonMasqueradeCIDR string, mtu int) error {


origin/vendor/k8s.io/kubernetes/pkg/kubelet/network/plugins.go @ func (plugin *NoopNetworkPlugin) Init(host Host, hairpinMode componentconfig.HairpinMode, nonMasqueradeCIDR string, mtu int) error {

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.933879 1724 plugin.go:30] openshift-sdn CNI plugin initialized

origin/vendor/k8s.io/kubernetes/pkg/kubelet/network/plugins.go @ func InitNetworkPlugin(plugins []NetworkPlugin, networkPluginName string, host Host, hairpinMode componentconfig.HairpinMode, nonMasqueradeCIDR string, mtu int) (NetworkPlugin, error) {

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.934221 1724 plugins.go:181] Loaded network plugin "cni"


III. OpenShift SDN worker node runtime code analysis

1. start_node.go@ StartNode

if components.Enabled(ComponentPlugins) {

config.RunPlugin()

}

2 origin/pkg/cmd/server/kubernetes/node.go @

and calls origin/pkg/sdn/plugin/singletenant.go

3 origin/pkg/sdn/plugin/node.go @ func (node *OsdnNode) Start() error {

3.1 get network info (ClusterNetwork CIDR and ServiceNetwork CIDR) from Openshift(etcd)

3.2 get local subnet from Openshift(etcd)

3.3 initialize and setup IPTables

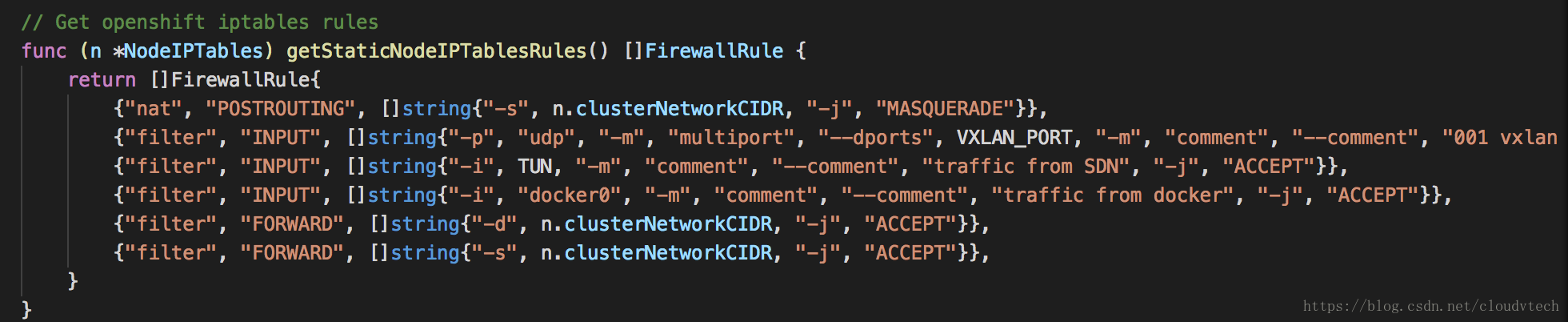

3.3.1 newNodeIPTables instance

3.3.2 Set up iptables (node_iptables.go @ Setup and syncIPTableRules; when iptables is reloaded, an SDN-defined reload function is called, in this case syncIPTableRules)

3.3.2.1 syncIPTableRules

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.877751 1724 node_iptables.go:79] Syncing openshift iptables rules

3.3.2.1.1 getStaticNodeIPTablesRules

3.3.2.1.2 EnsureRule


Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.877778 1724 iptables.go:362] running iptables -C [POSTROUTING -t nat -s 10.128.0.0/14 -j MASQUERADE]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.879372 1724 iptables.go:362] running iptables -I [POSTROUTING -t nat -s 10.128.0.0/14 -j MASQUERADE]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.880786 1724 iptables.go:362] running iptables -C [INPUT -t filter -p udp -m multiport --dports 4789 -m comment --comment 001 vxlan incoming -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.933728 1724 iptables.go:362] running iptables -I [INPUT -t filter -p udp -m multiport --dports 4789 -m comment --comment 001 vxlan incoming -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.939130 1724 iptables.go:362] running iptables -C [INPUT -t filter -i tun0 -m comment --comment traffic from SDN -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.940629 1724 iptables.go:362] running iptables -I [INPUT -t filter -i tun0 -m comment --comment traffic from SDN -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.942110 1724 iptables.go:362] running iptables -C [INPUT -t filter -i docker0 -m comment --comment traffic from docker -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.943486 1724 iptables.go:362] running iptables -I [INPUT -t filter -i docker0 -m comment --comment traffic from docker -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.944922 1724 iptables.go:362] running iptables -C [FORWARD -t filter -d 10.128.0.0/14 -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.946310 1724 iptables.go:362] running iptables -I [FORWARD -t filter -d 10.128.0.0/14 -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.948098 1724 iptables.go:362] running iptables -C [FORWARD -t filter -s 10.128.0.0/14 -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.955293 1724 iptables.go:362] running iptables -I [FORWARD -t filter -s 10.128.0.0/14 -j ACCEPT]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.958209 1724 iptables.go:362] running iptables -N [KUBE-MARK-DROP -t nat]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.960938 1724 iptables.go:362] running iptables -C [KUBE-MARK-DROP -t nat -j MARK --set-xmark 0x00008000/0x00008000]

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.968867 1724 node_iptables.go:77] syncIPTableRules took 91.11705ms
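The log above shows a recurring pattern: each rule is first checked with `iptables -C` and only inserted with `iptables -I` when the check fails. A minimal sketch of that check-then-insert idea follows. The `runner` interface and `fakeIPTables` type are hypothetical stand-ins so that the example runs without root privileges or a real iptables binary; this is not the Kubernetes iptables utility itself.

```go
package main

import (
	"fmt"
	"strings"
)

// runner abstracts command execution; run reports whether the command succeeded.
type runner interface {
	run(args ...string) bool
}

// fakeIPTables is a hypothetical in-memory stand-in for the iptables binary.
type fakeIPTables struct {
	rules map[string]bool // rules that currently exist
	log   []string        // every command that was issued
}

func (f *fakeIPTables) run(args ...string) bool {
	f.log = append(f.log, "iptables "+strings.Join(args, " "))
	rule := strings.Join(args[1:], " ")
	switch args[0] {
	case "-C": // check: succeeds only if the rule exists
		return f.rules[rule]
	case "-I": // insert
		f.rules[rule] = true
		return true
	}
	return false
}

// ensureRule checks for the rule with -C and inserts it with -I when missing.
// It returns true if the rule already existed.
func ensureRule(r runner, rule ...string) bool {
	if r.run(append([]string{"-C"}, rule...)...) {
		return true
	}
	r.run(append([]string{"-I"}, rule...)...)
	return false
}

func main() {
	ipt := &fakeIPTables{rules: map[string]bool{}}
	rule := []string{"POSTROUTING", "-t", "nat", "-s", "10.128.0.0/14", "-j", "MASQUERADE"}
	fmt.Println("existed:", ensureRule(ipt, rule...)) // first sync inserts the rule
	fmt.Println("existed:", ensureRule(ipt, rule...)) // second sync only checks
	for _, line := range ipt.log {
		fmt.Println(line)
	}
}
```

Because the check comes first, the sync function can be run repeatedly, for example from the reload hook in 3.3.2.2, without piling up duplicate rules.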

3.3.2.2 add IPTables reload hook function (syncIPTableRules)

4 setup SDN ( networkChanged, err := node.SetupSDN())

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.968901 1724 controller.go:196] [SDN setup] node pod subnet 10.131.2.0/23 gateway 10.131.2.1

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.970339 1724 controller.go:213] [SDN setup] full SDN setup required

4.1 create bridge: controller.go @ err = plugin.ovs.AddBridge("fail-mode=secure", "protocols=OpenFlow13") --> origin/pkg/util/ovs/ovs.go @ func (ovsif *Interface) AddBridge(properties ...string) error {

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.970571 1724 ovs.go:62] Executing: ovs-vsctl --if-exists del-br br0 -- add-br br0 -- set Bridge br0 fail-mode=secure protocols=OpenFlow13

Nov 28 06:45:46 ic-node8 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl --if-exists del-br br0 -- add-br br0 -- set Bridge br0 fail-mode=secure protocols=OpenFlow13

4.2 add port vxlan0

_, err = plugin.ovs.AddPort(VXLAN, 1, "type=vxlan", `options:remote_ip="flow"`, `options:key="flow"`)

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.006615 1724 ovs.go:62] Executing: ovs-vsctl --may-exist add-port br0 vxlan0 -- set Interface vxlan0 ofport_request=1 type=vxlan options:remote_ip="flow" options:key="flow"

Nov 28 06:45:47 ic-node8 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl --may-exist add-port br0 vxlan0 -- set Interface vxlan0 ofport_request=1 type=vxlan "options:remote_ip=\"flow\"" "options:key=\"flow\""

4.3 add port tun0

_, err = plugin.ovs.AddPort(TUN, 2, "type=internal")

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.045586 1724 ovs.go:62] Executing: ovs-vsctl --may-exist add-port br0 tun0 -- set Interface tun0 ofport_request=2 type=internal
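To show how the AddBridge and AddPort calls in 4.1 to 4.3 map onto the ovs-vsctl command lines in the log, here is a small sketch that only builds the argument lists. The function names `addBridgeArgs`, `addPortArgs` and `commandLine` are made up for this illustration; the real code in origin/pkg/util/ovs/ovs.go also executes the command and handles errors.

```go
package main

import (
	"fmt"
	"strings"
)

// addBridgeArgs builds the del-br / add-br / set sequence seen in the log:
// an existing bridge is removed first so that setup starts from a clean state.
func addBridgeArgs(bridge string, props ...string) []string {
	args := []string{"--if-exists", "del-br", bridge, "--", "add-br", bridge}
	if len(props) > 0 {
		args = append(args, "--", "set", "Bridge", bridge)
		args = append(args, props...)
	}
	return args
}

// addPortArgs builds the add-port command with a requested OpenFlow port
// number, so that flow rules can refer to fixed ports (1 = vxlan0, 2 = tun0).
func addPortArgs(bridge, port string, ofport int, props ...string) []string {
	args := []string{"--may-exist", "add-port", bridge, port,
		"--", "set", "Interface", port, fmt.Sprintf("ofport_request=%d", ofport)}
	return append(args, props...)
}

// commandLine renders the arguments the way the log prints them.
func commandLine(args []string) string {
	return "ovs-vsctl " + strings.Join(args, " ")
}

func main() {
	fmt.Println(commandLine(addBridgeArgs("br0", "fail-mode=secure", "protocols=OpenFlow13")))
	fmt.Println(commandLine(addPortArgs("br0", "vxlan0", 1, "type=vxlan",
		`options:remote_ip="flow"`, `options:key="flow"`)))
	fmt.Println(commandLine(addPortArgs("br0", "tun0", 2, "type=internal")))
}
```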

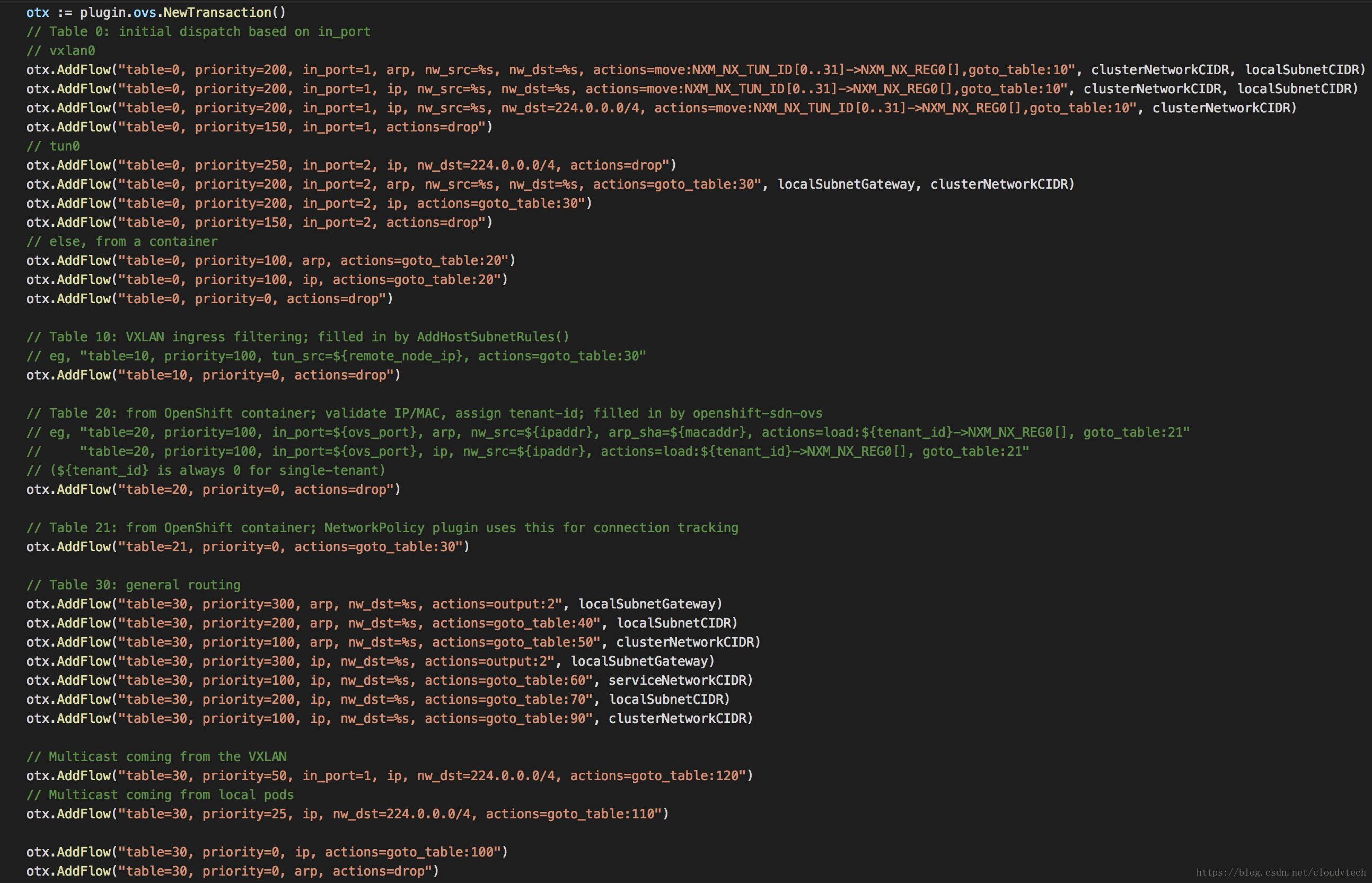

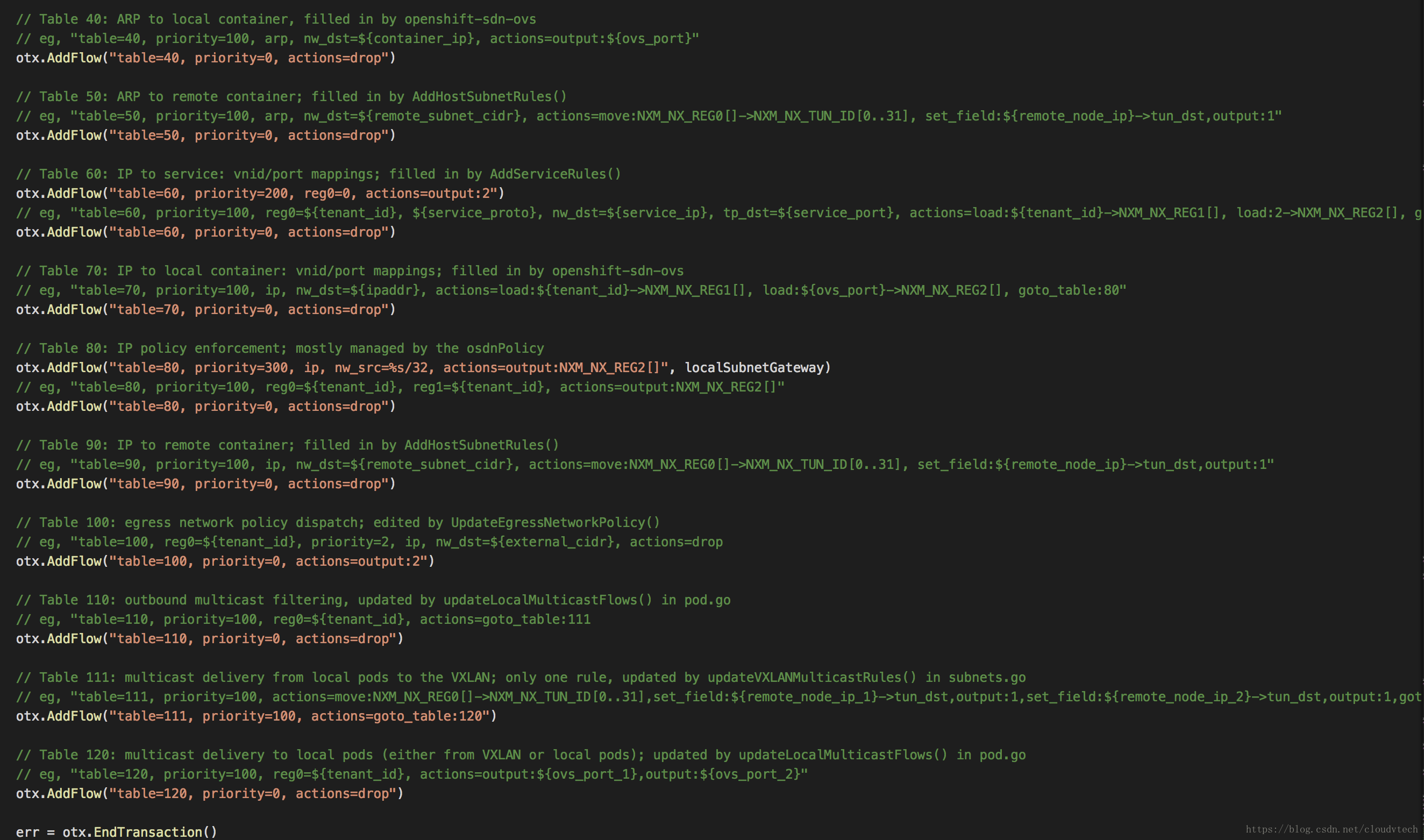

4.4 setup open flow table

4.5 set up network device


Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.239953 1724 ipcmd.go:44] Executing: /usr/sbin/ip addr add 10.131.2.1/23 dev tun0

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.241346 1724 ipcmd.go:44] Executing: /usr/sbin/ip link set tun0 mtu 1450

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.242810 1724 ipcmd.go:44] Executing: /usr/sbin/ip link set tun0 up

Nov 28 06:45:47 ic-node8 NetworkManager[587]: <info> [1511851547.2442] device (tun0): link connected

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.244605 1724 ipcmd.go:44] Executing: /usr/sbin/ip route add 10.128.0.0/14 dev tun0 proto kernel scope link

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.256330 1724 ipcmd.go:44] Executing: /usr/sbin/ip route add 172.30.0.0/16 dev tun0
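The ip commands in this step can be derived from three inputs: the local subnet, the cluster network and the service network. The sketch below reproduces them as strings. `tunSetupCommands` is a hypothetical helper, and it assumes, as the log suggests, that the gateway is the first address of the local subnet.

```go
package main

import (
	"fmt"
	"net/netip"
)

// tunSetupCommands lists the ip commands that configure tun0.
// Assumption: the gateway is the first address of the node's local subnet.
func tunSetupCommands(localSubnet, clusterCIDR, serviceCIDR string, mtu int) ([]string, error) {
	p, err := netip.ParsePrefix(localSubnet)
	if err != nil {
		return nil, err
	}
	gateway := p.Masked().Addr().Next()
	return []string{
		// tun0 gets the gateway address of the local POD subnet.
		fmt.Sprintf("ip addr add %s/%d dev tun0", gateway, p.Bits()),
		fmt.Sprintf("ip link set tun0 mtu %d", mtu),
		"ip link set tun0 up",
		// All POD traffic (cluster network) is routed through tun0 ...
		fmt.Sprintf("ip route add %s dev tun0 proto kernel scope link", clusterCIDR),
		// ... and so is traffic to service IPs.
		fmt.Sprintf("ip route add %s dev tun0", serviceCIDR),
	}, nil
}

func main() {
	cmds, err := tunSetupCommands("10.131.2.0/23", "10.128.0.0/14", "172.30.0.0/16", 1450)
	if err != nil {
		panic(err)
	}
	for _, c := range cmds {
		fmt.Println(c)
	}
}
```

The MTU of 1450 leaves 50 bytes of headroom on a 1500-byte link, which is the usual overhead of VXLAN encapsulation over IPv4.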

4.6 add version to flow table

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.257798 1724 ovs.go:62] Executing: ovs-ofctl -O OpenFlow13 add-flow br0 table=253, actions=note:00.03

4.7 delete route

defer deleteLocalSubnetRoute(TUN, localSubnetCIDR)

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.261667 1724 ipcmd.go:44] Executing: /usr/sbin/ip route show dev tun0

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.263312 1724 ipcmd.go:44] Executing: /usr/sbin/ip route del 10.131.2.0/23 dev tun0

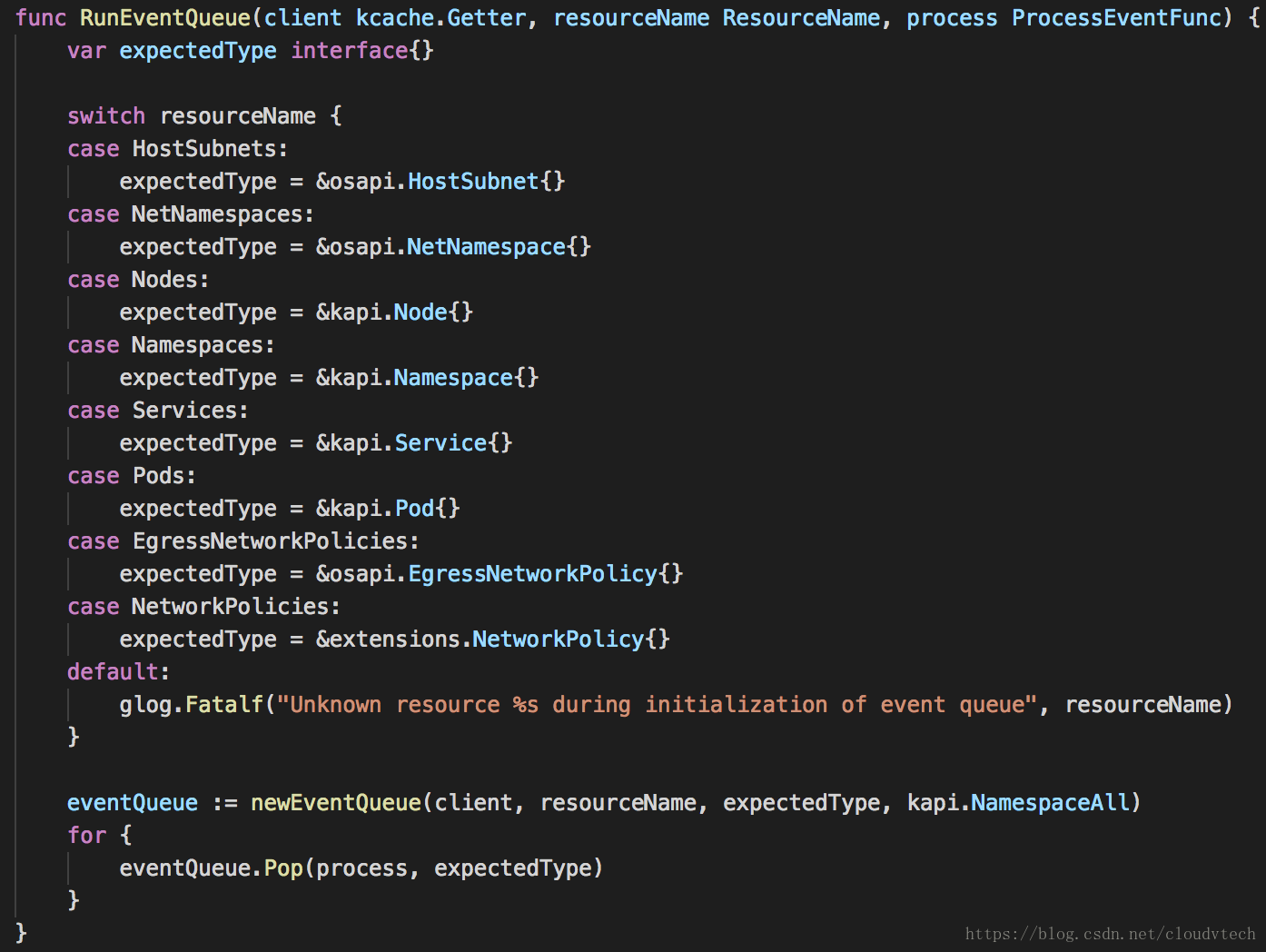

5 start subnet change handling task loop

5.1 start the goroutine (go utilwait.Forever(node.watchSubnets, 0))

5.2 create an event queue and repopulate the event queue every 30 minutes

origin/pkg/sdn/plugin/subnets.go @ func (node *OsdnNode) watchSubnets() {

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.265598 1724 reflector.go:185] Starting reflector *api.HostSubnet (30m0s) from github.com/openshift/origin/pkg/sdn/plugin/common.go:107

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.265645 1724 reflector.go:234] Listing and watching *api.HostSubnet from github.com/openshift/origin/pkg/sdn/plugin/common.go:107

the event queue comes from the OpenShift stats cache

5.3 when a k8s host node change event arrives, the process function is triggered

5.3.1 on add/sync event


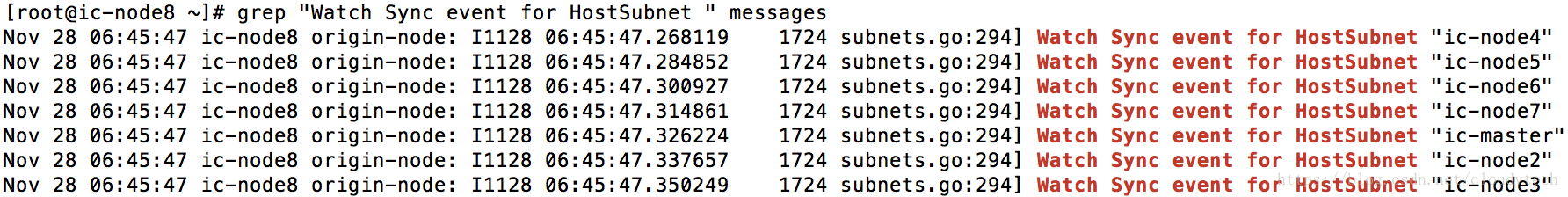

5.3.1.1 add host subnet rules in flow table

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.268119 1724 subnets.go:294] Watch Sync event for HostSubnet "ic-node4"

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.268155 1724 controller.go:427] AddHostSubnetRules for ic-node4 (host: "ic-node4", ip: "200.222.0.174", subnet: "10.129.2.0/23")

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.268194 1724 ovs.go:62] Executing: ovs-ofctl -O OpenFlow13 add-flow br0 table=10, priority=100, tun_src=200.222.0.174, actions=goto_table:30
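For each remote node, the flows installed on br0 are parameterized only by that node's IP and subnet. The sketch below rebuilds the two flows that appear in this article's logs (the table=10 rule here and the table=50 ARP rule logged shortly afterwards). `hostSubnetFlows` is a hypothetical name, and the real AddHostSubnetRules may install further flows that are not shown in these logs.

```go
package main

import "fmt"

// hostSubnetFlows returns the OpenFlow rules for one remote node, limited
// to the two rules visible in the logs of this article.
func hostSubnetFlows(nodeIP, subnetCIDR string) []string {
	return []string{
		// Ingress: accept VXLAN packets whose tunnel source is a known node.
		fmt.Sprintf("table=10, priority=100, tun_src=%s, actions=goto_table:30", nodeIP),
		// Egress: ARP for the remote subnet is tunnelled to that node via
		// port 1 (vxlan0), carrying the VNID from REG0 in the tunnel ID.
		fmt.Sprintf("table=50, priority=100, arp, nw_dst=%s, actions=move:NXM_NX_REG0[]->NXM_NX_TUN_ID[0..31],set_field:%s->tun_dst,output:1", subnetCIDR, nodeIP),
	}
}

func main() {
	for _, flow := range hostSubnetFlows("200.222.0.174", "10.129.2.0/23") {
		fmt.Println("ovs-ofctl -O OpenFlow13 add-flow br0", flow)
	}
}
```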

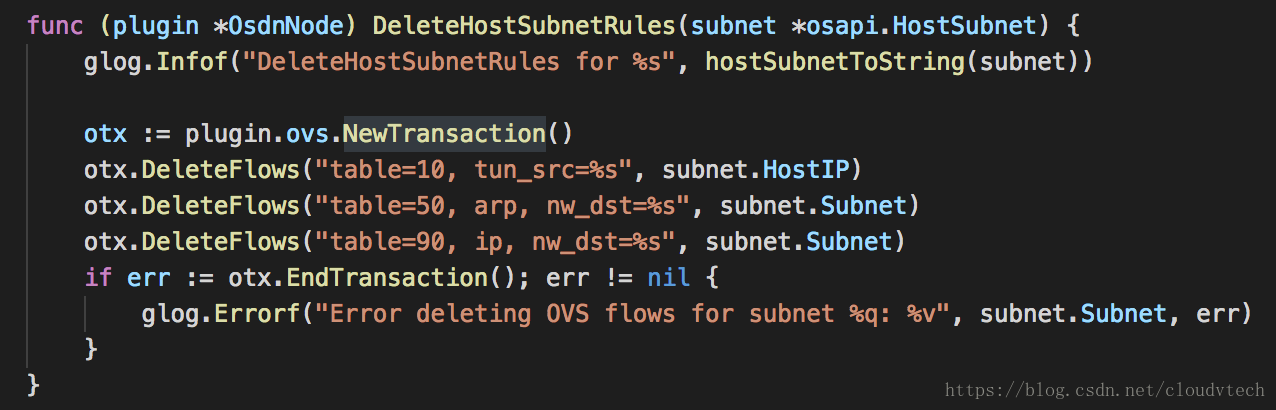

5.3.2 on delete event

5.3.3 update multicast rules @ updateVXLANMulticastRules

otx.AddFlow("table=111, priority=100, actions=move:NXM_NX_REG0[]->NXM_NX_TUN_ID[0..31]%s,goto_table:120", strings.Join(tun_dsts, ""))

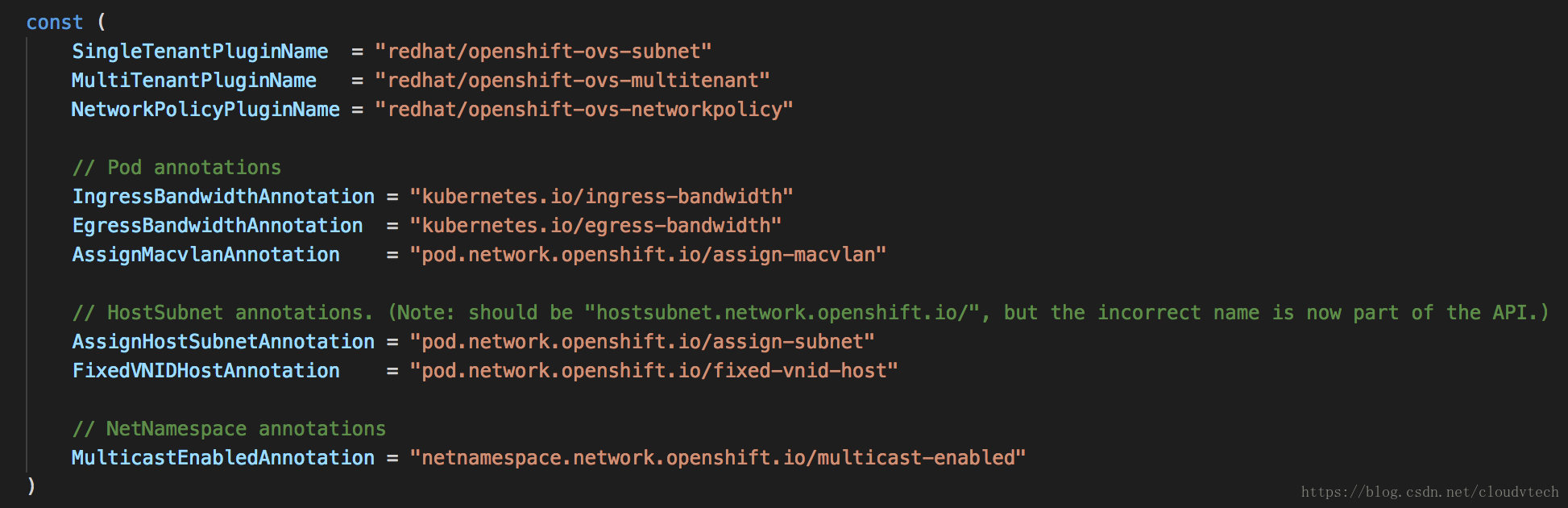
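The table=111 flow above is assembled from one action fragment per remote node. A rough sketch of that assembly is shown below. The fragment format `,set_field:<ip>->tun_dst,output:1` is an assumption modeled on the table=50 flow in the logs, `multicastFlow` is a hypothetical name, and the local node IP used in the example is made up.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// multicastFlow builds a single table=111 flow that replicates a packet to
// every remote node. It returns "" when there are no remote nodes.
// Assumption: each node contributes ",set_field:<ip>->tun_dst,output:1".
func multicastFlow(localIP string, nodeIPs []string) string {
	dsts := make([]string, 0, len(nodeIPs))
	for _, ip := range nodeIPs {
		if ip != localIP { // never tunnel to ourselves
			dsts = append(dsts, fmt.Sprintf(",set_field:%s->tun_dst,output:1", ip))
		}
	}
	if len(dsts) == 0 {
		return ""
	}
	sort.Strings(dsts) // stable order, so the flow only changes when membership changes
	return fmt.Sprintf("table=111, priority=100, actions=move:NXM_NX_REG0[]->NXM_NX_TUN_ID[0..31]%s,goto_table:120",
		strings.Join(dsts, ""))
}

func main() {
	// 200.222.0.178 is a made-up local IP for this example.
	fmt.Println(multicastFlow("200.222.0.178", []string{"200.222.0.178", "200.222.0.174"}))
}
```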

6 start single tenant SDN policy

case osapi.SingleTenantPluginName:


policy = NewSingleTenantPlugin() // @ origin/pkg/sdn/plugin/singletenant.go

if err = node.policy.Start(node); err != nil { // @ origin/pkg/sdn/plugin/node.go

add flow table

otx.AddFlow("table=80, priority=200, actions=output:NXM_NX_REG2[]")

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.264897 1724 ovs.go:62] Executing: ovs-ofctl -O OpenFlow13 add-flow br0 table=80, priority=200, actions=output:NXM_NX_REG2[]

7 start goroutine for K8S service changes

7.1 start the goroutine (go kwait.Forever(node.watchServices, 0))

7.2 create an event queue and repopulate the event queue every 30 minutes

7.3 when a k8s service change event arrives, the process function is triggered

7.3.1 on add/sync event

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.271236 1724 node.go:347] Watch Sync event for Service "prometheus"

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.271254 1724 controller.go:457] AddServiceRules for &TypeMeta{Kind:,APIVersion:,}

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.271319 1724 ovs.go:62] Executing: ovs-ofctl -O OpenFlow13 add-flow br0 table=60, tcp, nw_dst=172.30.85.130, tp_dst=9090, priority=100, actions=load:0->NXM_NX_REG1[], load:2->NXM_NX_REG2[], goto_table:80

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.272503 1724 ovs.go:62] Executing: ovs-ofctl -O OpenFlow13 add-flow br0 table=50, priority=100, arp, nw_dst=10.129.2.0/23, actions=move:NXM_NX_REG0[]->NXM_NX_TUN_ID[0..31],set_field:200.222.0.174->tun_dst,output:1

7.3.1.1 get vnid according to k8s namespace

7.3.1.2 add rules to flow table

return fmt.Sprintf("table=60, %s, nw_dst=%s, tp_dst=%d", strings.ToLower(string(protocol)), IP, port)

return fmt.Sprintf("%s, priority=100, actions=load:%d->NXM_NX_REG1[], load:2->NXM_NX_REG2[], goto_table:80", baseRule, netID)

7.3.2 on delete event

return fmt.Sprintf("table=60, %s, nw_dst=%s, tp_dst=%d", strings.ToLower(string(protocol)), IP, port)
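Putting the two Sprintf fragments from 7.3.1.2 and 7.3.2 together gives a small runnable sketch. The function names and the plain string/int parameter types are chosen for this example and are not necessarily those of the original source. The add rule extends the base match with the actions, while the delete rule uses the base match alone.

```go
package main

import (
	"fmt"
	"strings"
)

// baseServiceRule is the match part shared by the add and delete rules:
// service traffic is matched in table 60 by protocol, service IP and port.
func baseServiceRule(ip, protocol string, port int) string {
	return fmt.Sprintf("table=60, %s, nw_dst=%s, tp_dst=%d", strings.ToLower(protocol), ip, port)
}

// addServiceRule appends the actions: load the service's VNID into REG1,
// load 2 into REG2, then continue in table 80, where the single-tenant
// policy flow shown in step 6 outputs to the port held in REG2.
func addServiceRule(netID uint32, ip, protocol string, port int) string {
	return fmt.Sprintf("%s, priority=100, actions=load:%d->NXM_NX_REG1[], load:2->NXM_NX_REG2[], goto_table:80",
		baseServiceRule(ip, protocol, port), netID)
}

// deleteServiceRule is just the match; deleting by match removes the flow.
func deleteServiceRule(ip, protocol string, port int) string {
	return baseServiceRule(ip, protocol, port)
}

func main() {
	fmt.Println(addServiceRule(0, "172.30.85.130", "TCP", 9090))
	fmt.Println(deleteServiceRule("172.30.85.130", "TCP", 9090))
}
```

With VNID 0, as in the ovs-subnet mode used here, the first line matches the add-flow command logged for the prometheus service.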

8 start the pod manager

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.369006 1724 node.go:244] Starting openshift-sdn pod manager

8.1 get the IPAM configuration used by the CNI host-local IPAM plugin

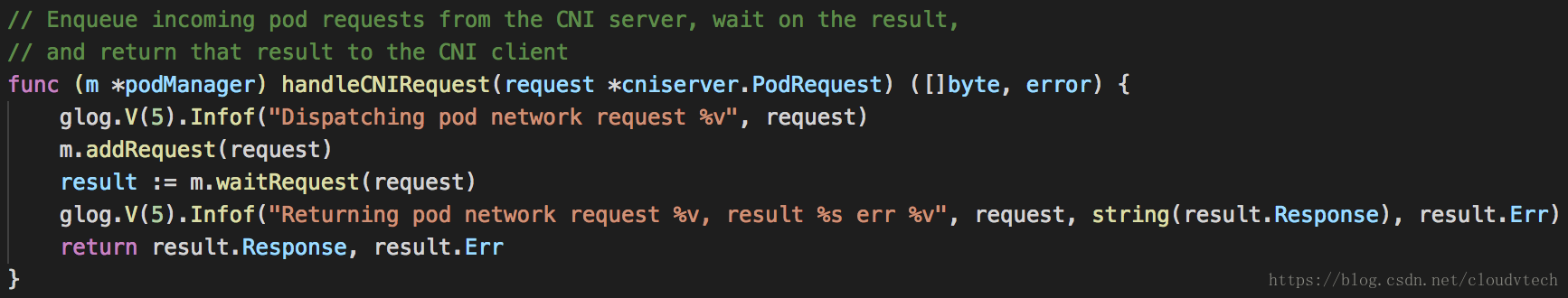

(func (m *podManager) Start(socketPath string, host knetwork.Host, localSubnetCIDR string, clusterNetwork *net.IPNet) error {)

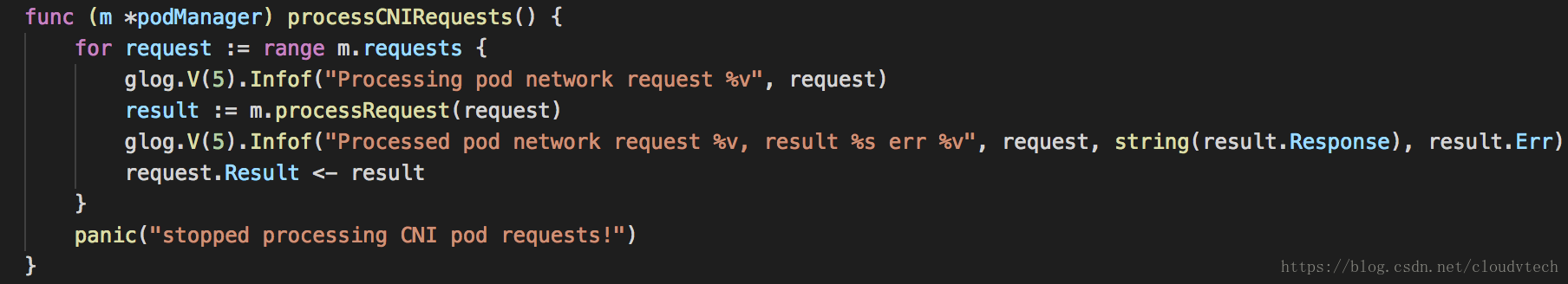

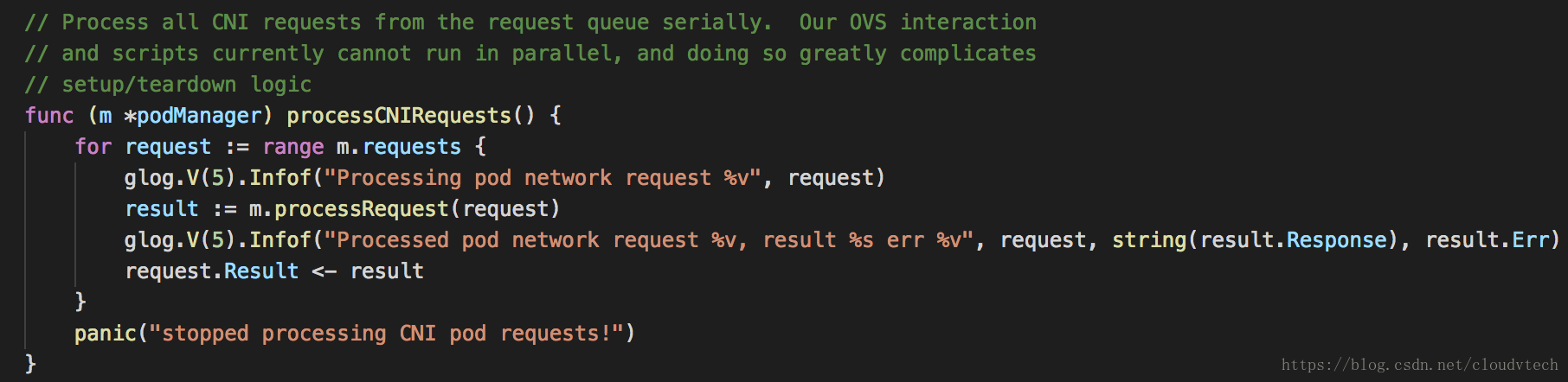
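For readers who cannot see the screenshots, the sketch below shows roughly what a host-local IPAM configuration for this node could look like. The `type`, `subnet` and `routes`/`dst` keys are the ones the host-local plugin documents. The network name, the outer `type` value and the exact set of routes are assumptions for this example and may differ from what openshift-sdn generates.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type route struct {
	Dst string `json:"dst"`
}

// ipamConfig holds the keys understood by the host-local IPAM plugin.
type ipamConfig struct {
	Type   string  `json:"type"`
	Subnet string  `json:"subnet"`
	Routes []route `json:"routes"`
}

// netConfig is the enclosing CNI network configuration.
type netConfig struct {
	Name string     `json:"name"`
	Type string     `json:"type"`
	IPAM ipamConfig `json:"ipam"`
}

// buildIPAMConfig serializes a config that allocates POD IPs from the
// node's local subnet. Name, outer type and routes are assumed values.
func buildIPAMConfig(localSubnet, clusterCIDR string) (string, error) {
	cfg := netConfig{
		Name: "openshift-sdn",
		Type: "openshift-sdn",
		IPAM: ipamConfig{
			Type:   "host-local",
			Subnet: localSubnet,
			Routes: []route{{Dst: "0.0.0.0/0"}, {Dst: clusterCIDR}},
		},
	}
	b, err := json.Marshal(cfg)
	return string(b), err
}

func main() {
	s, err := buildIPAMConfig("10.131.2.0/23", "10.128.0.0/14")
	if err != nil {
		panic(err)
	}
	fmt.Println(s)
}
```

The serialized bytes are what would be handed to the plugin binary as its configuration, which matches the role of `m.ipamConfig` in the ExecPluginWithResult call in 8.2.1.2.2.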

8.2 start the CNI request processing goroutine (go m.processCNIRequests())

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.377831 1724 pod.go:240] Processing pod network request &{UPDATE application application-2368900360-nltrr 314aa5ec024dfd329a46ad4d779dfc200a2ba85a288187499c0930988a82795d 0xc421d71740}

8.2.1 process the request (CNI ADD/UPDATE/DELETE)

8.2.1.1 setup/update/teardown are defined in pod_linux.go

8.2.1.2 process add request

ipamResult, runningPod, err := m.podHandler.setup(request)

8.2.1.2.1 get POD configuration (GetVNID, Get(req.PodName), wantsMacvlan(pod), getBandwidth(pod))

8.2.1.2.2 Run CNI IPAM allocation for the container and return the allocated IP address

result, err := invoke.ExecPluginWithResult("/opt/cni/bin/host-local", m.ipamConfig, args)

8.2.1.2.3 open hostport (m.hostportHandler.OpenPodHostportsAndSync)


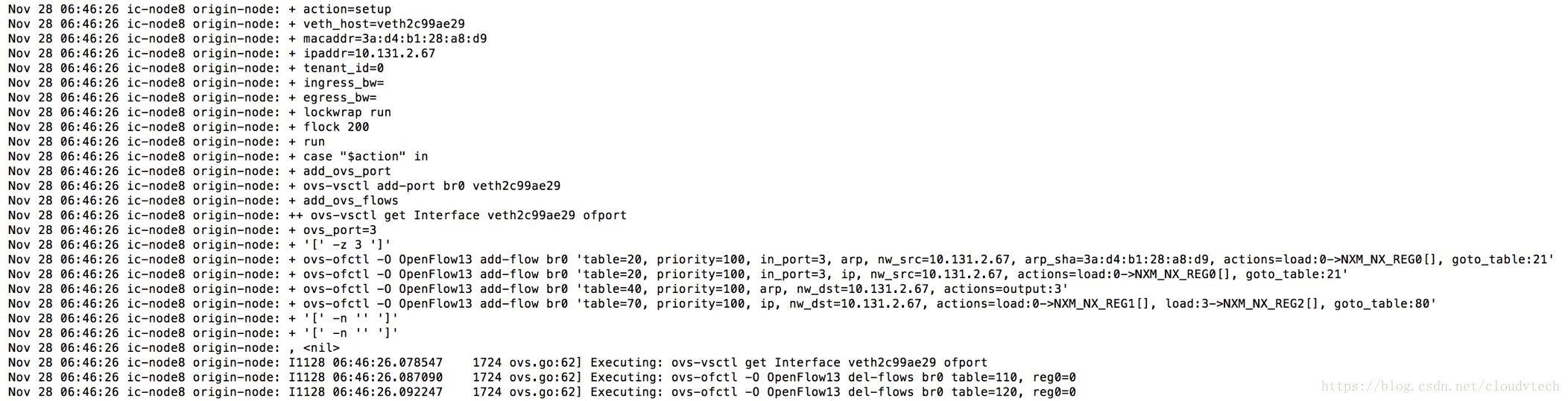

8.2.1.2.4 setup veth

8.2.1.2.5 configure interface

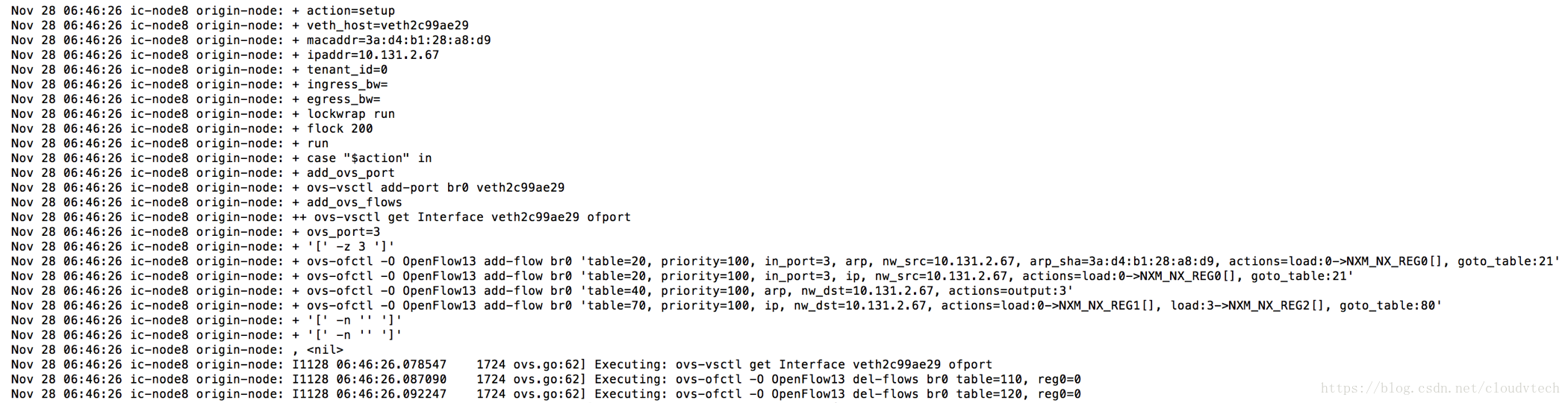

Nov 28 06:46:26 ic-node8 NetworkManager[587]: <info> [1511851586.0352] manager: (veth2c99ae29): new Veth device (/org/freedesktop/NetworkManager/Devices/11)

Nov 28 06:46:26 ic-node8 NetworkManager[587]: <info> [1511851586.0365] device (veth2c99ae29): link connected

Nov 28 06:46:26 ic-node8 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl add-port br0 veth2c99ae29

Nov 28 06:46:26 ic-node8 NetworkManager[587]: <info> [1511851586.0530] device (veth2c99ae29): enslaved to non-master-type device ovs-system; ignoring

Nov 28 06:46:26 ic-node8 kernel: device veth2c99ae29 entered promiscuous mode

8.2.1.2.6 enable macvlan if needed

8.2.1.2.7 run SDN script

out, err := exec.Command(sdnScript, setUpCmd, hostVeth.Attrs().Name, contVethMac, podIP.String(), vnidStr, podConfig.ingressBandwidth, podConfig.egressBandwidth).CombinedOutput()

Nov 28 06:46:26 ic-node8 origin-node: I1128 06:46:26.078507 1724 pod_linux.go:442] SetUpPod network plugin output: + lock_file=/var/lock/openshift-sdn.lock

8.2.1.2.8 get ofport

8.2.1.2.9 EnsureVNIDRules

8.2.1.3 process update request

func (m *podManager) update(req *cniserver.PodRequest) (uint32, error) {

8.2.1.3.1 get namespace

8.2.1.3.2 get POD configuration

8.2.1.3.3 get veth info

8.2.1.3.4 exec SDN script

out, err := exec.Command(sdnScript, updateCmd, hostVethName, contVethMac, podIP, vnidStr, podConfig.ingressBandwidth, podConfig.egressBandwidth).CombinedOutput()

8.2.1.4 process teardown request

8.2.1.4.1 get veth info

8.2.1.4.2 exec SDN script

8.2.1.4.3 Run CNI IPAM release for the container

8.2.1.4.4 sync hostport changes

8.3 start CNI server with handleCNIRequest as handler function

the container runtime sends POD configuration requests to the CNI server, and each request is processed as shown in 8.2.1
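The interplay between 8.2 and 8.3 is a classic queue pattern: the server's handler only enqueues a request and waits for its result, while a single goroutine works through the queue so that POD network operations never run concurrently. Here is a minimal sketch of that pattern. The type and method names follow the ones mentioned above, but the bodies are placeholders, and the command strings other than UPDATE (which appears in the log) are assumptions.

```go
package main

import "fmt"

// podRequest mirrors the kind of request seen in the log: a command, a POD
// name and a channel on which the result is delivered.
type podRequest struct {
	Command string // "ADD", "UPDATE" or "DEL" (ADD and DEL are assumed spellings)
	PodName string
	Result  chan string
}

type podManager struct {
	requests chan *podRequest
}

func newPodManager() *podManager {
	return &podManager{requests: make(chan *podRequest, 20)}
}

// handleCNIRequest is what the CNI server would call for each incoming
// request: enqueue it, then block until the worker has processed it.
func (m *podManager) handleCNIRequest(command, pod string) string {
	req := &podRequest{Command: command, PodName: pod, Result: make(chan string, 1)}
	m.requests <- req
	return <-req.Result
}

// processCNIRequests drains the queue one request at a time.
// The real setup/update/teardown work is replaced by placeholder strings.
func (m *podManager) processCNIRequests() {
	for req := range m.requests {
		switch req.Command {
		case "ADD":
			req.Result <- "setup " + req.PodName
		case "UPDATE":
			req.Result <- "update " + req.PodName
		case "DEL":
			req.Result <- "teardown " + req.PodName
		default:
			req.Result <- "unsupported command " + req.Command
		}
	}
}

func main() {
	m := newPodManager()
	go m.processCNIRequests()
	fmt.Println(m.handleCNIRequest("ADD", "application-2368900360-nltrr"))
	fmt.Println(m.handleCNIRequest("UPDATE", "application-2368900360-nltrr"))
	fmt.Println(m.handleCNIRequest("DEL", "application-2368900360-nltrr"))
}
```

Serializing the requests this way keeps OVS and IPAM changes for different PODs from interleaving, at the cost of processing only one POD at a time.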

9 start the VNID sync goroutine (go kwait.Forever(node.policy.SyncVNIDRules, time.Hour))

10 openshift-sdn ready

Nov 28 06:45:47 ic-node8 origin-node: I1128 06:45:47.382565 1724 node.go:267] openshift-sdn network plugin ready
