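Copyright notice: this is an original article by the author, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.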

Browser driver

from time import time, sleep

from lxml import etree
from selenium.webdriver import Chrome
from selenium.webdriver.chrome.options import Options  # Chrome settings


class Driver:
    def __init__(self, headless=False):
        self.t = time()  # start time, used to report the total run time
        options = Options()
        if headless:
            options.add_argument('--headless')  # run Chrome without a window
        self.d = Chrome(options=options)

    def close(self):
        self.d.quit()
        t = (time() - self.t) / 60
        print('%.2f minutes' % t)

    def get(self, url):
        self.d.get(url)
        sleep(9)  # fixed pause so that scripts on the page can finish rendering
        return self.d.page_source

    def test_xpath(self, url, xpath):
        html = self.get(url)
        element = etree.HTML(html, etree.HTMLParser())
        return element.xpath(xpath)

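def run_xpath(url, xpath):
    d = Driver()
    try:
        ls = d.test_xpath(url, xpath)
    except Exception as e:
        # repr() escapes newlines, so split on the literal two characters "\n"
        ls = repr(e).split('\\n')
    finally:
        d.close()
    # str() keeps the join from failing when the XPath matches elements rather than text
    return '\n'.join(map(str, ls))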

if __name__ == '__main__':
    url = 'https://blog.csdn.net/Yellow_python'
    xpath = '//*[@id="asideProfile"]/div[3]/dl[2]/dd/@title'
    print(run_xpath(url, xpath))

    # An invalid URL: run_xpath returns the exception text instead of raising
    url = '123'
    xpath = '123'
    print(run_xpath(url, xpath))

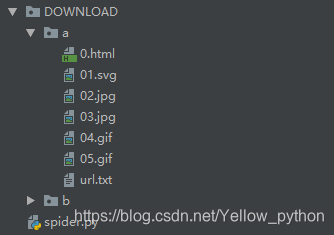
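
`Driver.get` in the listing above pauses for a fixed number of seconds after every page load. As a rough sketch of an alternative, the block below polls a condition until it holds or a timeout expires, using only the standard library. `wait_until` is a hypothetical helper written for this illustration; it is not part of Selenium or of the original script. Selenium also ships its own `WebDriverWait` for this purpose.

```python
from time import monotonic, sleep


def wait_until(condition, timeout=9.0, interval=0.5):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Hypothetical helper for illustration only.
    Returns the truthy value, or None if the timeout is reached first.
    """
    deadline = monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if monotonic() >= deadline:
            return None
        sleep(interval)


if __name__ == '__main__':
    # Stand-in for a page that is ready on the third poll.
    state = {'polls': 0}

    def page_ready():
        state['polls'] += 1
        return state['polls'] >= 3

    print(wait_until(page_ready, timeout=2, interval=0.01))
```

With a real driver, the condition could be something like `lambda: 'asideProfile' in self.d.page_source`, so that fast pages return early and slow pages still get the full timeout.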

Saving pages with their images

from time import time, sleep
import os
import re
from urllib.parse import urlsplit, urljoin

from requests import get
from selenium.webdriver import Chrome


def red(*args):
    """Print each argument in red (ANSI escape codes)"""
    for a in args:
        print('\033[031m{}\033[0m'.format(a))


PATH = 'DOWNLOAD/'  # root folder for downloads
SECONDS = 5  # pause after each page load

class Driver:
    """Page downloader"""

    def __init__(self):
        self.t = time()
        self.d = Chrome()

    def close(self):
        self.d.quit()
        t = (time() - self.t) / 60
        print('%.2f minutes' % t)

    def get(self, url):
        self.d.get(url)
        sleep(SECONDS)  # wait for the page to render
        return self.d.page_source

def get_img(url, times=3):
    """Download an image; return b'' once all retries are used up"""
    if times < 0:
        return b''
    headers = {'User-Agent': 'Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;360SE)'}
    try:
        r = get(url, headers=headers, timeout=30)
    except Exception as e:
        red(url, times, e)
        return get_img(url, times - 1)
    if r.status_code == 200:
        return r.content
    else:
        red(url, times, r.status_code)
        return get_img(url, times - 1)

def save_img(url_img, catalog, name):
    """Download an image, save it, and return its file name"""
    suffixes = ['.gif', '.jpg', '.png', '.svg']
    for suffix in suffixes:
        if suffix in url_img.lower():  # also match upper-case suffixes such as .JPG
            path_img = name + suffix
            break
    else:
        # no known suffix in the URL: fall back to .jpg
        path_img = name + suffixes[1]
    path_img_abs = os.path.join(catalog, path_img)
    content = get_img(url_img)
    with open(path_img_abs, 'wb') as f:
        f.write(content)
    return path_img

def mkdir(path):
    """Create a folder (and any missing parents) if it does not exist"""
    os.makedirs(path, exist_ok=True)

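def join_url(url, postfix):
    """Complete a link against the URL of the page it appears on"""
    # Joining against the full page URL, not just the domain, also resolves
    # path-relative links such as "img/a.png" correctly
    return urljoin(url, postfix)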

def save_html(html, path, url):
    """Save a page's HTML together with its images"""
    # Create the folder
    mkdir(path)
    # Save the page URL
    with open(os.path.join(path, 'url.txt'), 'w', encoding='utf-8') as f:
        f.write(url)
    # Extract image links
    url_imgs = re.findall('img[^>]+src="([^"]+)"', html)
    for i, url_img in enumerate(url_imgs, 1):
        # Complete the URL
        url_total = join_url(url, url_img)
        # Image file name without suffix: 01, 02, ...
        path_img = '%02d' % i
        # Download and save the image
        path_img = save_img(url_total, path, path_img)
        # Point the HTML at the local copy
        html = html.replace(url_img, path_img, 1)
    # Save the HTML
    with open(os.path.join(path, '0.html'), 'w', encoding='utf-8') as f:
        f.write(html)

def download_html(url, path):
    mkdir(PATH)
    d = Driver()
    try:
        html = d.get(url)  # download the page
        save_html(html, os.path.join(PATH, path), url)  # save HTML and images
    except Exception as e:
        red(e)
    finally:
        d.close()

if __name__ == '__main__':
    download_html('https://github.com/AryeYellow', 'a')
    download_html('https://me.csdn.net/Yellow_python', 'b')
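
`save_html` in the listing above finds image links with a regular expression and completes them with `join_url`. As a hedged sketch of the same link-handling steps using only the standard library, the block below collects `src` attributes with `html.parser.HTMLParser`, resolves each one against the page URL with `urljoin`, and picks a file suffix from the URL path alone. The names `ImgSrcParser`, `extract_img_urls`, and `guess_suffix`, as well as the example.com URLs, are assumptions made for illustration and are not part of the original script.

```python
import os
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class ImgSrcParser(HTMLParser):
    """Collect the src attribute of every <img> tag (illustrative helper)."""

    def __init__(self):
        super().__init__()
        self.srcs = []

    def handle_starttag(self, tag, attrs):
        if tag == 'img':
            src = dict(attrs).get('src')
            if src:
                self.srcs.append(src)


def extract_img_urls(html, page_url):
    """Return absolute image URLs found in `html`, resolved against `page_url`."""
    parser = ImgSrcParser()
    parser.feed(html)
    return [urljoin(page_url, src) for src in parser.srcs]


def guess_suffix(url, default='.jpg'):
    """Take the suffix from the URL path only, ignoring the query string."""
    suffix = os.path.splitext(urlsplit(url).path)[1].lower()
    return suffix if suffix in ('.gif', '.jpg', '.png', '.svg') else default


if __name__ == '__main__':
    # Made-up page and markup covering root-relative, path-relative,
    # protocol-relative, and absolute links.
    page = 'https://example.com/blog/post/index.html'
    html = '''
    <img src="/static/logo.png">
    <img alt="x" src="pics/photo.JPG?size=large">
    <img src="//cdn.example.com/a.gif"/>
    <img src="https://example.org/chart">
    '''
    for url in extract_img_urls(html, page):
        print(guess_suffix(url), url)
```

A parser-based approach also picks up `src` values written with single quotes, which the regular expression in `save_html` does not match. Whether that matters depends on the pages being saved.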

Scraping results