版权声明:本文为博主原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接和本声明。

文章目录

原理简述

统计语言模型(Statistical Language Model),可用于计算一个句子的合理程度。

表示句子,由有序的

个词

组成,句子概率

的计算公式如下:

N-gram

unigram

bigram

Add-k Smoothing

k=1;bigram;C表示count

e.g.

我很帅

她很美

代码&步骤

from collections import Counter

import numpy as np

"""语料"""

corpus = '''她的菜很好 她的菜很香 她的他很好 他的菜很香 他的她很好

很香的菜 很好的她 很菜的他 她的好 菜的香 他的菜 她很好 他很菜 菜很好'''.split()

"""语料预处理"""

counter = Counter() # 词频统计

for sentence in corpus:

for word in sentence:

counter[word] += 1

counter = counter.most_common()

lec = len(counter)

word2id = {counter[i][0]: i for i in range(lec)}

id2word = {i: w for w, i in word2id.items()}

"""N-gram建模训练"""

unigram = np.array([i[1] for i in counter]) / sum(i[1] for i in counter)

bigram = np.zeros((lec, lec)) + 1e-8

for sentence in corpus:

sentence = [word2id[w] for w in sentence]

for i in range(1, len(sentence)):

bigram[[sentence[i - 1]], [sentence[i]]] += 1

for i in range(lec):

bigram[i] /= bigram[i].sum()

"""句子概率"""

def prob(sentence):

s = [word2id[w] for w in sentence]

les = len(s)

if les < 1:

return 0

p = unigram[s[0]]

if les < 2:

return p

for i in range(1, les):

p *= bigram[s[i - 1], s[i]]

return p

print('很好的菜', prob('很好的菜'))

print('菜很好的', prob('菜很好的'))

print('菜好的很', prob('菜好的很'))

"""排列组合"""

def permutation_and_combination(ls_ori, ls_all=None):

ls_all = ls_all or [[]]

le = len(ls_ori)

if le == 1:

ls_all[-1].append(ls_ori[0])

ls_all.append(ls_all[-1][: -2])

return ls_all

for i in range(le):

ls, lsi = ls_ori[:i] + ls_ori[i + 1:], ls_ori[i]

ls_all[-1].append(lsi)

ls_all = permutation_and_combination(ls, ls_all)

if ls_all[-1]:

ls_all[-1].pop()

else:

ls_all.pop()

return ls_all

print('123排列组合', permutation_and_combination([1, 2, 3]))

"""给定词组,返回最大概率组合的句子"""

def max_prob(words):

pc = permutation_and_combination(words) # 生成排列组合

p, w = max((prob(s), s) for s in pc)

return p, ''.join(w)

print(*max_prob(list('香很的菜')))

print(*max_prob(list('好很的他菜')))

print(*max_prob(list('好很的的她菜')))

1、工具导入

from collections import Counter

import numpy as np, pandas as pd

pdf = lambda data, index=None, columns=None: pd.DataFrame(data, index, columns)

pandas用于可视化(Jupyter下),亦可用matplotlib、seaborn等工具

2、语料预处理

corpus = '她很香 她很菜 她很好 他很菜 他很好 菜很好'.split()

counter = Counter() # 词频统计

for sentence in corpus:

for word in sentence:

counter[word] += 1

counter = counter.most_common()

words = [wc[0] for wc in counter] # 词库(用于可视化)

lec = len(counter)

word2id = {counter[i][0]: i for i in range(lec)}

id2word = {i: w for w, i in word2id.items()}

pdf(counter, None, ['word', 'freq'])

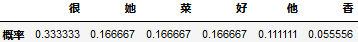

3、unigram

unigram = np.array([i[1] for i in counter]) / sum(i[1] for i in counter)

pdf(unigram.reshape(1, lec), ['概率'], words)

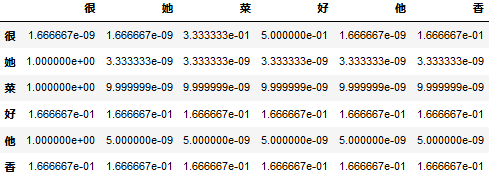

4、bigram

bigram = np.zeros((lec, lec)) + 1e-8 # 平滑

for sentence in corpus:

sentence = [word2id[w] for w in sentence]

for i in range(1, len(sentence)):

bigram[[sentence[i - 1]], [sentence[i]]] += 1

# 频数

pd.DataFrame(bigram, words, words, int)

# 频数 --> 概率

for i in range(lec):

bigram[i] /= bigram[i].sum()

pdf(bigram, words, words)

5、概率计算

def prob(sentence):

s = [word2id[w] for w in sentence]

les = len(s)

if les < 1:

return 0

p = unigram[s[0]]

if les < 2:

return p

for i in range(1, les):

p *= bigram[s[i - 1], s[i]]

return p

print(prob('菜很香'), 1 / 6 / 6)

基于Bigram的文本生成

https://github.com/AryeYellow/NLP/blob/master/TextGeneration/tg_trigram_and_cnn.ipynb

from collections import Counter

from random import choice

from jieba import lcut

# 语料读取、分词

with open('corpus.txt', encoding='utf-8') as f:

corpus = [lcut(line) for line in f.read().strip().split()]

# 词频统计

counter = Counter(word for words in corpus for word in words)

# N-gram建模训练

bigram = {w: Counter() for w in counter.keys()}

for words in corpus:

for i in range(1, len(words)):

bigram[words[i - 1]][words[i]] += 1

for k, v in bigram.items():

total2 = sum(v.values())

v = {w: c / total2 for w, c in v.items()}

bigram[k] = v

# 文本生成

n = 5 # 开放度

while True:

first = input('首字:').strip()

if first not in counter:

first = choice(list(counter))

next_words = sorted(bigram[first], key=lambda w: bigram[first][w])[:n]

next_word = choice(next_words) if next_words else ''

sentence = first + next_word

while bigram[next_word]:

next_word = choice(sorted(bigram[next_word], key=lambda w: bigram[next_word][w])[:n])

sentence += next_word

print(sentence)

附录

| en | cn |

|---|---|

| grammar | 语法 |

| unique | 唯一的 |

| binary | 二元的 |

| permutation | 排列 |

| combination | 组合 |

统计语言模型应用于词性标注,Python算法实现