Check the Spark version:

spark-sql --version
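To check the version from a script, here is a minimal Python sketch. It assumes the banner printed by `spark-sql --version` contains a line like `version 2.4.3`, followed by a `Using Scala version ...` line that must be skipped. The helper names are hypothetical. Both stdout and stderr are searched, so the sketch does not depend on which stream the banner is written to.

```python
import re
import subprocess

# Assumption: the Spark banner contains "version X.Y.Z"; the lookbehind skips
# the separate "Using Scala version ..." line.
VERSION_RE = re.compile(r"(?<!Scala )version\s+(\d+\.\d+\.\d+)")


def parse_spark_version(text):
    """Return the first Spark version string found in text, or None."""
    match = VERSION_RE.search(text)
    return match.group(1) if match else None


def spark_version(cmd=("spark-sql", "--version")):
    """Run the version command and parse its combined output (hypothetical helper)."""
    result = subprocess.run(list(cmd), stdout=subprocess.PIPE,
                            stderr=subprocess.PIPE, universal_newlines=True)
    return parse_spark_version(result.stdout + result.stderr)
```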

Open-source Spark package (downloaded from the Apache dist site):

spark-2.4.3-bin-hadoop2.8.tgz

1/ For Spark to access S3, copy the AWS jars from Hadoop's tools/lib into Spark's jars directory:

cp /usr/lib/hadoop-current/share/hadoop/tools/lib/*aws* /usr/lib/spark-current/jars/

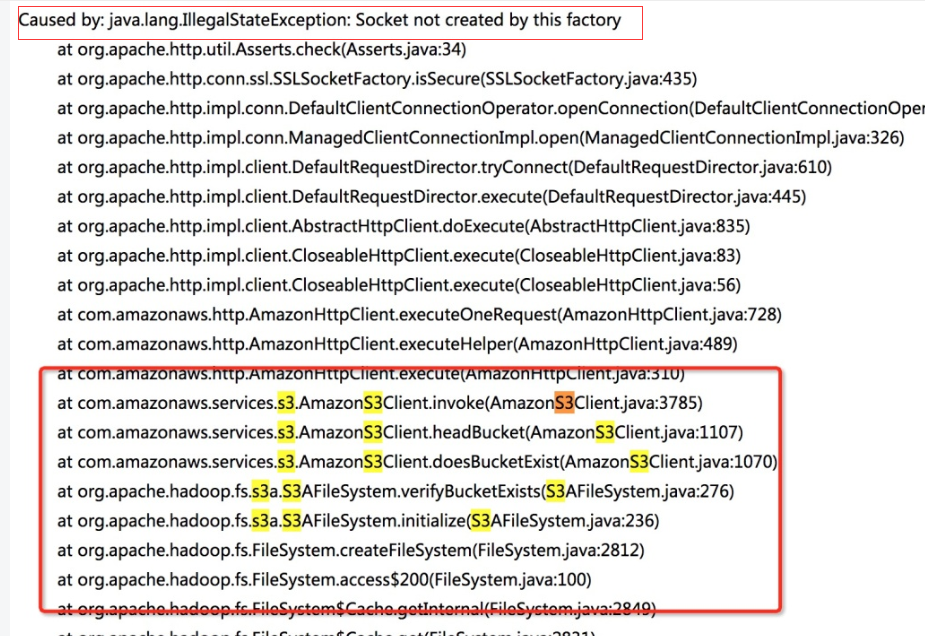
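To confirm step 1 worked, you can check that the jars actually landed in Spark's jars directory. Below is a minimal Python sketch. The helper names are hypothetical, and the two jar-name prefixes (`hadoop-aws`, `aws-java-sdk`) are assumptions based on the usual naming. Adjust them to whatever `*aws*` actually matched on your cluster.

```python
import os

# Assumed jar-name prefixes for S3A support (hadoop-aws plus the AWS SDK);
# illustrative only, adjust to the jars your Hadoop build ships.
REQUIRED_PREFIXES = ("hadoop-aws", "aws-java-sdk")


def missing_jars(filenames, prefixes=REQUIRED_PREFIXES):
    """Return the prefixes that no .jar file name in `filenames` starts with."""
    jars = [name for name in filenames if name.endswith(".jar")]
    return [p for p in prefixes if not any(j.startswith(p) for j in jars)]


def missing_jars_in_dir(jar_dir, prefixes=REQUIRED_PREFIXES):
    """Hypothetical helper: run the check against a directory listing."""
    return missing_jars(os.listdir(jar_dir), prefixes)
```

For example, `missing_jars_in_dir("/usr/lib/spark-current/jars")` should return an empty list once the copy has been done.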

Socket problem:

wget https://repo1.maven.org/maven2/org/apache/httpcomponents/httpclient/4.3.6/httpclient-4.3.6.jar

Replace httpclient-4.5.6.jar under /usr/lib/spark-current/jars/ with httpclient-4.3.6.jar and the problem goes away.

# The first run failed with "socket not created by this factory". I first tried replacing the Spark package, which didn't help; replacing httpclient fixed it.
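The fix amounts to leaving exactly one httpclient version in Spark's jars directory. The Python sketch below plans that swap from a directory listing. It is a hypothetical helper that only works out what to remove and add; it does not move any files. Removing 4.5.6 matters, rather than just adding 4.3.6 next to it: with both jars on the classpath, which copy wins is not something to rely on.

```python
import re

# Matches e.g. "httpclient-4.5.6.jar" but not "httpclient-cache-4.5.6.jar"
# or "httpcore-4.4.10.jar".
HTTPCLIENT_JAR = re.compile(r"^httpclient-(\d+(?:\.\d+)*)\.jar$")


def plan_httpclient_swap(filenames, wanted="4.3.6"):
    """Return (jars_to_remove, jar_to_add) so only httpclient-<wanted>.jar remains.

    jar_to_add is None when the wanted version is already present.
    """
    present = {}
    for name in filenames:
        match = HTTPCLIENT_JAR.match(name)
        if match:
            present[match.group(1)] = name
    to_remove = sorted(name for ver, name in present.items() if ver != wanted)
    to_add = None if wanted in present else "httpclient-%s.jar" % wanted
    return to_remove, to_add
```

Usage: `plan_httpclient_swap(os.listdir("/usr/lib/spark-current/jars"))` returns `(["httpclient-4.5.6.jar"], "httpclient-4.3.6.jar")` on a directory like the one described above.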

S3A settings in the Hadoop configuration (typically core-site.xml); replace the placeholder values with your own keys:

<property>
  <name>fs.s3a.access.key</name>
  <value>access.key</value>
</property>
<property>
  <name>fs.s3a.secret.key</name>
  <value>secret.key</value>
</property>
<property>
  <name>fs.s3a.impl</name>
  <value>org.apache.hadoop.fs.s3a.S3AFileSystem</value>
</property>
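If these settings are kept in code, for example to template them per environment, a small Python sketch can render them in the same `<property>` layout. It wraps them in the `<configuration>` root used by Hadoop `*-site.xml` files. The function name is hypothetical, and the values are the same placeholders as above.

```python
import xml.etree.ElementTree as ET


def hadoop_properties_xml(props):
    """Render a dict as <configuration><property><name/><value/></property>...</configuration>."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value  # ElementTree escapes &, <, >
    return ET.tostring(root, encoding="unicode")


S3A_PROPS = {
    "fs.s3a.access.key": "access.key",  # placeholder, as in the notes
    "fs.s3a.secret.key": "secret.key",  # placeholder, as in the notes
    "fs.s3a.impl": "org.apache.hadoop.fs.s3a.S3AFileSystem",
}
```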

# Sent by Zhenqian: making EMR Hadoop access S3

sudo cp /usr/lib/hadoop-current/share/hadoop/tools/lib/*aws* /usr/lib/hadoop-current/share/hadoop/common/lib/

sudo cp /usr/lib/hadoop-current/share/hadoop/tools/lib/*jackson* /usr/lib/hadoop-current/share/hadoop/common/lib/

sudo cp /usr/lib/hadoop-current/share/hadoop/tools/lib/joda-time-2.9.4.jar /usr/lib/hadoop-current/share/hadoop/common/lib/
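The three `cp` commands above can be sketched in Python as a single pattern-based copy. The helper names are hypothetical. This version differs from `cp` in one way: it skips jars already present in the destination instead of overwriting them. It also needs the same write permission that `sudo` provides in the shell version.

```python
import fnmatch
import os
import shutil

# Patterns taken from the three cp commands above.
PATTERNS = ("*aws*", "*jackson*", "joda-time-2.9.4.jar")


def plan_copies(src_names, dst_names, patterns=PATTERNS):
    """Return source file names that match any pattern and are not yet in dst."""
    existing = set(dst_names)
    return sorted(
        name for name in src_names
        if any(fnmatch.fnmatch(name, p) for p in patterns) and name not in existing
    )


def copy_jars(src_dir, dst_dir, patterns=PATTERNS):
    """Copy the planned jars from src_dir into dst_dir and return their names."""
    to_copy = plan_copies(os.listdir(src_dir), os.listdir(dst_dir), patterns)
    for name in to_copy:
        shutil.copy2(os.path.join(src_dir, name), os.path.join(dst_dir, name))
    return to_copy
```

Usage: `copy_jars("/usr/lib/hadoop-current/share/hadoop/tools/lib", "/usr/lib/hadoop-current/share/hadoop/common/lib")`.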

# Test examples

Example 1: test S3 access from the pyspark shell

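pyspark --queue algo_spark

# In the pyspark shell: query one partition of a table backed by S3
data = spark.sql("""
select * from oride_source.order_driver_feature_new where dt="{dt}" and hour="10"
""".format(dt="2020-01-09"))
data.take(2)  # fetch two rows; this succeeds only if the S3 read works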
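Example 1 hard-codes `hour="10"`. To test other partitions, a small helper can build the same query and reject malformed values before they reach `spark.sql`. This is a sketch only. The function name is hypothetical, and the zero-padded `YYYY-MM-DD` date and two-digit hour formats are assumed from the values in the example.

```python
from datetime import datetime

QUERY = ('select * from oride_source.order_driver_feature_new '
         'where dt="{dt}" and hour="{hour}"')

# Assumed hour format: two digits, "00" through "23".
VALID_HOURS = {"%02d" % h for h in range(24)}


def partition_query(dt, hour="10"):
    """Build the Example 1 query for one dt/hour partition."""
    # strptime rejects impossible dates; the round trip rejects "2020-1-9".
    if datetime.strptime(dt, "%Y-%m-%d").strftime("%Y-%m-%d") != dt:
        raise ValueError("dt must be zero-padded YYYY-MM-DD: %r" % dt)
    if hour not in VALID_HOURS:
        raise ValueError("hour must be two digits from 00 to 23: %r" % hour)
    return QUERY.format(dt=dt, hour=hour)
```

In the pyspark shell: `spark.sql(partition_query("2020-01-09", "11")).take(2)`.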

Example 2: test S3 access via spark-submit

su - hdfs
cd /home/hdfs/bowen.wang/algo-offline-job/direct_dispatch_info
# run from the job directory so that PYTHONPATH=./ points at it
PYTHONPATH=./ /usr/lib/spark-current/bin/spark-submit --queue algo_spark ./all_city_driver_info.py prod
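The same submit command can be driven from Python. This also shows one alternative to editing XML: Spark copies `spark.hadoop.*` properties into the Hadoop configuration, so S3A settings can be passed as `--conf` flags. This is a sketch with a hypothetical helper. The default paths and queue come from the command above.

```python
import os


def build_submit(script, args=(), queue="algo_spark", hadoop_conf=None,
                 spark_submit="/usr/lib/spark-current/bin/spark-submit"):
    """Return (argv, env) mirroring: PYTHONPATH=./ spark-submit --queue <queue> <script> <args>."""
    argv = [spark_submit, "--queue", queue]
    for key, value in (hadoop_conf or {}).items():
        # spark.hadoop.<key> is copied by Spark into the Hadoop configuration as <key>
        argv += ["--conf", "spark.hadoop.%s=%s" % (key, value)]
    argv.append(script)
    argv.extend(args)
    env = dict(os.environ, PYTHONPATH="./")  # relative: depends on the working directory
    return argv, env
```

Usage (as the hdfs user): `subprocess.run(argv, env=env, cwd="/home/hdfs/bowen.wang/algo-offline-job/direct_dispatch_info", check=True)`. The `cwd` matters because `PYTHONPATH=./` is resolved against it. Keys passed as `--conf` flags are visible in the process list, so the XML route is the safer place for real credentials.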