This is my first technical blog post!

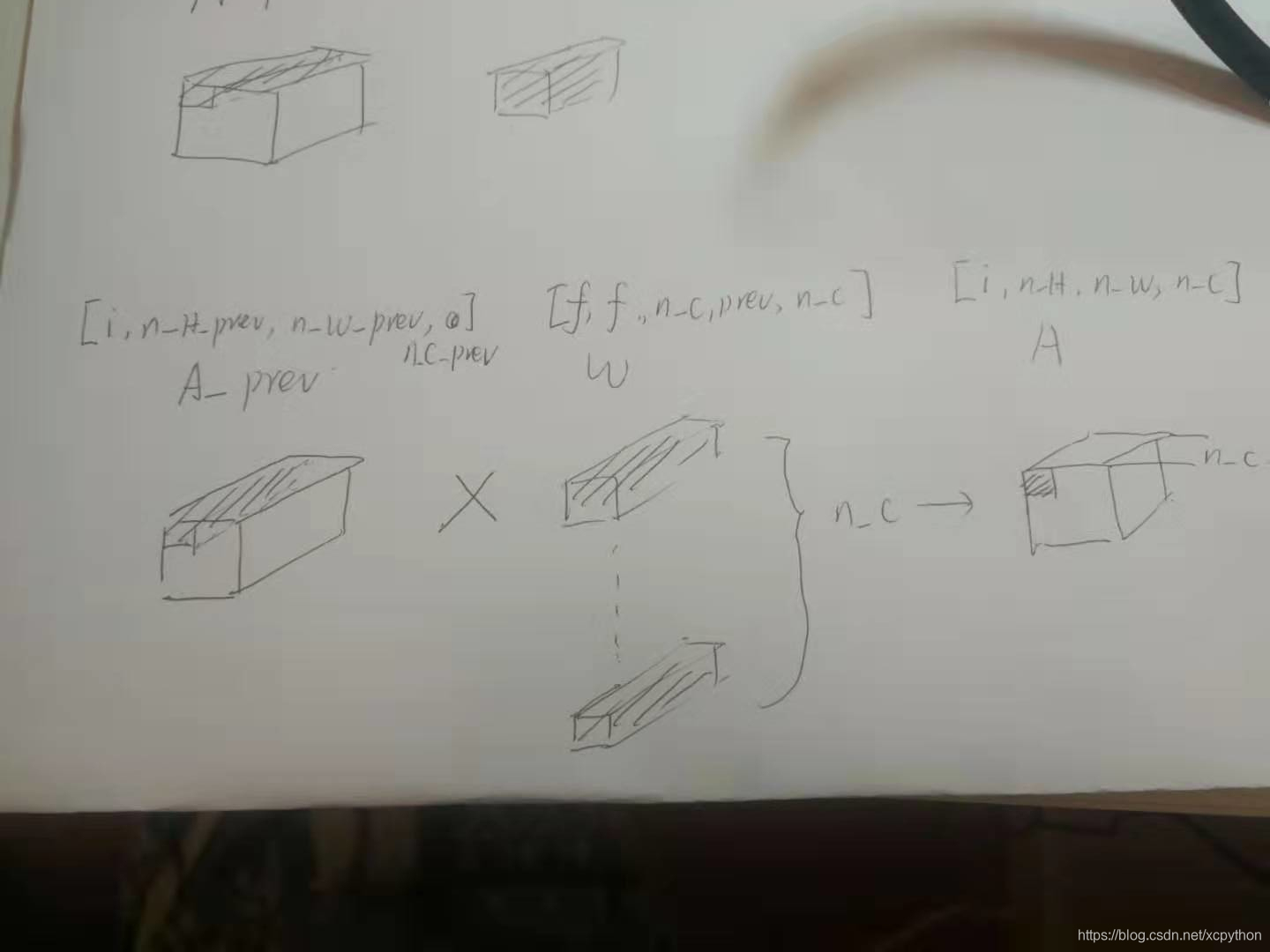

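While working through the convolutional neural network modeling part of Andrew Ng's deep learning course, I ran into a problem: when implementing the CNN forward pass without a deep learning framework, I could not keep straight how the loops relate to the dimensions of each variable, for example in the process shown in the figure above.

The best approach is to simplify the problem and look at its smallest, most essential step, which is exactly what the figure shows: how one specific point of the output, for a single example and a single channel, is computed. The full implementation is the `conv_forward` function below.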
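The single-point view can be sketched in isolation before reading the full function. The sizes here (a 5x5 input with 3 channels, one 3x3 filter, stride 2, no padding) and the names `a_prev`, `W_c`, `b_c` and `z_hw` are made up for illustration; they do not come from the course assignment.

```python
import numpy as np

# Hypothetical sizes, chosen only for illustration.
n_H_prev, n_W_prev, n_C_prev = 5, 5, 3   # one input example
f, stride = 3, 2                          # filter size and stride (no padding)

rng = np.random.default_rng(0)
a_prev = rng.standard_normal((n_H_prev, n_W_prev, n_C_prev))
W_c = rng.standard_normal((f, f, n_C_prev))  # one filter = one output channel
b_c = 0.5                                     # that filter's bias

# Output size: floor((n_prev - f) / stride) + 1
n_H = (n_H_prev - f) // stride + 1
n_W = (n_W_prev - f) // stride + 1

# One output point (h, w) reads an f x f x n_C_prev window of the input.
h, w = 1, 0
vert_start, horiz_start = h * stride, w * stride
a_slice = a_prev[vert_start:vert_start + f, horiz_start:horiz_start + f, :]

# Element-wise product, sum over all three axes, add the bias: one scalar.
z_hw = np.sum(a_slice * W_c) + b_c

print(n_H, n_W)       # 2 2
print(a_slice.shape)  # (3, 3, 3)
```

Everything else in the forward pass repeats this step: once per example `i`, per output row `h`, per output column `w`, and per filter `c`.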

    import numpy as np

    # zero_pad() and conv_single_step() are helper functions defined elsewhere;
    # they are not shown in this post.

    def conv_forward(A_prev, W, b, hparameters):
        """
        Implements the forward propagation for a convolution function

        Arguments:
        A_prev -- output activations of the previous layer,
                  numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)
        W -- Weights, numpy array of shape (f, f, n_C_prev, n_C)
        b -- Biases, numpy array of shape (1, 1, 1, n_C)
        hparameters -- python dictionary containing "stride" and "pad"

        Returns:
        Z -- conv output, numpy array of shape (m, n_H, n_W, n_C)
        cache -- cache of values needed for the conv_backward() function
        """
        # Retrieve dimensions from A_prev's shape
        (m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape

        # Retrieve dimensions from W's shape
        (f, f, n_C_prev, n_C) = W.shape

        # Retrieve information from "hparameters"
        stride = hparameters["stride"]
        pad = hparameters["pad"]

        # Compute the dimensions of the CONV output volume:
        # n = floor((n_prev - f + 2 * pad) / stride) + 1
        n_H = int(np.floor((n_H_prev - f + 2 * pad) / stride) + 1)
        n_W = int(np.floor((n_W_prev - f + 2 * pad) / stride) + 1)

        # Initialize the output volume Z with zeros
        Z = np.zeros((m, n_H, n_W, n_C))

        # Create A_prev_pad by padding A_prev
        A_prev_pad = zero_pad(A_prev, pad)

        for i in range(m):  # loop over the batch of training examples
            # Select the ith training example's padded activation
            a_prev_pad = A_prev_pad[i, :, :, :]

            for h in range(n_H):  # loop over vertical axis of the output volume
                # Find the vertical start and end of the current "slice"
                vert_start = h * stride
                vert_end = vert_start + f

                for w in range(n_W):  # loop over horizontal axis of the output volume
                    # Find the horizontal start and end of the current "slice"
                    horiz_start = w * stride
                    horiz_end = horiz_start + f

                    for c in range(n_C):  # loop over channels (= #filters) of the output volume
                        # Use the corners to define the (3D) slice of a_prev_pad
                        a_slice_prev = a_prev_pad[vert_start:vert_end, horiz_start:horiz_end, :]

                        # Convolve the (3D) slice with filter c and its bias
                        # to get back one output neuron
                        weights = W[:, :, :, c]
                        biases = b[:, :, :, c]
                        Z[i, h, w, c] = conv_single_step(a_slice_prev, weights, biases)

        # Make sure the output shape is correct
        assert Z.shape == (m, n_H, n_W, n_C)

        # Save information in "cache" for the backprop
        cache = (A_prev, W, b, hparameters)

        return Z, cache
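`conv_forward` calls two helpers, `zero_pad` and `conv_single_step`, whose bodies do not appear in this post. The sketch below shows what they could look like, assuming zero padding on the height and width axes only and a plain scalar result per window. Both bodies are assumptions for illustration, not code taken from the assignment.

```python
import numpy as np

def zero_pad(X, pad):
    """Assumed helper: zero-pad the height and width axes of X.

    X has shape (m, n_H, n_W, n_C); the batch and channel axes are untouched.
    """
    return np.pad(X, ((0, 0), (pad, pad), (pad, pad), (0, 0)),
                  mode="constant", constant_values=0)

def conv_single_step(a_slice_prev, W, b):
    """Assumed helper: apply one filter to one window of the same shape.

    a_slice_prev and W have shape (f, f, n_C_prev); b holds a single value.
    """
    # Element-wise product, summed over all axes, plus the bias.
    # np.squeeze turns a (1, 1, 1) bias into a 0-d value so float() is safe.
    return float(np.sum(a_slice_prev * W) + np.squeeze(b))

# Small shape check with made-up inputs.
x_pad = zero_pad(np.ones((2, 3, 3, 1)), pad=1)
print(x_pad.shape)  # (2, 5, 5, 1)

z = conv_single_step(np.ones((3, 3, 2)), np.ones((3, 3, 2)), np.ones((1, 1, 1)))
print(z)  # 19.0
```

Returning a Python float keeps the assignment `Z[i, h, w, c] = ...` a plain scalar write, which is why the bias is squeezed before the addition.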

That's all.