Building the Network

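This is the second part of the Udacity bike-sharing project. For the first part, see https://blog.csdn.net/xiuxiuxiu666/article/details/104253921

The main goal of building this network is to become familiar with forward propagation and backpropagation.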

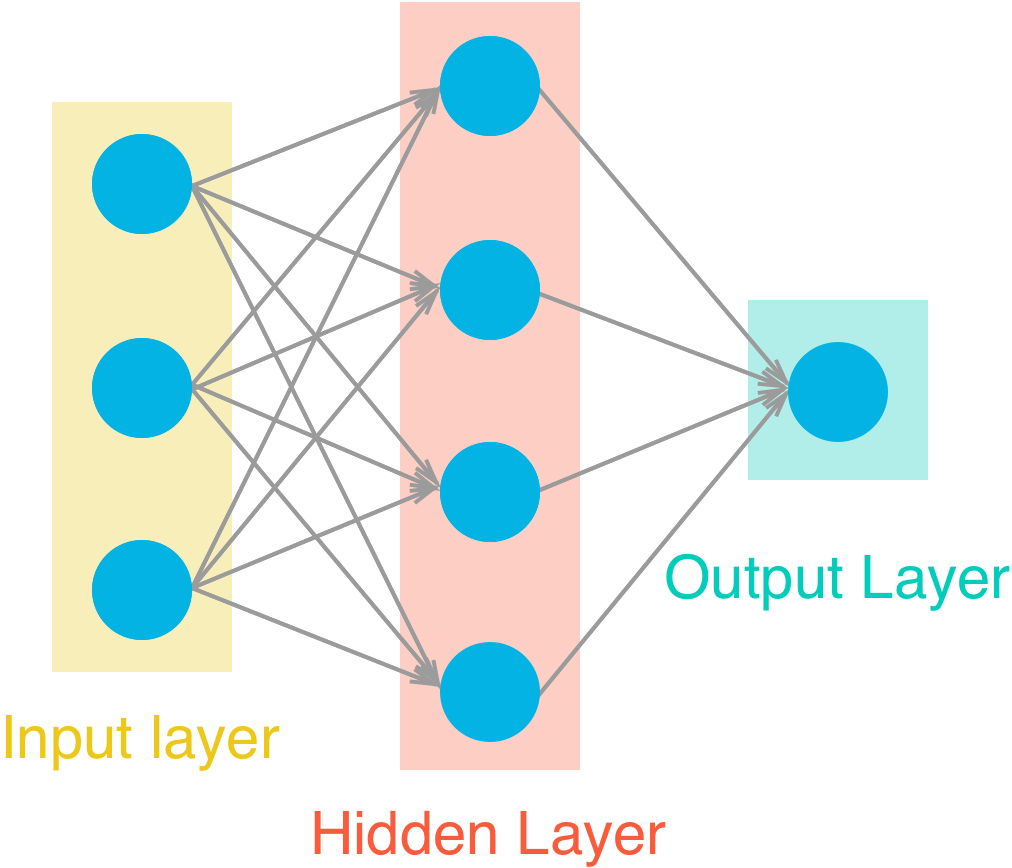

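The network has two layers: a hidden layer and an output layer. The hidden layer uses the sigmoid function as its activation function. The output layer has a single node. The network is built accordingly.

Full code: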

    import numpy as np


    class NeuralNetwork(object):
        def __init__(self, input_nodes, hidden_nodes, output_nodes, learning_rate):
            # Set number of nodes in input, hidden and output layers.
            self.input_nodes = input_nodes
            self.hidden_nodes = hidden_nodes
            self.output_nodes = output_nodes

            # Initialize weights from a zero-mean normal distribution whose
            # standard deviation is 1/sqrt(number of nodes feeding the layer).
            self.weights_input_to_hidden = np.random.normal(
                0.0, self.input_nodes**-0.5, (self.input_nodes, self.hidden_nodes))
            self.weights_hidden_to_output = np.random.normal(
                0.0, self.hidden_nodes**-0.5, (self.hidden_nodes, self.output_nodes))
            self.lr = learning_rate

            # Sigmoid activation function for the hidden layer.
            self.activation_function = lambda x: 1 / (1 + np.exp(-x))

        def train(self, features, targets):
            ''' Train the network on batch of features and targets.

                features: 2D array, each row is one data record, each column is a feature
                targets: 1D array of target values
            '''
            n_records = features.shape[0]
            delta_weights_i_h = np.zeros(self.weights_input_to_hidden.shape)
            delta_weights_h_o = np.zeros(self.weights_hidden_to_output.shape)

            for X, y in zip(features, targets):
                ### Forward pass ###
                hidden_inputs = np.dot(X, self.weights_input_to_hidden)  # signals into hidden layer
                hidden_outputs = self.activation_function(hidden_inputs)  # signals from hidden layer
                final_inputs = np.dot(hidden_outputs, self.weights_hidden_to_output)  # signals into final output layer
                final_outputs = final_inputs  # signals from final output layer (linear, no sigmoid)

                ### Backward pass ###
                # Output layer error: difference between desired target and actual output.
                error = y - final_outputs
                # The output activation is f(x) = x, whose derivative is 1.
                output_error_term = error
                # Propagate the output error back through the hidden-to-output weights.
                hidden_error = np.dot(self.weights_hidden_to_output, output_error_term)
                # Multiply by the derivative of the sigmoid activation.
                hidden_error_term = hidden_error * hidden_outputs * (1 - hidden_outputs)

                # Weight step (input to hidden): hidden error term times the input values
                delta_weights_i_h += hidden_error_term * X[:, None]
                # Weight step (hidden to output): output error term times the hidden outputs
                delta_weights_h_o += output_error_term * hidden_outputs[:, None]

            # Update the weights with the gradient descent step, averaged over the batch.
            self.weights_hidden_to_output += self.lr * delta_weights_h_o / n_records
            self.weights_input_to_hidden += self.lr * delta_weights_i_h / n_records

        def run(self, features):
            ''' Run a forward pass through the network with input features

                Arguments
                ---------
                features: 1D array of feature values, or a 2D array with one record per row
            '''
            hidden_inputs = np.dot(features, self.weights_input_to_hidden)  # signals into hidden layer
            hidden_outputs = self.activation_function(hidden_inputs)  # signals from hidden layer
            final_inputs = np.dot(hidden_outputs, self.weights_hidden_to_output)  # signals into final output layer
            final_outputs = final_inputs  # signals from final output layer
            return final_outputs


    def MSE(y, Y):
        # Mean squared error between predictions y and targets Y.
        return np.mean((y-Y)**2)

==========================================

In this code:

Forward propagation:

    hidden_inputs = np.dot(X, self.weights_input_to_hidden)  # inputs times the first-layer weights
    hidden_outputs = self.activation_function(hidden_inputs)  # apply the activation function
    final_inputs = np.dot(hidden_outputs, self.weights_hidden_to_output)  # hidden-layer outputs times the second-layer weights
    final_outputs = final_inputs  # final output
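To make the array shapes in the forward pass concrete, here is a minimal sketch on made-up numbers. The layer sizes (3 inputs, 2 hidden nodes, 1 output) and all weight values are assumptions chosen for illustration; they are not taken from the project.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


# Hypothetical toy sizes: 3 input features, 2 hidden nodes, 1 output node.
X = np.array([0.5, -0.2, 0.1])
weights_input_to_hidden = np.array([[0.1, -0.2],
                                    [0.4, 0.5],
                                    [-0.3, 0.2]])
weights_hidden_to_output = np.array([[0.3],
                                     [-0.1]])

hidden_inputs = np.dot(X, weights_input_to_hidden)                # shape (2,)
hidden_outputs = sigmoid(hidden_inputs)                           # shape (2,)
final_inputs = np.dot(hidden_outputs, weights_hidden_to_output)   # shape (1,)
final_outputs = final_inputs                                      # linear output layer

print(hidden_inputs.shape, hidden_outputs.shape, final_outputs.shape)
```

Each record is a 1D vector, so the hidden layer produces one value per hidden node and the output layer produces a single number.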

Next comes error backpropagation, which adjusts the weights by gradient descent.

The weight update is Δw = −η (∂E/∂w), where

w: weight

E: error

η: learning rate

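The activation function is written f(u), where u = Σ wi·xi is the weighted sum of the inputs and y = f(u) is the output.

By the chain rule:

∂E/∂wi = (∂E/∂y) × (∂y/∂wi) (the error is a function of the output y, and y is a function of the weights)

Taking the squared error E = ½(r − y)², where r is the target value, the derivative of the error with respect to y is ∂E/∂y = −(r − y)

∂y/∂wi = ∂f(u)/∂wi = (∂f(u)/∂u) × (∂u/∂wi)

The derivative of u with respect to wi depends only on xi: ∂u/∂wi = xi

∂f(u)/∂u is the derivative of the sigmoid function: ∂f(u)/∂u = f(u)(1 − f(u))

Putting it all together: ∂E/∂wi = −(r − y) y (1 − y) xi, so Δwi = −η (∂E/∂wi) = η (r − y) y (1 − y) xi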
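The update rule for a single sigmoid unit can be checked numerically. The sketch below assumes the squared error E = ½(r − y)² and uses made-up values for x, w, r and η. It compares the closed-form step η(r − y)y(1 − y)xi with −η times a finite-difference estimate of ∂E/∂wi.

```python
import numpy as np


def sigmoid(u):
    return 1 / (1 + np.exp(-u))


def error(w, x, r):
    # Squared error E = 1/2 * (r - y)^2 for a single sigmoid unit (assumed form).
    y = sigmoid(np.dot(w, x))
    return 0.5 * (r - y) ** 2


# Hypothetical values, chosen only for illustration.
x = np.array([0.5, -1.0, 2.0])
w = np.array([0.1, 0.3, -0.2])
r = 1.0
eta = 0.5

# Closed-form weight step from the derivation.
y = sigmoid(np.dot(w, x))
analytic_step = eta * (r - y) * y * (1 - y) * x

# Central-difference estimate of dE/dw_i, then step = -eta * dE/dw_i.
eps = 1e-6
numeric_grad = np.zeros_like(w)
for i in range(len(w)):
    w_plus, w_minus = w.copy(), w.copy()
    w_plus[i] += eps
    w_minus[i] -= eps
    numeric_grad[i] = (error(w_plus, x, r) - error(w_minus, x, r)) / (2 * eps)
numeric_step = -eta * numeric_grad

print(np.allclose(analytic_step, numeric_step))
```

If the two steps agree, the signs in the chain-rule derivation are consistent with gradient descent on E.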

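In the network built here, the output layer does not apply the sigmoid function. Its activation is f(u) = u, whose derivative is 1, so the output error term is simply the error.

The weight step for the first layer (input to hidden) is therefore:

    # error propagated back through the weights, times the derivative of the activation function
    hidden_error_term = hidden_error * hidden_outputs * (1 - hidden_outputs)
    # Weight step (input to hidden): times the input values
    delta_weights_i_h += hidden_error_term * X[:, None]

For the second layer (hidden to output):

    output_error_term = error
    # Weight step (hidden to output): output error term times the hidden-layer outputs
    delta_weights_h_o += output_error_term * hidden_outputs[:, None]

(For the detailed derivation, see 《图解深度学习》, p. 25.)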
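The two weight steps can be verified with a numerical gradient check on a tiny network. This is a sketch under stated assumptions: the loss is taken to be ½(y − output)², the sizes (3 inputs, 2 hidden nodes, 1 output) and the random values are made up, and the helpers `forward`, `loss` and `numeric_grad` are hypothetical names introduced only for this example.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def forward(X, w_ih, w_ho):
    hidden_outputs = sigmoid(np.dot(X, w_ih))
    return hidden_outputs, np.dot(hidden_outputs, w_ho)  # linear output layer


def loss(X, y, w_ih, w_ho):
    # Assumed loss: half the squared error for one record.
    return 0.5 * np.sum((y - forward(X, w_ih, w_ho)[1]) ** 2)


def numeric_grad(f, w, eps=1e-6):
    # Central differences; w is perturbed in place and restored afterwards.
    grad = np.zeros_like(w)
    for idx in np.ndindex(w.shape):
        old = w[idx]
        w[idx] = old + eps
        plus = f()
        w[idx] = old - eps
        minus = f()
        w[idx] = old
        grad[idx] = (plus - minus) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
X = rng.normal(size=3)          # one record with 3 features
y = 0.7                         # hypothetical target
w_ih = rng.normal(size=(3, 2))  # input-to-hidden weights
w_ho = rng.normal(size=(2, 1))  # hidden-to-output weights

# Backward pass, using the same expressions as the train method.
hidden_outputs, final_outputs = forward(X, w_ih, w_ho)
error = y - final_outputs
output_error_term = error
hidden_error = np.dot(w_ho, output_error_term)
hidden_error_term = hidden_error * hidden_outputs * (1 - hidden_outputs)
step_i_h = hidden_error_term * X[:, None]
step_h_o = output_error_term * hidden_outputs[:, None]

# The weight steps should equal the negative gradient of the loss.
grad_i_h = numeric_grad(lambda: loss(X, y, w_ih, w_ho), w_ih)
grad_h_o = numeric_grad(lambda: loss(X, y, w_ih, w_ho), w_ho)

print(np.allclose(step_i_h, -grad_i_h), np.allclose(step_h_o, -grad_h_o))
```

Because the steps are the negative gradient, adding `lr * step` to the weights, as the train method does, moves them downhill on the loss.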

=========================================

Training the Network

    import sys

    # train_features, train_targets, val_features and val_targets come from part one.

    ### Set the hyperparameters here ###
    iterations = 2500
    learning_rate = 0.8
    hidden_nodes = 12
    output_nodes = 1

    N_i = train_features.shape[1]
    network = NeuralNetwork(N_i, hidden_nodes, output_nodes, learning_rate)

    losses = {'train': [], 'validation': []}
    for ii in range(iterations):
        # Go through a random batch of 128 records from the training data set
        batch = np.random.choice(train_features.index, size=128)
        # Select the sampled rows by index label
        X, y = train_features.loc[batch].values, train_targets.loc[batch]['cnt']

        network.train(X, y)

        # Printing out the training progress
        train_loss = MSE(network.run(train_features).T, train_targets['cnt'].values)
        val_loss = MSE(network.run(val_features).T, val_targets['cnt'].values)
        sys.stdout.write("\rProgress: {:2.1f}".format(100 * ii/float(iterations)) \
                         + "% ... Training loss: " + str(train_loss)[:5] \
                         + " ... Validation loss: " + str(val_loss)[:5])
        sys.stdout.flush()

        losses['train'].append(train_loss)
        losses['validation'].append(val_loss)

Progress: 100.0% … Training loss: 0.067 … Validation loss: 0.159
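The batch-selection step in the loop can be tried in isolation. The sketch below uses a tiny made-up DataFrame in place of the real `train_features` and `train_targets` (the column values and index labels are assumptions), and selects rows with `.loc`, the label-based indexer in current pandas.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data standing in for train_features / train_targets.
train_features = pd.DataFrame({'temp': [0.1, 0.2, 0.3, 0.4, 0.5],
                               'hum': [0.9, 0.8, 0.7, 0.6, 0.5]},
                              index=[10, 11, 12, 13, 14])
train_targets = pd.DataFrame({'cnt': [1.0, 2.0, 3.0, 4.0, 5.0]},
                             index=train_features.index)

np.random.seed(0)
# np.random.choice samples with replacement by default, so the same
# record can appear more than once in a batch.
batch = np.random.choice(train_features.index, size=3)

# Look up the sampled rows by index label; features and targets stay aligned.
X = train_features.loc[batch].values
y = train_targets.loc[batch]['cnt']

print(X.shape, y.shape)
```

Since the batch is drawn from the index labels rather than from positions, the lookup stays correct even when the index is not a simple 0..n−1 range.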

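    import matplotlib.pyplot as plt

    # Plot the training and validation loss recorded at each iteration.
    plt.plot(losses['train'], label='Training loss')
    plt.plot(losses['validation'], label='Validation loss')
    plt.legend()
    _ = plt.ylim()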


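Checking the Predictions

    import pandas as pd

    fig, ax = plt.subplots(figsize=(8,4))

    # scaled_features (from part one) is assumed to hold the [mean, std] used to standardize each column
    mean, std = scaled_features['cnt']
    # Convert the standardized predictions back to ride counts
    predictions = network.run(test_features).T*std + mean
    ax.plot(predictions[0], label='Prediction')
    ax.plot((test_targets['cnt']*std + mean).values, label='Data')
    # predictions has shape (1, n_records), so use the length of its first row
    ax.set_xlim(right=len(predictions[0]))
    ax.legend()

    # Label the x-axis with one date per day (every 24 hourly records)
    dates = pd.to_datetime(rides.loc[test_data.index]['dteday'])
    dates = dates.apply(lambda d: d.strftime('%b %d'))
    ax.set_xticks(np.arange(len(dates))[12::24])
    _ = ax.set_xticklabels(dates[12::24], rotation=45)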
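The expression `*std + mean` in the plotting code reverses the standardization of the target. The following minimal sketch assumes the targets were scaled as (x − mean) / std in part one; the ride counts are made up for illustration.

```python
import numpy as np

# Hypothetical hourly ride counts.
cnt = np.array([16.0, 40.0, 32.0, 13.0, 1.0])
mean, std = cnt.mean(), cnt.std()

scaled = (cnt - mean) / std      # standardize: zero mean, unit variance
restored = scaled * std + mean   # invert the scaling to recover ride counts

print(np.allclose(restored, cnt))
```

The network is trained on the scaled values, so its raw outputs have to go through the same inverse transform before they can be read as ride counts.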