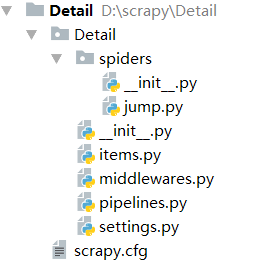


1. Spider file: jump.py

```python
import scrapy

from Detail.items import DetailItem


class JumpSpider(scrapy.Spider):
    name = 'jump'
    allowed_domains = ['meishij.net']
    start_urls = ['https://www.meishij.net/hongpei/']
    page = 1  # the start URL is page 1

    def parse(self, response):
        # Each .listtyle1 block on the list page is one recipe
        recipes = response.css('#listtyle1_list .listtyle1')
        for sel in recipes:
            href = sel.css('a::attr(href)').extract_first()
            if not href:
                continue  # skip blocks without a link instead of raising IndexError
            item = DetailItem()
            item['name'] = sel.css('.i_w .c1 strong::text').extract()
            url = response.urljoin(href)  # handles relative links; absolute ones pass through
            item['url'] = url
            # Pass the half-filled item to the detail-page callback through meta
            yield scrapy.Request(url, callback=self.parse_details, meta={"item": item})

        # Pagination
        self.page += 1
        if self.page <= 5:  # crawl the first 5 pages
            url = "https://www.meishij.net/hongpei/?&page=" + str(self.page)
            yield scrapy.Request(url, callback=self.parse)

    # Handle the detail page
    def parse_details(self, response):
        item = response.meta["item"]
        item['introduce'] = response.css('.materials p::text').extract()
        yield item
```
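The pagination counter decides how many list pages are requested, and the choice between `<` and `<=` is easy to get wrong by one. The stdlib-only sketch below replays that counter logic outside Scrapy; the helper name `page_urls` and its `inclusive` flag are hypothetical, chosen only for illustration. With a limit of 5, `page < 5` stops after page 4, while `page <= 5` reaches page 5.

```python
BASE = "https://www.meishij.net/hongpei/?&page="


def page_urls(last_page, inclusive=True):
    """Hypothetical helper: list the follow-up page URLs the spider's counter would request."""
    page = 1  # the start URL (https://www.meishij.net/hongpei/) counts as page 1
    urls = []
    while True:
        page += 1  # the spider increments before checking, as in parse()
        keep_going = page <= last_page if inclusive else page < last_page
        if not keep_going:
            break
        urls.append(BASE + str(page))
    return urls


print(page_urls(5))                   # pages 2..5, so 5 pages in total with the start URL
print(page_urls(5, inclusive=False))  # pages 2..4, only 4 pages in total
```

Because `parse` bumps `self.page` once per list page it handles, the counter works here only because each list page is requested from the previous one, one after another.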

2. items.py

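```python
import scrapy


class DetailItem(scrapy.Item):
    name = scrapy.Field()       # recipe name from the list page
    url = scrapy.Field()        # detail-page URL
    introduce = scrapy.Field()  # text lines from the detail page's .materials section
```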
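A Scrapy Item behaves like a dict that only accepts its declared fields, which catches typos such as `item['nmae']` early. To experiment without Scrapy installed, here is a rough stdlib stand-in; the class `RecipeItemSketch` is hypothetical and only approximates `scrapy.Item` (it guards `item[key] = value` assignment, not `dict.update` or the constructor).

```python
class RecipeItemSketch(dict):
    """Hypothetical stand-in for DetailItem: a dict that rejects undeclared keys."""

    fields = ("name", "url", "introduce")

    def __setitem__(self, key, value):
        # Only plain item[key] = value goes through here; dict.update() bypasses it
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


item = RecipeItemSketch()
item["name"] = ["戚风蛋糕"]
try:
    item["price"] = 10  # not a declared field
except KeyError as exc:
    print("rejected:", exc)
```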

3. pipelines.py (removing empty strings)

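```python
import re


class DetailPipeline(object):
    def process_item(self, item, spider):
        # .get() avoids a KeyError if a detail page never filled 'introduce'
        item['introduce'] = self.process_introduce(item.get('introduce', []))
        print(item)
        return item

    def process_introduce(self, introduce):
        # \s already matches \r and \n, so one pattern strips all whitespace
        introduce = [re.sub(r"\s+", "", i) for i in introduce]
        introduce = [i for i in introduce if len(i) > 0]  # drop empty strings from the list
        return introduce
```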
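The cleaning step in `process_introduce` can be tried on its own. The sketch below reproduces it as a plain function and runs it on made-up sample strings (not real data scraped from the site) shaped like the `::text` results of `.materials p`: whitespace-only fragments disappear and line breaks inside a fragment are removed.

```python
import re


def process_introduce(introduce):
    # Remove every whitespace character, including \r\n and spaces inside the text
    cleaned = [re.sub(r"\s+", "", text) for text in introduce]
    # Keep only the strings that still have content
    return [text for text in cleaned if text]


raw = ["\r\n  ", "低筋面粉 100g", " ", "鸡蛋\r\n3个"]  # made-up sample input
print(process_introduce(raw))  # ['低筋面粉100g', '鸡蛋3个']
```

Stripping all whitespace suits Chinese text, which has no spaces between words; for English text it would glue words together, and `text.strip()` would be the safer choice.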

4. settings.py (enabling the pipeline)

```python
ITEM_PIPELINES = {
    'Detail.pipelines.DetailPipeline': 300,
}
```
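The number 300 is the pipeline's order value: Scrapy runs item pipelines from the lowest value to the highest, and values are customarily kept in the 0 to 1000 range. The sketch below only mimics that ordering with `sorted`; the second entry, `ExportCheckPipeline`, is hypothetical and not part of this project.

```python
ITEM_PIPELINES = {
    "Detail.pipelines.ExportCheckPipeline": 800,  # hypothetical second pipeline
    "Detail.pipelines.DetailPipeline": 300,
}


def run_order(pipelines):
    """Return pipeline paths sorted by order value, lowest (runs first) to highest."""
    return [path for path, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]


print(run_order(ITEM_PIPELINES))
```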

5. Saving to a JSON file

```
scrapy crawl jump -o 烘培.json
```

After a successful run, a file named 烘培.json appears in the directory where the command was run.
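A file exported with a `.json` extension holds one JSON array of item objects, so it can be read back with the standard `json` module. In this sketch the helper `load_recipes` is hypothetical, and the demo writes a small made-up stand-in file to a temporary directory; point `load_recipes` at 烘培.json to inspect the real output.

```python
import json
import os
import tempfile


def load_recipes(path):
    """Hypothetical helper: read an exported feed file (a JSON array of items)."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


# Made-up stand-in for the crawler's output, shaped like DetailItem
sample = [{"name": ["戚风蛋糕"], "introduce": ["低筋面粉100g", "鸡蛋3个"]}]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.json")
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(sample, fh, ensure_ascii=False)
    recipes = load_recipes(path)

for recipe in recipes:
    print(recipe["name"], len(recipe["introduce"]))
```

Running the `-o` command again appends to an existing file, which can leave it as invalid JSON; delete the old file first, or use the `-O` (overwrite) option available in newer Scrapy versions.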

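If the Chinese text in the file does not display properly (it shows up as \uXXXX escape sequences), add the following line to settings.py: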

```python
FEED_EXPORT_ENCODING = 'utf-8'
```
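The escape sequences come from JSON's ASCII-safe mode: when no export encoding is set, Scrapy's JSON exporter writes non-ASCII characters as `\uXXXX`, and setting `FEED_EXPORT_ENCODING` turns that off. The sketch below shows the same effect with the standard library's `json.dumps` and its `ensure_ascii` flag; it illustrates the mechanism and is not Scrapy's exporter itself.

```python
import json

record = {"name": ["戚风蛋糕"]}

escaped = json.dumps(record)                      # ensure_ascii=True is the default
readable = json.dumps(record, ensure_ascii=False)  # keeps the characters as-is

print(escaped)   # Chinese characters appear as \uXXXX escapes
print(readable)  # {"name": ["戚风蛋糕"]}
```

Both strings decode to the same data; only the on-disk representation differs, so the setting matters for reading the file by eye, not for programs that parse it.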