1.P3P

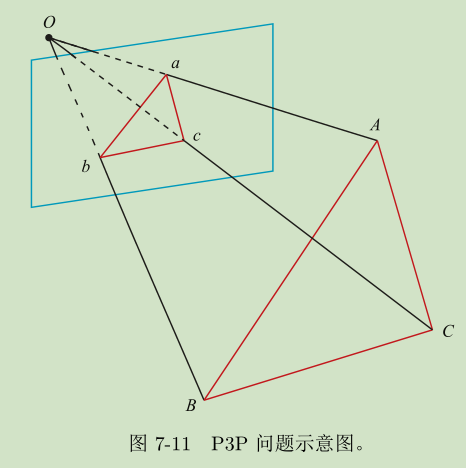

P3P输入数据为三对3D-2D的匹配点,一个单目相机,经过初始化,得到初始的3D点,就可以依次得到后续的姿态和3D点。

ABC是上一时刻求的的3D点, abc是与上一次时刻的匹配点。利用相似原理,可求出abc在相机坐标下的3D坐标,最后就可以把问题转换为3D-3D坐标的估计问题。

- 问题:只利用3个点,不能运用多余的信息;受噪声影响,存在无匹配,容易算法失败

通常做法是用P3P估计出相机位姿,然后再构建最小二乘优化问题对估计值进行优化调整(Bundle Adjustment)

2.使用Pnp来求解

int main(int argc, char **argv) {

if (argc != 5) {

cout << "usage: pose_estimation_3d2d img1 img2 depth1 depth2" << endl;

return 1;

}

//-- 读取图像

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);

assert(img_1.data && img_2.data && "Can not load images!");

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

//获取匹配点

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);

cout << "一共找到了" << matches.size() << "组匹配点" << endl;

// 建立3D点

Mat d1 = imread(argv[3], CV_LOAD_IMAGE_UNCHANGED); // 第一张图的深度图为16位无符号数,单通道图像

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point3f> pts_3d;

vector<Point2f> pts_2d;

for (DMatch m:matches) {

//取出第一张图匹配点的深度数据

ushort d = d1.ptr<unsigned short>(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];

if (d == 0) // bad depth

continue;

float dd = d / 5000.0;

//将图1的匹配点的像素坐标转相机归一化坐标

Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);

//转换为相机坐标的3D点

pts_3d.push_back(Point3f(p1.x * dd, p1.y * dd, dd));

//获取图2的匹配点的像素坐标

pts_2d.push_back(keypoints_2[m.trainIdx].pt);

}

cout << "3d-2d pairs: " << pts_3d.size() << endl;

/**************pnp**************/

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

Mat r, t;

solvePnP(pts_3d, pts_2d, K, Mat(), r, t, false); // 调用OpenCV 的 PnP 求解,可选择EPNP,DLS等方法

Mat R;

cv::Rodrigues(r, R); // r为旋转向量形式,用Rodrigues公式转换为矩阵

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp in opencv cost time: " << time_used.count() << " seconds." << endl;

cout << "R=" << endl << R << endl;

cout << "t=" << endl << t << endl;

}

3.使用高斯牛顿法来优化求解

1.构建最小二乘问题:

\(T^{*}=argmin\frac{1}{2} \displaystyle \sum^{n}_{i=1}||u_{i}-\frac{1}{s_{i}}KTP_{i}||^{2}_{2}\)

误差是观测像素点-投影像素点,称为重投影误差

使用高斯牛顿法的重点在于求误差项对于每个优化变量的导数

线性化:\(e(x+\Delta x) \approx e(x) + J^{T}\Delta x\)

2.求雅可比矩阵:

1.使用非齐次坐标,像素误差e是2维,x为相机位姿是6维,\(J^{T}\)是一个2*6的矩阵。

2.将P变换到相机坐标下为\(P^{'}=[X^{'},Y^{'},Z^{'}]^{T}\),则:\(su=KP^{'}\)

3.消去s得:\(u=f_{x}\frac{X^{'}}{Z^{'}}+c_{x}\) \(v=f_{y}\frac{Y^{'}}{Z^{'}}+c_{y}\)

4.对T左乘扰动量\(\delta \xi\),考虑e的变化关于扰动量的导数。则\(\frac{\partial e}{\partial \delta \xi}= \frac{\partial e}{\partial P^{'}} \frac{\partial P^{'}}{\partial \delta \xi}\)

5.容易得出\(\frac{\partial e}{\partial P^{'}}\) = \(-\left[ \begin{matrix} \frac{f_{x}}{Z^{'}} & 0 & -\frac{f_{x}X^{'}}{Z^{'2}} \\ 0 & \frac{f_{y}}{Z^{'}} & -\frac{f_{y}Y^{'}}{Z^{'2}} \end{matrix} \right]\)

6.由李代数导数得:\(\frac{\partial (TP)}{\partial \delta \xi} = \left[ \begin{matrix} I & -P^{' \Lambda} \\ 0^{T} & 0^{T} \end{matrix} \right]\)

7.在\(P^{'}\)的定义中,取了前三维,所以\(\frac{\partial P^{'}}{\partial \delta \xi} = \left[ \begin{matrix} I & -P^{' \Lambda} \end{matrix} \right]\)

8.将两个式子相乘就可以得到雅可比矩阵:

\(\frac{\partial e}{\partial \delta \xi} = - \left[ \begin{matrix} \frac{f_{x}}{Z^{'}} & 0 & -\frac{f_{x}X^{'}}{Z^{'2}} & -\frac{f_{x}X^{'}Y^{'}}{Z^{'2}} & f_{x} + \frac{f_{x}X^{'2}}{Z^{'2}} &- \frac{f_{x}Y^{'}}{Z^{'}} \\ 0 & \frac{f_{y}}{Z^{'}} & -\frac{f_{y}Y^{'}}{Z^{'2}} & -f_{y} - \frac{f_{y}Y^{'2}}{Z^{'2}} & \frac{f_{y}X^{'}Y^{'}}{Z^{'2}} & \frac{f_{y}X^{'}}{Z^{'}} \end{matrix} \right]\)

3.程序:

void bundleAdjustmentGaussNewton(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose) {

typedef Eigen::Matrix<double, 6, 1> Vector6d;

const int iterations = 10;

double cost = 0, lastCost = 0;

double fx = K.at<double>(0, 0);

double fy = K.at<double>(1, 1);

double cx = K.at<double>(0, 2);

double cy = K.at<double>(1, 2);

for (int iter = 0; iter < iterations; iter++) {

Eigen::Matrix<double, 6, 6> H = Eigen::Matrix<double, 6, 6>::Zero();

Vector6d b = Vector6d::Zero();

cost = 0;

// compute cost

for (int i = 0; i < points_3d.size(); i++) {

//世界坐标转为相机坐标

Eigen::Vector3d pc = pose * points_3d[i];

double inv_z = 1.0 / pc[2];

double inv_z2 = inv_z * inv_z;

//相机坐标转为像素坐标

Eigen::Vector2d proj(fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy);

Eigen::Vector2d e = points_2d[i] - proj; //误差,观测值-预测值,反之取负

cost += e.squaredNorm(); //误差里的每项平方和

Eigen::Matrix<double, 2, 6> J;

//雅可比矩阵赋值

J << -fx * inv_z,

0,

fx * pc[0] * inv_z2,

fx * pc[0] * pc[1] * inv_z2,

-fx - fx * pc[0] * pc[0] * inv_z2,

fx * pc[1] * inv_z,

0,

-fy * inv_z,

fy * pc[1] * inv_z2,

fy + fy * pc[1] * pc[1] * inv_z2,

-fy * pc[0] * pc[1] * inv_z2,

-fy * pc[0] * inv_z;

H += J.transpose() * J;

b += -J.transpose() * e;

}

Vector6d dx;

dx = H.ldlt().solve(b);

if (isnan(dx[0])) {

cout << "result is nan!" << endl;

break;

}

if (iter > 0 && cost >= lastCost) {

// cost increase, update is not good

cout << "cost: " << cost << ", last cost: " << lastCost << endl;

break;

}

// update your estimation

//更新位姿

pose = Sophus::SE3d::exp(dx) * pose;

lastCost = cost;

cout << "iteration " << iter << " cost=" << std::setprecision(12) << cost << endl;

if (dx.norm() < 1e-6) {

// converge

break;

}

}

cout << "pose by g-n: \n" << pose.matrix() << endl;

}