
I. The settings file: overview and usage

1. Overview of settings.py

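```python
# Project name
BOT_NAME = 'guba_scrapy'

# Modules where Scrapy looks for spiders, and the module where
# `scrapy genspider` creates new ones
SPIDER_MODULES = ['guba_scrapy.spiders']
NEWSPIDER_MODULE = 'guba_scrapy.spiders'

# Browser identification (User-Agent header)
USER_AGENT = 'guba_scrapy (+http://www.yourdomain.com)'

# Whether to obey robots.txt rules; the `scrapy startproject` template
# sets this to True (Scrapy's built-in default is False)
ROBOTSTXT_OBEY = True

# Maximum concurrent requests (default: 16). Bigger is not always better;
# raise it only if the target site has no anti-scraping measures
CONCURRENT_REQUESTS = 32

# Download delay: wait about 3 seconds between requests to the same site
# (by default the actual wait is randomized between 0.5x and 1.5x this value)
DOWNLOAD_DELAY = 3

# Used together with DOWNLOAD_DELAY: maximum concurrent requests per domain
# and per IP. If the per-IP value is non-zero it takes precedence, and the
# delay is then enforced per IP instead of per domain
CONCURRENT_REQUESTS_PER_DOMAIN = 16
CONCURRENT_REQUESTS_PER_IP = 16

# Whether cookies are enabled (default: enabled, so requests send back the
# cookies received earlier); disabled here
COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default); the Telnet console is an extension
# TELNETCONSOLE_ENABLED = False

# Default request headers; a User-Agent can also be set here
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
}

# Spider middlewares: the key is the class path, the value is its order
# (lower values are closer to the engine)
SPIDER_MIDDLEWARES = {
    'guba_scrapy.middlewares.GubaScrapySpiderMiddleware': 543,
}

# Downloader middlewares: same format; lower values are closer to the engine,
# so their process_request() runs first
DOWNLOADER_MIDDLEWARES = {
    'guba_scrapy.middlewares.GubaScrapyDownloaderMiddleware': 543,
}

# Extensions; mapping one to None disables it (here the Telnet console)
EXTENSIONS = {
    'scrapy.extensions.telnet.TelnetConsole': None,
}

# Item pipelines: same format; lower values (customarily 0-1000) run first
ITEM_PIPELINES = {
    'guba_scrapy.pipelines.GubaScrapyPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default): automatic throttling
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
# AUTOTHROTTLE_ENABLED = True
# The initial download delay
# AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
# AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
# AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
# AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
# HTTPCACHE_ENABLED = True
# HTTPCACHE_EXPIRATION_SECS = 0
# HTTPCACHE_DIR = 'httpcache'
# HTTPCACHE_IGNORE_HTTP_CODES = []
# HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

# Logging: minimum level to output, and the file to write to (日志 means "log")
LOG_LEVEL = 'WARNING'
LOG_FILE = './日志.log'
```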
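The "lower value runs first" rule for the middleware and pipeline dicts can be made concrete for downloader middlewares: Scrapy calls process_request() in increasing order of these values and process_response() in decreasing order, and a value of None disables a component. The sketch below is a simplification that only sorts the project dict (real Scrapy first merges it with DOWNLOADER_MIDDLEWARES_BASE, the built-in middlewares). The three extra middleware paths are hypothetical.

```python
# Simplified sketch (not Scrapy's source): how the numbers in
# DOWNLOADER_MIDDLEWARES translate into call order.
DOWNLOADER_MIDDLEWARES = {
    "guba_scrapy.middlewares.GubaScrapyDownloaderMiddleware": 543,
    "guba_scrapy.middlewares.HypotheticalProxyMiddleware": 100,      # hypothetical
    "guba_scrapy.middlewares.HypotheticalRetryMiddleware": 800,      # hypothetical
    "guba_scrapy.middlewares.HypotheticalDisabledMiddleware": None,  # None disables it
}


def call_orders(middlewares):
    """Return (process_request order, process_response order)."""
    # Components mapped to None are dropped entirely.
    enabled = {path: order for path, order in middlewares.items() if order is not None}
    # process_request(): increasing order value, lowest first.
    request_order = sorted(enabled, key=enabled.get)
    # process_response(): the same chain walked in reverse.
    response_order = request_order[::-1]
    return request_order, response_order


request_order, response_order = call_orders(DOWNLOADER_MIDDLEWARES)
print("process_request :", [p.rsplit(".", 1)[1] for p in request_order])
print("process_response:", [p.rsplit(".", 1)[1] for p in response_order])
```

Walking the response chain in reverse means the middleware that touched a request first is the last to see its response.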

2. Defining your own variables in the settings file

Project-wide values are usually kept in the settings file, with names written in uppercase.

For example, define one in settings.py:

```python
MYSQL_HOST = '127.0.0.1'
```

We can then use it in a spider file; there are two ways to do so.
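Two approaches commonly used for this (assumed here to be the two meant) are reading the value from the spider's settings object inside a spider method, `self.settings.get('MYSQL_HOST')`, and a plain import, `from guba_scrapy.settings import MYSQL_HOST`. Only the first depends on the uppercase naming: when Scrapy loads settings.py into its settings object, it copies only attributes whose names are uppercase. The sketch below is a simplified standard-library re-implementation of that filtering (Scrapy does it in `Settings.setmodule()`); the module contents and the lowercase `mysql_port` counter-example are hypothetical.

```python
import types

# Stand-in for guba_scrapy/settings.py (hypothetical contents)
settings_module = types.ModuleType("settings")
settings_module.MYSQL_HOST = "127.0.0.1"
settings_module.mysql_port = 3306  # lowercase name: will be skipped


def load_uppercase(module):
    """Copy only attributes whose names are uppercase, as Scrapy does for settings.py."""
    return {name: getattr(module, name) for name in dir(module) if name.isupper()}


loaded = load_uppercase(settings_module)
print(loaded)
```

A lowercase name is still importable directly from the module, but it never reaches `self.settings`.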

II. Logging

1. Using the built-in logging module directly

First, set the minimum level to display in settings.py:

```python
LOG_LEVEL = 'WARNING'
```
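LOG_LEVEL is a threshold over the standard levels (DEBUG < INFO < WARNING < ERROR < CRITICAL; Scrapy's default is DEBUG): records below it are dropped. As a rough model, Scrapy applies the value to the log handler it installs, and the standard-library sketch below does the same with a handler that writes to a string buffer. The logger name `level_demo` is hypothetical.

```python
import io
import logging

# Collect output in a string buffer so the effect of the level is visible.
buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))
handler.setLevel(logging.WARNING)  # filter at the handler, like LOG_LEVEL = 'WARNING'

demo = logging.getLogger("level_demo")  # hypothetical logger name
demo.setLevel(logging.DEBUG)            # the logger itself passes everything on
demo.propagate = False                  # keep the demo isolated from the root logger
demo.addHandler(handler)

demo.debug("debug message")      # dropped
demo.info("info message")        # dropped
demo.warning("warning message")  # kept
demo.error("error message")      # kept

print(buffer.getvalue())
```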

Then, in the spider file, import logging and log the item:

```python
import logging

import scrapy


class BaiduSpiderSpider(scrapy.Spider):
    name = 'baidu_spider'
    allowed_domains = []
    start_urls = ['http://www.baidu.com']

    def parse(self, response):
        item = {}
        item['from_spider'] = 'baidu_spider'
        item['url'] = response.url
        # Module-level logging call: goes through the root logger
        logging.warning(item)
        return item
```

Run the spider:

```bash
scrapy crawl baidu_spider
```

![Console output of logging.warning()](https://img-blog.csdnimg.cn/20200807224007135.png)

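The output shows `root` before WARNING, meaning the message was logged through the root logger. With several spider files all logging this way, every line says root and we cannot tell which file a problem came from. So how do we replace root with the name of the module that logged the message?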

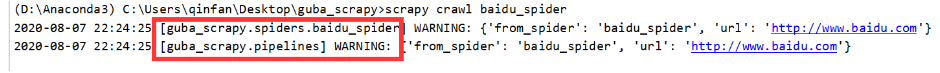
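The `root` comes from the logger name: module-level functions such as logging.warning() log through the root logger, and Scrapy's default LOG_FORMAT, `'%(asctime)s [%(name)s] %(levelname)s: %(message)s'`, prints that name in brackets. A standard-library sketch that reproduces the line, with the timestamp left out so the output is stable:

```python
import io
import logging

buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
# Scrapy's default LOG_FORMAT without the %(asctime)s timestamp
handler.setFormatter(logging.Formatter("[%(name)s] %(levelname)s: %(message)s"))

root = logging.getLogger()  # the root logger
root.addHandler(handler)

item = {"from_spider": "baidu_spider", "url": "http://www.baidu.com"}
logging.warning(item)  # module-level helper: logs through the root logger

root.removeHandler(handler)
print(buffer.getvalue())
```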

2. The getLogger() function of the logging module

To see clearly which file emitted each log message, use logging.getLogger(). First, modify baidu_spider.py:

```python
import logging

import scrapy

# __name__ is this module's dotted path, e.g. guba_scrapy.spiders.baidu_spider
logger = logging.getLogger(__name__)


class BaiduSpiderSpider(scrapy.Spider):
    name = 'baidu_spider'
    allowed_domains = []
    start_urls = ['http://www.baidu.com']

    def parse(self, response):
        item = {}
        item['from_spider'] = 'baidu_spider'
        item['url'] = response.url
        logger.warning(item)
        return item
```

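Then modify the pipeline file:

```python
import logging

from itemadapter import ItemAdapter

logger = logging.getLogger(__name__)


class GubaScrapyPipeline:
    def process_item(self, item, spider):
        # Only log items coming from baidu_spider
        if spider.name == 'baidu_spider':
            logger.warning(item)
        # Always return the item so later pipelines receive it
        return item
```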

Run it again: each log line now shows which module it came from.
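This works because getLogger(__name__) creates a logger named after the module's dotted path, and its records propagate up to the handler on the root logger, which prints `%(name)s`. In the standard-library sketch below the names are written out by hand, assuming the module paths guba_scrapy.spiders.baidu_spider and guba_scrapy.pipelines, because inside a single script `__name__` would just be `'__main__'`.

```python
import io
import logging

buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter("[%(name)s] %(levelname)s: %(message)s"))
logging.getLogger().addHandler(handler)  # one handler on the root logger

# Assumed module paths; in the real files, getLogger(__name__) yields these.
spider_logger = logging.getLogger("guba_scrapy.spiders.baidu_spider")
pipeline_logger = logging.getLogger("guba_scrapy.pipelines")

# Both records propagate to the root handler but keep their own names.
spider_logger.warning("from the spider")
pipeline_logger.warning("from the pipeline")

logging.getLogger().removeHandler(handler)
print(buffer.getvalue())
```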

3. Saving the log to a local file

Configure settings.py:

```python
LOG_LEVEL = 'WARNING'
LOG_FILE = './日志.log'
```
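With LOG_FILE set, Scrapy writes records through a file handler instead of printing them to the console, using LOG_ENCODING (utf-8 by default), so a non-ASCII file name such as 日志.log is fine. A standard-library approximation; it writes into a temporary directory instead of the project folder, and the logger name `file_demo` is hypothetical:

```python
import logging
import os
import tempfile

# Temporary location standing in for './日志.log'
log_path = os.path.join(tempfile.mkdtemp(), "日志.log")

file_handler = logging.FileHandler(log_path, encoding="utf-8")  # appends (mode "a") by default
file_handler.setLevel(logging.WARNING)
file_handler.setFormatter(logging.Formatter("[%(name)s] %(levelname)s: %(message)s"))

logger = logging.getLogger("file_demo")  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(file_handler)

logger.info("not written: below WARNING")
logger.warning("written to the log file")

logger.removeHandler(file_handler)
file_handler.close()

with open(log_path, encoding="utf-8") as f:
    contents = f.read()
print(contents)
```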

Run the spider again: a log file is created locally and the log messages are saved to it.

![Log file generated in the project folder](https://img-blog.csdnimg.cn/20200807224033421.png)

4. Hiding the log while running

Add --nolog to the command:

```bash
scrapy crawl baidu_spider --nolog
```
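The --nolog option works by setting LOG_ENABLED to False, so the same effect can be made permanent in settings.py, or applied to a single run with `-s LOG_ENABLED=False`. If the goal is only to hide Scrapy's routine INFO and DEBUG messages while keeping warnings, raising LOG_LEVEL as above is enough.

```python
# settings.py: equivalent of always passing --nolog
LOG_ENABLED = False
```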