一、卡尔曼滤波器概述

大多数现代系统都有许多传感器,它们根据一系列测量来估计隐藏(未知)状态。例如,GPS 接收器提供位置和速度估计,其中位置和速度是隐藏状态,而卫星信号到达的差分时间是测量值。

跟踪和控制系统的最大挑战之一是在存在不确定性的情况下提供对隐藏状态的准确和精确的估计。在 GPS 接收器中,测量不确定性取决于许多外部因素,例如热噪声、大气影响、卫星位置的微小变化、接收器时钟精度等等。

卡尔曼滤波器,也称为线性二次估计( LQE ),是最重要和最常见的估计算法之一。卡尔曼滤波器根据不准确和不确定的测量结果生成隐藏变量的估计值。此外,卡尔曼滤波器根据过去的估计预测未来的系统状态。

卡尔曼滤波器主要(但不完全)是由鲁道夫·卡尔曼(Rudolf Kalman)在20世纪50年代后期开发出来的一种算法。卡尔曼滤波器在许多领域都有实际应用,尤其是各种交通工具的导航系统。

下面的文章比较好的阐述了卡尔曼滤波器。

The background break![]() https://www.kalmanfilter.net/background.html 卡尔曼滤波器递归地对有噪声的输入数据流进行操作,以产生底层系统状态的统计最优估计。在计算机视觉背景下,卡尔曼滤波器可以对跟踪物体的位置进行平滑估计。

https://www.kalmanfilter.net/background.html 卡尔曼滤波器递归地对有噪声的输入数据流进行操作,以产生底层系统状态的统计最优估计。在计算机视觉背景下,卡尔曼滤波器可以对跟踪物体的位置进行平滑估计。

想象桌子上有一个红色小球,并想象有一个摄像头对着此场景。将球作为要跟踪的主体,然后用手指轻弹小球。球将根据运动规律开始在桌子上滚动。如果球在特定的方向上以1米/秒的速度滚动,那么很容易估计出球在1秒后的位置:它将在1米远的地方。卡尔曼滤波器应用这样的规律,根据先前收集到的帧的跟踪结果来预测当前视频帧中物体的位置。卡尔曼滤波器本身并没有收集这些跟踪结果,但是它会根据来自另一种算法(如MeanShift)的跟踪结果更新物体的运动模型。自然,卡尔曼滤波器无法预见作用在球上的力(例如与桌上的铅笔的碰撞),但是它可以在事后根据跟踪结果更新它的运动模型。通过使用卡尔曼滤波器,我们可以获得比单独跟踪的结果更稳定、更符合运动规律的估计结果。

卡尔曼滤波器算法有两个阶段:

预测(在第一阶段):卡尔曼滤波器使用计算到当前时间点的协方差估计物体的新位置。

更新(在第二阶段):卡尔曼滤波器记录物体的位置,并调整协方差用于下一个计算周期。

为了平滑跟踪物体,首先调用predict方法来估计物体的位置,然后使用correct方法来指示卡尔曼滤波器根据另一种算法(如MeanShift)的新的跟踪结果调整其计算。

二、使用标准卡尔曼滤波器的示例

#include "opencv2/video/tracking.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/core/cvdef.h"

#include <stdio.h>

using namespace cv;

static inline Point calcPoint(Point2f center, double R, double angle)

{

return center + Point2f((float)cos(angle), (float)-sin(angle))*(float)R;

}

static void help()

{

printf( "\nExample of c calls to OpenCV's Kalman filter.\n"

" Tracking of rotating point.\n"

" Point moves in a circle and is characterized by a 1D state.\n"

" state_k+1 = state_k + speed + process_noise N(0, 1e-5)\n"

" The speed is constant.\n"

" Both state and measurements vectors are 1D (a point angle),\n"

" Measurement is the real state + gaussian noise N(0, 1e-1).\n"

" The real and the measured points are connected with red line segment,\n"

" the real and the estimated points are connected with yellow line segment,\n"

" the real and the corrected estimated points are connected with green line segment.\n"

" (if Kalman filter works correctly,\n"

" the yellow segment should be shorter than the red one and\n"

" the green segment should be shorter than the yellow one)."

"\n"

" Pressing any key (except ESC) will reset the tracking.\n"

" Pressing ESC will stop the program.\n"

);

}

int main(int, char**)

{

help();

Mat img(500, 500, CV_8UC3);

KalmanFilter KF(2, 1, 0);

Mat state(2, 1, CV_32F); /* (phi, delta_phi) */

Mat processNoise(2, 1, CV_32F);

Mat measurement = Mat::zeros(1, 1, CV_32F);

char code = (char)-1;

for(;;)

{

img = Scalar::all(0);

state.at<float>(0) = 0.0f;

state.at<float>(1) = 2.f * (float)CV_PI / 6;

KF.transitionMatrix = (Mat_<float>(2, 2) << 1, 1, 0, 1);

setIdentity(KF.measurementMatrix);

setIdentity(KF.processNoiseCov, Scalar::all(1e-5));

setIdentity(KF.measurementNoiseCov, Scalar::all(1e-1));

setIdentity(KF.errorCovPost, Scalar::all(1));

randn(KF.statePost, Scalar::all(0), Scalar::all(0.1));

for(;;)

{

Point2f center(img.cols*0.5f, img.rows*0.5f);

float R = img.cols/3.f;

double stateAngle = state.at<float>(0);

Point statePt = calcPoint(center, R, stateAngle);

Mat prediction = KF.predict();

double predictAngle = prediction.at<float>(0);

Point predictPt = calcPoint(center, R, predictAngle);

// generate measurement

randn( measurement, Scalar::all(0), Scalar::all(KF.measurementNoiseCov.at<float>(0)));

measurement += KF.measurementMatrix*state;

double measAngle = measurement.at<float>(0);

Point measPt = calcPoint(center, R, measAngle);

// correct the state estimates based on measurements

// updates statePost & errorCovPost

KF.correct(measurement);

double improvedAngle = KF.statePost.at<float>(0);

Point improvedPt = calcPoint(center, R, improvedAngle);

// plot points

img = img * 0.2;

drawMarker(img, measPt, Scalar(0, 0, 255), cv::MARKER_SQUARE, 5, 2);

drawMarker(img, predictPt, Scalar(0, 255, 255), cv::MARKER_SQUARE, 5, 2);

drawMarker(img, improvedPt, Scalar(0, 255, 0), cv::MARKER_SQUARE, 5, 2);

drawMarker(img, statePt, Scalar(255, 255, 255), cv::MARKER_STAR, 10, 1);

// forecast one step

Mat test = Mat(KF.transitionMatrix*KF.statePost);

drawMarker(img, calcPoint(center, R, Mat(KF.transitionMatrix*KF.statePost).at<float>(0)),

Scalar(255, 255, 0), cv::MARKER_SQUARE, 12, 1);

line( img, statePt, measPt, Scalar(0,0,255), 1, LINE_AA, 0 );

line( img, statePt, predictPt, Scalar(0,255,255), 1, LINE_AA, 0 );

line( img, statePt, improvedPt, Scalar(0,255,0), 1, LINE_AA, 0 );

randn( processNoise, Scalar(0), Scalar::all(sqrt(KF.processNoiseCov.at<float>(0, 0))));

state = KF.transitionMatrix*state + processNoise;

imshow( "Kalman", img );

code = (char)waitKey(1000);

if( code > 0 )

break;

}

if( code == 27 || code == 'q' || code == 'Q' )

break;

}

return 0;

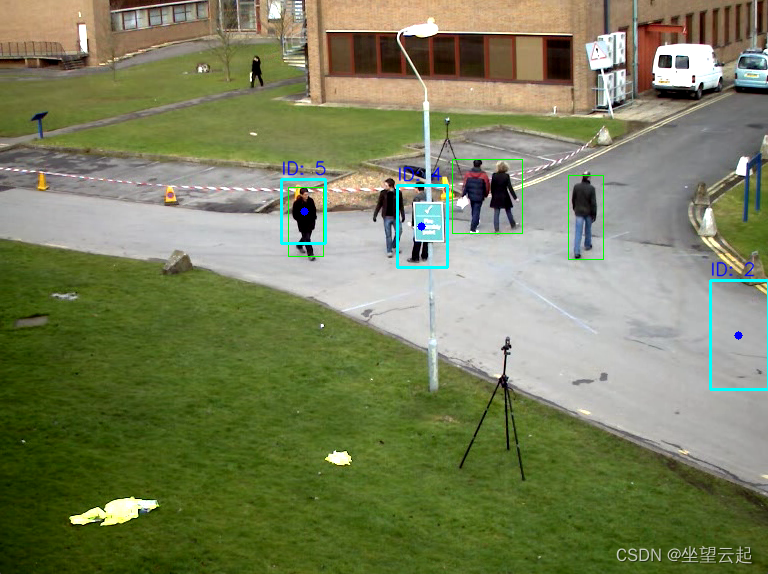

}三、MeanShift结合卡尔曼滤波器

import cv2

import numpy as np

OPENCV_MAJOR_VERSION = int(cv2.__version__.split('.')[0])

class Pedestrian():

"""A tracked pedestrian with a state including an ID, tracking

window, histogram, and Kalman filter.

"""

def __init__(self, id, hsv_frame, track_window):

self.id = id

self.track_window = track_window

self.term_crit = \

(cv2.TERM_CRITERIA_COUNT | cv2.TERM_CRITERIA_EPS, 10, 1)

# Initialize the histogram.

x, y, w, h = track_window

roi = hsv_frame[y:y+h, x:x+w]

roi_hist = cv2.calcHist([roi], [0], None, [16], [0, 180])

self.roi_hist = cv2.normalize(roi_hist, roi_hist, 0, 255,

cv2.NORM_MINMAX)

# Initialize the Kalman filter.

self.kalman = cv2.KalmanFilter(4, 2)

self.kalman.measurementMatrix = np.array(

[[1, 0, 0, 0],

[0, 1, 0, 0]], np.float32)

self.kalman.transitionMatrix = np.array(

[[1, 0, 1, 0],

[0, 1, 0, 1],

[0, 0, 1, 0],

[0, 0, 0, 1]], np.float32)

self.kalman.processNoiseCov = np.array(

[[1, 0, 0, 0],

[0, 1, 0, 0],

[0, 0, 1, 0],

[0, 0, 0, 1]], np.float32) * 0.03

cx = x+w/2

cy = y+h/2

self.kalman.statePre = np.array(

[[cx], [cy], [0], [0]], np.float32)

self.kalman.statePost = np.array(

[[cx], [cy], [0], [0]], np.float32)

def update(self, frame, hsv_frame):

back_proj = cv2.calcBackProject(

[hsv_frame], [0], self.roi_hist, [0, 180], 1)

ret, self.track_window = cv2.meanShift(

back_proj, self.track_window, self.term_crit)

x, y, w, h = self.track_window

center = np.array([x+w/2, y+h/2], np.float32)

prediction = self.kalman.predict()

estimate = self.kalman.correct(center)

center_offset = estimate[:,0][:2] - center

self.track_window = (x + int(center_offset[0]),

y + int(center_offset[1]), w, h)

x, y, w, h = self.track_window

# Draw the predicted center position as a blue circle.

cv2.circle(frame, (int(prediction[0]), int(prediction[1])),

4, (255, 0, 0), -1)

# Draw the corrected tracking window as a cyan rectangle.

cv2.rectangle(frame, (x,y), (x+w, y+h), (255, 255, 0), 2)

# Draw the ID above the rectangle in blue text.

cv2.putText(frame, 'ID: %d' % self.id, (x, y-5),

cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 0, 0),

1, cv2.LINE_AA)

def main():

cap = cv2.VideoCapture('pedestrians.avi')

# Create the KNN background subtractor.

bg_subtractor = cv2.createBackgroundSubtractorKNN()

history_length = 20

bg_subtractor.setHistory(history_length)

erode_kernel = cv2.getStructuringElement(

cv2.MORPH_ELLIPSE, (3, 3))

dilate_kernel = cv2.getStructuringElement(

cv2.MORPH_ELLIPSE, (8, 3))

pedestrians = []

num_history_frames_populated = 0

while True:

grabbed, frame = cap.read()

if (grabbed is False):

break

# Apply the KNN background subtractor.

fg_mask = bg_subtractor.apply(frame)

# Let the background subtractor build up a history.

if num_history_frames_populated < history_length:

num_history_frames_populated += 1

continue

# Create the thresholded image.

_, thresh = cv2.threshold(fg_mask, 127, 255,

cv2.THRESH_BINARY)

cv2.erode(thresh, erode_kernel, thresh, iterations=2)

cv2.dilate(thresh, dilate_kernel, thresh, iterations=2)

# Detect contours in the thresholded image.

if OPENCV_MAJOR_VERSION >= 4:

# OpenCV 4 or a later version is being used.

contours, hier = cv2.findContours(

thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

else:

# OpenCV 3 or an earlier version is being used.

# cv2.findContours has an extra return value.

# The extra return value is the thresholded image, which

# is unchanged, so we can ignore it.

_, contours, hier = cv2.findContours(

thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

hsv_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

# Draw green rectangles around large contours.

# Also, if no pedestrians are being tracked yet, create some.

should_initialize_pedestrians = len(pedestrians) == 0

id = 0

for c in contours:

if cv2.contourArea(c) > 500:

(x, y, w, h) = cv2.boundingRect(c)

cv2.rectangle(frame, (x, y), (x+w, y+h),

(0, 255, 0), 1)

if should_initialize_pedestrians:

pedestrians.append(

Pedestrian(id, hsv_frame,

(x, y, w, h)))

id += 1

# Update the tracking of each pedestrian.

for pedestrian in pedestrians:

pedestrian.update(frame, hsv_frame)

cv2.imshow('Pedestrians Tracked', frame)

k = cv2.waitKey(110)

if k == 27: # Escape

break

if __name__ == "__main__":

main()并不精准,适合参考