Implementing a Convolutional Neural Network (CNN)

Structure and Computation

A CNN mainly consists of the following components:

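Input layer: receives the input data.

Convolution layer (CONV): uses convolution kernels for feature extraction and feature mapping.

Activation layer: nonlinear mapping (ReLU).

Pooling layer (POOL): downsamples to reduce dimensionality.

Rasterization: unrolls the pixels so they can be fully connected to the fully connected layer; in some cases this layer can be omitted.

Fully connected layer (Affine layer / Fully Connected layer, FC): performs the fitting at the tail of the network and reduces the loss of feature information.

Activation layer: nonlinear mapping (ReLU).

Output layer: produces the final result.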

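For how the output shape after a convolution is computed, see my earlier post: "Computation in Convolutional Neural Networks".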

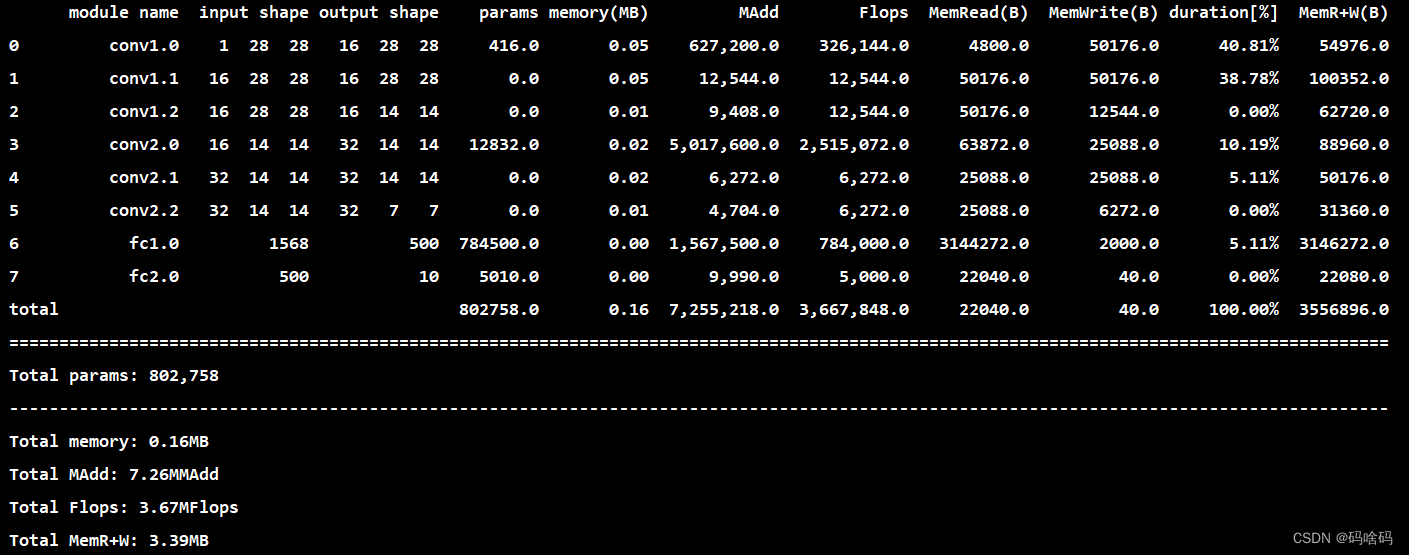

| Layer | Input | Process | Output |
|---|---|---|---|
| Convolution | $1 \times 28 \times 28$ | in_channels=1, out_channels=16, kernel_size=5, stride=1, padding=2; output length $= (28 + 2 \times 2 - 5)/1 + 1 = 28$ | $16 \times 28 \times 28$ |
| ReLU | $16 \times 28 \times 28$ | element-wise, shape unchanged | $16 \times 28 \times 28$ |
| MaxPool2d | $16 \times 28 \times 28$ | channels unchanged (16), kernel_size=2, stride=2, padding=0; output length $= (28 - 2)/2 + 1 = 14$ | $16 \times 14 \times 14$ |
| Convolution | $16 \times 14 \times 14$ | in_channels=16, out_channels=32, kernel_size=5, stride=1, padding=2; output length $= (14 + 2 \times 2 - 5)/1 + 1 = 14$ | $32 \times 14 \times 14$ |
| ReLU | $32 \times 14 \times 14$ | element-wise, shape unchanged | $32 \times 14 \times 14$ |
| MaxPool2d | $32 \times 14 \times 14$ | channels unchanged (32), kernel_size=2, stride=2, padding=0; output length $= (14 - 2)/2 + 1 = 7$ | $32 \times 7 \times 7$ |
| Fully connected | $32 \times 7 \times 7$ | flattened to $32 \times 7 \times 7 = 1568$ features; Linear(1568, 500), then Linear(500, 10) | $10$ |
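The output lengths in the table can be checked by hand with the usual formula, floor((n + 2·padding − kernel_size) / stride) + 1. The snippet below is a minimal sketch of that arithmetic; the helper name `out_len` is made up for illustration and is not part of PyTorch.

```python
def out_len(n, kernel_size, stride=1, padding=0):
    """Output length along one axis for a convolution or pooling window."""
    return (n + 2 * padding - kernel_size) // stride + 1


# Walk the 28x28 input through the four size-changing layers of the table.
sizes = []
n = 28
n = out_len(n, kernel_size=5, stride=1, padding=2)  # first convolution
sizes.append(n)
n = out_len(n, kernel_size=2, stride=2, padding=0)  # first max pooling
sizes.append(n)
n = out_len(n, kernel_size=5, stride=1, padding=2)  # second convolution
sizes.append(n)
n = out_len(n, kernel_size=2, stride=2, padding=0)  # second max pooling
sizes.append(n)

flat_features = 32 * n * n  # input width of the first fully connected layer
print(sizes, flat_features)
```

With kernel_size=5 and padding=2 at stride 1, the convolution keeps the spatial size, so only the pooling layers shrink the feature map.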

Code

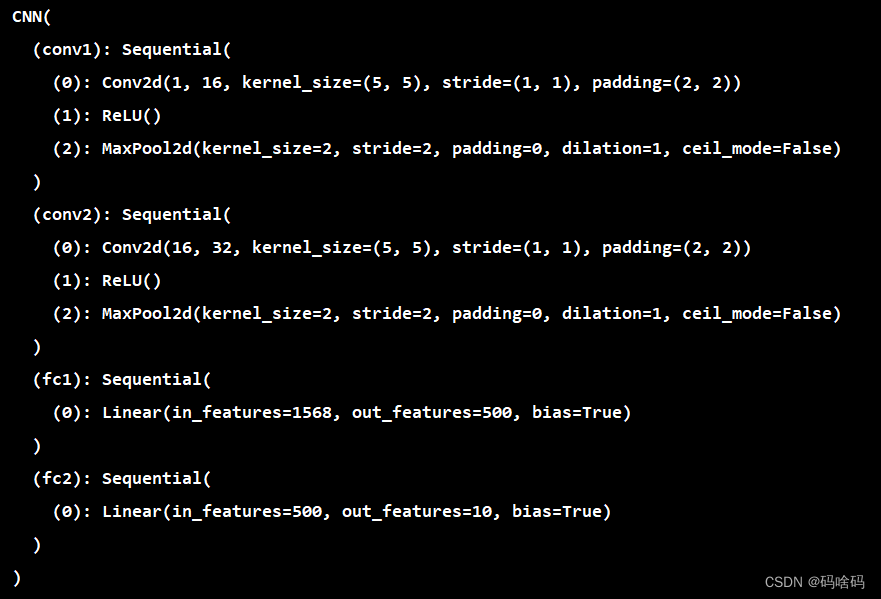

CNN

    import torch
    from torch import nn


    class CNN(nn.Module):
        def __init__(self):
            super(CNN, self).__init__()
            self.conv1 = nn.Sequential(  # input shape (1, 28, 28)
                nn.Conv2d(
                    in_channels=1,    # grayscale input has a single channel
                    out_channels=16,  # number of filters
                    kernel_size=5,    # filter size
                    stride=1,         # filter movement/step
                    padding=2,        # keeps the 28x28 spatial size
                ),  # output shape (16, 28, 28)
                nn.ReLU(),  # activation, as listed in the table
                nn.MaxPool2d(kernel_size=2, stride=2, padding=0),  # max over each 2x2 area, output shape (16, 14, 14)
            )
            self.conv2 = nn.Sequential(  # input shape (16, 14, 14)
                nn.Conv2d(16, 32, 5, 1, 2),  # output shape (32, 14, 14)
                nn.ReLU(),  # activation, as listed in the table
                nn.MaxPool2d(2, stride=2),  # output shape (32, 7, 7)
            )
            # fully connected units (the flatten step happens in forward)
            self.fc1 = nn.Sequential(
                nn.Linear(32 * 7 * 7, 500),
                # nn.ReLU()
            )
            self.fc2 = nn.Sequential(
                nn.Linear(500, 10),
                # nn.ReLU()
            )

        def forward(self, x):
            batchsz = x.size(0)
            x = self.conv1(x)
            x = self.conv2(x)
            # flatten (32, 7, 7) into 1568 features to match fc1
            x = x.view(batchsz, 32 * 7 * 7)
            x = self.fc1(x)
            logits = self.fc2(x)  # [b, 10]
            # pred = F.softmax(logits, dim=1)
            # loss = self.criteon(logits, y)
            return logits
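The commented-out lines at the end of `forward` hint at how the logits become predictions and a loss. The sketch below shows one way this could look on a dummy batch. To stay self-contained it uses a stand-in `nn.Sequential` called `net` (a made-up name) with the layer sizes from the table, and `nn.CrossEntropyLoss` is an assumed choice of criterion, since the original does not say which loss is used.

```python
import torch
import torch.nn.functional as F
from torch import nn

# Stand-in with the same layer sizes as the table (hypothetical name `net`).
net = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=5, stride=1, padding=2),   # (16, 28, 28)
    nn.ReLU(),
    nn.MaxPool2d(2, stride=2),                              # (16, 14, 14)
    nn.Conv2d(16, 32, kernel_size=5, stride=1, padding=2),  # (32, 14, 14)
    nn.ReLU(),
    nn.MaxPool2d(2, stride=2),                              # (32, 7, 7)
    nn.Flatten(),                                           # 32 * 7 * 7 = 1568
    nn.Linear(32 * 7 * 7, 500),
    nn.Linear(500, 10),
)

x = torch.zeros(4, 1, 28, 28)   # dummy batch of 4 grayscale 28x28 images
y = torch.tensor([0, 1, 2, 3])  # dummy class labels

logits = net(x)                  # shape [4, 10]
pred = F.softmax(logits, dim=1)  # per-class probabilities, each row sums to 1
criterion = nn.CrossEntropyLoss()  # assumed loss; expects raw logits
loss = criterion(logits, y)

print(logits.shape, pred.shape, loss.item())
```

`nn.CrossEntropyLoss` applies log-softmax internally, which is one reason to return raw logits from the model and apply softmax only when probabilities are needed.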

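    from torchstat import stat

    from CNN import CNN  # assumes the class above is saved as CNN.py

    # Build the model and pass the size of a single input image (C, H, W)
    cnn = CNN()
    print(cnn)
    stat(cnn, (1, 28, 28))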
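The parameter counts that a tool like torchstat reports can be cross-checked by hand. The following is a rough sketch, assuming every layer keeps its bias term (the PyTorch default); the helper names `conv2d_params` and `linear_params` are made up for illustration.

```python
def conv2d_params(c_in, c_out, k):
    """Weights (c_in * c_out * k * k) plus one bias per output channel."""
    return c_in * c_out * k * k + c_out


def linear_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out


counts = {
    "conv1": conv2d_params(1, 16, 5),        # 416
    "conv2": conv2d_params(16, 32, 5),       # 12832
    "fc1": linear_params(32 * 7 * 7, 500),   # 784500
    "fc2": linear_params(500, 10),           # 5010
}
total = sum(counts.values())                 # 802758

for name, n in counts.items():
    print(f"{name}: {n}")
print("total:", total)
```

Almost all of the parameters sit in the first fully connected layer; ReLU and max pooling have none.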