This post mainly follows http://www.analyticsvidhya.com/blog/2016/04/neural-networks-python-theano/

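This post uses Theano to implement the XNOR function, as a brief exercise in defining tensor variables, automatic differentiation, and theano.function. The details follow: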

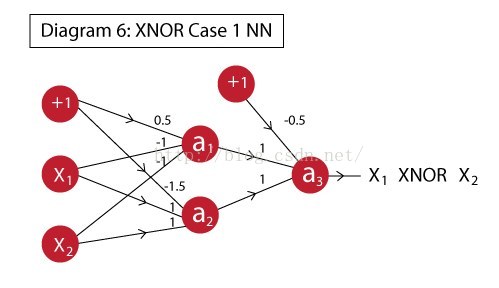

Network representation of the XNOR function:

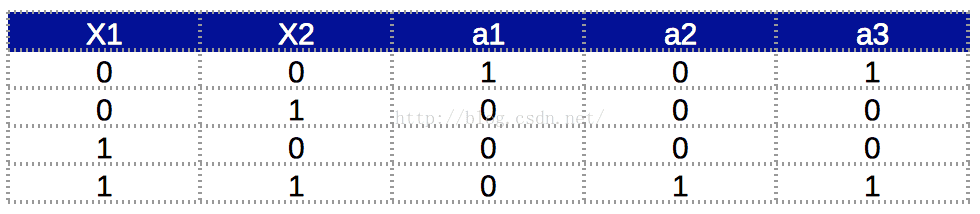
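To see why a two-layer sigmoid network can represent XNOR at all, the following sketch hard-codes the weights instead of learning them. The weight values, the per-unit biases, and the names `sigmoid`, `W1`, `b1`, `w2`, `b2` and `xnor_net` are illustrative assumptions, not values read from the figure. One hidden unit is set up to behave like AND, the other like NOR, and the output unit like OR.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Assumed, hand-picked weights: hidden unit 0 behaves like AND,
# hidden unit 1 behaves like NOR.
W1 = np.array([[20.0, -20.0],
               [20.0, -20.0]])   # shape: (inputs, hidden units)
b1 = np.array([-30.0, 10.0])     # one bias per hidden unit

# The output unit behaves like OR over the two hidden units.
w2 = np.array([20.0, 20.0])
b2 = -10.0

def xnor_net(x):
    hidden = sigmoid(x @ W1 + b1)
    return sigmoid(hidden @ w2 + b2)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
outputs = xnor_net(X)
for (x1, x2), out in zip(X.astype(int), outputs):
    print(f"x1={x1} x2={x2} -> {out:.2f}")
```

Note that this sketch uses a separate bias for each unit, whereas the Theano code in this post shares a single scalar bias across each layer, so the trained weights will not match these numbers.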

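Truth table:

| x1 | x2 | XNOR |
|----|----|------|
| 0  | 0  | 1    |
| 0  | 1  | 0    |
| 1  | 0  | 0    |
| 1  | 1  | 1    |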

Implementation:

import theano

import theano.tensor as T

import numpy as np

import matplotlib.pyplot as plt

if __name__ == '__main__':

#Define variables:

x = T.matrix('x')

w1 = theano.shared(np.random.rand(2,2)) # weight for layer1

w2 = theano.shared(np.random.rand(2))

b = theano.shared(np.ones(2)) # bias

learning_rate = 1.;

#Step2:Define mathematical expression

a1 = 1/(1+T.exp(-T.dot(x,w1)-b[0]))

a = 1/(1+T.exp(-T.dot(a1,w2)-b[1])) # predict output

#Step3:Define gradient and update rule

y = T.vector('y') #Actual output

cost = -(y*T.log(a) + (1-y)*T.log(1-a)).sum()

dw1,dw2,db = T.grad(cost,[w1,w2,b])

train = theano.function(

inputs = [x,y],

outputs = [a,cost],

updates = [

[w1, w1-learning_rate*dw1],

[w2, w2-learning_rate*dw2],

[b, b-learning_rate*db]

]

)

inputs = [

[0, 0],

[0, 1],

[1, 0],

[1, 1]

]

outputs = [1,0,0,1]

#Iterate through all inputs and find outputs:

cost = []

for iteration in range(1000):

pred, cost_iter = train(inputs, outputs)

cost.append(cost_iter)

#Print the outputs:

print 'The outputs of the NN are:'

for i in range(len(inputs)):

print 'The output for x1=%d | x2=%d is %.2f' % (inputs[i][0],inputs[i][1],pred[i])

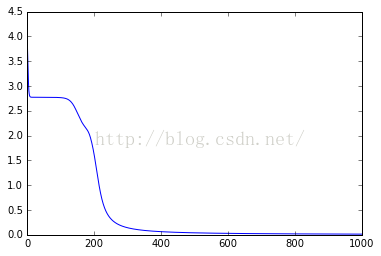

#Plot the flow of cost:

print '\nThe flow of cost during model run is as following:'

plt.plot(cost)实验结果:

    The outputs of the NN are:
    The output for x1=0 | x2=0 is 1.00
    The output for x1=0 | x2=1 is 0.00
    The output for x1=1 | x2=0 is 0.00
    The output for x1=1 | x2=1 is 1.00

    The flow of cost during model run is as following:
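In Step 3, T.grad derives the gradients of the cost with respect to w1, w2 and b symbolically. As a rough cross-check of what that step computes, the sketch below writes the same forward pass and cross-entropy cost in plain NumPy, derives the gradients by hand with the chain rule, and compares them against central finite differences. The function names (`forward`, `cost_fn`, `gradients`, `numerical_grad`), the fixed random seed and the step size `eps` are assumptions made for this illustration. It does not use Theano and does not reproduce Theano's internals.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(w1, w2, b, x):
    # Same structure as the Theano expressions: one scalar bias per layer.
    a1 = sigmoid(x @ w1 + b[0])
    a = sigmoid(a1 @ w2 + b[1])
    return a1, a

def cost_fn(w1, w2, b, x, y):
    _, a = forward(w1, w2, b, x)
    return -np.sum(y * np.log(a) + (1 - y) * np.log(1 - a))

def gradients(w1, w2, b, x, y):
    # Hand-derived backpropagation for the cost above.
    a1, a = forward(w1, w2, b, x)
    dz2 = a - y                               # d cost / d (output pre-activation)
    dw2 = a1.T @ dz2
    dz1 = np.outer(dz2, w2) * a1 * (1 - a1)   # back through the hidden sigmoid
    dw1 = x.T @ dz1
    db = np.array([dz1.sum(), dz2.sum()])     # shared scalar bias per layer
    return dw1, dw2, db

def numerical_grad(f, param, eps=1e-6):
    # Central finite differences, perturbing one entry of `param` at a time.
    grad = np.zeros_like(param)
    for idx in np.ndindex(param.shape):
        old = param[idx]
        param[idx] = old + eps
        plus = f()
        param[idx] = old - eps
        minus = f()
        param[idx] = old
        grad[idx] = (plus - minus) / (2 * eps)
    return grad

rng = np.random.default_rng(0)  # assumed seed, only for repeatability
x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([1, 0, 0, 1], dtype=float)
w1, w2, b = rng.random((2, 2)), rng.random(2), np.ones(2)

analytic = gradients(w1, w2, b, x, y)
f = lambda: cost_fn(w1, w2, b, x, y)
max_error = max(
    np.max(np.abs(g - numerical_grad(f, p)))
    for g, p in zip(analytic, (w1, w2, b))
)
print("largest gap between analytic and numerical gradients:", max_error)
```

If the hand-derived gradients are right, the reported gap should be tiny compared with the gradient values themselves. The update rule in the Theano code then simply subtracts learning_rate times each of these gradients from the corresponding shared variable.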