Logistic Regression:

This chapter covers simple logistic regression, which can be framed as a binary classification problem.

Logic: if it is not false, it is true. There are only two possibilities; think of the electrical signal that controls a relay (0 or 1).

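Here are a few examples. The exam you spent several weeks studying for: pass or fail?

Wow, that girl is really good-looking. Do you agree or not?

An NBA game: did the Lakers beat the Rockets, or lose to them?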

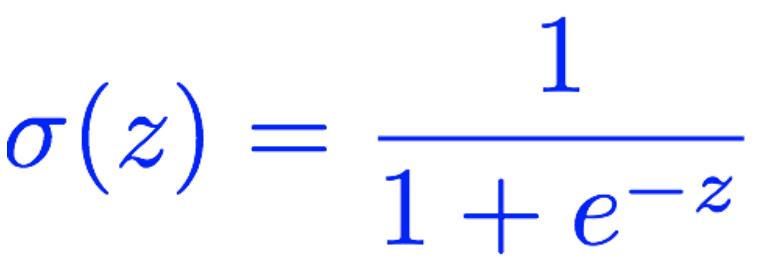

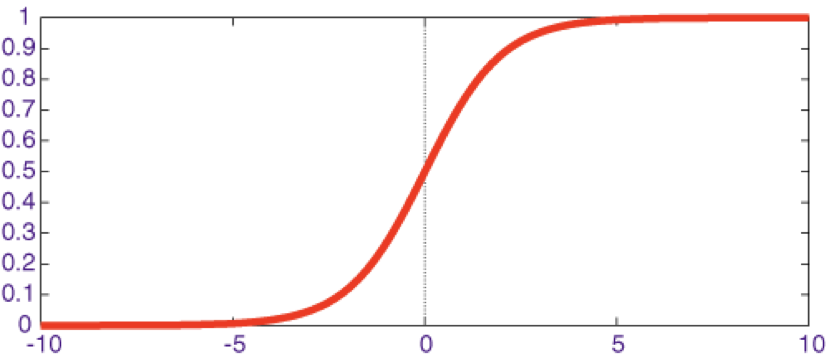

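Here we introduce the sigmoid function, σ(z) = 1 / (1 + e^(-z)), which squashes any real number into the interval (0, 1), so its output can be read as the probability of belonging to class 1.

We can then set a threshold of 0.5: outputs above 0.5 are assigned to class 1, and all the rest (values between 0 and 0.5) are assigned to class 0.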
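To make the threshold concrete, here is a minimal plain-Python sketch. The helper names `sigmoid` and `classify` are illustrative only, not part of any library. It also shows that σ(z) > 0.5 exactly when z > 0, so with a 0.5 threshold the decision boundary lies where the linear part wx + b equals 0. The two-branch form is an assumption of this sketch, chosen so that `math.exp` never overflows for large negative inputs.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-z)), written in two branches so that
    math.exp never receives a large positive argument (avoids OverflowError)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0, so e lies in (0, 1)
    return e / (1.0 + e)


def classify(z: float, threshold: float = 0.5) -> int:
    """Class 1 if sigmoid(z) is above the threshold, otherwise class 0."""
    return 1 if sigmoid(z) > threshold else 0


# Show how a few raw scores z map to probabilities and classes
for z in [-4.0, -1.0, 0.0, 1.0, 4.0]:
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.4f}  class={classify(z)}")
```

At z = 0 the output is exactly 0.5, which is not above the threshold, so it falls into class 0, consistent with the rule stated above.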

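The code here is very similar to the code in the previous blog post; if you are not familiar with it, please go back and review it first. Below I only point out the differences.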

```python
import torch

# Training data: hours studied (x) and exam result (y: 0 = fail, 1 = pass)
x_data = torch.tensor([[1.0], [2.0], [3.0], [4.0]])
y_data = torch.tensor([[0.0], [0.0], [1.0], [1.0]])


class Model(torch.nn.Module):
    def __init__(self):
        """
        In the constructor we instantiate an nn.Linear module.
        """
        super(Model, self).__init__()
        self.linear = torch.nn.Linear(1, 1)  # One in and one out

    def forward(self, x):
        """
        In the forward function we accept a tensor of input data and we must return
        a tensor of output data.
        """
        # This is the difference: the linear output is passed through the sigmoid
        # function (F.sigmoid in older PyTorch; torch.sigmoid is the current form),
        # which squashes it into (0, 1)
        y_pred = torch.sigmoid(self.linear(x))
        return y_pred


# our model
model = Model()

# Construct our loss function and an Optimizer. The call to model.parameters()
# in the SGD constructor will contain the learnable parameters (weight and bias)
# of the nn.Linear module, which is a member of the model.
criterion = torch.nn.BCELoss(reduction="mean")  # binary cross-entropy, averaged over samples
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# Training loop
for epoch in range(1000):
    # Forward pass: Compute predicted y by passing x to the model
    y_pred = model(x_data)

    # Compute and print loss (.item() extracts the Python number from a 0-dim tensor)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    # Zero gradients, perform a backward pass, and update the weights.
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# After training: a predicted probability above 0.5 means "pass"
with torch.no_grad():
    hour_var = torch.tensor([[1.0]])
    print("predict 1 hour ", 1.0, model(hour_var).item() > 0.5)
    hour_var = torch.tensor([[7.0]])
    print("predict 7 hours", 7.0, model(hour_var).item() > 0.5)
```
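The training loop relies on `torch.nn.BCELoss` (binary cross-entropy). As a sketch of what it computes, assuming some made-up predicted probabilities, the loss can be written out by hand as -mean(y·log(p) + (1 - y)·log(1 - p)) and compared with the built-in version:

```python
import torch

# Hypothetical predicted probabilities (not from the trained model above)
# paired with the 0/1 labels used in the training data
y_pred = torch.tensor([[0.2], [0.4], [0.6], [0.9]])
y_true = torch.tensor([[0.0], [0.0], [1.0], [1.0]])

# Binary cross-entropy written out: -mean(y*log(p) + (1-y)*log(1-p))
manual = -(y_true * torch.log(y_pred) + (1 - y_true) * torch.log(1 - y_pred)).mean()

# The same quantity from PyTorch's built-in loss, averaged over all elements
builtin = torch.nn.BCELoss(reduction="mean")(y_pred, y_true)

print(manual.item(), builtin.item())
```

Each sample contributes -log of the probability the model gave to its true class, so confident wrong predictions are penalized heavily. PyTorch additionally clamps the log terms at -100 so the loss stays finite when p is exactly 0 or 1; for better numerical stability it also offers `torch.nn.BCEWithLogitsLoss`, which takes the raw output of the linear layer and applies the sigmoid internally.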