参考吴恩达的 course,《统计学方法》(李航),《Deep Learning》

数据集下载地址

载入数据,数据有三列,前两列是

x

1

x_{1}

x 1

x

2

x_{2}

x 2

y

y

y

x

1

x_{1}

x 1

x

2

x_{2}

x 2

data = load( 'ex2data1.txt' ) ;

% ( 100 , 3 )

X = data( : , [ 1 , 2 ] ) ; y = data( : , 3 ) ;

% y是0 , 1

可视化数据

plotData( X, y) ;

% Put some labels

hold on;

xlabel( 'x1' )

ylabel( 'x2' )

legend( 'positive' , 'negative' )

hold off;

plotData()具体实现为:

function plotData( X, y)

figure;

hold on;

pos = find( y == 1 ) ;

neg = find( y == 0 ) ;

% 画正样本

plot( X( pos , 1 ) , X( pos , 2 ) , 'k+' , 'LineWidth' , 2 , 'MarkerSize' , 10 ) ;

% LineWidth:线宽

% MarkerSize:标记点的大小

% k黑

% MarkerFaceColor:标记点内部的填充颜色

% y黄

% 画负样本

plot( X( neg , 1 ) , X( neg , 2 ) , 'ko' , 'MarkerFaceColor' , 'y' , 'MarkerSize' , 10 ) ;

hold off;

end

横纵坐标分别为

x

1

x_{1}

x 1

x

2

x_{2}

x 2

[ m, n] = size( X) ;

% (100 ,2 )

X = [ ones( m, 1 ) X] ;

% (100 ,3 ),第一列全是1

initial_theta = zeros( n + 1 , 1 ) ;

% (3 ,1 )

sigmoid函数为:

g

(

z

)

=

1

1

+

e

−

z

g\left ( z \right ) = \frac{1}{1+e^{-z}}

g ( z ) = 1 + e − z 1

推导可知:

g

′

(

z

)

=

d

d

z

1

1

+

e

−

z

=

1

(

1

+

e

−

z

)

2

(

e

−

z

)

=

1

1

+

e

−

z

⋅

(

1

−

1

1

+

e

−

z

)

=

g

(

z

)

(

1

−

g

(

z

)

)

\begin{aligned} g{}'\left ( z \right ) & =\frac{d}{dz}\frac{1}{1+e^{-z}} = \frac{1}{\left ( {1+e^{-z}} \right )^{2}}\left ( e^{-z} \right ) \\ & =\frac{1}{1+e^{-z}}\cdot \left ( 1- \frac{1}{1+e^{-z}}\right ) \\ & =g\left ( z \right )\left ( 1-g\left ( z \right ) \right ) \end{aligned}

g ′ ( z ) = d z d 1 + e − z 1 = ( 1 + e − z ) 2 1 ( e − z ) = 1 + e − z 1 ⋅ ( 1 − 1 + e − z 1 ) = g ( z ) ( 1 − g ( z ) )

这一条性质非常好

预测函数为sigmoid函数的形式:

h

θ

(

x

)

=

g

(

θ

T

x

)

=

1

1

+

e

−

θ

T

x

h_{\theta }\left ( x \right )=g\left ( \theta ^{T} x\right )=\frac{1}{1+e^{-\theta ^{T}x}}

h θ ( x ) = g ( θ T x ) = 1 + e − θ T x 1

具体实现为:

function g = sigmoid( z)

g = zeros( size( z) ) ;

g = 1 . / ( 1 + exp( - z) ) ;

end

logistic regression 交叉熵损失函数如下:

J

(

θ

)

=

1

m

∑

i

=

1

m

[

−

y

(

i

)

l

o

g

h

(

x

(

i

)

)

−

(

1

−

y

(

i

)

)

l

o

g

(

1

−

h

(

x

(

i

)

)

)

]

J\left ( \theta \right )=\frac{1}{m}\sum_{i=1}^{m}\left [ -y^{\left ( i \right )}logh\left ( x^{\left ( i \right )} \right ) -\left ( 1-y^{\left ( i \right )} \right )log\left ( 1-h\left ( x^{\left ( i \right )} \right ) \right )\right ]

J ( θ ) = m 1 i = 1 ∑ m [ − y ( i ) l o g h ( x ( i ) ) − ( 1 − y ( i ) ) l o g ( 1 − h ( x ( i ) ) ) ]

这个地方log是ln,求梯度过程如下

∂

∂

θ

j

J

(

θ

)

=

−

1

m

∑

i

=

1

m

(

y

(

i

)

1

g

(

θ

T

x

(

i

)

)

−

(

1

−

y

(

i

)

)

1

1

−

g

(

θ

T

x

(

i

)

)

)

∂

∂

θ

j

g

(

θ

T

x

(

i

)

)

\frac{\partial }{\partial \theta _{j}}J\left ( \theta \right )=-\frac{1}{m}\sum_{i=1}^{m}\left ( y^{(i)}\frac{1}{g(\theta ^{T}x^{(i)})} -\left ( 1-y^{(i)} \right )\frac{1}{1-g\left ( \theta ^{T}x^{(i)} \right )}\right )\frac{\partial }{\partial \theta _{j}}{g(\theta ^{T}x^{(i)})}

∂ θ j ∂ J ( θ ) = − m 1 i = 1 ∑ m ( y ( i ) g ( θ T x ( i ) ) 1 − ( 1 − y ( i ) ) 1 − g ( θ T x ( i ) ) 1 ) ∂ θ j ∂ g ( θ T x ( i ) )

括号右边的数乘进去

=

−

1

m

∑

i

=

1

m

(

y

(

i

)

1

g

(

θ

T

x

(

i

)

)

−

(

1

−

y

(

i

)

)

1

1

−

g

(

θ

T

x

(

i

)

)

)

g

(

θ

T

x

(

i

)

)

(

1

−

g

(

θ

T

x

(

i

)

)

)

∂

∂

θ

j

θ

T

x

(

i

)

=-\frac{1}{m}\sum_{i=1}^{m}\left ( y^{(i)}\frac{1}{g(\theta ^{T}x^{(i)})} -\left ( 1-y^{(i)} \right )\frac{1}{1-g\left ( \theta ^{T}x^{(i)} \right )}\right ){g(\theta ^{T}x^{(i)})}\left ( 1-{g(\theta ^{T}x^{(i)})} \right )\frac{\partial }{\partial \theta _{j}}\theta ^{T}x^{(i)}

= − m 1 i = 1 ∑ m ( y ( i ) g ( θ T x ( i ) ) 1 − ( 1 − y ( i ) ) 1 − g ( θ T x ( i ) ) 1 ) g ( θ T x ( i ) ) ( 1 − g ( θ T x ( i ) ) ) ∂ θ j ∂ θ T x ( i )

展开括号

=

−

1

m

∑

i

=

1

m

(

y

(

i

)

(

1

−

g

(

θ

T

x

(

i

)

)

)

−

(

1

−

y

(

i

)

)

g

(

θ

T

x

(

i

)

)

)

x

j

(

i

)

=

1

m

∑

i

=

1

m

(

h

θ

(

x

(

i

)

)

−

y

(

i

)

)

x

j

(

i

)

\begin{aligned} & =-\frac{1}{m}\sum_{i=1}^{m}\left ( y^{(i)}\left ( 1-{g(\theta ^{T}x^{(i)})} \right ) -\left ( 1-y^{(i)} \right )g\left ( \theta ^{T}x^{(i)} \right )\right )x^{(i)}_{j}\\ & =\frac{1}{m}\sum_{i=1}^{m}\left ( h_{\theta }\left ( x^{(i)} \right )-y^{(i)} \right ) x^{(i)}_{j} \end{aligned}

= − m 1 i = 1 ∑ m ( y ( i ) ( 1 − g ( θ T x ( i ) ) ) − ( 1 − y ( i ) ) g ( θ T x ( i ) ) ) x j ( i ) = m 1 i = 1 ∑ m ( h θ ( x ( i ) ) − y ( i ) ) x j ( i )

[ cost, grad] = costFunction( initial_theta, X, y) ;

调用costFunction()函数,具体实现如下:

function [ J, grad] = costFunction( theta, X, y)

m = length( y) ;

J = 0 ;

grad = zeros( size( theta) ) ;

H = sigmoid( X* theta) ;

T = - y. * log( H) - ( 1 - y) . * log( 1 - H) ;

% Matlab中log( ) 的默认值为ln()

% log( exp( 1 ) ) = 1

J = 1 / m* sum ( T) ;

% 交叉熵损失

for i = 1 : m,

grad = grad + ( H( i) - y( i) ) * X( i, : ) ';

end

grad = 1 / m* grad;

end

结果显示

fprintf( 'Cost at initial theta (zeros): %f\n' , cost) ;

fprintf( 'Gradient at initial theta (zeros): \n' ) ;

fprintf( ' %f \n' , grad) ;

Cost at initial theta (zeros): 0.693147

优化过程直接调用matlab的fminunc()函数,这样不用设置学习率了,也不用写循环迭代了,替换一下(costFunction(t, X, y)),设为自己的就行。

options = optimset( 'GradObj' , 'on' , 'MaxIter' , 400 ) ;

% Run fminunc to obtain the optimal theta

% This function will return theta and the cost

[ theta, cost] = . . .

fminunc( @( t) ( costFunction( t, X, y) ) , initial_theta, options) ;

查看结果

fprintf( 'Cost at theta found by fminunc: %f\n' , cost) ;

fprintf( 'theta: \n' ) ;

fprintf( ' %f \n' , theta) ;

Cost at theta found by fminunc: 0.203506

调用plotDecisionBoundary()函数

% Plot Boundary

plotDecisionBoundary( theta, X, y) ;

% Put some labels

hold on;

% Labels and Legend

xlabel( 'x1' )

ylabel( 'x2' )

% Specified in plot order

legend( 'positive' , 'nagetive' )

hold off;

plotDecisionBoundary()函数具体实现为:

function plotDecisionBoundary( theta, X, y)

% the decision boundary defined by theta

% PLOTDECISIONBOUNDARY( theta, X, y) plots the data points with + for the

% positive examples and o for the negative examples. X is assumed to be

% a either

% 1 ) Mx3 matrix, where the first column is an all - ones column for the

% intercept.

% 2 ) MxN, N> 3 matrix, where the first column is all - ones

% Plot Data

plotData( X( : , 2 : 3 ) , y) ;

hold on

if size( X, 2 ) <= 3

% Only need 2 points to define a line, so choose two endpoints

plot_x = [ min ( X( : , 2 ) ) - 2 , max ( X( : , 2 ) ) + 2 ] ;

% Calculate the decision boundary line

plot_y = ( - 1 . / theta( 3 ) ) . * ( theta( 2 ) . * plot_x + theta( 1 ) ) ;

% Plot, and adjust axes for better viewing

plot( plot_x, plot_y)

% Legend, specific for the exercise

legend( 'Admitted' , 'Not admitted' , 'Decision Boundary' )

axis( [ 30 , 100 , 30 , 100 ] )

else

% Here is the grid range

u = linspace( - 1 , 1.5 , 50 ) ;

v = linspace( - 1 , 1.5 , 50 ) ;

z = zeros( length( u) , length( v) ) ;

% Evaluate z = theta* x over the grid

for i = 1 : length( u)

for j = 1 : length( v)

z( i, j) = mapFeature( u( i) , v( j) ) * theta;

end

end

z = z'; % important to transpose z before calling contour

% Plot z = 0

% Notice you need to specify the range [ 0 , 0 ]

contour( u, v, z, [ 0 , 0 ] , 'LineWidth' , 2 )

end

hold off

end

结果如下:

x

1

x_{1}

x 1

x

2

x_{2}

x 2

y = sigmoid( [ 1 45 85 ] * theta) ;

fprintf( [ 'For 45 and 85, we predict y ' . . .

'probability of %f\n\n' ] , y) ;

结果为:0.774321

调用了predict()函数

% Compute accuracy on our training set

p = predict( theta, X) ;

fprintf( 'Train Accuracy: %f\n' , mean( double( p == y) ) * 100 ) ;

fprintf( '\nProgram paused. Press enter to continue.\n' ) ;

具体实现为:

function p = predict(theta, X)

m = size(X, 1);

p = zeros(m, 1);

p = (sigmoid(X*theta) >= 0.5);

sigmoid函数计算出来,如果大于等于0.5,我们就认为应聘者通过面试,否者未通过。

最终在这个数据集上的准确率为:

Train Accuracy: 89.000000

2019-8-23 更新

logistic regression 模型是如下的条件概率分布:

P

(

y

=

1

∣

x

)

=

e

w

⋅

x

+

b

1

+

e

w

⋅

x

+

b

P(y = 1 | x) = \frac{e^{w\cdot x + b}}{1 + e^{w\cdot x + b}}

P ( y = 1 ∣ x ) = 1 + e w ⋅ x + b e w ⋅ x + b

P

(

y

=

0

∣

x

)

=

1

1

+

e

w

⋅

x

+

b

P(y = 0 | x) = \frac{1}{1 + e^{w\cdot x + b}}

P ( y = 0 ∣ x ) = 1 + e w ⋅ x + b 1

其中输入

x

∈

R

n

x \in \mathbb{R}^n

x ∈ R n

y

∈

{

0

,

1

}

y \in \{0,1\}

y ∈ { 0 , 1 }

w

∈

R

n

w \in \mathbb{R}^n

w ∈ R n

b

∈

R

b \in \mathbb{R}

b ∈ R

为了方便,会把

b

b

b

w

x

wx

w x

x

=

(

x

(

1

)

,

x

(

2

)

,

.

.

.

,

x

(

n

)

,

1

)

T

x = (x^{(1)},x^{(2)},...,x^{(n)},1)^T

x = ( x ( 1 ) , x ( 2 ) , . . . , x ( n ) , 1 ) T

w

=

(

w

(

1

)

,

w

(

2

)

,

.

.

.

,

w

(

n

)

,

b

)

T

w = (w^{(1)},w^{(2)},...,w^{(n)},b)^T

w = ( w ( 1 ) , w ( 2 ) , . . . , w ( n ) , b ) T

w

⋅

x

=

w

T

x

w \cdot x = w^Tx

w ⋅ x = w T x

w

⋅

x

+

b

w \cdot x + b

w ⋅ x + b

所以条件概率简化为:

P

(

y

=

1

∣

x

)

=

e

w

⋅

x

1

+

e

w

⋅

x

P(y = 1 | x) = \frac{e^{w\cdot x}}{1 + e^{w\cdot x}}

P ( y = 1 ∣ x ) = 1 + e w ⋅ x e w ⋅ x

P

(

y

=

0

∣

x

)

=

1

1

+

e

w

⋅

x

P(y = 0 | x) = \frac{1}{1 + e^{w\cdot x}}

P ( y = 0 ∣ x ) = 1 + e w ⋅ x 1

一件事的几率(odds)是指该事情发生与不发生概率的比值,由上面的式子我们可以得对数几率为:

l

o

g

P

(

y

=

1

∣

x

)

1

−

P

(

y

=

1

∣

x

)

=

l

o

g

P

(

y

=

1

∣

x

)

P

(

y

=

0

∣

x

)

=

w

⋅

x

log\frac{P(y = 1 | x)}{1 - P(y = 1 | x)} = log\frac{P(y = 1 | x)}{P(y = 0 | x)} = w \cdot x

l o g 1 − P ( y = 1 ∣ x ) P ( y = 1 ∣ x ) = l o g P ( y = 0 ∣ x ) P ( y = 1 ∣ x ) = w ⋅ x

也即输出

y

=

1

y = 1

y = 1

x

x

x

下面我们用极大似然估计来估计一下

w

w

w MLE, MAP and LSM )

似然函数:

∏

i

=

1

n

P

(

y

i

=

1

∣

x

i

)

y

i

(

P

(

y

i

=

0

∣

x

i

)

)

(

1

−

y

i

)

=

∏

i

=

1

n

P

(

y

i

=

1

∣

x

i

)

y

i

(

1

−

P

(

y

i

=

1

∣

x

i

)

)

(

1

−

y

i

)

\prod_{i=1}^{n}P(y_i = 1 | x_i)^{y_i}(P(y_i = 0 | x_i))^{(1-y_i)} = \prod_{i=1}^{n}P(y_i = 1 | x_i)^{y_i}(1 - P(y_i = 1 | x_i))^{(1-y_i)}

i = 1 ∏ n P ( y i = 1 ∣ x i ) y i ( P ( y i = 0 ∣ x i ) ) ( 1 − y i ) = i = 1 ∏ n P ( y i = 1 ∣ x i ) y i ( 1 − P ( y i = 1 ∣ x i ) ) ( 1 − y i )

把所有样本发生的概率乘起来,分

y

i

=

0

y_i =0

y i = 0

1

1

1

对数似然函数:

w

M

L

=

∑

i

=

1

n

[

y

i

l

o

g

P

(

y

i

=

1

∣

x

i

)

+

(

1

−

y

i

)

l

o

g

(

1

−

P

(

y

i

=

1

∣

x

i

)

)

]

=

∑

i

=

1

n

[

y

i

l

o

g

e

w

⋅

x

i

1

+

e

w

⋅

x

i

+

(

1

−

y

i

)

l

o

g

1

1

+

e

w

⋅

x

i

]

(

合

并

y

i

)

=

∑

i

=

1

n

[

y

i

l

o

g

e

w

⋅

x

i

1

+

e

w

⋅

x

i

(

1

+

e

w

⋅

x

i

)

+

l

o

g

1

1

+

e

w

⋅

x

i

]

=

∑

i

=

1

n

[

y

i

(

w

⋅

x

i

)

−

l

o

g

(

1

+

e

w

⋅

x

i

)

]

\begin{aligned} w_{ML} = & \sum_{i=1}^{n}[y_i \ log P(y_i = 1 | x_i)+(1-y_i)\ log (1-P(y_i = 1 | x_i))] \\ = & \sum_{i=1}^{n}[y_i \ log \frac{e^{w\cdot x_i}}{1 + e^{w\cdot x_i}}+(1-y_i) \ log \frac{1}{1 + e^{w\cdot x_i}}] \ \ \ (合并 y_i)\\ = & \sum_{i=1}^{n}[y_i \ log \frac{e^{w\cdot x_i}}{1 + e^{w\cdot x_i}}(1 + e^{w\cdot x_i})+ \ log \frac{1}{1 + e^{w\cdot x_i}}] \\ = & \sum_{i=1}^{n}[y_i (w \cdot x_i) - \ log (1 + e^{w\cdot x_i})] \end{aligned}

w M L = = = = i = 1 ∑ n [ y i l o g P ( y i = 1 ∣ x i ) + ( 1 − y i ) l o g ( 1 − P ( y i = 1 ∣ x i ) ) ] i = 1 ∑ n [ y i l o g 1 + e w ⋅ x i e w ⋅ x i + ( 1 − y i ) l o g 1 + e w ⋅ x i 1 ] ( 合 并 y i ) i = 1 ∑ n [ y i l o g 1 + e w ⋅ x i e w ⋅ x i ( 1 + e w ⋅ x i ) + l o g 1 + e w ⋅ x i 1 ] i = 1 ∑ n [ y i ( w ⋅ x i ) − l o g ( 1 + e w ⋅ x i ) ]

看过上面 1、2 小节实验的同学会发现,损失函数和这个对数似然函数的形式是一样的?这个是为什么呢?有什么联系吗?

最大(极大)似然估计在干一件什么事情呢?

数学上来说,极大似然估计其实是理想地认为,对于极少的样本观测,我们很可能观测到的就是发生概率最大的那次实现。 (如何通俗地理解概率论中的「极大似然估计法」? - 维彼的回答 - 知乎 https://www.zhihu.com/question/24124998/answer/108188755)

比如投 10 次硬币,6 次正,4次反,那么我们可以推算经验分布中

p

(

x

=

正

)

=

0.6

p(x=正) = 0.6

p ( x = 正 ) = 0 . 6

下面来分析下公式

最大似然

θ

M

L

=

a

r

g

max

θ

∏

i

=

1

m

p

m

o

d

e

l

(

x

(

i

)

;

θ

)

\theta_{ML}=\underset{\theta }{arg \max}\prod_{i=1}^{m}p_{model}(x^{(i)};\theta )

θ M L = θ a r g max i = 1 ∏ m p m o d e l ( x ( i ) ; θ )

对数似然

θ

M

L

=

a

r

g

max

θ

∑

i

=

1

m

l

o

g

p

m

o

d

e

l

(

x

(

i

)

;

θ

)

\theta_{ML}=\underset{\theta }{arg \max}\sum_{i=1}^{m}log\ p_{model}(x^{(i)};\theta )

θ M L = θ a r g max i = 1 ∑ m l o g p m o d e l ( x ( i ) ; θ )

除以样本的数量

m

m

m

p

d

a

t

a

p_{data}

p d a t a

θ

M

L

=

a

r

g

max

θ

E

x

∼

p

d

a

t

a

l

o

g

p

m

o

d

e

l

(

x

(

i

)

;

θ

)

\theta_{ML}=\underset{\theta }{arg \max} \ \mathbb{E}_{x\sim p_{data}} log\ p_{model}(x^{(i)};\theta )

θ M L = θ a r g max E x ∼ p d a t a l o g p m o d e l ( x ( i ) ; θ )

注意期望的公式:

E

x

∼

p

(

q

)

=

∑

p

(

x

i

)

q

E_{x\sim p}(q)= \sum p(x_i)q

E x ∼ p ( q ) = ∑ p ( x i ) q

一种解释的观点是,可以看做 最小化经验分布

p

d

a

t

a

p_{data}

p d a t a

p

m

o

d

e

l

p_{model}

p m o d e l

再回过头来体味下面一句话:

数学上来说,极大似然估计其实是理想地认为,对于极少的样本观测,我们很可能观测到的就是发生概率最大的那次实现。 (如何通俗地理解概率论中的「极大似然估计法」? - 维彼的回答 - 知乎 https://www.zhihu.com/question/24124998/answer/108188755)

比如投 10 次硬币,6 次正,4次反,那么我们可以推算经验分布中

p

(

x

=

正

)

=

0.6

p(x=正) = 0.6

p ( x = 正 ) = 0 . 6

经验分布是二项分布,我们的模型分布要尽量逼近于真实的二项分布!

import matplotlib. pyplot as plt

import numpy as np

x = np. linspace( 0 , 1 , 100 )

y = x** 6 * ( 1 - x) ** 4

plt. plot( [ 0.6 , 0.6 ] , [ 0 , 0.0012 ] , 'k--' , lw = 2.5 )

plt. plot( x, y)

plt. show( )

最大似然估计方法中,逼近的过程就是寻找最优待估参数的过程(上图中的 0.6)!也就是缩小经验分布

p

d

a

t

a

p_{data}

p d a t a

p

m

o

d

e

l

p_{model}

p m o d e l

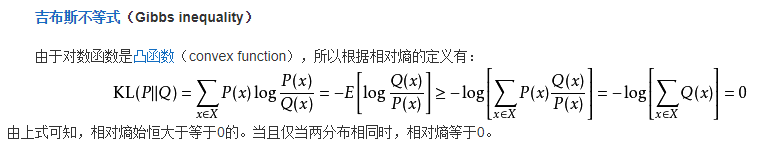

这样的话就可以用 KL 散度来度量两个分布(经验分布和模型分布)的差异:

D

K

L

(

p

d

a

t

a

∣

∣

p

m

o

d

e

l

)

=

E

x

∼

p

d

a

t

a

[

l

o

g

p

d

a

t

a

(

x

)

−

l

o

g

p

m

o

d

e

l

(

x

)

]

D_{KL}(p_{data}||p_{model}) = \mathbb{E}_{x\sim p_{data}}[log \ p_{data}(x) - log \ p_{model}(x)]

D K L ( p d a t a ∣ ∣ p m o d e l ) = E x ∼ p d a t a [ l o g p d a t a ( x ) − l o g p m o d e l ( x ) ]

第一项和模型无关,所以等价于最小化

−

E

x

∼

p

d

a

t

a

l

o

g

p

m

o

d

e

l

(

x

)

(

交

叉

熵

)

- \mathbb{E}_{x\sim p_{data}} log \ p_{model}(x)(交叉熵)

− E x ∼ p d a t a l o g p m o d e l ( x ) ( 交 叉 熵 )

所以,最大化似然函数等价于最小化 KL 散度,也等价于最小化交叉熵!

交叉熵(经验分布和模型分布) = 信息熵(经验分布) + KL散度(经验分布和模型分布)

这也是为什么我们用极大似然估计求出来的对数似然函数就是 logistic regression 的损失函数的形式(交叉熵的形式,仅是正负号的差别,最大化似然,最小化交叉熵嘛)

我们接着求解

w

M

L

=

∑

i

=

1

n

[

y

i

l

o

g

P

(

y

i

=

1

∣

x

i

)

+

(

1

−

y

i

)

l

o

g

(

1

−

P

(

y

i

=

1

∣

x

i

)

)

]

=

∑

i

=

1

n

[

y

i

l

o

g

e

w

⋅

x

i

1

+

e

w

⋅

x

i

+

(

1

−

y

i

)

l

o

g

1

1

+

e

w

⋅

x

i

]

(

合

并

y

i

)

=

∑

i

=

1

n

[

y

i

l

o

g

e

w

⋅

x

i

1

+

e

w

⋅

x

i

(

1

+

e

w

⋅

x

i

)

+

l

o

g

1

1

+

e

w

⋅

x

i

]

=

∑

i

=

1

n

[

y

i

(

w

⋅

x

i

)

−

l

o

g

(

1

+

e

w

⋅

x

i

)

]

\begin{aligned} w_{ML}= & \sum_{i=1}^{n}[y_i \ log P(y_i = 1 | x_i)+(1-y_i)\ log (1-P(y_i = 1 | x_i))] \\ = & \sum_{i=1}^{n}[y_i \ log \frac{e^{w\cdot x_i}}{1 + e^{w\cdot x_i}}+(1-y_i) \ log \frac{1}{1 + e^{w\cdot x_i}}] \ \ \ (合并 y_i)\\ = & \sum_{i=1}^{n}[y_i \ log \frac{e^{w\cdot x_i}}{1 + e^{w\cdot x_i}}(1 + e^{w\cdot x_i})+ \ log \frac{1}{1 + e^{w\cdot x_i}}] \\ = & \sum_{i=1}^{n}[y_i (w \cdot x_i) - \ log (1 + e^{w\cdot x_i})] \end{aligned}

w M L = = = = i = 1 ∑ n [ y i l o g P ( y i = 1 ∣ x i ) + ( 1 − y i ) l o g ( 1 − P ( y i = 1 ∣ x i ) ) ] i = 1 ∑ n [ y i l o g 1 + e w ⋅ x i e w ⋅ x i + ( 1 − y i ) l o g 1 + e w ⋅ x i 1 ] ( 合 并 y i ) i = 1 ∑ n [ y i l o g 1 + e w ⋅ x i e w ⋅ x i ( 1 + e w ⋅ x i ) + l o g 1 + e w ⋅ x i 1 ] i = 1 ∑ n [ y i ( w ⋅ x i ) − l o g ( 1 + e w ⋅ x i ) ]

对数似然函数对 w 求偏导

∂

w

M

L

∂

w

=

∑

i

=

1

n

[

y

i

x

i

−

e

w

⋅

x

i

1

+

e

w

⋅

x

i

x

i

]

=

∑

i

=

1

n

[

y

i

x

i

−

1

1

+

e

−

w

⋅

x

i

x

i

]

\begin{aligned} \frac{\partial w_{ML}}{\partial w} &= \sum_{i=1}^{n}[y_i x_i - \frac{e^{w\cdot x_i}}{1 + e^{w\cdot x_i}} x_i]\\ &= \sum_{i=1}^{n}[y_i x_i - \frac{1}{1 + e^{-w\cdot x_i}} x_i] \end{aligned}

∂ w ∂ w M L = i = 1 ∑ n [ y i x i − 1 + e w ⋅ x i e w ⋅ x i x i ] = i = 1 ∑ n [ y i x i − 1 + e − w ⋅ x i 1 x i ]

和上面 1、2 小节的公式推导一样的

显然

w

w

w

w

w

w

推广一下:

任何一个由负对数似然组成的损失都是定义在训练集上的经验分布和定义在模型上的概率分布之间的交叉熵!eg:均方误差就是经验分布和高斯模型之间的交叉熵!